National Security Science Computing Issue

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Making of an Atomic Bomb

(Image: Courtesy of United States Government, public domain.) INTRODUCTORY ESSAY "DESTROYER OF WORLDS": THE MAKING OF AN ATOMIC BOMB At 5:29 a.m. (MST), the world’s first atomic bomb detonated in the New Mexican desert, releasing a level of destructive power unknown in the existence of humanity. Emitting as much energy as 21,000 tons of TNT and creating a fireball that measured roughly 2,000 feet in diameter, the first successful test of an atomic bomb, known as the Trinity Test, forever changed the history of the world. The road to Trinity may have begun before the start of World War II, but the war brought the creation of atomic weaponry to fruition. The harnessing of atomic energy may have come as a result of World War II, but it also helped bring the conflict to an end. How did humanity come to construct and wield such a devastating weapon? Models of Fat Man and Little Boy on display at the Bradbury Science Museum. (Image: Courtesy of Los Alamos National Laboratory.) WE WAITED UNTIL THE BLAST HAD PASSED, WALKED OUT OF THE SHELTER AND THEN IT WAS ENTIRELY SOLEMN. WE KNEW THE WORLD WOULD NOT BE THE SAME. A FEW PEOPLE LAUGHED, A FEW PEOPLE CRIED. MOST PEOPLE WERE SILENT. J. ROBERT OPPENHEIMER EARLY NUCLEAR RESEARCH Achieving the monumental goal of splitting the nucleus of an atom, known as nuclear fission, came through the development of scientific discoveries that stretched over several centuries. GERMAN DISCOVERY OF FISSION The 1930s saw further development in the field. Hungarian-German physicist Leo Szilard conceived the possibility of self-sustaining nuclear fission reactions, or a nuclear chain reaction, -

Frontiers of Quantum and Mesoscopic Thermodynamics 29 July - 3 August 2013, Prague, Czech Republic

Frontiers of Quantum and Mesoscopic Thermodynamics 29 July - 3 August 2013, Prague, Czech Republic Under the auspices of Ing. Miloš Zeman, President of the Czech Republic; Milan Štěch, President of the Senate of the Parliament of the Czech Republic; Prof. Ing. Jiří Drahoš, DrSc., dr. h. c., President of the Academy of Sciences of the Czech Republic; Dominik Cardinal Duka OP, Archbishop of Prague Supported by • Committee on Education, Science, Culture, Human Rights and Petitions of the Senate of the Parliament of the Czech Republic • Institute of Physics, the Academy of Sciences of the Czech Republic • Institute for Theoretical Physics, University of Amsterdam, The Netherlands • Department of Physics, Texas A&M University, USA • Institut de Physique Théorique, CEA/CNRS Saclay, France Topics • Foundations of quantum physics • Non-equilibrium statistical physics • Quantum thermodynamics • Quantum measurement, entanglement and coherence • Dissipation, dephasing, noise and decoherence • Quantum optics • Macroscopic quantum behavior, e.g., cold atoms, Bose-Einstein condensates • Physics of quantum computing and quantum information • Mesoscopic, nano-electromechanical and nano-optical systems • Biological systems, molecular motors • Cosmology, gravitation and astrophysics Scientific Committee Chair: Theo M. Nieuwenhuizen (University of Amsterdam) Co-Chair: Václav Špička (Institute of Physics, Acad. Sci. CR, Prague) Raymond Dean Astumian (University of Maine, Orono) Roger Balian (IPhT, Saclay) Gordon Baym (University of Illinois at Urbana -

The Manhattan Project and Its Legacy

Transforming the Relationship between Science and Society: The Manhattan Project and Its Legacy Report on the workshop funded by the National Science Foundation held on February 14 and 15, 2013 in Washington, DC Table of Contents: Executive Summary; Introduction; The Workshop; Two Motifs; Core Session Discussions; Scientific Responsibility; The Culture of Secrecy and the National Security State; The Decision to Drop the Bomb; Aftermath; Next Steps; Conclusion; Appendix: Participant List and Biographies. Copyright © 2013 by the Atomic Heritage Foundation. All rights reserved. No part of this book, either text or illustration, may be reproduced or transmitted in any form by any means, electronic or mechanical, including photocopying, reporting, or by any information storage or retrieval system without written permission from the publisher. Report prepared by Carla Borden. Design and layout by Alexandra Levy. Executive Summary The story of the Manhattan Project, the effort to develop and build the first atomic bomb, is epic, and it continues to unfold. The decision by the United States to use the bomb against Japan in August 1945 to end World War II is still being mythologized, argued, dissected, and researched. The moral responsibility of scientists, then and now, also has remained a live issue. Secrecy and security practices deemed necessary for the Manhattan Project have spread through the government, sometimes conflicting with notions of democracy. From the Manhattan Project, the scientific enterprise has grown enormously, to include research into the human genome, for example, and what became the Internet. Nuclear power plants provide needed electricity yet are controversial for many people. -

The Development of Military Nuclear Strategy And

The Development of Military Nuclear Strategy and Anglo-American Relations, 1939 – 1958 Submitted by: Geoffrey Charles Mallett Skinner to the University of Exeter as a thesis for the degree of Doctor of Philosophy in History, July 2018 This thesis is available for Library use on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. I certify that all material in this thesis which is not my own work has been identified and that no material has previously been submitted and approved for the award of a degree by this or any other University. (Signature) Abstract There was no special governmental partnership between Britain and America during the Second World War in atomic affairs. A recalibration is required that updates and amends the existing historiography in this respect. The wartime atomic relations of those countries were cooperative at the level of science and resources, but rarely that of the state. As soon as it became apparent that fission weaponry would be the main basis of future military power, America decided to gain exclusive control over the weapon. Britain could not replicate American resources and no assistance was offered to it by its conventional ally. America then created its own, closed, nuclear system and well before the 1946 Atomic Energy Act, the event which is typically seen by historians as the explanation of the fracturing of wartime atomic relations. Immediately after 1945 there was insufficient systemic force to create change in the consistent American policy of atomic monopoly. As fusion bombs introduced a new magnitude of risk, and as the nuclear world expanded and deepened, the systemic pressures grew. -

Chicago Pile-1 - Wikipedia, the Free Encyclopedia

Chicago Pile-1 From Wikipedia, the free encyclopedia Coordinates: 41°47′32″N 87°36′3″W (Infobox: Site of the First Self Sustaining Nuclear Reaction; U.S. National Register of Historic Places; U.S. National Historic Landmark; Chicago Landmark; drawing of the reactor.) Chicago Pile-1 (CP-1) was the world's first nuclear reactor to achieve criticality. Its construction was part of the Manhattan Project, the Allied effort to create atomic bombs during World War II. It was built by the Manhattan Project's Metallurgical Laboratory at the University of Chicago, under the west viewing stands of the original Stagg Field. The first man-made self-sustaining nuclear chain reaction was initiated in CP-1 on 2 December 1942, under the supervision of Enrico Fermi, who described the apparatus as "a crude pile of black bricks and wooden timbers".[4] The reactor was assembled in November 1942, by a team that included Fermi, Leo Szilard, discoverer of the chain reaction, and Herbert L. Anderson, Walter Zinn, Martin D. Whitaker, and George Weil. It contained 45,000 graphite blocks weighing 400 short tons (360 t) used as a neutron moderator, and was fueled by 6 short tons (5.4 t) of uranium metal and 50 short tons (45 t) of uranium oxide. -

Concept and Types of Tourism

Tourism: Concept and Types of Tourism 1.1 CONCEPT OF TOURISM Tourism is an ever-expanding service industry with vast growth potential and has therefore become one of the crucial concerns not only of nations but also of the international community as a whole. In fact, it has emerged as a decisive link in accelerating socio-economic development the world over. It is believed that the word tour in the context of tourism became established in the English language by the eighteenth century. On the other hand, according to the Oxford dictionary, the word tourism first appeared in English in the nineteenth century (1811), from a Greek word 'tornus' meaning a round-shaped tool. Tourism as a phenomenon means the movement of people (both within and across national borders). Tourism means different things to different people because it is an abstraction of a wide range of consumption activities which demand products and services from a wide range of industries in the economy. In 1905, E. Freuler defined tourism in the modern sense of the word "as a phenomenon of modern times based on the increased need for recuperation and change of air, the awakened and cultivated appreciation of scenic beauty, the pleasure in, and the enjoyment of, nature, and in particular brought about by the increasing mingling of various nations and classes of human society, as a result of the development of commerce, industry and trade, and the perfection of the means of transport". Professor Hunziker and Krapf of the. -

Dark-Bright Solitons in Bose-Einstein

DARK-BRIGHT SOLITONS IN BOSE-EINSTEIN CONDENSATES: DYNAMICS, SCATTERING, AND OSCILLATION by Majed O. D. Alotaibi © Copyright by Majed O. D. Alotaibi, 2018 All Rights Reserved A thesis submitted to the Faculty and the Board of Trustees of the Colorado School of Mines in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Physical Science). Golden, Colorado. Signed: Majed O. D. Alotaibi. Signed: Prof. Lincoln Carr, Thesis Advisor. Golden, Colorado. Signed: Prof. Uwe Greife, Professor and Head, Department of Physics. ABSTRACT In this thesis, we have studied the behavior of two-component dark-bright solitons in multicomponent Bose-Einstein condensates (BECs) analytically and numerically in different situations. We utilized various analytical methods including the variational method and perturbation theory. By imprinting a linear phase on the bright component only, we were able to impart a velocity relative to the dark component and thereby we obtain an internal oscillation between the two components. We find that there are two modes of the oscillation of the dark-bright soliton. The first one is the famous Goldstone mode. This mode represents a moving dark-bright soliton without internal oscillation and is related to the continuous translational symmetry of the underlying equations of motion in the uniform potential. The second mode is the oscillation of the two components relative to each other. We compared the results obtained from the variational method with numerical simulations and found that the oscillation frequency range is 90 to 405 Hz and therefore observable in multicomponent Bose-Einstein condensate experiments. Also, we studied the binding energy and found a critical value for the breakup of the dark-bright soliton. -

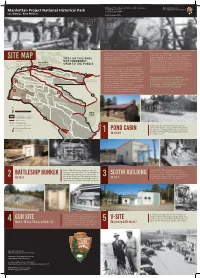

Manhattan Park Map

Manhattan Project National Historical Park - Los Alamos. National Park Service, U.S. Department of the Interior. Manhattan Project National Historical Park, 475 20th Street, Suite C, Los Alamos, NM 87544. 505-661-MAPR (6277). (Photo captions: Project Y workers with the Trinity device. Norris Bradbury with Thin Man plutonium gun device at Gun Site.) In 1943, the United States government’s Manhattan Project built a secret laboratory at Los Alamos, New Mexico, for a single military purpose: to develop the world’s first atomic weapons. The success of this unprecedented, top-secret government program forever changed the world. Three locations comprise the park: Project Y at Los Alamos, New Mexico; Site X at Oak Ridge, Tennessee; and Site W at Hanford, Washington. The Manhattan Project National Historical Park legislation references 17 sites at Los Alamos National Laboratory, as well as 13 sites in downtown Los Alamos. These sites represent the world-changing history of the Manhattan Project at Los Alamos. Their preservation and interpretation will show visitors the scientific, social, political, and cultural stories of the men and women who ushered in the atomic age. (Site map notes: SITES ON THIS PAGE NOT CURRENTLY OPEN TO THE PUBLIC. Original Technical Area 1 (TA-1); see reverse. The properties below are within the Manhattan Project National Historical Park boundaries on land managed by the Department of Energy.) In 2004, the U.S. Congress directed the National Park Service and the Department of Energy to determine the significance, suitability, and feasibility of including signature facilities in a national historical park. In 2014, the National Defense Authorization Act, signed by President Obama, authorized creation of the Park. This legislation stated the purpose of the park: “to improve the understanding of the Manhattan Project and the legacy of the Manhattan Project through -

Foundation Document Manhattan Project National Historical Park Tennessee, New Mexico, Washington January 2017 Foundation Document

NATIONAL PARK SERVICE • U.S. DEPARTMENT OF THE INTERIOR Foundation Document Manhattan Project National Historical Park Tennessee, New Mexico, Washington January 2017 (Map: park locations at Hanford, Washington; Los Alamos, New Mexico; and Oak Ridge, Tennessee.) More detailed maps of each park location are provided in Appendix E. Contents: Mission of the National Park Service; Mission of the Department of Energy; Introduction; Part 1: Core Components; Brief Description of the Park; Oak Ridge, Tennessee; Los Alamos, New Mexico; Hanford, Washington; Park Management; Visitor Access; Brief History of the Manhattan Project; Introduction; Neutrons, Fission, and Chain Reactions; The Atomic Bomb and the Manhattan Project; Bomb Design; The Trinity Test; Hiroshima and Nagasaki, Japan; From the Second World War to the Cold War; Legacy; Park Purpose; Park Significance; Fundamental Resources and Values; Related Resources; Interpretive Themes; Part 2: Dynamic Components; Special Mandates and Administrative Commitments; Special Mandates; Administrative Commitments; Assessment of Planning and Data Needs; Analysis of Fundamental Resources and Values; Identification of Key Issues and Associated Planning and Data Needs; Planning and Data Needs; Part 3: Contributors; Appendixes; Appendix A: Enabling Legislation for Manhattan Project National Historical Park; Appendix B: Inventory of Administrative Commitments; Appendix C: Fundamental Resources and Values Analysis Tables; Appendix D: Traditionally Associated Tribes; Appendix E: Department of Energy Sites within Manhattan Project National Historical Park -

The Los Alamos Thermonuclear Weapon Project, 1942-1952

Igniting The Light Elements: The Los Alamos Thermonuclear Weapon Project, 1942-1952 by Anne Fitzpatrick Dissertation submitted to the Faculty of Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in SCIENCE AND TECHNOLOGY STUDIES Approved: Joseph C. Pitt, Chair Richard M. Burian Burton I. Kaufman Albert E. Moyer Richard Hirsh June 23, 1998 Blacksburg, Virginia Keywords: Nuclear Weapons, Computing, Physics, Los Alamos National Laboratory Igniting the Light Elements: The Los Alamos Thermonuclear Weapon Project, 1942-1952 by Anne Fitzpatrick Committee Chairman: Joseph C. Pitt Science and Technology Studies (ABSTRACT) The American system of nuclear weapons research and development was conceived and developed not as a result of technological determinism, but by a number of individual architects who promoted the growth of this large technologically-based complex. While some of the technological artifacts of this system, such as the fission weapons used in World War II, have been the subject of many historical studies, their technical successors -- fusion (or hydrogen) devices -- are representative of the largely unstudied highly secret realms of nuclear weapons science and engineering. In the postwar period a small number of Los Alamos Scientific Laboratory’s staff and affiliates were responsible for theoretical work on fusion weapons, yet the program was subject to both the provisions and constraints of the U. S. Atomic Energy Commission, of which Los Alamos was a part. The Commission leadership’s struggle to establish a mission for its network of laboratories, least of all to keep them operating, affected Los Alamos’s leaders’ decisions as to the course of weapons design and development projects. -

The New Nuclear Forensics: Analysis of Nuclear Material for Security

THE NEW NUCLEAR FORENSICS Analysis of Nuclear Materials for Security Purposes edited by vitaly fedchenko The New Nuclear Forensics Analysis of Nuclear Materials for Security Purposes STOCKHOLM INTERNATIONAL PEACE RESEARCH INSTITUTE SIPRI is an independent international institute dedicated to research into conflict, armaments, arms control and disarmament. Established in 1966, SIPRI provides data, analysis and recommendations, based on open sources, to policymakers, researchers, media and the interested public. The Governing Board is not responsible for the views expressed in the publications of the Institute. GOVERNING BOARD Sven-Olof Petersson, Chairman (Sweden) Dr Dewi Fortuna Anwar (Indonesia) Dr Vladimir Baranovsky (Russia) Ambassador Lakhdar Brahimi (Algeria) Jayantha Dhanapala (Sri Lanka) Ambassador Wolfgang Ischinger (Germany) Professor Mary Kaldor (United Kingdom) The Director DIRECTOR Dr Ian Anthony (United Kingdom) Signalistgatan 9 SE-169 70 Solna, Sweden Telephone: +46 8 655 97 00 Fax: +46 8 655 97 33 Email: [email protected] Internet: www.sipri.org The New Nuclear Forensics Analysis of Nuclear Materials for Security Purposes EDITED BY VITALY FEDCHENKO OXFORD UNIVERSITY PRESS 2015 1 Great Clarendon Street, Oxford OX2 6DP, United Kingdom Oxford University Press is a department of the University of Oxford. It furthers the University’s objective of excellence in research, scholarship, and education by publishing worldwide. Oxford is a registered trade mark of Oxford University Press in the UK and in certain other countries © SIPRI 2015 The moral rights of the authors have been asserted All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without the prior permission in writing of SIPRI, or as expressly permitted by law, or under terms agreed with the appropriate reprographics rights organizations. -

Coupled Oscillator Models with No Scale Separation

Coupled Oscillator Models with no Scale Separation Philip Du Toit (a,∗), Igor Mezić (b), Jerrold Marsden (a). (a) Control and Dynamical Systems, California Institute of Technology, MC 107-81, Pasadena, CA 91125. (b) Department of Mechanical Engineering, University of California Santa Barbara, Santa Barbara, CA 93106. Abstract We consider a class of spatially discrete wave equations that describe the motion of a system of linearly coupled oscillators perturbed by a nonlinear potential. We show that the dynamical behavior of this system cannot be understood by considering the slowest modes only: there is an "inverse cascade" in which the effects of changes in small scales are felt by the largest scales and the mean-field closure does not work. Despite this, a one and a half degree of freedom model is derived that includes the influence of the small-scale dynamics and predicts global conformational changes accurately. Thus, we provide a reduced model for a system in which there is no separation of scales. We analyze a specific coupled-oscillator system that models global conformation change in biomolecules, introduced in [4]. In this model, the conformational states are stable to random perturbations, yet global conformation change can be quickly and robustly induced by the action of a targeted control. We study the efficiency of small-scale perturbations on conformational change and show that "zipper" traveling wave perturbations provide an efficient means for inducing such change. A visualization method for the transport barriers in the reduced model yields insight into the mechanism by which the conformation change occurs. Key words: coarse variables, reduced model, coupled oscillators, dynamics of biomolecules PACS: 87.15.hp, 87.15.hj ∗ Corresponding author.