Hate Speech and Radicalisation Online: The OCCI Research Report


Recommended publications

Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany

University of Vermont ScholarWorks @ UVM UVM Honors College Senior Theses Undergraduate Theses 2018 Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany William Peter Fitz University of Vermont Follow this and additional works at: https://scholarworks.uvm.edu/hcoltheses Recommended Citation Fitz, William Peter, "Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany" (2018). UVM Honors College Senior Theses. 275. https://scholarworks.uvm.edu/hcoltheses/275 This Honors College Thesis is brought to you for free and open access by the Undergraduate Theses at ScholarWorks @ UVM. It has been accepted for inclusion in UVM Honors College Senior Theses by an authorized administrator of ScholarWorks @ UVM. For more information, please contact [email protected]. REACTIONARY POSTMODERNISM? NEOLIBERALISM, MULTICULTURALISM, THE INTERNET, AND THE IDEOLOGY OF THE NEW FAR RIGHT IN GERMANY A Thesis Presented by William Peter Fitz to The Faculty of the College of Arts and Sciences of The University of Vermont In Partial Fulfilment of the Requirements For the Degree of Bachelor of Arts In European Studies with Honors December 2018 Defense Date: December 4th, 2018 Thesis Committee: Alan E. Steinweis, Ph.D., Advisor Susanna Schrafstetter, Ph.D., Chairperson Adriana Borra, M.A. Table of Contents Introduction 1 Chapter One: Neoliberalism and Xenophobia 17 Chapter Two: Multiculturalism and Cultural Identity 52 Chapter Three: The Philosophy of the New Right 84 Chapter Four: The Internet and Meme Warfare 116 Conclusion 149 Bibliography 166 1 “Perhaps one will view the rise of the Alternative for Germany in the foreseeable future as inevitable, as a portent for major changes, one that is as necessary as it was predictable. -

Framing Through Names and Titles in German

Proceedings of the 12th Conference on Language Resources and Evaluation (LREC 2020), pages 4924–4932, Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC. Doctor Who? Framing Through Names and Titles in German. Esther van den Berg∗‡, Katharina Korfhage†, Josef Ruppenhofer∗‡, Michael Wiegand∗ and Katja Markert∗†. ∗Leibniz ScienceCampus, Heidelberg/Mannheim, Germany; †Institute of Computational Linguistics, Heidelberg University, Germany; ‡Institute for German Language, Mannheim, Germany. Abstract: Entity framing is the selection of aspects of an entity to promote a particular viewpoint towards that entity. We investigate entity framing of political figures through the use of names and titles in German online discourse, enhancing current research in entity framing through titling and naming that concentrates on English only. We collect tweets that mention prominent German politicians and annotate them for stance. We find that the formality of naming in these tweets correlates positively with their stance. This confirms sociolinguistic observations that naming and titling can have a status-indicating function and suggests that this function is dominant in German tweets mentioning political figures. We also find that this status-indicating function is much weaker in tweets from users that are politically left-leaning than in tweets by right-leaning users. This is in line with observations from moral psychology that left-leaning and right-leaning users assign different importance to maintaining social hierarchies. Keywords: framing, naming, Twitter, German, stance, sentiment, social media. 1. Introduction. An interesting language to contrast with English in terms of naming and titling is German. -

Free, Hateful, and Posted: Rethinking First Amendment Protection of Hate Speech in a Social Media World

Boston College Law Review Volume 60 Issue 7 Article 6 10-30-2019 Free, Hateful, and Posted: Rethinking First Amendment Protection of Hate Speech in a Social Media World Lauren E. Beausoleil Boston College Law School, [email protected] Follow this and additional works at: https://lawdigitalcommons.bc.edu/bclr Part of the First Amendment Commons, and the Internet Law Commons Recommended Citation Lauren E. Beausoleil, Free, Hateful, and Posted: Rethinking First Amendment Protection of Hate Speech in a Social Media World, 60 B.C.L. Rev. 2100 (2019), https://lawdigitalcommons.bc.edu/bclr/vol60/iss7/6 This Notes is brought to you for free and open access by the Law Journals at Digital Commons @ Boston College Law School. It has been accepted for inclusion in Boston College Law Review by an authorized editor of Digital Commons @ Boston College Law School. For more information, please contact [email protected]. FREE, HATEFUL, AND POSTED: RETHINKING FIRST AMENDMENT PROTECTION OF HATE SPEECH IN A SOCIAL MEDIA WORLD Abstract: Speech is meant to be heard, and social media allows for exaggeration of that fact by providing a powerful means of dissemination of speech while also distorting one’s perception of the reach and acceptance of that speech. Engagement in online “hate speech” can interact with the unique characteristics of the Internet to influence users’ psychological processing in ways that promote violence and reinforce hateful sentiments. Because hate speech does not squarely fall within any of the categories excluded from First Amendment protection, the United States’ stance on hate speech is unique in that it protects it. -

Kenneth Burke and the Theory of Scapegoating

Kenneth Burke and the Theory of Scapegoating Charles K. Bellinger Words sometimes play important roles in human history. I think, for example, of Martin Luther’s use of the word grace to shatter Medieval Catholicism, or the use of democracy as a rallying cry for the American colonists in their split with England, or Karl Marx’s vision of the proletariat as a class that would end all classes. More recently, freedom has been used as a mantra by those on the political left and the political right. If a president decides to go to war, with the argument that freedom will be spread in the Middle East, then we are reminded once again of the power of words in shaping human actions. This is a notion upon which Kenneth Burke placed great stress as he painted a picture of human beings as word-intoxicated, symbol-using agents whose motives ought to be understood logologically, that is, from the perspective of our use and abuse of words. In the following pages, I will argue that there is a key word that has the potential to make a large impact on human life in the future, the word scapegoat. This word is already in common use, of course, but I suggest that it is something akin to a ticking bomb in that it has untapped potential to change the way human beings think and act. This potential has two main aspects: 1) the ambiguity of the word as it is used in various contexts, and 2) the sense in which the word lies on the boundary between human self-consciousness and unself-consciousness. -

The Kremlin’s Trojan Horses | The Atlantic Council

Atlantic Council, Dinu Patriciu Eurasia Center. The Kremlin’s Trojan Horses: Russian Influence in France, Germany, and the United Kingdom. Alina Polyakova, Marlene Laruelle, Stefan Meister, and Neil Barnett. Foreword by Radosław Sikorski. ISBN: 978-1-61977-518-3. This report is written and published in accordance with the Atlantic Council Policy on Intellectual Independence. The authors are solely responsible for its analysis and recommendations. The Atlantic Council and its donors do not determine, nor do they necessarily endorse or advocate for, any of this report’s conclusions. November 2016. Table of Contents: Foreword; Introduction: The Kremlin’s Toolkit of Influence in Europe; France: Mainstreaming Russian Influence; Germany: Interdependence as Vulnerability; United Kingdom: Vulnerable but Resistant; Policy Recommendations: Resisting Russia’s Efforts to Influence, Infiltrate, and Inculcate; About the Authors. Foreword. In 2014, Russia seized Crimea through military force. With this act, the Kremlin redrew the political map of Europe and upended the rules of the acknowledged international order. Despite the threat Russia’s revanchist policies pose to European stability and established international law, some European politicians, experts, and civic groups have expressed support for—or sympathy with—the Kremlin’s actions. These allies represent a diverse network of political influence reaching deep into Europe’s core. The Kremlin uses these Trojan horses to destabilize European politics so efficiently, that even Russia’s limited might could become a decisive factor in matters of European and international security. -

Drucksache 18/10796

Deutscher Bundestag (German Bundestag), Drucksache (printed paper) 18/10796, 18th electoral term, 22 December 2016. Briefing by the Delegation of the Federal Republic of Germany to the Parliamentary Assembly of the Council of Europe: Session of the Parliamentary Assembly of the Council of Europe, 20 to 24 June 2016. Contents: I. Delegation members; II. Introduction; III. Proceedings of the third part-session of 2016; III.1 Elections and rules-of-procedure matters; III.2 Main topics of the debates; III.3 Guest speakers; IV. Agenda of the third part-session of 2016; V. Adopted recommendations and resolutions; VI. Speeches by German delegation members; VII. Office-holders of the Parliamentary Assembly of the Council of Europe; VIII. Standing Committee of 27 May 2016 in Tallinn; IX. Member states of the Council of Europe. I. Delegation members. Chaired by the head of delegation -

#politicallyincorrect: The Pejoration of Political Language

North Texas Journal of Undergraduate Research, Vol. 1, No. 1, 2019. http://honors.unt.edu #politicallyincorrect: The Pejoration of Political Language. Ashley Balcazar (Department of Linguistics, University of North Texas; Faculty Mentor: Dr. Tom Miles). Abstract: How is the term “political correctness” understood in the context of modern American politics, particularly in the context of the 2016 election? More specifically, what triggers perceived offensiveness in political language? At the crux of the matter is the distinction between oneself or one’s social group, those perceived as “the other,” and what one is and is not allowed to say in a social forum. This study aims to analyze common language usage and identify factors contributing to the offensification of political language in social media and the types of language in social media that trigger a sense of political outrage. We examine Facebook and Twitter memes, using API searches referring directly to the terms “PC” or “politically correct.” Dedoose, a text content-analysis package, is used to identify recurring themes in online interactions that are used to criticize perceived political enemies. Results show that themes primarily related to “feminism” and “redneck” reflect cross-cutting cleavages in the political landscape primarily related to Hillary Clinton’s candidacy. We also identify significant cleavages in racial identity and quantify these statistically. Our results complement other recent studies which aim to gauge the impact of social media on political and social polarization. Keywords: Political Correctness, PC Speech, Memes, Social Media, Content Analysis. Contents: Introduction; 1 Background: An Overview of the Controversy. [...] became a divisive inclusion in the American English lexicon. Language is subject to the collective approval of a society, yet a gulf separates conflicting perceptions of political correctness. -

A Legal Analysis of Biometric Mass Surveillance Practices in Germany, the Netherlands, and Poland

EUROPEAN DIGITAL RIGHTS. A LEGAL ANALYSIS OF BIOMETRIC MASS SURVEILLANCE PRACTICES IN GERMANY, THE NETHERLANDS, AND POLAND. By Luca Montag, Rory Mcleod, Lara De Mets, Meghan Gauld, Fraser Rodger, and Mateusz Pełka. INDEX: About the Edinburgh International Justice Initiative (EIJI); Introductory Note; List of Abbreviations; Key Terms; Foreword from European Digital Rights (EDRi); Introduction to Germany country study from EDRi; Germany; 1 Facial Recognition; 1.1 Local Government; 1.1.1 Case Study – Cologne; 1.2 Federal Government; 1.3 Biometric Technology Providers in Germany; 1.3.1 Hardware; 1.3.2 Software; 1.4 Legal Analysis; 1.4.1 German Law; 1.4.1.1 Scope; 1.4.1.2 Necessity; 1.4.2 EU Law; 1.4.3 European Convention on Human Rights; 1.4.4 International Human Rights Law; 1.4.5 ‘Biometric-Ready’ Cameras; 1.4.5.1 The right to dignity; 1.4.5.2 Structural Discrimination; 1.4.5.3 Proportionality; 2. Fingerprints on Personal Identity Cards; 2.1 Analysis; 2.1.1 Human rights concerns; 2.1.2 Consent; 2.1.3 Access Extension; 3. Online Age and Identity ‘Verification’; 3.1 Analysis; 4. COVID-19 Responses; 4.1 Analysis; 4.2 The Convenience of Control; 5. Conclusion; Introduction to the Netherlands country study from EDRi; The Netherlands; 1. Deployments by Public Entities; 1.1. Dutch police and law enforcement authorities; 1.1.1 CATCH Facial Recognition Surveillance Technology; 1.1.1.1 CATCH - Legal Analysis; 1.1.2. -

In American Politics Today, Even Fact-Checkers Are Viewed Through Partisan Lenses

IN AMERICAN POLITICS TODAY, EVEN FACT-CHECKERS ARE VIEWED THROUGH PARTISAN LENSES by David C. Barker, American University What can be done about the “post-truth” era in American politics? Many hope that beefed-up fact-checking by reputable nonpartisan organizations like Fact Checker, PolitiFact, FactCheck.org, and Snopes can equip citizens with the tools needed to identify lies, thus reducing the incentive politicians have to propagate untruths. Unfortunately, as revealed by research I have done with colleagues, fact-checking organizations have not provided a cure-all to misinformation. Fact-checkers cannot prevent the politicization of facts, because they themselves are evaluated through a partisan lens. Partisan Biases and Trust of Fact Checkers in the 2016 Election In May of 2016, just a couple of weeks before the California Democratic primary, my colleagues and I conducted an experiment that exposed a representative sample of Californians to the statement: “Nonpartisan fact-checking organizations like PolitiFact rate controversial candidate statements for truthfulness.” We also exposed one randomized half of the sample to the following statement and image: “Each presidential candidate's current PolitiFact average truthfulness score is placed on the scale below.” The Politifact image validated the mainstream narrative about Donald Trump’s disdain for facts, and also reinforced Bernie Sanders’s tell-it-like-it-is reputation. But it contradicted conventional wisdom by revealing that Clinton had been the most accurate candidate overall (though the difference between the Clinton and Sanders ratings was not statistically significant). What did we expect to find? Some observers presume that Republicans are the most impervious to professional fact-checking. -

Feminist Linguistic Theories and “Political Correctness”: Modifying the Discourse on Women?

Feminist Linguistic Theories and “Political Correctness”: Modifying the Discourse on Women? Christèle Le Bihan-Colleran, University of Poitiers, France. Abstract. This article proposes a historical perspective looking at the beginnings of feminist linguistics during the liberation movements and its link with the “politically correct” movement. Initially a mostly ironical left-wing expression, it was used by the right notably to denounce the attempt by this movement to reform the language used to describe racial, ethnic and sexual minorities. This attempt at linguistic reform rested on a theoretical approach to language use, with borrowings from feminist linguistic theories. This led to an association being made between feminist linguistic reforms and “political correctness”, thereby impacting the changes brought to the discourse about women. Keywords: feminist linguistic theories, political correctness, language, discourse, sexism. Introduction. In the late 1980s and early 1990s, a debate over what came to be known as the “politically correct” movement [1] erupted in the United States first and then in Britain. It focused notably on the linguistic reforms associated with this movement. These reforms aimed at eradicating all forms of discrimination in language use in particular through the adoption of speech codes and anti-harassment guidelines, so as to modify the discourse about racial and ethnic minorities but also women, and thereby bring about social change by transforming social attitudes. However, these attempts at a linguistic reform were viewed as a threat to freedom of speech by its detractors who used the phrase “political correctness” to denounce the imposition of a form of “linguistic correctness”. Conversely, their use of the phrase “political correctness” was widely viewed as an attack on the progress made by minorities as a result of the social movements of the 1960s and 1970s. -

Starr Forum: Russia's Information War on America

MIT Center for Intnl Studies | Starr Forum: Russia’s Information War on America CAROL SAIVETZ: Welcome everyone. We're delighted that so many people could join us today. Very excited that we have such a timely topic to discuss, and we have two experts in the field to discuss it. But before I do that, I'm supposed to tell you that this is an event that is co-sponsored by the Center for International Studies at MIT, the Security Studies program at MIT, and MIT Russia. I should also introduce myself. My name is Carol Saivetz. I'm a senior advisor at the Security Studies program at MIT, and I co-chair a seminar, along with my colleague Elizabeth Wood, whom we will meet after the talk. And we co-chair a seminar series called Focus on Russia. And this is part of that seminar series as well. I couldn't think of a better topic to talk about in the lead-up to the US presidential election, which is now only 40 days away. We've heard so much in 2016 about Russian attempts to influence the election then, and we're hearing again from the CIA and from the intelligence community that Russia is, again, trying to influence who shows up, where people vote. They are mimicking some of Donald Trump's talking points about Joe Biden's strength and intellectual capabilities, et cetera. And we've really brought together two experts in the field. Nina Jankowicz studies the intersection of democracy and technology in central and eastern Europe. -

0714685003.Pdf

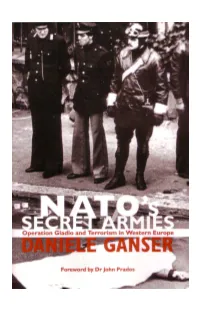

CONTENTS: Foreword; Acknowledgements; Acronyms; Introduction; 1 A terrorist attack in Italy; 2 A scandal shocks Western Europe; 3 The silence of NATO, CIA and MI6; 4 The secret war in Great Britain; 5 The secret war in the United States; 6 The secret war in Italy; 7 The secret war in France; 8 The secret war in Spain; 9 The secret war in Portugal; 10 The secret war in Belgium; 11 The secret war in the Netherlands; 12 The secret war in Luxemburg; 13 The secret war in Denmark; 14 The secret war in Norway; 15 The secret war in Germany; 16 The secret war in Greece; 17 The secret war in Turkey; Conclusion; Chronology; Notes; Select bibliography; Index. FOREWORD. At the height of the Cold War there was effectively a front line in Europe. Winston Churchill once called it the Iron Curtain and said it ran from Szczecin on the Baltic Sea to Trieste on the Adriatic Sea. Both sides deployed military power along this line in the expectation of a major combat. The Western European powers created the North Atlantic Treaty Organization (NATO) precisely to fight that expected war but the strength they could marshal remained limited. The Soviet Union, and after the mid-1950s the Soviet Bloc, consistently had greater numbers of troops, tanks, planes, guns, and other equipment. This is not the place to pull apart analyses of the military balance, to dissect issues of quantitative versus qualitative, or rigid versus flexible tactics.