Perception-Motivated Parallel Algorithms for Haptics

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Elementary Functions: Towards Automatically Generated, Efficient

Elementary functions: towards automatically generated, efficient, and vectorizable implementations. Hugues De Lassus Saint-Genies. To cite this version: Hugues De Lassus Saint-Genies. Elementary functions : towards automatically generated, efficient, and vectorizable implementations. Other [cs.OH]. Université de Perpignan, 2018. English. NNT : 2018PERP0010. tel-01841424. HAL Id: tel-01841424 https://tel.archives-ouvertes.fr/tel-01841424 Submitted on 17 Jul 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Awarded by the Université de Perpignan Via Domitia. Prepared within doctoral school 305 – Énergie et Environnement and the research unit DALI – LIRMM – CNRS UMR 5506. Specialty: Computer Science. Presented by Hugues de Lassus Saint-Geniès [email protected]. Elementary functions: towards automatically generated, efficient, and vectorizable implementations. Version submitted to the reviewers. Jury: M. Florent de Dinechin, Pr. INSA Lyon, Reviewer; Mme Fabienne Jézéquel, MC, HDR UParis 2, Reviewer; M. Marc Daumas, Pr. UPVD, Examiner; M. Lionel Lacassagne, Pr. UParis 6, Examiner; M. Daniel Menard, Pr. INSA Rennes, Examiner; M. Éric Petit, Ph.D. Intel, Examiner; M. David Defour, MC, HDR UPVD, Advisor; M. Guillaume Revy, MC UPVD, Co-advisor. In memory of my grandmother Françoise Lapergue and of Jos Perrot, Bigouden fisherman. -

Run-Time Adaptation to Heterogeneous Processing Units for Real-Time Stereo Vision

Run-time Adaptation to Heterogeneous Processing Units for Real-time Stereo Vision. Benjamin Ranft, Oliver Denninger. FZI Research Center for Information Technology, Karlsruhe, Germany. [email protected] [email protected] Abstract: Today's systems from smartphones to workstations are becoming increasingly parallel and heterogeneous: Processing units not only consist of more and more identical cores – furthermore, systems commonly contain either a discrete general-purpose GPU alongside their CPU or even integrate both on a single chip. To benefit from this trend, software should utilize all available resources and adapt to varying configurations, including different CPU and GPU performance or competing processes. This paper investigates parallelization and adaptation strategies using dense stereo vision as an example application – a basis e. g. for advanced driver assistance systems, but also robotics or gesture recognition. At this, task-driven as well as data element- and data flow-driven parallelization approaches are feasible. To achieve real-time performance, we first utilize data element-parallelism individually on each processing unit. On this basis, we develop and implement further strategies for heterogeneous systems and automatic adaptation to the hardware available at run-time. Each approach is described concerning i. a. the propagation of data to processors and its relation to established methods. An experimental evaluation with multiple test systems and usage scenarios reveals advan- [Figure 1. Parallelization approaches offered by our application: task, data element, data flow] units (GPUs) often outperform multi-core CPUs with single instruction, multiple data (SIMD) capabilities w. r. t. frame rates and energy efficiency [4], [5], although there are exceptions [6]. -

A Compiler Target Model for Line Associative Registers

University of Kentucky UKnowledge Theses and Dissertations--Electrical and Computer Engineering Electrical and Computer Engineering 2019 A Compiler Target Model for Line Associative Registers Paul S. Eberhart University of Kentucky, [email protected] Digital Object Identifier: https://doi.org/10.13023/etd.2019.141 Right click to open a feedback form in a new tab to let us know how this document benefits you. Recommended Citation Eberhart, Paul S., "A Compiler Target Model for Line Associative Registers" (2019). Theses and Dissertations--Electrical and Computer Engineering. 138. https://uknowledge.uky.edu/ece_etds/138 This Master's Thesis is brought to you for free and open access by the Electrical and Computer Engineering at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Electrical and Computer Engineering by an authorized administrator of UKnowledge. For more information, please contact [email protected]. STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known. -

High Performance Motion Detection: Some Trends

High performance motion detection: some trends toward new embedded architectures for vision systems. Lionel Lacassagne, Antoine Manzanera, Julien Denoulet, Alain Mérigot. To cite this version: Lionel Lacassagne, Antoine Manzanera, Julien Denoulet, Alain Mérigot. High performance motion detection: some trends toward new embedded architectures for vision systems. Journal of Real-Time Image Processing, Springer Verlag, 2009, 4 (2), pp.127-146. 10.1007/s11554-008-0096-7. hal-01131002. HAL Id: hal-01131002 https://hal.archives-ouvertes.fr/hal-01131002 Submitted on 12 Mar 2015. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Journal of Real-Time Image Processing manuscript No. (will be inserted by the editor). Received: date / Revised: date. Abstract: The goal of this article is to compare some optimized implementations on current High Performance Platforms in order to highlight architectural trends in the field of embedded architectures and to get an estimation of what should be the components of a next generation vision sys- Introduction: For more than thirty years, Moore’s law had ruled the performance and the development of computers: speed and clock frequency were the races to win. -

Low Power Image Processing: Analog Versus Digital Comparison Jacques-Olivier Klein, Lionel Lacassagne, Herve Mathias, Sebastien Moutault, Antoine Dupret

Low power image processing: analog versus digital comparison. Jacques-Olivier Klein, Lionel Lacassagne, Hervé Mathias, Sébastien Moutault, Antoine Dupret. To cite this version: Jacques-Olivier Klein, Lionel Lacassagne, Herve Mathias, Sebastien Moutault, Antoine Dupret. Low power image processing: analog versus digital comparison. 2005, pp.111-115, 10.1109/CAMP.2005.33. hal-00015955. HAL Id: hal-00015955 https://hal.archives-ouvertes.fr/hal-00015955 Submitted on 15 Dec 2005. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Institut d’Electronique Fondamentale, Bât. 220, Univ. Paris-Sud, France. Email: [email protected] Abstract: In this paper, a programmable analog retina is presented and compared with state of the art MPU for embedded imaging applications. The comparison is based on the energy requirement to implement the same image processing task. Results showed that analog processing requires lower power consumption than digital processing. In addition, the execution time is shorter since the size of the retina is reasonably large. [Figure: block diagram with blocks SENSORS, ARAM, MUX3, A/D-PROC, I/O] -

Low Overhead Memory Subsystem Design for a Multicore Parallel DSP Processor

Linköping Studies in Science and Technology, Dissertation No. 1532. Low Overhead Memory Subsystem Design for a Multicore Parallel DSP Processor. Jian Wang. Department of Electrical Engineering, Linköping University, SE-581 83 Linköping, Sweden. Linköping 2014. ISBN 978-91-7519-556-8, ISSN 0345-7524. Copyright © Jian Wang, 2014. Phone: +46 13 28 10 00. Author e-mail: [email protected]. Cover image: Combined Star and Ring onchip interconnection of the ePUMA multicore DSP. Parts of this thesis are reprinted with permission from the IEEE. Printed by UniTryck, Linköping University, Linköping, Sweden, 2014. Abstract: The physical scaling following Moore’s law is saturated while the requirement on computing keeps growing. The gain from improving silicon technology is only the shrinking of the silicon area, and the speed-power scaling has almost stopped in the last two years. It calls for new parallel computing architectures and new parallel programming methods. Traditional ASIC (Application Specific Integrated Circuits) hardware has been used for acceleration of Digital Signal Processing (DSP) subsystems on SoC (System-on-Chip). Embedded systems become more complicated, and more functions, more applications, and more features must be integrated in one ASIC chip to follow up the market requirements. At the same time, the product lifetime of a SoC with ASIC has been much reduced because of the dynamic market. -

FULLTEXT01.Pdf

Institutionen för systemteknik, Department of Electrical Engineering. Master’s Thesis: Implementation Aspects of Image Processing. Per Nordlöw. LITH-ISY-EX-3088. March 2001. Abstract: This Master’s Thesis discusses the different trade-offs a programmer needs to consider when constructing image processing systems. First, an overview of the different alternatives available is given followed by a focus on systems based on general hardware. General, in this case, means mass-market with a low price-performance ratio. The software environment is focused on UNIX, sometimes restricted to Linux, together with C, C++ and ANSI-standardized APIs. Contents: 1 Overview. 2 Parallel Processing: 2.1 Sequential, Parallel, Concurrent Execution; 2.2 Task and Data Parallelism; 2.3 Architecture Independent APIs (2.3.1 Parallel languages; 2.3.2 Threads; 2.3.3 Message Sending Interfaces). 3 Computer Architectures: 3.1 Flynn’s Taxonomy; 3.2 Memory Hierarchies; 3.3 Data Locality; 3.4 Instruction Locality; 3.5 Shared and Distributed Memory; 3.6 Pipelining; 3.7 Clustered Computing; 3.8 SWAR (3.8.1 SWARC and Scc; 3.8.2 SWAR in gcc; 3.8.3 Trend) -

Scalar Arithmetic Multiple Data: Customizable Precision for Deep Neural Networks

Scalar Arithmetic Multiple Data: Customizable Precision for Deep Neural Networks. Andrew Anderson, Michael J. Doyle, David Gregg. School of Computer Science and Statistics, Trinity College Dublin. aanderso, mjdoyle, [email protected] Abstract: Quantization of weights and activations in Deep Neural Networks (DNNs) is a powerful technique for network compression, and has enjoyed significant attention and success. However, much of the inference-time benefit of quantization is accessible only through customized hardware accelerators or with an FPGA implementation of quantized arithmetic. Building on prior work, we show how to construct very fast implementations of arbitrary bit-precise signed and unsigned integer operations using a software technique which logically embeds a vector architecture with custom bit-width lanes in fixed-width scalar arithmetic. At the strongest level of quantization, our approach yields a maximum speedup of ~6× on an x86 platform, and ~10× on an ARM platform versus quantization to native 8-bit integers. [Body excerpt:] custom precision in software. Instead, we argue that the greatest opportunities arise from defining entirely new operations that do not necessarily correspond to existing SIMD vector instructions. These new operations are not easy to find because they depend on novel insights into the sub-structure of existing machine instructions. Nonetheless, we provide an example of one such operation: a custom bit-level precise operation which computes the 1D convolution of two input vectors, based on the wide integer multiplier found in general-purpose processors. Mixed signed-unsigned integer arithmetic is a particular challenge, and we present a novel solution. While the performance benefits are attractive, writing this kind of code by hand is difficult and error prone. -

The Design of Scalar AES Instruction Set Extensions for RISC-V

The design of scalar AES Instruction Set Extensions for RISC-V Ben Marshall1, G. Richard Newell2, Dan Page1, Markku-Juhani O. Saarinen3 and Claire Wolf4 1 Department of Computer Science, University of Bristol {ben.marshall,daniel.page}@bristol.ac.uk 2 Microchip Technology Inc., USA [email protected] 3 PQShield, UK [email protected] 4 Symbiotic EDA [email protected] Abstract. Secure, efficient execution of AES is an essential requirement on most computing platforms. Dedicated Instruction Set Extensions (ISEs) are often included for this purpose. RISC-V is a (relatively) new ISA that lacks such a standardised ISE. We survey the state-of-the-art industrial and academic ISEs for AES, implement and evaluate five different ISEs, one of which is novel. We recommend separate ISEs for 32 and 64-bit base architectures, with measured performance improvements for an AES-128 block encryption of 4× and 10× with a hardware cost of 1.1K and 8.2K gates respectively, when compared to a software-only implementation based on use of T-tables. We also explore how the proposed standard bit-manipulation extension to RISC-V can be harnessed for efficient implementation of AES-GCM. Our work supports the ongoing RISC-V cryptography extension standardisation process. Keywords: ISE, AES, RISC-V 1 Introduction Implementing the Advanced Encryption Standard (AES). Compared to more general workloads, cryptographic algorithms like AES present a significant implementation challenge. They involve computationally intensive and specialised functionality, are used in a wide range of contexts, and form a central target in a complex attack surface. The demand for efficiency (however measured) is an example of this challenge in two ways. -

An Instruction Set Extension to Support Software-Based Masking

An Instruction Set Extension to Support Software-Based Masking Si Gao1, Johann Großschädl2, Ben Marshall3,6, Dan Page3, Thinh Pham3 and Francesco Regazzoni4,5 1 Alpen-Adria Universität Klagenfurt. [email protected] 2 Department of Computer Science, University of Luxembourg. [email protected] 3 Department of Computer Science, University of Bristol. {ben.marshall,daniel.page,th.pham}@bristol.ac.uk 4 University of Amsterdam, [email protected] 5 Università della Svizzera italiana, [email protected] 6 PQShield Ltd, Oxford, UK. [email protected] Abstract. In both hardware and software, masking can represent an effective means of hardening an implementation against side-channel attack vectors such as Differential Power Analysis (DPA). Focusing on software, however, the use of masking can present various challenges: specifically, it often 1) requires significant effort to translate any theoretical security properties into practice, and, even then, 2) imposes a significant overhead in terms of efficiency. To address both challenges, this paper explores the use of an Instruction Set Extension (ISE) to support masking in software-based implementations of a range of (symmetric) cryptographic kernels including AES: we design, implement, and evaluate such an ISE, using RISC-V as the base ISA. Our ISE-supported first-order masked implementation of AES, for example, is an order of magnitude more efficient than a software-only alternative with respect to both execution latency and memory footprint; this renders it comparable to an unmasked implementation using the same metrics, but also first-order secure. Keywords: side-channel attack, masking, RISC-V, ISE 1 Introduction The threat of implementation attacks. -

ESA IP Core Extensions for LEON2FT

ESA IP core extensions for LEON2FT: a new FPU with configurable (half/full/double) precision; SWAR (SIMD Within A Register) instruction extensions. TEC-ED & TEC-SW Final Presentation Days, 2020-05-13. ESA UNCLASSIFIED - For Official Use. ESA IP core extensions for LEON2FT: • Budget: 156363 EUR (GSTP) + CCN 10000 EUR • Duration: 2.5 years including CCN • Prime: daiteq s.r.o., Prague, Czech Republic (CZ) • Extensions will be available with the LEON2-FT ESA IP core • Main Objectives: 1. Develop a new versatile FPU for LEON2-FT; 2. Specify and implement custom instructions for LEON2-FT (SWAR: multiple simultaneous operations of reduced bit-width; apply new instructions on a GNSS SW receiver tracking loop); 3. CCN work: Port LEON2-FT and extensions to BRAVE-Medium • Future work: evaluation of and extensions for the NOEL-V RISC-V IP core. ESA IP Core Extensions for LEON2: daiFPU and SWAR. ESA contract 4000122242/17/NL/LF. Presenter: Martin Daněk, daiteq s.r.o. www.daiteq.com Company overview: Based in Prague, the Czech Republic. Founded in 2013. Focus on: embedded systems, HW and low-level SW design; FPGA acceleration for image processing and AI; processor architecture; floating-point processing; customized configurable datapaths; dataflow processing, microthreading; independent technical reviews. Background: 15+ years of experience in digital design, FPGA technology, processor architecture, algorithm design, control. 13.5.2020 Copyright (c) 2020 daiteq s.r.o. Agenda: Overview of the activity; Application domain: GNSS; Performance evaluation: tracking loop; LEON2FT extensions: daiFPU, SWAR = SIMD-within-a-register; Resource requirements: daiFPU, SWAR; Performance evaluation: Whetstone; Software support: binutils, llvm, SoftFloat; Experience/Summary. -

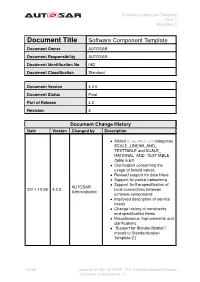

Software Component Template V4.2.0 R4.0 Rev 3

Software Component Template V4.2.0 R4.0 Rev 3. Document Title: Software Component Template. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 062. Document Classification: Standard. Document Version: 4.2.0. Document Status: Final. Part of Release: 4.0. Revision: 3. Document Change History (Date, Version, Changed by, Description): 2011-10-26, 4.2.0, AUTOSAR Administration: • Added CompuMethod categories SCALE_LINEAR_AND_TEXTTABLE and SCALE_RATIONAL_AND_TEXTTABLE (table 5.67) • Clarification concerning the usage of invalid values • Revised support for data filters • Support for partial networking • Support for the specification of local connections between software-components • Improved description of service needs • Change history of constraints and specification items • Miscellaneous improvements and clarifications • “Support for Standardization” moved to Standardization Template [1]. 2010-10-14, 4.1.0, AUTOSAR Administration: • Remove restriction on data type of inter-runnable variables • Rework end-to-end communication protection • Add more constraints on the usage of the meta-model • Various fixes and clarifications • New requirements tracing table • Support for fixed data exchange • Implementation of meta-model cleanup • Fundamental revision of the data type concept • Support for variant handling • Support for end-to-end communication protection • Support for documentation AUTOSAR • Support for stopping