The Design of Scalar AES Instruction Set Extensions for RISC-V

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ADSP-21065L SHARC User's Manual; Chapter 2, Computation

&20387$7,2181,76 Figure 2-0. Table 2-0. Listing 2-0. The processor’s computation units provide the numeric processing power for performing DSP algorithms, performing operations on both fixed-point and floating-point numbers. Each computation unit executes instructions in a single cycle. The processor contains three computation units: • An arithmetic/logic unit (ALU) Performs a standard set of arithmetic and logic operations in both fixed-point and floating-point formats. • A multiplier Performs floating-point and fixed-point multiplication as well as fixed-point dual multiply/add or multiply/subtract operations. •A shifter Performs logical and arithmetic shifts, bit manipulation, field deposit and extraction operations on 32-bit operands and can derive exponents as well. ADSP-21065L SHARC User’s Manual 2-1 PM Data Bus DM Data Bus Register File Multiplier Shifter ALU 16 × 40-bit MR2 MR1 MR0 Figure 2-1. Computation units block diagram The computation units are architecturally arranged in parallel, as shown in Figure 2-1. The output from any computation unit can be input to any computation unit on the next cycle. The computation units store input operands and results locally in a ten-port register file. The Register File is accessible to the processor’s pro- gram memory data (PMD) bus and its data memory data (DMD) bus. Both of these buses transfer data between the computation units and internal memory, external memory, or other parts of the processor. This chapter covers these topics: • Data formats • Register File data storage and transfers -

New Instruction Set Extensions

New Instruction Set Extensions Instruction Set Innovation in Intels Processor Code Named Haswell [email protected] Agenda • Introduction - Overview of ISA Extensions • Haswell New Instructions • New Instructions Overview • Intel® AVX2 (256-bit Integer Vectors) • Gather • FMA: Fused Multiply-Add • Bit Manipulation Instructions • TSX/HLE/RTM • Tools Support for New Instruction Set Extensions • Summary/References Copyright© 2012, Intel Corporation. All rights reserved. Partially Intel Confidential Information. 2 *Other brands and names are the property of their respective owners. Instruction Set Architecture (ISA) Extensions 199x MMX, CMOV, Multiple new instruction sets added to the initial 32bit instruction PAUSE, set of the Intel® 386 processor XCHG, … 1999 Intel® SSE 70 new instructions for 128-bit single-precision FP support 2001 Intel® SSE2 144 new instructions adding 128-bit integer and double-precision FP support 2004 Intel® SSE3 13 new 128-bit DSP-oriented math instructions and thread synchronization instructions 2006 Intel SSSE3 16 new 128-bit instructions including fixed-point multiply and horizontal instructions 2007 Intel® SSE4.1 47 new instructions improving media, imaging and 3D workloads 2008 Intel® SSE4.2 7 new instructions improving text processing and CRC 2010 Intel® AES-NI 7 new instructions to speedup AES 2011 Intel® AVX 256-bit FP support, non-destructive (3-operand) 2012 Ivy Bridge NI RNG, 16 Bit FP 2013 Haswell NI AVX2, TSX, FMA, Gather, Bit NI A long history of ISA Extensions ! Copyright© 2012, Intel Corporation. All rights reserved. Partially Intel Confidential Information. 3 *Other brands and names are the property of their respective owners. Instruction Set Architecture (ISA) Extensions • Why new instructions? • Higher absolute performance • More energy efficient performance • New application domains • Customer requests • Fill gaps left from earlier extensions • For a historical overview see http://en.wikipedia.org/wiki/X86_instruction_listings Copyright© 2012, Intel Corporation. -

A Quantitative Study of Advanced Encryption Standard Performance

United States Military Academy USMA Digital Commons West Point ETD 12-2018 A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility Daniel Hawthorne United States Military Academy, [email protected] Follow this and additional works at: https://digitalcommons.usmalibrary.org/faculty_etd Part of the Information Security Commons Recommended Citation Hawthorne, Daniel, "A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility" (2018). West Point ETD. 9. https://digitalcommons.usmalibrary.org/faculty_etd/9 This Doctoral Dissertation is brought to you for free and open access by USMA Digital Commons. It has been accepted for inclusion in West Point ETD by an authorized administrator of USMA Digital Commons. For more information, please contact [email protected]. A QUANTITATIVE STUDY OF ADVANCED ENCRYPTION STANDARD PERFORMANCE AS IT RELATES TO CRYPTOGRAPHIC ATTACK FEASIBILITY A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree of Doctor of Computer Science By Daniel Stephen Hawthorne Colorado Technical University December, 2018 Committee Dr. Richard Livingood, Ph.D., Chair Dr. Kelly Hughes, DCS, Committee Member Dr. James O. Webb, Ph.D., Committee Member December 17, 2018 © Daniel Stephen Hawthorne, 2018 1 Abstract The advanced encryption standard (AES) is the premier symmetric key cryptosystem in use today. Given its prevalence, the security provided by AES is of utmost importance. Technology is advancing at an incredible rate, in both capability and popularity, much faster than its rate of advancement in the late 1990s when AES was selected as the replacement standard for DES. Although the literature surrounding AES is robust, most studies fall into either theoretical or practical yet infeasible. -

Elementary Functions: Towards Automatically Generated, Efficient

Elementary functions : towards automatically generated, efficient, and vectorizable implementations Hugues De Lassus Saint-Genies To cite this version: Hugues De Lassus Saint-Genies. Elementary functions : towards automatically generated, efficient, and vectorizable implementations. Other [cs.OH]. Université de Perpignan, 2018. English. NNT : 2018PERP0010. tel-01841424 HAL Id: tel-01841424 https://tel.archives-ouvertes.fr/tel-01841424 Submitted on 17 Jul 2018 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Délivré par l’Université de Perpignan Via Domitia Préparée au sein de l’école doctorale 305 – Énergie et Environnement Et de l’unité de recherche DALI – LIRMM – CNRS UMR 5506 Spécialité: Informatique Présentée par Hugues de Lassus Saint-Geniès [email protected] Elementary functions: towards automatically generated, efficient, and vectorizable implementations Version soumise aux rapporteurs. Jury composé de : M. Florent de Dinechin Pr. INSA Lyon Rapporteur Mme Fabienne Jézéquel MC, HDR UParis 2 Rapporteur M. Marc Daumas Pr. UPVD Examinateur M. Lionel Lacassagne Pr. UParis 6 Examinateur M. Daniel Menard Pr. INSA Rennes Examinateur M. Éric Petit Ph.D. Intel Examinateur M. David Defour MC, HDR UPVD Directeur M. Guillaume Revy MC UPVD Codirecteur À la mémoire de ma grand-mère Françoise Lapergue et de Jos Perrot, marin-pêcheur bigouden. -

Hamming Weight Attacks on Cryptographic Hardware – Breaking Masking Defense?

Hamming Weight Attacks on Cryptographic Hardware { Breaking Masking Defense? Marcin Gomu lkiewicz1 and Miros law Kuty lowski12 1 Cryptology Centre, Poznan´ University 2 Institute of Mathematics, Wroc law University of Technology, ul. Wybrzeze_ Wyspianskiego´ 27 50-370 Wroc law, Poland Abstract. It is believed that masking is an effective countermeasure against power analysis attacks: before a certain operation involving a key is performed in a cryptographic chip, the input to this operation is com- bined with a random value. This has to prevent leaking information since the input to the operation is random. We show that this belief might be wrong. We present a Hamming weight attack on an addition operation. It works with random inputs to the addition circuit, hence masking even helps in the case when we cannot control the plaintext. It can be applied to any round of the encryption. Even with moderate accuracy of measuring power consumption it de- termines explicitly subkey bits. The attack combines the classical power analysis (over Hamming weight) with the strategy of the saturation at- tack performed using a random sample. We conclude that implementing addition in cryptographic devices must be done very carefully as it might leak secret keys used for encryption. In particular, the simple key schedule of certain algorithms (such as IDEA and Twofish) combined with the usage of addition might be a serious danger. Keywords: cryptographic hardware, side channel cryptanalysis, Ham- ming weight, power analysis 1 Introduction Symmetric encryption algorithms are often used to protect data stored in inse- cure locations such as hard disks, archive copies, and so on. -

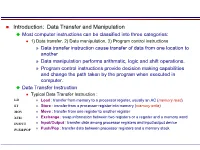

Most Computer Instructions Can Be Classified Into Three Categories

Introduction: Data Transfer and Manipulation Most computer instructions can be classified into three categories: 1) Data transfer, 2) Data manipulation, 3) Program control instructions » Data transfer instruction cause transfer of data from one location to another » Data manipulation performs arithmatic, logic and shift operations. » Program control instructions provide decision making capabilities and change the path taken by the program when executed in computer. Data Transfer Instruction Typical Data Transfer Instruction : LD » Load : transfer from memory to a processor register, usually an AC (memory read) ST » Store : transfer from a processor register into memory (memory write) MOV » Move : transfer from one register to another register XCH » Exchange : swap information between two registers or a register and a memory word IN/OUT » Input/Output : transfer data among processor registers and input/output device PUSH/POP » Push/Pop : transfer data between processor registers and a memory stack MODE ASSEMBLY REGISTER TRANSFER CONVENTION Direct Address LD ADR ACM[ADR] Indirect Address LD @ADR ACM[M[ADR]] Relative Address LD $ADR ACM[PC+ADR] Immediate Address LD #NBR ACNBR Index Address LD ADR(X) ACM[ADR+XR] Register LD R1 ACR1 Register Indirect LD (R1) ACM[R1] Autoincrement LD (R1)+ ACM[R1], R1R1+1 8 Addressing Mode for the LOAD Instruction Data Manipulation Instruction 1) Arithmetic, 2) Logical and bit manipulation, 3) Shift Instruction Arithmetic Instructions : NAME MNEMONIC Increment INC Decrement DEC Add ADD Subtract SUB Multiply -

Hyper-Threading Performance with Intel Cpus for Linux SAP Deployment on Proliant Servers

Hyper-Threading Performance with Intel CPUs for Linux SAP Deployment on ProLiant Servers Session #3798 Hein van den Heuvel Performance Engineer Hewlett-Packard © 2004 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice Topics • Hyper-Threading Intro • Implementation details Intel, IBM, Sun • Linux implementation • My own tests • SAP (SD) benchmark • Benchmark Results • Conclusions: (18% improvement for SAP 2-tier) Intel Hyper-Threading Overview “Hyper-Threading Technology is a form of simultaneous multithreading technology (SMT), where multiple threads of software applications can be run simultaneously on one processor. This is achieved by duplicating the architectural state on each processor, while sharing one set of processor execution resources. The architectural state tracks the flow of a program or thread, and the execution resources are the units on the processor that do the work: add, multiply, load, etc. “ http://www.intel.com/business/bss/products/hyperthreading/server/ht_server.pdf http://www.intel.com/technology/hyperthread/ Intel HT in a picture To-be-updated Hyper-Threading Versus Dual Core • HP (PA + ipf) opted for ‘dual core’ technology. − Each processor has full set of resources − Only limitation is shared ‘system’ connection. − Allows for dense (8p – 4u – 4640) − minimally constrained systems • Software licensing impact (Oracle!) • Hyper-Threading technology effectiveness will depend on application IBM P5 SMT Summary Enhanced Simultaneous Multi-Threading features To improve SMT performance for various workload mixes and provide robust quality of service, POWER5 provides two features: • Dynamic resource balancing – The objective of dynamic resource balancing is to ensure that the two threads executing on the same processor flow smoothly through the system. -

Robust Architectural Support for Transactional Memory in the Power Architecture

Robust Architectural Support for Transactional Memory in the Power Architecture Harold W. Cain∗ Brad Frey Derek Williams IBM Research IBM STG IBM STG Yorktown Heights, NY, USA Austin, TX, USA Austin, TX, USA [email protected] [email protected] [email protected] Maged M. Michael Cathy May Hung Le IBM Research IBM Research (retired) IBM STG Yorktown Heights, NY, USA Yorktown Heights, NY, USA Austin, TX, USA [email protected] [email protected] [email protected] ABSTRACT in current p795 systems, with 8 TB of DRAM), as well as On the twentieth anniversary of the original publication [10], strengths in RAS that differentiate it in the market, adding following ten years of intense activity in the research lit- TM must not compromise any of these virtues. A robust erature, hardware support for transactional memory (TM) system is one that is sturdy in construction, a trait that has finally become a commercial reality, with HTM-enabled does not usually come to mind in respect to HTM systems. chips currently or soon-to-be available from many hardware We structured TM to work in harmony with features that vendors. In this paper we describe architectural support for support the architecture's scalability. Our goal has been to TM provide a comprehensive programming environment includ- TM added to a future version of the Power ISA . Two im- ing support for simple system calls and debug aids, while peratives drove the development: the desire to complement providing a robust (in the sense of "no surprises") execu- our weakly-consistent memory model with a more friendly tion environment with reasonably consistent performance interface to simplify the development and porting of multi- and without unexpected transaction failures.2 TM must be threaded applications, and the need for robustness beyond usable throughout the system stack: in hypervisors, oper- that of some early implementations. -

Type I Codes Over GF(4)

Type I Codes over GF(4) Hyun Kwang Kim¤ San 31, Hyoja Dong Department of Mathematics Pohang University of Science and Technology Pohang, 790-784, Korea e-mail: [email protected] Dae Kyu Kim School of Electronics & Information Engineering Chonbuk National University Chonju, Chonbuk 561-756, Korea e-mail: [email protected] Jon-Lark Kimy Department of Mathematics University of Louisville Louisville, KY 40292, USA e-mail: [email protected] Abstract It was shown by Gaborit el al. [10] that a Euclidean self-dual code over GF (4) with the property that there is a codeword whose Lee weight ´ 2 (mod 4) is of interest because of its connection to a binary singly-even self-dual code. Such a self-dual code over GF (4) is called Type I. The purpose of this paper is to classify all Type I codes of lengths up to 10 and extremal Type I codes of length 12, and to construct many new extremal Type I codes over GF (4) of ¤The present study was supported by Com2MaC-KOSEF, POSTECH BSRI research fund, and grant No. R01-2006-000-11176-0 from the Basic Research Program of the Korea Science & Engineering Foundation. ycorresponding author, supported in part by a Project Completion Grant from the University of Louisville. 1 lengths from 14 to 22 and 34. As a byproduct, we construct a new extremal singly-even self-dual binary [36; 18; 8] code, and a new ex- tremal singly-even self-dual binary [68; 34; 12] code with a previously unknown weight enumerator W2 for ¯ = 95 and γ = 1. -

![Arxiv:1311.6244V1 [Physics.Comp-Ph] 25 Nov 2013](https://docslib.b-cdn.net/cover/4591/arxiv-1311-6244v1-physics-comp-ph-25-nov-2013-444591.webp)

Arxiv:1311.6244V1 [Physics.Comp-Ph] 25 Nov 2013

An efficient implementation of Slater-Condon rules Anthony Scemama,∗ Emmanuel Giner November 26, 2013 Abstract Slater-Condon rules are at the heart of any quantum chemistry method as they allow to simplify 3N- dimensional integrals as sums of 3- or 6-dimensional integrals. In this paper, we propose an efficient implementation of those rules in order to identify very rapidly which integrals are involved in a matrix ele- ment expressed in the determinant basis set. This implementation takes advantage of the bit manipulation instructions on x86 architectures that were introduced in 2008 with the SSE4.2 instruction set. Finding which spin-orbitals are involved in the calculation of a matrix element doesn't depend on the number of electrons of the system. In this work we consider wave functions Ψ ex- For two determinants which differ by two spin- pressed as linear combinations of Slater determinants orbitals: D of orthonormal spin-orbitals φ(r): jl hDjO1jDiki = 0 (4) X Ψ = c D (1) jl i i hDjO2jDiki = hφiφkjO2jφjφli − hφiφkjO2jφlφji i All other matrix elements involving determinants Using the Slater-Condon rules,[1, 2] the matrix ele- with more than two substitutions are zero. ments of any one-body (O1) or two-body (O2) oper- An efficient implementation of those rules requires: ator expressed in the determinant space have simple expressions involving one- and two-electron integrals 1. to find the number of spin-orbital substitutions in the spin-orbital space. The diagonal elements are between two determinants given by: 2. to find which spin-orbitals are involved in the X substitution hDjO1jDi = hφijO1jφii (2) i2D 3. -

Run-Time Adaptation to Heterogeneous Processing Units for Real-Time Stereo Vision

Run-time Adaptation to Heterogeneous Processing Units for Real-time Stereo Vision Benjamin Ranft Oliver Denninger FZI Research Center for Information Technology FZI Research Center for Information Technology Karlsruhe, Germany Karlsruhe, Germany [email protected] [email protected] Abstract—Todays systems from smartphones to workstations task are becoming increasingly parallel and heterogeneous: Pro- data cessing units not only consist of more and more identical element cores – furthermore, systems commonly contain either a discrete general-purpose GPU alongside with their CPU or even integrate both on a single chip. To benefit from this trend, software should utilize all available resources and adapt to varying configurations, including different CPU and GPU performance or competing processes. This paper investigates parallelization and adaptation strate- gies using dense stereo vision as an example application – a basis e. g. for advanced driver assistance systems, but also robotics or gesture recognition. At this, task-driven as well as data element- and data flow-driven parallelization approaches data are feasible. To achieve real-time performance, we first utilize flow data element-parallelism individually on each processing unit. Figure 1. Parallelization approaches offered by our application On this basis, we develop and implement further strategies for heterogeneous systems and automatic adaptation to the units (GPUs) often outperform multi-core CPUs with single hardware available at run-time. Each approach is described instruction, multiple data (SIMD) capabilities w. r. t. frame concerning i. a. the propagation of data to processors and its rates and energy efficiency [4], [5], although there are relation to established methods. An experimental evaluation with multiple test systems and usage scenarious reveals advan- exceptions [6]. -

On Security and Privacy for Networked Information Society

Antti Hakkala On Security and Privacy for Networked Information Society Observations and Solutions for Security Engineering and Trust Building in Advanced Societal Processes Turku Centre for Computer Science TUCS Dissertations No 225, November 2017 ON SECURITY AND PRIVACY FOR NETWORKED INFORMATIONSOCIETY Observations and Solutions for Security Engineering and Trust Building in Advanced Societal Processes antti hakkala To be presented, with the permission of the Faculty of Mathematics and Natural Sciences of the University of Turku, for public criticism in Auditorium XXII on November 18th, 2017, at 12 noon. University of Turku Department of Future Technologies FI-20014 Turun yliopisto 2017 supervisors Adjunct professor Seppo Virtanen, D. Sc. (Tech.) Department of Future Technologies University of Turku Turku, Finland Professor Jouni Isoaho, D. Sc. (Tech.) Department of Future Technologies University of Turku Turku, Finland reviewers Professor Tuomas Aura Department of Computer Science Aalto University Espoo, Finland Professor Olaf Maennel Department of Computer Science Tallinn University of Technology Tallinn, Estonia opponent Professor Jarno Limnéll Department of Communications and Networking Aalto University Espoo, Finland The originality of this thesis has been checked in accordance with the University of Turku quality assurance system using the Turnitin OriginalityCheck service ISBN 978-952-12-3607-5 (Online) ISSN 1239-1883 To my wife Maria, I am forever grateful for everything. Thank you. ABSTRACT Our society has developed into a networked information soci- ety, in which all aspects of human life are interconnected via the Internet — the backbone through which a significant part of communications traffic is routed. This makes the Internet ar- guably the most important piece of critical infrastructure in the world.