2 a First Look at Quantum Fields

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Electron-Positron Pairs in Physics and Astrophysics

Electron-positron pairs in physics and astrophysics: from heavy nuclei to black holes Remo Ruffini1,2,3, Gregory Vereshchagin1 and She-Sheng Xue1 1 ICRANet and ICRA, p.le della Repubblica 10, 65100 Pescara, Italy, 2 Dip. di Fisica, Universit`adi Roma “La Sapienza”, Piazzale Aldo Moro 5, I-00185 Roma, Italy, 3 ICRANet, Universit´ede Nice Sophia Antipolis, Grand Chˆateau, BP 2135, 28, avenue de Valrose, 06103 NICE CEDEX 2, France. Abstract Due to the interaction of physics and astrophysics we are witnessing in these years a splendid synthesis of theoretical, experimental and observational results originating from three fundamental physical processes. They were originally proposed by Dirac, by Breit and Wheeler and by Sauter, Heisenberg, Euler and Schwinger. For almost seventy years they have all three been followed by a continued effort of experimental verification on Earth-based experiments. The Dirac process, e+e 2γ, has been by − → far the most successful. It has obtained extremely accurate experimental verification and has led as well to an enormous number of new physics in possibly one of the most fruitful experimental avenues by introduction of storage rings in Frascati and followed by the largest accelerators worldwide: DESY, SLAC etc. The Breit–Wheeler process, 2γ e+e , although conceptually simple, being the inverse process of the Dirac one, → − has been by far one of the most difficult to be verified experimentally. Only recently, through the technology based on free electron X-ray laser and its numerous applications in Earth-based experiments, some first indications of its possible verification have been reached. The vacuum polarization process in strong electromagnetic field, pioneered by Sauter, Heisenberg, Euler and Schwinger, introduced the concept of critical electric 2 3 field Ec = mec /(e ). -

An Original Way of Teaching Geometrical Optics C. Even

Learning through experimenting: an original way of teaching geometrical optics C. Even1, C. Balland2 and V. Guillet3 1 Laboratoire de Physique des Solides, CNRS, Université Paris-Sud, Université Paris-Saclay, F-91405 Orsay, France 2 Sorbonne Universités, UPMC Université Paris 6, Université Paris Diderot, Sorbonne Paris Cite, CNRS-IN2P3, Laboratoire de Physique Nucléaire et des Hautes Energies (LPNHE), 4 place Jussieu, F-75252, Paris Cedex 5, France 3 Institut d’Astrophysique Spatiale, CNRS, Université Paris-Sud, Université Paris-Saclay, F- 91405 Orsay, France E-mail : [email protected] Abstract Over the past 10 years, we have developed at University Paris-Sud a first year course on geometrical optics centered on experimentation. In contrast with the traditional top-down learning structure usually applied at university, in which practical sessions are often a mere verification of the laws taught during preceding lectures, this course promotes “active learning” and focuses on experiments made by the students. Interaction among students and self questioning is strongly encouraged and practicing comes first, before any theoretical knowledge. Through a series of concrete examples, the present paper describes the philosophy underlying the teaching in this course. We demonstrate that not only geometrical optics can be taught through experiments, but also that it can serve as a useful introduction to experimental physics. Feedback over the last ten years shows that our approach succeeds in helping students to learn better and acquire motivation and autonomy. This approach can easily be applied to other fields of physics. Keywords : geometrical optics, experimental physics, active learning 1. Introduction Teaching geometrical optics to first year university students is somewhat challenging, as this field of physics often appears as one of the less appealing to students. -

Lhcb Prepares for RICH Physics

I n t e r n at I o n a l J o u r n a l o f H I g H - e n e r g y P H y s I c s CERN COURIERV o l u m e 47 n u m b e r 6 J u ly/a u g u s t 2 0 07 LHCb prepares for RICH physics NEUTRINOS LHC FOCUS InSIDE STORY Borexino starts On the trail of At the far side to take data p8 heavy flavour p30 of the world p58 CCJulAugCover1.indd 1 11/7/07 13:50:51 Project1 10/7/07 13:56 Page 1 CONTENTS Covering current developments in high- energy physics and related fields worldwide CERN Courier is distributed to member-state governments, institutes and laboratories affiliated with CERN, and to their personnel. It is published monthly, except for January and August. The views expressed are not necessarily those of the CERN management. Editor Christine Sutton CERN CERN, 1211 Geneva 23, Switzerland E-mail [email protected] Fax +41 (0) 22 785 0247 Web cerncourier.com Advisory board James Gillies, Rolf Landua and Maximilian Metzger Laboratory correspondents: COURIERo l u m e u m b e r u ly u g u s t V 47 N 6 J /A 20 07 Argonne National Laboratory (US) Cosmas Zachos Brookhaven National Laboratory (US) P Yamin Cornell University (US) D G Cassel DESY Laboratory (Germany) Ilka Flegel, Ute Wilhelmsen EMFCSC (Italy) Anna Cavallini Enrico Fermi Centre (Italy) Guido Piragino Fermi National Accelerator Laboratory (US) Judy Jackson Forschungszentrum Jülich (Germany) Markus Buescher GSI Darmstadt (Germany) I Peter IHEP, Beijing (China) Tongzhou Xu IHEP, Serpukhov (Russia) Yu Ryabov INFN (Italy) Barbara Gallavotti Jefferson Laboratory (US) Steven Corneliussen JINR -

On the Occasion of the 14Th Marcel Grossmann Meeting

ICRANet on the occasion of the 14 th Marcel Grossmann Meeting – MGXIV in celebration of the International Year of Light 2015 the 100 th anniversary of the Einstein’s equations the golden jubilee of Relativistic Astrophysics The ICRANet Seats The University of Rome “La Sapienza” where the Physics Department hosts the ICRANet ICRANet Headquarters in Pescara (Italy). seat in Rome (Italy). ICRANet seat in Nice (France). National Academy of Sciences of Armenia, which hosts the ICRANet seat in Yerevan (Armenia). (Above:) CBPF, which hosts the ICRANet seat in Rio de Janeiro. (Below:) The planned seat at Cassino da Urca (Brazil). II This brochure reviews some background facts concerning the founding of ICRANet and its current structures and then turns to the 2015 celebrations of the Year of Light and the ICRANet initiated International Relativistic Astrophysics Ph.D. program (the IRAP-PhD). It then addresses the birth of relativistic astrophysics following the first fifty years of the existence of general relativity plagued mainly by the absence of observational or experimental activity. Four events marked the onset of relativistic astrophysics: the discovery by Schmidt of the first quasar in 1962, of Scorpius X1 by Riccardo Giacconi in 1963, of the cosmic background radiation by Penzias and Wilson in 1964, and of pulsars by Jocelyn-Bell and Antony Hewish in 1967. These events led to a systematic development of the understanding of the four pillars of relativistic astrophysics: supernovae where observations of the CRAB Nebula are still relevant today, white dwarfs and neutron stars, black holes and their first identification in Nature in Cygnus X1 found by Riccardo Giacconi with the UHURU satellite based on the conceptual background developed by our group in Princeton, and finally the discovery of gamma ray bursts as the largest energy source delivered in the shortest time of any astrophysical phenomenon. -

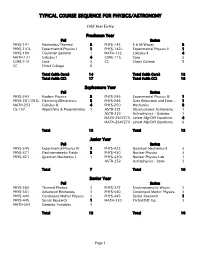

Typical Course Sequence for Physics/Astronomy

TYPICAL COURSE SEQUENCE FOR PHYSICS/ASTRONOMY Odd Year Entry Freshman Year Fall Spring PHYS-141 Mechanics/Thermal 3 PHYS-142 E & M/Waves 3 PHYS-141L Experimental Physics I 1 PHYS-142L Experimental Physics II 1 PHYS-190 Freshman Seminar 1 MATH-132 Calculus II 4 MATH-131 Calculus I 4 CORE-115 Core 5 CORE-110 Core 5 CC Christ College 8 CC Christ College 8 Total (with Core) 14 Total (with Core) 13 Total (with CC) 17 Total (with CC) 16 Sophomore Year Fall Spring PHYS-243 Modern Physics 3 PHYS-245 Experimental Physics III 1 PHYS-281/281L Electricity/Electronics 3 PHYS-246 Data Reduction and Error... 1 MATH-253 Calculus III 4 PHYS-250 Mechanics 3 CS-157 Algorithms & Programming 3 ASTR-221 Observational Astronomy 1 ASTR-253 Astrophysics - Galaxies 3 MATH-260/270 Linear Alg/Diff Equations 4 MATH-264/270 Linear Alg/Diff Equations 6 Total 13 Total 13 Junior Year Fall Spring PHYS-345 Experimental Physics IV 1 PHYS-422 Quantum Mechanics II 3 PHYS-371 Electromagnetic Fields 3 PHYS-430 Nuclear Physics 3 PHYS-421 Quantum Mechanics I 3 PHYS-430L Nuclear Physics Lab 1 ASTR-252 Astrophysics - Stars 3 Total 7 Total 10 Senior Year Fall Spring PHYS-360 Thermal Physics 3 PHYS-372 Electromagnetic Waves 3 PHYS-381 Advanced Mechanics 3 PHYS-440 Condensed Matter Physics 3 PHYS-440 Condensed Matter Physics 3 PHYS-445 Senior Research 1 PHYS-445 Senior Research 1 MATH-330 Partial Diff. Eq. 3 MATH-334 Complex Variables 3 Total 13 Total 10 Page 1 TYPICAL COURSE SEQUENCE FOR PHYSICS/ASTRONOMY Even Year Entry Freshman Year Fall Spring PHYS-141 Mechanics/Thermal 3 PHYS-142 E & M/Waves 3 PHYS-141L Experimental Physics I 1 PHYS-142L Experimental Physics II 1 PHYS-190 Freshman Seminar 1 MATH-132 Calculus II 4 MATH-131 Calculus I 4 CORE-115 Core 5 CORE-110 Core 5 CC Christ College 8 CC Christ College 8 Total (with Core) 14 Total (with Core) 13 Total (with CC) 17 Total (with CC) 16 Sophomore Year Fall Spring PHYS-243 Modern Physics 3 PHYS-245 Experimental Physics III 1 PHYS-281 Electricity/Electronics 3 PHYS-250 Mechanics 3 MATH-253 Calculus III 4 PHYS-246 Data Reduction and Error.. -

Heisenberg and the Nazi Atomic Bomb Project, 1939-1945: a Study in German Culture

Heisenberg and the Nazi Atomic Bomb Project http://content.cdlib.org/xtf/view?docId=ft838nb56t&chunk.id=0&doc.v... Preferred Citation: Rose, Paul Lawrence. Heisenberg and the Nazi Atomic Bomb Project, 1939-1945: A Study in German Culture. Berkeley: University of California Press, c1998 1998. http://ark.cdlib.org/ark:/13030/ft838nb56t/ Heisenberg and the Nazi Atomic Bomb Project A Study in German Culture Paul Lawrence Rose UNIVERSITY OF CALIFORNIA PRESS Berkeley · Los Angeles · Oxford © 1998 The Regents of the University of California In affectionate memory of Brian Dalton (1924–1996), Scholar, gentleman, leader, friend And in honor of my father's 80th birthday Preferred Citation: Rose, Paul Lawrence. Heisenberg and the Nazi Atomic Bomb Project, 1939-1945: A Study in German Culture. Berkeley: University of California Press, c1998 1998. http://ark.cdlib.org/ark:/13030/ft838nb56t/ In affectionate memory of Brian Dalton (1924–1996), Scholar, gentleman, leader, friend And in honor of my father's 80th birthday ― ix ― ACKNOWLEDGMENTS For hospitality during various phases of work on this book I am grateful to Aryeh Dvoretzky, Director of the Institute of Advanced Studies of the Hebrew University of Jerusalem, whose invitation there allowed me to begin work on the book while on sabbatical leave from James Cook University of North Queensland, Australia, in 1983; and to those colleagues whose good offices made it possible for me to resume research on the subject while a visiting professor at York University and the University of Toronto, Canada, in 1990–92. Grants from the College of the Liberal Arts and the Institute for the Arts and Humanistic Studies of The Pennsylvania State University enabled me to complete the research and writing of the book. -

Physics, M.S. 1

Physics, M.S. 1 PHYSICS, M.S. DEPARTMENT OVERVIEW The Department of Physics has a strong tradition of graduate study and research in astrophysics; atomic, molecular, and optical physics; condensed matter physics; high energy and particle physics; plasma physics; quantum computing; and string theory. There are many facilities for carrying out world-class research (https://www.physics.wisc.edu/ research/areas/). We have a large professional staff: 45 full-time faculty (https://www.physics.wisc.edu/people/staff/) members, affiliated faculty members holding joint appointments with other departments, scientists, senior scientists, and postdocs. There are over 175 graduate students in the department who come from many countries around the world. More complete information on the graduate program, the faculty, and research groups is available at the department website (http:// www.physics.wisc.edu). Research specialties include: THEORETICAL PHYSICS Astrophysics; atomic, molecular, and optical physics; condensed matter physics; cosmology; elementary particle physics; nuclear physics; phenomenology; plasmas and fusion; quantum computing; statistical and thermal physics; string theory. EXPERIMENTAL PHYSICS Astrophysics; atomic, molecular, and optical physics; biophysics; condensed matter physics; cosmology; elementary particle physics; neutrino physics; experimental studies of superconductors; medical physics; nuclear physics; plasma physics; quantum computing; spectroscopy. M.S. DEGREES The department offers the master science degree in physics, with two named options: Research and Quantum Computing. The M.S. Physics-Research option (http://guide.wisc.edu/graduate/physics/ physics-ms/physics-research-ms/) is non-admitting, meaning it is only available to students pursuing their Ph.D. The M.S. Physics-Quantum Computing option (http://guide.wisc.edu/graduate/physics/physics-ms/ physics-quantum-computing-ms/) (MSPQC Program) is a professional master's program in an accelerated format designed to be completed in one calendar year.. -

Bruno Benedetto Rossi

Intellectuals Displaced from Fascist Italy Firenze University Press 2019- Bruno Benedetto Rossi Go to personal file When he was expelled from the Università di Padova in 1938, the professor Link to other connected Lives on the move: of experimental physics from the Arcetri school was 33 years old, with an extraordinary international reputation and contacts and experiments abroad, Vinicio Barocas Sergio De Benedetti and had just married. With his young wife Nora Lombroso, he emigrated at Laura Capon Fermi Enrico Fermi once: first to Copenhagen, then to Manchester, and in June 1939 to the Guglielmo Ferrero United States. where their children were born. America, from the outset, was Leo Ferrero Nina Ferrero Raditsa his goal. Correspondence with New York and London reveals from Cesare Lombroso Gina Lombroso Ferrero September 1938 almost a competition to be able to have «one of the giants» Nora Lombroso Rossi of physics in the 20th century, whom fascism was hounding for the so-called Giuseppe (Beppo) Occhialini defence of the race. He was reappointed at the Università di Palermo in 1974, Leo Pincherle Maurizio Pincherle at the age of 70, when he was by then retired from MIT. Giulio Racah Bogdan Raditsa (Radica) Gaetano Salvemini Education Bruno was the eldest of three sons of Rino Rossi (1876-1927), an engineer who worked on the electrification of Venice, and of Lina Minerbi, from Ferrara (1868-1967). After attending the Marco Polo high school in Venice, he enrolled at the Università di Padova, and then at Bologna, where he graduated on 28 December 1927,1 defending a thesis in experimental physics on imperfect contacts between metals. -

Experimental Physics I PHYS 3250/3251

Syllabus Experimental Physics I PHYS 3250/3251 Course Description: Introduction to experimental modern physics, measurement of fundamental constants, repetition of crucial experiments of modern physics (Stern Gerlach, Zeeman effect, photoelectric effect, etc.) Course Objectives: The objective of this course is introduce you to the proper methods for conducting controlled physics experiments, including the acquisition, analysis and physical interpretation of data. The course involves experiments which illustrate the principles of modern physics, e.g. the quantum nature of charge and energy, etc. Succinct, journal-style reports will be the primary output for each experiment, with at least one additional presentation (oral and/or poster) required. Text: An Introduction to Error Analysis, 2nd Edition, J.R. Taylor, ISBN 0-935702-75-X. Additional documentation for any assigned experiments will be supplied as needed. Learning Outcomes: At the conclusion of this course, students will be able to: 1) Assemble and document a relevant bibliography for a physics experiment. 2) Prepare a journal-style manuscript using scientific typesetting software. 3) Plan and conduct experimental measurements in physics while employing proper note-taking methods. 4) Calculate uncertainties for physical quantities derived from experimental measurements. Clemson Thinks2: What is Critical Thinking? This course is designed to be part of the Clemson Thinks2 (CT2) program. “Critical thinking is reasoned and reflective judgment applied to solving problems or making decisions about what to believe or what to do. Critical thinking gives reasoned consideration to defining and analyzing problems, identifying and evaluating options, inferring likely outcomes and probable consequences, and explaining the reasons, evidence, methods and standards used in making those analyses, inferences and evaluations. -

Modern Experimental Physics Introduction for Physics 401 Students

Modern Experimental Physics Introduction for Physics 401 students Spring 2014 Eugene V. Colla • Goals of the course • Experiments • Teamwork • Schedule and assignments • Your working mode Physics 401 Spring 2013 2 • Primary: Learn how to “do” research Each project is a mini-research effort How are experiments actually carried out Use of modern tools and modern analysis and data-recording techniques Learn how to document your work • Secondary: Learn some modern physics Many experiments were once Nobel-prize-worthy efforts They touch on important themes in the development of modern physics Some will provide the insight to understand advanced courses Some are just too new to be discussed in textbooks Physics 401 Spring 2013 3 Primary. Each project is a mini-research effort Step1. Preparing: • Sample preparation • Wiring the setup • Testing electronics Preparing the samples for ferroelectric measurements Courtesy of Emily Zarndt & Mike Skulski (F11) Standing waves resonances in Second Step2. Taking data: Sound experiment If problems – go back to Courtesy of Mae Hwee Teo and Step 1. Vernie Redmon (F11) Physics 401 Spring 2013 4 Primary. Each project is a mini-research effort Plot of coincidence rate for 22Na against the angle between detectors A and B. The fit is a Step3. Data Analysis Gaussian function centred at If data is “bad” or not 179.30° with a full width at half maximum (FWHM) of 14.75°. enough data point – go back to Step 2 Courtesy of Bi Ran and Thomas Woodroof Author#1 and Author#2 Step4. Writing report and preparing the talk Physics 401 Spring 2013 5 Primary. -

On the Shoulders of Giants: a Brief History of Physics in Göttingen

1 6 ON THE SHO UL DERS OF G I A NTS : A B RIEF HISTORY OF P HYSI C S IN G Ö TTIN G EN On the Shoulders of Giants: a brief History of Physics in Göttingen 18th and 19th centuries Georg Ch. Lichtenberg (1742-1799) may be considered the fore- under Emil Wiechert (1861-1928), where seismic methods for father of experimental physics in Göttingen. His lectures were the study of the Earth's interior were developed. An institute accompanied by many experiments with equipment which he for applied mathematics and mechanics under the joint direc- had bought privately. To the general public, he is better known torship of the mathematician Carl Runge (1856-1927) (Runge- for his thoughtful and witty aphorisms. Following Lichtenberg, Kutta method) and the pioneer of aerodynamics, or boundary the next physicist of world renown would be Wilhelm Weber layers, Ludwig Prandtl (1875-1953) complemented the range of (1804-1891), a student, coworker and colleague of the „prince institutions related to physics proper. In 1925, Prandtl became of mathematics“ C. F. Gauss, who not only excelled in electro- the director of a newly established Kaiser-Wilhelm-Institute dynamics but fought for his constitutional rights against the for Fluid Dynamics. king of Hannover (1830). After his re-installment as a profes- A new and well-equipped physics building opened at the end sor in 1849, the two Göttingen physics chairs , W. Weber and B. of 1905. After the turn to the 20th century, Walter Kaufmann Listing, approximately corresponded to chairs of experimen- (1871-1947) did precision measurements on the velocity depen- tal and mathematical physics. -

'Vague, but Exciting'

I n t e r n at I o n a l Jo u r n a l o f HI g H -en e r g y PH y s I c s CERN COURIERV o l u m e 49 nu m b e r 4 ma y 2009 ‘Vague, but exciting’ ACCELERATORS COSMIC RAYS COLLABORATIONS FFAGs enter the era of PAMELA finds a ATLAS makes a smooth applications p5 positron excess p12 changeover at the top p31 CCMay09Cover.indd 1 14/4/09 09:44:43 CONTENTS Covering current developments in high- energy physics and related fields worldwide CERN Courier is distributed to member-state governments, institutes and laboratories affiliated with CERN, and to their personnel. It is published monthly, except for January and August. The views expressed are not necessarily those of the CERN management. Editor Christine Sutton Editorial assistant Carolyn Lee CERN CERN, 1211 Geneva 23, Switzerland E-mail [email protected] Fax +41 (0) 22 785 0247 Web cerncourier.com Advisory board James Gillies, Rolf Landua and Maximilian Metzger COURIERo l u m e u m b e r a y Laboratory correspondents: V 49 N 4 m 2009 Argonne National Laboratory (US) Cosmas Zachos Brookhaven National Laboratory (US) P Yamin Cornell University (US) D G Cassel DESY Laboratory (Germany) Ilka Flegel, Ute Wilhelmsen EMFCSC (Italy) Anna Cavallini Enrico Fermi Centre (Italy) Guido Piragino Fermi National Accelerator Laboratory (US) Judy Jackson Forschungszentrum Jülich (Germany) Markus Buescher GSI Darmstadt (Germany) I Peter IHEP, Beijing (China) Tongzhou Xu IHEP, Serpukhov (Russia) Yu Ryabov INFN (Italy) Romeo Bassoli Jefferson Laboratory (US) Steven Corneliussen JINR Dubna (Russia) B Starchenko KEK National Laboratory (Japan) Youhei Morita Lawrence Berkeley Laboratory (US) Spencer Klein Seeing beam once again p6 Dirac was right about antimatter p15 A new side to Peter Higgs p36 Los Alamos National Laboratory (US) C Hoffmann NIKHEF Laboratory (Netherlands) Paul de Jong Novosibirsk Institute (Russia) S Eidelman News 5 NCSL (US) Geoff Koch Orsay Laboratory (France) Anne-Marie Lutz FFAGs enter the applications era.