Resampling Residuals on Phylogenetic Trees: Extended Results Peter J

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

-

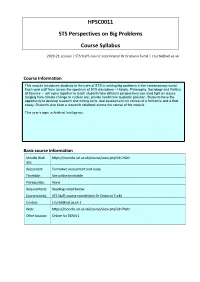

HPSC0011 STS Perspectives on Big Problems Course Syllabus

HPSC0011 STS Perspectives on Big Problems Course Syllabus 2020-21 session | STS Staff, course coordinator Dr Cristiano Turbil | [email protected] Course Information This module introduces students to the uses of STS in solving big problems in the contemporary world. Each year staff from across the spectrum of STS disciplines – History, Philosophy, Sociology and Politics of Science – will come together to teach students how different perspectives can shed light on issues ranging from climate change to nuclear war, private healthcare to plastic pollution. Students have the opportunity to develop research and writing skills, and assessment will consist of a formative and a final essay. Students also keep a research notebook across the course of the module This year’s topic is Artificial Intelligence. Basic course information Moodle Web https://moodle.ucl.ac.uk/course/view.php?id=7420 site: Assessment: Formative assessment and essay Timetable: See online timetable Prerequisites: None Required texts: Readings listed below Course tutor(s): STS Staff, course coordinator Dr Cristiano Turbil Contact: [email protected] | Web: https://moodle.ucl.ac.uk/course/view.php?id=7420 Office location: Online for TERM 1 Schedule UCL Date Topic Activity Wk 1 & 2 20 7 Oct Introduction and discussion of AI See Moodle for details as ‘Big Problem’ (Turbil) 3 21 14 Oct Mindful Hands (Werret) See Moodle for details 4 21 14 Oct Engineering Difference: the Birth See Moodle for details of A.I. and the Abolition Lie (Bulstrode) 5 22 21 Oct Measuring ‘Intelligence’ (Cain & See Moodle for details Turbil) 6 22 21 Oct Artificial consciousness and the See Moodle for details ‘race for supremacy’ in Erewhon’s ‘The Book of the Machines’ (1872) (Cain & Turbil) 7 & 8 23 28 Oct History of machine intelligence in See Moodle for details the twentieth century. -

Gender and the Quest in British Science Fiction Television CRITICAL EXPLORATIONS in SCIENCE FICTION and FANTASY (A Series Edited by Donald E

Gender and the Quest in British Science Fiction Television CRITICAL EXPLORATIONS IN SCIENCE FICTION AND FANTASY (a series edited by Donald E. Palumbo and C.W. Sullivan III) 1 Worlds Apart? Dualism and Transgression in Contemporary Female Dystopias (Dunja M. Mohr, 2005) 2 Tolkien and Shakespeare: Essays on Shared Themes and Language (ed. Janet Brennan Croft, 2007) 3 Culture, Identities and Technology in the Star Wars Films: Essays on the Two Trilogies (ed. Carl Silvio, Tony M. Vinci, 2007) 4 The Influence of Star Trek on Television, Film and Culture (ed. Lincoln Geraghty, 2008) 5 Hugo Gernsback and the Century of Science Fiction (Gary Westfahl, 2007) 6 One Earth, One People: The Mythopoeic Fantasy Series of Ursula K. Le Guin, Lloyd Alexander, Madeleine L’Engle and Orson Scott Card (Marek Oziewicz, 2008) 7 The Evolution of Tolkien’s Mythology: A Study of the History of Middle-earth (Elizabeth A. Whittingham, 2008) 8 H. Beam Piper: A Biography (John F. Carr, 2008) 9 Dreams and Nightmares: Science and Technology in Myth and Fiction (Mordecai Roshwald, 2008) 10 Lilith in a New Light: Essays on the George MacDonald Fantasy Novel (ed. Lucas H. Harriman, 2008) 11 Feminist Narrative and the Supernatural: The Function of Fantastic Devices in Seven Recent Novels (Katherine J. Weese, 2008) 12 The Science of Fiction and the Fiction of Science: Collected Essays on SF Storytelling and the Gnostic Imagination (Frank McConnell, ed. Gary Westfahl, 2009) 13 Kim Stanley Robinson Maps the Unimaginable: Critical Essays (ed. William J. Burling, 2009) 14 The Inter-Galactic Playground: A Critical Study of Children’s and Teens’ Science Fiction (Farah Mendlesohn, 2009) 15 Science Fiction from Québec: A Postcolonial Study (Amy J. -

The BG News October 28, 2004

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 10-28-2004 The BG News October 28, 2004 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation Bowling Green State University, "The BG News October 28, 2004" (2004). BG News (Student Newspaper). 7344. https://scholarworks.bgsu.edu/bg-news/7344 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. j^k M V Bowling Green State University THURSDAY October 28, 2004 Country looking forward ^^ 1 111 1 PM SHOWERS toIB MAC tourney; PAGE 9 KII I -i-WHN1 A \ \ \< ) HIGH63 LOW48 www.bgnews.com —U VJ ■■" VOLUME 99 ISSUE 56 Political artwork stolen from FAC Six student posters said she feels discouraged and BUSH KERRY fears that the artwork theft were taken last week may hinder students' chances from Fine Arts Center. TWO FLAVORS OF COLA of entering the contest. "It was disrespectful and a violation of their first amend- By lanell Kingsborough SENIOR REPORTER ment rights," Rusnak said. She feels that taking the If il was any other year, the artwork from the display artwork of students in the was one way of silencing the Intermediate Digital Imaging students' voices. course might have lasted longer "Whether people agreed on the wall than one or two with the student's messages days- BOIH BAO (OR vou or not, that did not give Ehem Six posters disappeared last Graphic Created the right to take matters into week from the Fine Arts Center. -

Read Ebook {PDF EPUB} the Man on Platform Five by Robert Llewellyn Robert Llewellyn

Read Ebook {PDF EPUB} The Man On Platform Five by Robert Llewellyn Robert Llewellyn. Robert Llewellyn is the actor who portrays Kryten from Series III to Series XII. He has also appeared as the presenter of Scrapheap Challenge which ran from 1998-2010 on Channel 4, the host of the web series Carpool which ran from 2009-2014, and as host of the YouTube channel Fully Charged which started in 2010 and still uploads today. Starring on Red Dwarf. Llewellyn's involvement with Red Dwarf came about as a result of his appearance at the Edinburgh Festival Fringe, performing in his comedy, Mammon, Robot Born Of Woman . He was invited to audition for the role of Kryten, by Paul Jackson, before joining the cast for Series III. The story is about a robot who, as he becomes more human, begins to behave increasingly badly. This was seen by Paul Jackson, producer of Red Dwarf , and he was invited to audition for the role of Kryten. Llewellyn joined the cast of Red Dwarf in 1989 at the start of Series III and continued in the role until the end of Series VIII in 1999. His skills as a physical performer encouraged Rob Grant and Doug Naylor to write him additional characters for the series, namely Jim Reaper ("The Last Day"), the Data Doctor ("Back in the Red II"), Human Kryten ("DNA"), Bongo ("Dimension Jump") and Able ("Beyond a Joke"). Llewellyn co- wrote the Red Dwarf Series VII episode "Beyond A Joke" with Doug Naylor. He was also the only British cast member originally to participate in the American version of Red Dwarf , though other actors such as Craig Charles and Chris Barrie were also approached to reprise their roles. -

+14 Days of Tv Listings Free

CINEMA VOD SPORTS TECH + 14 DAYS OF TV LISTINGS 1 JUNE 2015 ISSUE 2 TVGUIDE.CO.UK TVDAILY.COM Jurassic World Orange is the New Black Formula 1 Addictive Apps FREE 1 JUNE 2015 Issue 2 Contents TVGUIDE.CO.UK TVDAILY.COM EDITOR’S LETTER 4 Latest TV News 17 Food We are living in a The biggest news from the world of television. Your television dinners sorted with revolutionary age for inspiration from our favourite dramas. television. Not only is the way we watch television being challenged by the emergence of video on 18 Travel demand, but what we watch on television is Journey to the dizzying desert of Dorne or becoming increasingly take a trip to see the stunning setting of diverse and, thankfully, starting to catch up with Downton Abbey. real world demographics. With Orange Is The New Black back for another run on Netflix this month, we 19 Fashion decided to celebrate the 6 Top 100 WTF Steal some shadespiration from the arduous journey it’s taken to get to where we are in coolest sunglass-wearing dudes on TV. 2015 (p14). We still have a Moments (Part 2) long way to go, but we’re The final countdown of the most unbelievable getting there. Sports Susan Brett, Editor scenes ever to grace the small screen, 20 including the electrifying number one. All you need to know about the upcoming TVGuide.co.uk Formula 1 and MotoGP races. 104-08 Oxford Street, London, W1D 1LP [email protected] 8 Cinema CONTENT 22 Addictive Apps Editor: Susan Brett Everything you need to know about what’s Deputy Editor: Ally Russell A handy guide to all the best apps for Artistic Director: Francisco on at the Box Office right now. -

Illustrated Flora of East Texas Illustrated Flora of East Texas

ILLUSTRATED FLORA OF EAST TEXAS ILLUSTRATED FLORA OF EAST TEXAS IS PUBLISHED WITH THE SUPPORT OF: MAJOR BENEFACTORS: DAVID GIBSON AND WILL CRENSHAW DISCOVERY FUND U.S. FISH AND WILDLIFE FOUNDATION (NATIONAL PARK SERVICE, USDA FOREST SERVICE) TEXAS PARKS AND WILDLIFE DEPARTMENT SCOTT AND STUART GENTLING BENEFACTORS: NEW DOROTHEA L. LEONHARDT FOUNDATION (ANDREA C. HARKINS) TEMPLE-INLAND FOUNDATION SUMMERLEE FOUNDATION AMON G. CARTER FOUNDATION ROBERT J. O’KENNON PEG & BEN KEITH DORA & GORDON SYLVESTER DAVID & SUE NIVENS NATIVE PLANT SOCIETY OF TEXAS DAVID & MARGARET BAMBERGER GORDON MAY & KAREN WILLIAMSON JACOB & TERESE HERSHEY FOUNDATION INSTITUTIONAL SUPPORT: AUSTIN COLLEGE BOTANICAL RESEARCH INSTITUTE OF TEXAS SID RICHARDSON CAREER DEVELOPMENT FUND OF AUSTIN COLLEGE II OTHER CONTRIBUTORS: ALLDREDGE, LINDA & JACK HOLLEMAN, W.B. PETRUS, ELAINE J. BATTERBAE, SUSAN ROBERTS HOLT, JEAN & DUNCAN PRITCHETT, MARY H. BECK, NELL HUBER, MARY MAUD PRICE, DIANE BECKELMAN, SARA HUDSON, JIM & YONIE PRUESS, WARREN W. BENDER, LYNNE HULTMARK, GORDON & SARAH ROACH, ELIZABETH M. & ALLEN BIBB, NATHAN & BETTIE HUSTON, MELIA ROEBUCK, RICK & VICKI BOSWORTH, TONY JACOBS, BONNIE & LOUIS ROGNLIE, GLORIA & ERIC BOTTONE, LAURA BURKS JAMES, ROI & DEANNA ROUSH, LUCY BROWN, LARRY E. JEFFORDS, RUSSELL M. ROWE, BRIAN BRUSER, III, MR. & MRS. HENRY JOHN, SUE & PHIL ROZELL, JIMMY BURT, HELEN W. JONES, MARY LOU SANDLIN, MIKE CAMPBELL, KATHERINE & CHARLES KAHLE, GAIL SANDLIN, MR. & MRS. WILLIAM CARR, WILLIAM R. KARGES, JOANN SATTERWHITE, BEN CLARY, KAREN KEITH, ELIZABETH & ERIC SCHOENFELD, CARL COCHRAN, JOYCE LANEY, ELEANOR W. SCHULTZE, BETTY DAHLBERG, WALTER G. LAUGHLIN, DR. JAMES E. SCHULZE, PETER & HELEN DALLAS CHAPTER-NPSOT LECHE, BEVERLY SENNHAUSER, KELLY S. DAMEWOOD, LOGAN & ELEANOR LEWIS, PATRICIA SERLING, STEVEN DAMUTH, STEVEN LIGGIO, JOE SHANNON, LEILA HOUSEMAN DAVIS, ELLEN D. -

The Death of Tragedy: Examining Nietzsche's Return to the Greeks

Xavier University Exhibit Honors Bachelor of Arts Undergraduate 2018-4 The eD ath of Tragedy: Examining Nietzsche’s Return to the Greeks Brian R. Long Xavier University, Cincinnati, OH Follow this and additional works at: https://www.exhibit.xavier.edu/hab Part of the Ancient History, Greek and Roman through Late Antiquity Commons, Ancient Philosophy Commons, Classical Archaeology and Art History Commons, Classical Literature and Philology Commons, and the Other Classics Commons Recommended Citation Long, Brian R., "The eD ath of Tragedy: Examining Nietzsche’s Return to the Greeks" (2018). Honors Bachelor of Arts. 34. https://www.exhibit.xavier.edu/hab/34 This Capstone/Thesis is brought to you for free and open access by the Undergraduate at Exhibit. It has been accepted for inclusion in Honors Bachelor of Arts by an authorized administrator of Exhibit. For more information, please contact [email protected]. The Death of Tragedy: Examining Nietzsche’s Return to the Greeks Brian Long 1 Thesis Introduction In the study of philosophy, there are many dichotomies: Eastern philosophy versus Western philosophy, analytic versus continental, and so on. But none of these is as fundamental as the struggle between the ancients and the moderns. With the writings of Descartes, and perhaps even earlier with those of Machiavelli, there was a transition from “man in the world” to “man above the world.” Plato’s dialogues, Aristotle’s lecture notes, and the verses of the pre- Socratics are abandoned for having the wrong focus. No longer did philosophers seek to observe and question nature and man’s place in it; now the goal was mastery and possession of nature. -

21St-Century Narratives of World History

21st-Century Narratives of World History Global and Multidisciplinary Perspectives Edited by R. Charles Weller 21st-Century Narratives of World History [email protected] R. Charles Weller Editor 21st-Century Narratives of World History Global and Multidisciplinary Perspectives [email protected] Editor R. Charles Weller Department of History Washington State University Pullman, WA, USA and Center for Muslim-Christian Understanding Georgetown University Washington, DC, USA ISBN 978-3-319-62077-0 ISBN 978-3-319-62078-7 (eBook) DOI 10.1007/978-3-319-62078-7 Library of Congress Control Number: 2017945807 © The Editor(s) (if applicable) and The Author(s) 2017 This work is subject to copyright. All rights are solely and exclusively licensed by the Publisher, whether the whole or part of the material is concerned, specifcally the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microflms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specifc statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, express or implied, with respect to the material contained herein or for any errors or omissions that may have been made. -

The Logicist Manifesto: at Long Last Let Logic-Based Artificial Intelligence Become a Field Unto Itself∗

The Logicist Manifesto: At Long Last Let Logic-Based Artificial Intelligence Become a Field Unto Itself∗ Selmer Bringsjord Rensselaer AI & Reasoning (RAIR) Lab Department of Cognitive Science Department of Computer Science Rensselaer Polytechnic Institute (RPI) Troy NY 12180 USA [email protected] version 9.18.08 Contents 1 Introduction 1 2 Background 1 2.1 Logic-Based AI Encapsulated . .1 2.1.1 LAI is Ambitious . .3 2.1.2 LAI is Based on Logical Systems . .4 2.1.3 LAI is a Top-Down Enterprise . .5 2.2 Ignoring the \Strong" vs. \Weak" Distinction . .5 2.3 A Slice in the Day of a Life of a LAI Agent . .6 2.3.1 Knowledge Representation in Elementary Logic . .8 2.3.2 Deductive Reasoning . .8 2.3.3 A Note on Nonmonotonic Reasoning . 12 2.3.4 Beyond Elementary Logical Systems . 13 2.4 Examples of Logic-Based Cognitive Systems . 15 3 Factors Supporting Logicist AI as an Independent Field 15 3.1 History Supports the Divorce . 15 3.2 The Advent of the Web . 16 3.3 The Remarkable Effectiveness of Logic . 16 3.4 Logic Top to Bottom Now Possible . 17 3.5 Learning and Denial . 19 3.6 Logic is an Antidote to \Cheating" in AI . 19 3.7 Logic Our Only Hope Against the Dark AI Future . 21 4 Objections; Rebuttals 22 4.1 \But you are trapped in a fundamental dilemma: your position is either redundant, or false." . 22 4.2 \But you're neglecting probabilistic AI." . 23 4.3 \But we now know that the mind, contra logicists, is continuous, and hence dynamical, not logical, systems are superior." . -

“The Caine Mutiny Court-Martial”

Performance Dates: October 11, 12, 13, 14, 18, 19, 20, 21, 25, 26, 27, 28, 2012 Thurs Fri, Sat at 8pm…Sun at 2pm “THE CAINE MUTINY COURT-MARTIAL” Written by Herman Wouk Directed by Sherry Ingbritsen Under special arrangement with Samuel French, Inc. New Dawn Theater Company 3087 Main St. Duluth, GA 30096 678-887-5015 www.newdawntheatercompanycom "Caine Mutiny Court-Martial" Cast of Characters Name Character Sherry Ingbritsen ………….. Director / Tech Ramona Werner ……………. Stage Mgr / Orderly / Cigarette Girl Marla Krohn …………………. Backstage Asst / Stenographer / Officer Wife Paul Ingbritsen ……………... Set Build Sherry Ingbritsen, Lisa Cox, Karyn West, Uniforms Bruce Saarela ……………….. John Mistretta ………………. Lt. Stephen Maryk Jay Croft ……………………... Lt. Barney Greenwald Mike Yow …………………….. Lt. Cmdr John Challee John Laszlo …………………. Capt Blakely Eric Arvidson ……………….. Lt Cmdr Philip Francis Queeg Roger Ferrier ……………….. Lt Thomas Keefer David Allen ………………….. Signalman 3rd Cl. Junius Urban Steve Werner ……………….. Lt. J.G. Wiilis Seward Keith Charles Hannum ……………. Capt. Randolph Southard Joe Springer ………………… Dr. Forrest Lundeen Chuck Mason ……………….. Dr Allen Bird Paul Ingbritsen………………. Cmdr Kelvey Mike Stevens ………………... Lt Cmdr Pendleton (wk 1 & 3) Robert Seith …………………. Lt Norris (wk1 & 2) John Brackett ……………….. Cmdr MacDonald (wk 1 & 2) / Stilwell Voice Bruce Saarela ……………….. Lt Tomeck (wk 2 & 3) / Stilwell Voice Keith Burke ………………….. Lt Cmdr McVey (wk 1 & 3) Andy Hoeckele ……………… Cmdr McGuire (wk 2 & 3) Michelle Saarela ……………. Officers Wife A special thanks goes out to: Webmaster: Paul Ingbritsen Set Build: Paul Ingbritsen Stage Crew: Paul, Sherry & Jacob Ingbritsen Photographer: Cathy Seith Videographer: Robert Seith Programs: Beth Peters "The Caine Mutiny Court-Martial" Written by Herman Wouk Directed by: Sherry Ingbritsen Under special arrangement with Samuel French, Inc. -

Role of Deficient DNA Mismatch Repair Status In

Coordinating Center, NCCTG: N0147 CTSU: N0147 CALGB: N0147 ECOG: N0147 NCIC CTG: CRC.2 SWOG: N0147 North Central Cancer Treatment Group A Randomized Phase III Trial of Oxaliplatin (OXAL) Plus 5-Fluorouracil (5-FU)/Leucovorin (CF) with or without Cetuximab (C225) after Curative Resection for Patients with Stage III Colon Cancer Intergroup Study Chairs Steven R. Alberts, M.D. (Research Base)* Mayo Foundation 200 First Street, SW Rochester, MN 55905 Phone: (507) 284-1328 Fax: (507) 284-5280 E-mail: [email protected] Statisticians Daniel J. Sargent, Ph.D. Mayo Foundation 200 First Street SW Rochester, MN 55905 E-mail: [email protected] Michelle R. Mahoney, M.S. Fax: (507) 266-2477 Phone: (507) 284-8803 * Investigator having NCI responsibility for this protocol. DCTD Supplied Agent via Clinical Supplies Agreement (CSA): Cetuximab (NSC #714692) (Discontinued as of November 25, 2009) Commercial Supplied for patients pre-randomized following the implementation of Addendum 10: Oxaliplatin Document History (Effective Date) Document History (Effective Date) Activation February 10, 2004 NCCTG Addendum 7 January 4, 2008 NCCTG Update 1 February 10, 2004 NCCTG Addendum 8 May 12, 2008 NCCTG Addendum 1 March 29, 2004 NCCTG Addendum 9 August 18, 2008 NCCTG Addendum 2 September 1, 2004 NCCTG Update 3 August 18, 2008 NCCTG Addendum 3 June 1, 2005 NCCTG Addendum 10 May 8, 2009 NCCTG Addendum 4 June 1, 2005 NCCTG Addendum 11 December 18, 2009 NCCTG Addendum 5 August 1, 2005 NCCTG Addendum 12 September 22, 2010 NCCTG Addendum 6 August 15, 2007 NCCTG Addendum 13 June 1, 2011 NCCTG Update 2 August 15, 2007 NCI Version date: April 21, 2011 N0147 2 Addendum 13 Add As of November 25, 2009, all patient enrollments are complete. -

Filozofické Aspekty Technologií V Komediálním Sci-Fi Seriálu Červený Trpaslík

Masarykova univerzita Filozofická fakulta Ústav hudební vědy Teorie interaktivních médií Dominik Zaplatílek Bakalářská diplomová práce Filozofické aspekty technologií v komediálním sci-fi seriálu Červený trpaslík Vedoucí práce: PhDr. Martin Flašar, Ph.D. 2020 Prohlašuji, že jsem tuto práci vypracoval samostatně a použil jsem literárních a dalších pramenů a informací, které cituji a uvádím v seznamu použité literatury a zdrojů informací. V Brně dne ....................................... Dominik Zaplatílek Poděkování Tímto bych chtěl poděkovat panu PhDr. Martinu Flašarovi, Ph.D za odborné vedení této bakalářské práce a podnětné a cenné připomínky, které pomohly usměrnit tuto práci. Obsah Úvod ................................................................................................................................................. 5 1. Seriál Červený trpaslík ................................................................................................................... 6 2. Vyobrazené technologie ............................................................................................................... 7 2.1. Android Kryton ....................................................................................................................... 14 2.1.1. Teologická námitka ........................................................................................................ 15 2.1.2. Argument z vědomí ....................................................................................................... 18 2.1.3. Argument z