Ontology Pattern-Based Data Integration

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Collaborative Storytelling 2.0: a Framework for Studying Forum-Based Role-Playing Games

COLLABORATIVE STORYTELLING 2.0: A FRAMEWORK FOR STUDYING FORUM-BASED ROLE-PLAYING GAMES. Csenge Virág Zalka. A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY, May 2017. Committee: Kristine Blair, Committee Co-Chair; Lisa M. Gruenhagen, Graduate Faculty Representative; Radhika Gajjala, Committee Co-Chair; Jeremy Wallach. ABSTRACT. Kristine Blair and Radhika Gajjala, Committee Co-Chairs. Forum-based role-playing games are a rich, yet barely researched subset of text-based digital gaming. They are a form of storytelling where narratives are created through acts of play by multiple people in an online space, combining collaboration and improvisation. This dissertation acts as a pilot study for exploring these games in their full complexity at the intersection of play, narrative, and fandom. Building on theories of interactivity, digital storytelling, and fan fiction studies, it highlights forum games’ most unique features, and proves that they are in no way liminal or secondary to more popular forms of role-playing. The research is based on data drawn from a large sample of forums of various genres. One hundred sites were explored through close textual analysis in order to outline their most common features. The second phase of the project consisted of nine months of participant observation on select forums, in order to gain a better understanding of how their rules and practices influence the emergent narratives. Participants from various sites contributed their own interpretations of forum gaming through a series of ethnographic interviews. This not only gave the observed communities agency to voice their thoughts and explain their practices, but also spoke directly to the key research question of why people are drawn to forum gaming.

Validating RDF Data

Series ISSN: 2160-4711. Series Editors: Ying Ding, Indiana University; Paul Groth, Elsevier Labs. Validating RDF Data. Jose Emilio Labra Gayo, University of Oviedo; Eric Prud’hommeaux, W3C/MIT and Micelio; Iovka Boneva, University of Lille; Dimitris Kontokostas, University of Leipzig. This book describes two technologies for RDF validation: Shape Expressions (ShEx) and Shapes Constraint Language (SHACL), the rationales for their designs, a comparison of the two, and some example applications. RDF and Linked Data have broad applicability across many fields, from aircraft manufacturing to zoology. Requirements for detecting bad data differ across communities, fields, and tasks, but nearly all involve some form of data validation. This book introduces data validation and describes its practical use in day-to-day data exchange. The Semantic Web offers a bold, new take on how to organize, distribute, index, and share data. Using Web addresses (URIs) as identifiers for data elements enables the construction of distributed databases on a global scale. Like the Web, the Semantic Web is heralded as an information revolution, and also like the Web, it is encumbered by data quality issues. The quality of Semantic Web data is compromised by the lack of resources for data curation, for maintenance, and for developing globally applicable data models. At the enterprise scale, these problems have conventional solutions. Master data management provides an enterprise-wide vocabulary, while constraint languages capture and enforce data structures. Filling a need long recognized by Semantic Web users, shapes languages provide models and vocabularies for expressing such structural constraints.
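
The idea of a structural constraint on RDF data mentioned in the excerpt above can be illustrated with a minimal sketch. It is not ShEx or SHACL syntax and does not use any RDF library: triples are plain Python tuples, and the "shape" format (a mapping from predicate to minimum and maximum cardinality), the `ex:` names, and the sample data are all assumptions made for illustration only.

```python
from typing import Dict, List, Optional, Tuple

# A triple is (subject, predicate, object); all values are plain strings here.
Triple = Tuple[str, str, str]
# Hypothetical shape format: predicate -> (min_count, max_count or None for unbounded).
Shape = Dict[str, Tuple[int, Optional[int]]]


def validate_node(triples: List[Triple], node: str, shape: Shape) -> List[str]:
    """Return a list of cardinality violations for `node` against `shape`."""
    errors = []
    for predicate, (min_count, max_count) in shape.items():
        # Count how many triples use this predicate with `node` as subject.
        count = sum(1 for s, p, _ in triples if s == node and p == predicate)
        if count < min_count:
            errors.append(f"{node}: expected at least {min_count} {predicate}, found {count}")
        elif max_count is not None and count > max_count:
            errors.append(f"{node}: expected at most {max_count} {predicate}, found {count}")
    return errors


# Illustrative shape: exactly one name, any number of e-mail addresses.
PERSON_SHAPE: Shape = {"ex:name": (1, 1), "ex:email": (0, None)}

# Illustrative data: ex:bob has no ex:name, so it violates the shape.
DATA: List[Triple] = [
    ("ex:alice", "ex:name", "Alice"),
    ("ex:alice", "ex:email", "alice@example.org"),
    ("ex:bob", "ex:email", "bob@example.org"),
]
```

Real shapes languages also constrain datatypes, value sets, and references to other shapes; this sketch covers cardinality only.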

Semantic Integration and Knowledge Discovery for Environmental Research

Journal of Database Management, 18(1), 43-67, January-March 2007. Semantic Integration and Knowledge Discovery for Environmental Research. Zhiyuan Chen, University of Maryland, Baltimore County (UMBC), USA; Aryya Gangopadhyay, University of Maryland, Baltimore County (UMBC), USA; George Karabatis, University of Maryland, Baltimore County (UMBC), USA; Michael McGuire, University of Maryland, Baltimore County (UMBC), USA; Claire Welty, University of Maryland, Baltimore County (UMBC), USA. ABSTRACT: Environmental research and knowledge discovery both require extensive use of data stored in various sources and created in different ways for diverse purposes. We describe a new metadata approach to elicit semantic information from environmental data and implement semantics-based techniques to assist users in integrating, navigating, and mining multiple environmental data sources. Our system contains specifications of various environmental data sources and the relationships that are formed among them. User requests are augmented with semantically related data sources and automatically presented as a visual semantic network. In addition, we present a methodology for data navigation and pattern discovery using multi-resolution browsing and data mining. The data semantics are captured and utilized in terms of their patterns and trends at multiple levels of resolution. We present the efficacy of our methodology through experimental results. Keywords: environmental research, knowledge discovery and navigation, semantic integration, semantic networks,

Semantics Developer's Guide

MarkLogic Server: Semantic Graph Developer’s Guide. MarkLogic 10, May 2019. Last Revised: 10.0-8, October 2021. Copyright © 2021 MarkLogic Corporation. All rights reserved.
Table of Contents
1.0 Introduction to Semantic Graphs in MarkLogic
  1.1 Terminology
  1.2 Linked Open Data
  1.3 RDF Implementation in MarkLogic
    1.3.1 Using RDF in MarkLogic
      1.3.1.1 Storing RDF Triples in MarkLogic
      1.3.1.2 Querying Triples
    1.3.2 RDF Data Model
    1.3.3 Blank Node Identifiers
    1.3.4 RDF Datatypes
    1.3.5 IRIs and Prefixes
      1.3.5.1 IRIs

Semantic Integration Across Heterogeneous Databases Finding Data Correspondences Using Agglomerative Hierarchical Clustering and Artificial Neural Networks

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2018. Semantic Integration across Heterogeneous Databases: Finding Data Correspondences using Agglomerative Hierarchical Clustering and Artificial Neural Networks. MARK HOBRO. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE. Master in Computer Science. Date: April 11, 2018. Supervisor: John Folkesson. Examiner: Hedvig Kjellström. Swedish title: Semantisk integrering mellan heterogena databaser: Hitta datakopplingar med hjälp av hierarkisk klustring och artificiella neuronnät. School of Computer Science and Communication. Abstract: The process of data integration is an important part of the database field when it comes to database migrations and the merging of data. The research in the area has grown with the addition of machine learning approaches in the last 20 years. Due to the complexity of the research field, no go-to solutions have appeared. Instead, a wide variety of ways of enhancing database migrations have emerged. This thesis examines how well a learning-based solution performs for the semantic integration problem in database migrations. Two algorithms are implemented. One is based on information retrieval theory, with the goal of yielding a matching result that can be used as a benchmark for measuring the performance of the machine learning algorithm. The machine learning approach is based on grouping data with agglomerative hierarchical clustering and then training a neural network to recognize patterns in the data. This allows making predictions about potential data correspondences across two databases.

Bi-Directional Transformation Between Normalized Systems Elements and Domain Ontologies in OWL

Bi-directional Transformation between Normalized Systems Elements and Domain Ontologies in OWL. Marek Suchánek (1), Herwig Mannaert (2), Peter Uhnák (3) and Robert Pergl (1). (1) Faculty of Information Technology, Czech Technical University in Prague, Thákurova 9, Prague, Czech Republic. (2) Normalized Systems Institute, University of Antwerp, Prinsstraat 13, Antwerp, Belgium. (3) NSX bvba, Wetenschapspark Universiteit Antwerpen, Galileilaan 15, 2845 Niel, Belgium. Keywords: Ontology, Normalized Systems, Transformation, Model-driven Development, Ontology Engineering, Software Modelling. Abstract: Knowledge representation in OWL ontologies gained a lot of popularity with the development of Big Data, Artificial Intelligence, Semantic Web, and Linked Open Data. OWL ontologies are very versatile, and there are many tools for analysis, design, documentation, and mapping. They can capture concepts and categories, their properties and relations. Normalized Systems (NS) provide a way of code generation from a model of so-called NS Elements resulting in an information system with proven evolvability. The model used in NS contains domain-specific knowledge that can be represented in an OWL ontology. This work clarifies the potential advantages of having OWL representation of the NS model, discusses the design of a bi-directional transformation between NS models and domain ontologies in OWL, and describes its implementation. It shows how the resulting ontology enables further work on the analytical level and leverages the system design. Moreover, due to the fact that NS metamodel is metacircular, the transformation can generate ontology of NS metamodel itself. It is expected that the results of this work will help with the design of larger real-world applications as well as the metamodel and that the transformation tool will be further extended with additional features which we proposed.

15 Years of Semantic Web: an Incomplete Survey

Künstl Intell (2016) 30:117–130. DOI 10.1007/s13218-016-0424-1. TECHNICAL CONTRIBUTION. 15 Years of Semantic Web: An Incomplete Survey. Birte Glimm • Heiner Stuckenschmidt. Received: 7 November 2015 / Accepted: 5 January 2016 / Published online: 23 January 2016. © Springer-Verlag Berlin Heidelberg 2016.
Abstract: It has been 15 years since the first publications proposed the use of ontologies as a basis for defining information semantics on the Web starting what today is known as the Semantic Web Research Community. This work undoubtedly had a significant influence on AI as a field and in particular the knowledge representation and Reasoning Community that quickly identified new challenges and opportunities in using Description Logics in a practical setting. In this survey article, we will try to give an overview of the developments the field has gone through in these 15 years. We will look at three different aspects: the evolution of Semantic Web Language Standards, the evolution of central topics in the Semantic Web Community and the evolution of the research methodology.
... today’s Web: Uniform Resource Identifiers (URIs) for assigning unique identifiers to resources, the HyperText Markup Language (HTML) for specifying the formatting of Web pages, and the Hypertext Transfer Protocol (HTTP) that allows for the retrieval of linked resources from across the Web. Typically, the markup of standard Web pages describes only the formatting and, hence, Web pages and the navigation between them using hyperlinks is targeted towards human users (cf. Fig. 1). In 2001, Tim Berners-Lee, James Hendler, and Ora Lassila describe their vision for a Semantic Web [5]: The Semantic Web is not a separate Web but an extension of the current one, in which information is

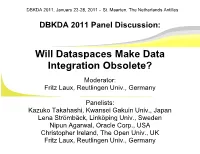

Will Dataspaces Make Data Integration Obsolete?

DBKDA 2011, January 23-28, 2011 – St. Maarten, The Netherlands Antilles. DBKDA 2011 Panel Discussion: Will Dataspaces Make Data Integration Obsolete? Moderator: Fritz Laux, Reutlingen Univ., Germany. Panelists: Kazuko Takahashi, Kwansei Gakuin Univ., Japan; Lena Strömbäck, Linköping Univ., Sweden; Nipun Agarwal, Oracle Corp., USA; Christopher Ireland, The Open Univ., UK; Fritz Laux, Reutlingen Univ., Germany.
[Figure: The Dataspace Idea. Space of data management (scalable functionality and costs), with administrative proximity (near to far) plotted against semantic integration (low to high); regions include desktop search, Web search, DBMS, federated DBMS, scientific repository, enterprise portal, virtual organization, and dataspaces. Adapted from Franklin, Halevy, Maier, 2005.]
Dataspaces (DS) [Franklin, Halevy, Maier, 2005] is a new abstraction for Information Management
● DS are [paraphrasing and commenting Franklin, 2009]
– Inclusive
● Deal with all the data of interest, in whatever form => but semantics matters
● We need access to the metadata!
● Derive schema from instances?
● Discovering new data sources => The Münchhausen bootstrap problem? (Illustration: Theodor Hosemann, 1807-1875)
– Co-existence

Data Models for Home Services

PROCEEDING OF THE 13TH CONFERENCE OF FRUCT ASSOCIATION. Data Models for Home Services. Vadym Kramar, Markku Korhonen, Yury Sergeev. Oulu University of Applied Sciences, School of Engineering, Raahe, Finland. {vadym.kramar, markku.korhonen, yury.sergeev}@oamk.fi. Abstract: The widespread penetration of communication technologies allowing web access has enriched the conception of smart homes with new paradigms of home services. Modern home services range far beyond such notions as Home Automation or Use of Internet. The services expose their ubiquitous nature by being integrated into smart environments, and provisioned through a variety of end-user devices. Computational intelligence requires the use of knowledge technologies, and within a given domain, compliance with modern web architecture is essential. This is where Semantic Web technologies excel. This work presents an overview of important terms, vocabularies, and data models that may be utilised in data and knowledge engineering with respect to home services. Index Terms: Context, Data engineering, Data models, Knowledge engineering, Semantic Web, Smart homes, Ubiquitous computing. I. INTRODUCTION. In recent years, the use of Semantic Web technologies to build a giant information space has shown certain benefits. The rapid development of Web 3.0 and the use of its principles in web applications is the best evidence of such benefits. Traditional database design is still, and will continue to be, widely used in web applications. One of the most important reasons for that is the vast number of databases developed over the years and used in applications ranging from simple web services to enterprise portals. According to Forrester Research, though, the growing number of document or knowledge bases, such as NoSQL, is not hype anymore [1].

Learning to Match Ontologies on the Semantic Web

The VLDB Journal manuscript No. (will be inserted by the editor). Learning to Match Ontologies on the Semantic Web. AnHai Doan (1), Jayant Madhavan (2), Robin Dhamankar (1), Pedro Domingos (2), Alon Halevy (2). (1) Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA. (2) Department of Computer Science and Engineering, University of Washington, Seattle, WA 98195, USA. Received: date / Revised version: date.
Abstract: On the Semantic Web, data will inevitably come from many different ontologies, and information processing across ontologies is not possible without knowing the semantic mappings between them. Manually finding such mappings is tedious, error-prone, and clearly not possible at the Web scale. Hence, the development of tools to assist in the ontology mapping process is crucial to the success of the Semantic Web. We describe GLUE, a system that employs machine learning techniques to find such mappings. Given two ontologies, for each concept in one ontology GLUE finds the most similar concept in the other ontology. We give well-founded probabilistic definitions to several practical similarity measures, and show that GLUE can work with all of them.
... and much of the potential of the Web has so far remained untapped. In response, researchers have created the vision of the Semantic Web [BLHL01], where data has structure and ontologies describe the semantics of the data. When data is marked up using ontologies, softbots can better understand the semantics and therefore more intelligently locate and integrate data for a wide variety of tasks. The following example illustrates the vision of the Semantic Web. Example 1: Suppose you want to find out more about some-
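
The matching step described in the abstract above (for each concept in one ontology, find the most similar concept in the other) can be sketched under strong simplifying assumptions. The sketch below uses the Jaccard coefficient computed directly over instance sets as the similarity measure; it does not reproduce GLUE's machine learning components, and the function names and the dictionary layout for an ontology are hypothetical.

```python
def jaccard_similarity(instances_a, instances_b):
    """Jaccard coefficient of two instance collections: |A ∩ B| / |A ∪ B|."""
    a, b = set(instances_a), set(instances_b)
    union = a | b
    if not union:
        # Two empty concepts share nothing measurable; treat as dissimilar.
        return 0.0
    return len(a & b) / len(union)


def most_similar_concept(concept_instances, other_ontology):
    """Return the name of the concept in `other_ontology` (a dict mapping
    concept name -> collection of instances) that is most similar to the
    given concept's instances."""
    return max(
        other_ontology,
        key=lambda name: jaccard_similarity(concept_instances, other_ontology[name]),
    )
```

In practice the two ontologies rarely share instance identifiers, which is why a system of this kind has to estimate set overlap with learned classifiers rather than read it off directly as this sketch does.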

Integrating Fuzzy Logic in Ontologies

INTEGRATING FUZZY LOGIC IN ONTOLOGIES. Silvia Calegari and Davide Ciucci. Dipartimento di Informatica, Sistemistica e Comunicazione, Università degli Studi di Milano Bicocca, via Bicocca degli Arcimboldi 8, 20126 Milano, Italy. Keywords: Concept modifiers, fuzzy logics, fuzzy ontologies, membership modifiers, KAON, ontology editor.
Abstract: Ontologies have proved to be very useful in sharing concepts across applications in an unambiguous way. Nowadays, in ontology-based applications information is often vague and imprecise. This is a well-known problem especially for semantics-based applications, such as e-commerce, knowledge management, web portals, etc. In computer-aided reasoning, the predominant paradigm to manage vague knowledge is fuzzy set theory. This paper presents an enrichment of classical computational ontologies with fuzzy logic to create fuzzy ontologies. So, it is a step towards facing the nuances of natural languages with ontologies. Our proposal is developed in the KAON ontology editor, which allows ontology concepts to be handled in a high-level environment.
1 INTRODUCTION. An ontology is a formal conceptualization of a particular domain of interest shared among heterogeneous applications. It consists of entities, attributes, relationships and axioms to provide a common understanding of the real world (Lammari and Métais, 2004; ... A possible solution to handle uncertain data and, hence, to tackle these problems, is to incorporate fuzzy logic into ontologies. The aim of fuzzy set theory (Klir and Yuan, 1995) introduced by L. A. Zadeh (Zadeh, 1965) is to describe vague concepts through a generalized notion of set, according to which an object may belong to a certain degree (typically a real number from the interval [0,1]) to a set.
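
The notion of graded membership described above can be made concrete with a small sketch. A triangular membership function is one common choice and is an assumption here, as is modelling the modifier "very" by squaring the degree (the classical concentration operator); neither is taken from the paper, and the function names are hypothetical.

```python
def triangular(x, left, peak, right):
    """Degree in [0, 1] to which x belongs to a fuzzy set whose membership
    rises linearly from `left` to `peak` and falls linearly to `right`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def very(degree):
    """Concept modifier 'very', modelled here as squaring the membership degree."""
    return degree ** 2
```

For instance, with a fuzzy concept "warm" peaking at 30 and vanishing at 20 and 40, `triangular(25, 20, 30, 40)` gives a membership degree of 0.5, and applying `very` to that degree lowers it to 0.25.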

Semantic Integration in the IFF

Semantic Integration in the IFF. Robert E. Kent, Ontologos.
Abstract: The IEEE P1600.1 Standard Upper Ontology (SUO) project aims to specify an upper ontology that will provide a structure and a set of general concepts upon which domain ontologies could be constructed. The Information Flow Framework (IFF), which is being developed under the auspices of the SUO Working Group, represents the structural aspect of the SUO. The IFF is based on category theory. Semantic integration of object-level ontologies in the IFF is represented with its fusion construction.
... ogies, composing ontologies via fusions, noting dependencies between ontologies, declaring the use of other ontologies, etc. The IFF takes a building blocks approach towards the development of object-level ontological structure. This is a rather elaborate categorical approach, which uses insights and ideas from the theory of distributed logic known as information flow (Barwise and Seligman, 1997) and the theory of formal concept analysis (Ganter and Wille, 1999). The IFF represents metalogic, and as such operates at the structural level of ontologies. In the IFF, there is a precise boundary between the metalevel and the object level. The modular architecture of the IFF consists of metalevels, namespaces and meta-ontologies. There are three metalevels: top, upper and lower. This partition, which corresponds to the set-theoretic distinction between small (sets), large (classes) and generic collections, is permanent. Each metalevel services the level below by providing a language that is used to declare and axiomatize that level. The top metalevel services the upper metalevel, the upper