Semantic Integration and Analysis of Clinical Data

Total pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Semantic Integration and Knowledge Discovery for Environmental Research

Journal of Database Management, 18(1), 43-67, January-March 2007. Semantic Integration and Knowledge Discovery for Environmental Research. Zhiyuan Chen, Aryya Gangopadhyay, George Karabatis, Michael McGuire, and Claire Welty, University of Maryland, Baltimore County (UMBC), USA. ABSTRACT: Environmental research and knowledge discovery both require extensive use of data stored in various sources and created in different ways for diverse purposes. We describe a new metadata approach to elicit semantic information from environmental data and implement semantics-based techniques to assist users in integrating, navigating, and mining multiple environmental data sources. Our system contains specifications of various environmental data sources and the relationships that are formed among them. User requests are augmented with semantically related data sources and automatically presented as a visual semantic network. In addition, we present a methodology for data navigation and pattern discovery using multi-resolution browsing and data mining. The data semantics are captured and utilized in terms of their patterns and trends at multiple levels of resolution. We present the efficacy of our methodology through experimental results. Keywords: environmental research, knowledge discovery and navigation, semantic integration, semantic networks, ...

Semantics Developer's Guide

MarkLogic Server Semantic Graph Developer’s Guide. MarkLogic 10, May 2019. Last revised: 10.0-8, October 2021. Copyright © 2021 MarkLogic Corporation. All rights reserved. Table of Contents: 1.0 Introduction to Semantic Graphs in MarkLogic; 1.1 Terminology; 1.2 Linked Open Data; 1.3 RDF Implementation in MarkLogic; 1.3.1 Using RDF in MarkLogic; 1.3.1.1 Storing RDF Triples in MarkLogic; 1.3.1.2 Querying Triples; 1.3.2 RDF Data Model; 1.3.3 Blank Node Identifiers; 1.3.4 RDF Datatypes; 1.3.5 IRIs and Prefixes; 1.3.5.1 IRIs; ...

RDFa in XHTML: Syntax and Processing

RDFa in XHTML: Syntax and Processing. A collection of attributes and processing rules for extending XHTML to support RDF. W3C Recommendation 14 October 2008. This version: http://www.w3.org/TR/2008/REC-rdfa-syntax-20081014 Latest version: http://www.w3.org/TR/rdfa-syntax Previous version: http://www.w3.org/TR/2008/PR-rdfa-syntax-20080904 Diff from previous version: rdfa-syntax-diff.html Editors: Ben Adida, Creative Commons; Mark Birbeck, webBackplane; Shane McCarron, Applied Testing and Technology, Inc.; Steven Pemberton, CWI. Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. The English version of this specification is the only normative version. Non-normative translations may also be available. Copyright © 2007-2008 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply. Abstract: The current Web is primarily made up of an enormous number of documents that have been created using HTML. These documents contain significant amounts of structured data, which is largely unavailable to tools and applications. When publishers can express this data more completely, and when tools can read it, a new world of user functionality becomes available, letting users transfer structured data between applications and web sites, and allowing browsing applications to improve the user experience: an event on a web page can be directly imported into a user’s desktop calendar; a license on a document can be detected so that users can be informed of their rights automatically; a photo’s creator, camera setting information, resolution, location and topic can be published as easily as the original photo itself, enabling structured search and sharing.

Semantic Integration Across Heterogeneous Databases: Finding Data Correspondences Using Agglomerative Hierarchical Clustering and Artificial Neural Networks

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2018. Semantic Integration across Heterogeneous Databases: Finding Data Correspondences using Agglomerative Hierarchical Clustering and Artificial Neural Networks. MARK HOBRO. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE. Master in Computer Science. Date: April 11, 2018. Supervisor: John Folkesson. Examiner: Hedvig Kjellström. Swedish title: Semantisk integrering mellan heterogena databaser: Hitta datakopplingar med hjälp av hierarkisk klustring och artificiella neuronnät. School of Computer Science and Communication. Abstract: The process of data integration is an important part of the database field when it comes to database migrations and the merging of data. The research in the area has grown with the addition of machine learning approaches in the last 20 years. Due to the complexity of the research field, no go-to solutions have appeared. Instead, a wide variety of ways of enhancing database migrations have emerged. This thesis examines how well a learning-based solution performs for the semantic integration problem in database migrations. Two algorithms are implemented. The first is based on information retrieval theory, with the goal of yielding a matching result that can be used as a benchmark for measuring the performance of the machine learning algorithm. The machine learning approach is based on grouping data with agglomerative hierarchical clustering and then training a neural network to recognize patterns in the data. This allows making predictions about potential data correspondences across two databases.

An Introduction to RDF

An Introduction to RDF. Manolis Koubarakis, Knowledge Technologies. Acknowledgement: This presentation is based on the excellent RDF primer by the W3C available at http://www.w3.org/TR/rdf-primer/ and http://www.w3.org/2007/02/turtle/primer/ . Much of the material in this presentation is verbatim from the above Web site. Presentation Outline: basic concepts of RDF; serialization of RDF graphs (XML/RDF and Turtle); other features of RDF (containers, collections and reification). What is RDF? The Resource Description Framework (RDF) is a data model for representing information (especially metadata) about resources in the Web. RDF can also be used to represent information about things that can be identified on the Web, even when they cannot be directly retrieved on the Web (e.g., a book or a person). RDF is intended for situations in which information about Web resources needs to be processed by applications, rather than being only displayed to people. Some History: RDF draws upon ideas from knowledge representation, artificial intelligence, and data management, including semantic networks, frames, conceptual graphs, logic-based knowledge representation, and relational databases. Shameless self-promotion: The closest to RDF, pre-Web knowledge representation language is Telos: John Mylopoulos, Alexander Borgida, Matthias Jarke, Manolis Koubarakis: Telos: Representing Knowledge About Information Systems. ACM Trans. Inf. Syst. 8(4): 325-362 (1990). [Slide: The Semantic Web “Layer Cake”] RDF Basics: RDF is based on the idea of identifying resources using Web identifiers and describing resources in terms of simple properties and property values.
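
The "RDF Basics" idea in the excerpt above (resources named by Web identifiers and described through simple properties and property values) can be illustrated with a small sketch. It uses plain Python tuples rather than any RDF library, and the `http://example.org/` identifiers, the `Triple` type, and the `describe` helper are hypothetical names chosen for illustration; none of them come from the slides.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One RDF-style statement: a resource, a property, and a value."""
    subject: str
    predicate: str
    obj: str


# Hypothetical namespace; any Web identifier scheme would do.
EX = "http://example.org/"

# A tiny graph: a set of statements about two resources.
graph = {
    Triple(EX + "book/1", EX + "terms/title", "An Introduction to RDF"),
    Triple(EX + "book/1", EX + "terms/creator", EX + "person/1"),
    Triple(EX + "person/1", EX + "terms/name", "A. Author"),
}


def describe(statements, subject):
    """Collect the property/value pairs stated about one resource."""
    return {t.predicate: t.obj for t in statements if t.subject == subject}


book = describe(graph, EX + "book/1")
```

Here `book` maps each property identifier of the book to its value. The value of `terms/creator` is itself an identifier, so `describe(graph, book[EX + "terms/creator"])` follows the link to the statements about that person.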

On Blank Nodes

On Blank Nodes. Alejandro Mallea (1), Marcelo Arenas (1), Aidan Hogan (2), and Axel Polleres (2, 3). (1) Department of Computer Science, Pontificia Universidad Católica de Chile, Chile. Email: {aemallea,marenas}@ing.puc.cl (2) Digital Enterprise Research Institute, National University of Ireland Galway, Ireland. Email: {aidan.hogan,axel.polleres}@deri.org (3) Siemens AG Österreich, Siemensstrasse 90, 1210 Vienna, Austria. Abstract. Blank nodes are defined in RDF as ‘existential variables’ in the same way that has been used before in mathematical logic. However, evidence suggests that actual usage of RDF does not follow this definition. In this paper we thoroughly cover the issue of blank nodes, from incomplete information in database theory, over different treatments of blank nodes across the W3C stack of RDF-related standards, to empirical analysis of RDF data publicly available on the Web. We then summarize alternative approaches to the problem, weighing up advantages and disadvantages, also discussing proposals for Skolemization. 1 Introduction. The Resource Description Framework (RDF) is a W3C standard for representing information on the Web using a common data model [18]. Although adoption of RDF is growing (quite) fast [4, § 3], one of its core features, blank nodes, has been sometimes misunderstood, sometimes misinterpreted, and sometimes ignored by implementers, other standards, and the general Semantic Web community. This lack of consistency between the standard and its actual uses calls for attention. The standard semantics for blank nodes interprets them as existential variables, denoting the existence of some unnamed resource. These semantics make even simple entailment checking intractable. RDF and RDFS entailment are based on simple entailment, and are also intractable due to blank nodes [14].

Will Dataspaces Make Data Integration Obsolete?

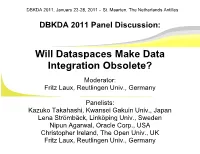

DBKDA 2011, January 23-28, 2011 – St. Maarten, The Netherlands Antilles. DBKDA 2011 Panel Discussion: Will Dataspaces Make Data Integration Obsolete? Moderator: Fritz Laux, Reutlingen Univ., Germany. Panelists: Kazuko Takahashi, Kwansei Gakuin Univ., Japan; Lena Strömbäck, Linköping Univ., Sweden; Nipun Agarwal, Oracle Corp., USA; Christopher Ireland, The Open Univ., UK; Fritz Laux, Reutlingen Univ., Germany. [Figure: The Dataspace Idea, space of data management. Labels include Functionality, Admin. Proximity (far/near), Semantic Integration (high/low), and Time and Cost; adapted from [Franklin, Halevy, Maier, 2005].] Dataspaces (DS) [Franklin, Halevy, Maier, 2005] is a new abstraction for Information Management. DS are [paraphrasing and commenting Franklin, 2009]: Inclusive: deal with all the data of interest, in whatever form => but semantics matters; we need access to the metadata! Derive schema from instances? Discovering new data sources => the Münchhausen bootstrap problem? (Image: Theodor Hosemann (1807-1875).) Co-existence

Learning to Match Ontologies on the Semantic Web

The VLDB Journal manuscript No. (will be inserted by the editor). Learning to Match Ontologies on the Semantic Web. AnHai Doan (1), Jayant Madhavan (2), Robin Dhamankar (1), Pedro Domingos (2), Alon Halevy (2). (1) Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA. (2) Department of Computer Science and Engineering, University of Washington, Seattle, WA 98195, USA. Received: date / Revised version: date. Abstract: On the Semantic Web, data will inevitably come from many different ontologies, and information processing across ontologies is not possible without knowing the semantic mappings between them. Manually finding such mappings is tedious, error-prone, and clearly not possible at the Web scale. Hence, the development of tools to assist in the ontology mapping process is crucial to the success of the Semantic Web. We describe GLUE, a system that employs machine learning techniques to find such mappings. Given two ontologies, for each concept in one ontology GLUE finds the most similar concept in the other ontology. We give well-founded probabilistic definitions to several practical similarity measures, and show that GLUE can work with all of them. [...] and much of the potential of the Web has so far remained untapped. In response, researchers have created the vision of the Semantic Web [BLHL01], where data has structure and ontologies describe the semantics of the data. When data is marked up using ontologies, softbots can better understand the semantics and therefore more intelligently locate and integrate data for a wide variety of tasks. The following example illustrates the vision of the Semantic Web. Example 1: Suppose you want to find out more about some-

Semantic Integration in the IFF

Semantic Integration in the IFF. Robert E. Kent, Ontologos. Abstract: The IEEE P1600.1 Standard Upper Ontology (SUO) project aims to specify an upper ontology that will provide a structure and a set of general concepts upon which domain ontologies could be constructed. The Information Flow Framework (IFF), which is being developed under the auspices of the SUO Working Group, represents the structural aspect of the SUO. The IFF is based on category theory. Semantic integration of object-level ontologies in the IFF is represented with its fusion construction. [...]ogies, composing ontologies via fusions, noting dependencies between ontologies, declaring the use of other ontologies, etc. The IFF takes a building blocks approach towards the development of object-level ontological structure. This is a rather elaborate categorical approach, which uses insights and ideas from the theory of distributed logic known as information flow (Barwise and Seligman, 1997) and the theory of formal concept analysis (Ganter and Wille, 1999). The IFF represents metalogic, and as such operates at the structural level of ontologies. In the IFF, there is a precise boundary between the metalevel and the object level. The modular architecture of the IFF consists of metalevels, namespaces and meta-ontologies. There are three metalevels: top, upper and lower. This partition, which corresponds to the set-theoretic distinction between small (sets), large (classes) and generic collections, is permanent. Each metalevel services the level below by providing a language that is used to declare and axiomatize that level. The top metalevel services the upper metalevel, the upper

RDF—The Basis of the Semantic Web 3

CHAPTER 3. CHAPTER OUTLINE: Distributing Data across the Web; Merging Data from Multiple Sources; Namespaces, URIs, and Identity (Expressing URIs in print; Standard namespaces); Identifiers in the RDF Namespace; Higher-order Relationships; Alternatives for Serialization (N-Triples; Turtle; RDF/XML); ...

Rule-Based Intelligence on the Semantic Web: Implications for Military Capabilities

UNCLASSIFIED. Rule-Based Intelligence on the Semantic Web: Implications for Military Capabilities. Dr Paul Smart, Senior Research Fellow, School of Electronics and Computer Science, University of Southampton, Southampton SO17 1BJ, United Kingdom. 26th November 2007. Report Documentation Page. Report Title: Rule-Based Intelligence on the Semantic Web. Report Subtitle: Implications for Military Capabilities. Project Title: N/A. Number of Pages: 57. Version: 1.2. Date of Issue: 26/11/2007. Due Date: 22/11/2007. Performance Indicator: EZ~01~01~17. Number of References: 95. Reference Number: DT/Report/RuleIntel. Report Availability: APPROVED FOR PUBLIC RELEASE; LIMITED DISTRIBUTION. Abstract Availability: APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED. Authors: Paul Smart. Keywords: semantic web, ontologies, reasoning, decision support, rule languages, military. Primary Author Details: Dr Paul Smart, Senior Research Fellow, School of Electronics and Computer Science, University of Southampton, Southampton, UK SO17 1BJ; tel: +44 (0)23 8059 6669; fax: +44 (0)23 8059 2783. Abstract: Rules are a key element of the Semantic Web vision, promising to provide a foundation for reasoning capabilities that underpin the intelligent manipulation and exploitation of information content. Although ontologies provide the basis for some forms of reasoning, it is unlikely that ontologies, by themselves, will support the range of knowledge-based services that are likely to be required on the Semantic Web. As such, it is important to consider the contribution that rule-based systems can make to the realization of advanced machine intelligence on the Semantic Web. This report aims to review the current state-of-the-art with respect to semantic rule-based technologies.

Inscribing the Blockchain with Digital Signatures of Signed RDF Graphs. A Worked Example of a Thought Experiment

Inscribing the blockchain with digital signatures of signed RDF graphs. A worked example of a thought experiment. The thing is, ad hoc hash signatures on the blockchain carry no information linking the hash to the resource for which the hash is acting as a digital signature. The onus is on the inscriber to associate the hash with the resource via a separate communications channel, either by publishing the association directly or by making use of one of the third-party inscription services that also offer a resource persistence facility: you get to describe what is signed by the hash and they store the description in a database, usually for a fee, or you can upload the resource for storage, definitely for a fee. Riccardo Cassata has a couple of technical articles that expose more of the practical details: https://blog.eternitywall.it/2016/02/16/how-to-verify-notarization/ So what's published is a hexadecimal string indistinguishable from all the others which purportedly matches the hash of Riccardo's mugshot. But which? https://tineye.com/search/ed9f8022c9af413a350ec5758cda520937feab21 What’s needed is a means of creating an inscription that is not only a signature but also a resolvable reference to the resource for which it acts as a digital signature. It would be even better if it were possible to create a signature of structured information, such as that describing a social media post, especially if we could build on the W3C’s recent Activity Streams standard: https://www.w3.org/TR/activitystreams-core/ An example taken from the