What Software Test Approaches, Methods, and Techniques Are Actually Used in Software Industry?

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Effectiveness of Software Testing Techniques in Enterprise: a Case Study

MYKOLAS ROMERIS UNIVERSITY, BUSINESS AND MEDIA SCHOOL. BRIGITA JAZUKEVIČIŪTĖ (Business Informatics). EFFECTIVENESS OF SOFTWARE TESTING TECHNIQUES IN ENTERPRISE: A CASE STUDY. Master Thesis. Supervisor: Assoc. Prof. Andrej Vlasenko. Vilnius, 2016. CONTENTS: INTRODUCTION; 1. THE RELATIONSHIP BETWEEN SOFTWARE TESTING AND SOFTWARE QUALITY ASSURANCE; 1.1. Introduction to Software Quality Assurance; 1.2. The overview of Software testing fundamentals: Concepts, History, Main principles; 2. AN OVERVIEW OF SOFTWARE TESTING TECHNIQUES AND THEIR USE IN ENTERPRISES; 2.1. Testing techniques as code analysis; 2.1.1. Static testing; 2.1.2. Dynamic testing; 2.2. Test design based Techniques -

Microprocessor

MICROPROCESSOR REPORT: THE INSIDER’S GUIDE TO MICROPROCESSOR HARDWARE (www.MPRonline.com). EEMBC’S MULTIBENCH ARRIVES. CPU Benchmarks: Not Just For ‘Benchmarketing’ Any More. By Tom R. Halfhill {7/28/08-01}. Imagine a world without measurements or statistical comparisons. Baseball fans wouldn’t fail to notice that a .300 hitter is better than a .100 hitter. But would they welcome a trade that sends the .300 hitter to Cleveland for three .100 hitters? System designers and software developers face similar quandaries when making trade-offs with multicore processors. Even if a dual-core processor appears to be better than a single-core processor, how much better is it? Twice as good? Would a quad-core processor be four times better? Are more cores worth the additional cost, design complexity, power consumption, and programming difficulty? The Embedded Microprocessor Benchmark Consortium (EEMBC) wants to help answer those questions. EEMBC’s MultiBench 1.0 is a new benchmark suite for measuring the throughput of multiprocessor systems, including those built with multicore processors. EEMBC’s role has evolved over the years, and MultiBench is another step. Originally, EEMBC was conceived as an independent entity that would create benchmark suites and certify the scores for accuracy, allowing vendors and customers to make valid comparisons among embedded microprocessors. (See MPR 5/1/00-02, “EEMBC Releases First Benchmarks.”) EEMBC still serves that role. But, as it turns out, most EEMBC members don’t openly publish their scores. Instead, they disclose scores to prospective customers under an NDA or use the benchmarks for internal testing and analysis. Partly for this reason, MPR rarely cites EEMBC scores -

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S.Riaz Ahamed / International Journal of Engineering Science and Technology Vol.1(3), 2009, 119-128. STUDYING THE FEASIBILITY AND IMPORTANCE OF SOFTWARE TESTING: AN ANALYSIS. Dr. S.S.Riaz Ahamed, Principal, Sathak Institute of Technology, Ramanathapuram, India. Email: [email protected], [email protected] ABSTRACT Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection, and reliability estimation. The problem of applying software testing to defect detection is that testing can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure - something that requires exhaustively testing the code on all possible inputs. Software testing, depending on the testing method employed, can be implemented at any time in the development process. Keywords: verification and validation (V & V) 1 INTRODUCTION Testing is a set of activities that can be planned ahead and conducted systematically. The main objective of testing is to find errors by executing a program and to check whether the designed software meets the customer specification. Testing should fulfill the following criteria: • Testing should begin at the module level and work “outward” toward the integration of the entire computer-based system. -

Customer Success Story

Customer Success Story: Interesting Dilemma, Critical Solution. Lufthansa Cargo AG serves more than 500 destinations worldwide with passenger and cargo aircraft as well as trucking services. Lufthansa is one of the leaders in the international air cargo industry, and prides itself on high quality service. THE CHALLENGE: Lufthansa owns and operates a fleet of 19 MD-11F aircraft, and charters other freight-carrying planes. To continue its leadership in high quality air cargo services, Lufthansa [...] The purpose of Lufthansa Cargo AG’s SDB project was to provide consistent shipment data as an infrastructure for each phase of its shipping process. Consistent shipment data is a prerequisite for Lufthansa Cargo AG to efficiently and effectively plan and fulfill the transport of shipments. Without it, much is at stake. In instances of irregularities caused by inconsistent shipment data, they would experience additional costs due to extra handling efforts, additional work to correct accounting information, revenue loss, and poor feedback from customers. With such critical factors in mind, Lufthansa Cargo AG determined that a well-tested API was the best solution for its central shipment database. Lufthansa Cargo AG ordered the development of SDB from Lufthansa Systems. However, functional and load testing is performed at Lufthansa Cargo AG with a core team of six business analysts and technical architects, headed by Project Manager Michael Herrmann. Herrmann determined that he had an interesting dilemma: a need to develop central, stable, and optimal-performance services for different applications without affecting the various front ends that were already in place or currently under construction. Functional testing needed to be performed on services that were independent of any front ends, along with their related test -

ON SOFTWARE TESTING and SUBSUMING MUTANTS an Empirical Study

ON SOFTWARE TESTING AND SUBSUMING MUTANTS: An empirical study. Master Degree Project in Computer Science, Advanced level, 15 credits, Spring term 2014. András Márki. Supervisor: Birgitta Lindström. Examiner: Gunnar Mathiason. Course: DV736A WECOA13: Web Computing Magister. Summary: Mutation testing is a powerful but resource-intensive technique for asserting software quality. This report investigates two claims about one of the mutation operators on procedural logic, the relation operator replacement (ROR). The constrained ROR mutant operator is a type of constrained mutation, which aims to lower the number of mutants as a “do smarter” approach, making mutation testing more suitable for industrial use. The findings in the report show that the hypothesis on subsumption is rejected if mutants are to be detected on function return values. The second hypothesis, stating that a test case can only detect a single top-level mutant in a subsumption graph, is also rejected. The report presents a comprehensive overview of the domain of mutation testing, displays examples of masking behaviour previously not described in the field of mutation testing, and discusses the importance of the granularity at which the mutants should be detected during execution. The contribution is based on a literature survey and an experiment. The empirical findings as well as the implications are discussed in this master dissertation. Keywords: Software Testing, Mutation Testing, Mutant Subsumption, Relation Operator Replacement, ROR, Empirical Study, Strong Mutation, Weak Mutation -

Types of Software Testing

Types of Software Testing. Software testing is a way of assessing a software product to identify differences between given input and expected output, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You know what testing does, so there is no need to explain further. But are you aware of the types of testing? It is indeed a sea. Before we get to the types, let’s have a look at the standards that need to be maintained. Standards of Testing: All tests should meet the user requirements. Exhaustive testing isn’t possible, so the right amount of testing is chosen based on a risk assessment of the application. All tests to be conducted should be planned before they are executed. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program components. Start testing with small parts and extend it to larger components. Software testers know about the different sorts of software testing. In this article, we have included nearly all the types of software testing that testers, developers, and QA teams use most often in their everyday testing life. Let’s understand them! Black box Testing: Black box testing is a category of techniques that disregards the internal mechanism of the system and focuses on the output generated for any input and on the performance of the system. -

Acceptance Testing Tools - a Different Perspective on Managing Requirements

Acceptance Testing Tools - A different perspective on managing requirements. Wolfgang Emmerich, Professor of Distributed Computing, University College London, http://sse.cs.ucl.ac.uk. Learning Objectives: • Introduce the V-Model of quality assurance • Stress the importance of testing in terms of software engineering economics • Understand that acceptance tests are requirements specifications • Introduce acceptance and integration testing tools for Test Driven Development • Appreciate that automated acceptance tests are executable requirements specifications. V-Model in Distributed System Development [diagram]: Requirements / Acceptance Test; Software Architecture / Integration Test; Detailed Design / System Test; Code / Unit Test. See: B. Boehm, Guidelines for Verifying and Validating Software Requirements and Design Specifications. Euro IFIP, P. A. Samet (editor), North-Holland Publishing Company, IFIP, 1979. Traditional Software Development [diagram]: Requirements / Acceptance Test; Software Architecture / Integration Test; Detailed Design / System Test; Code / Unit Test. Test Driven Development of Distributed Systems [diagram]: Use Cases/User Stories and QoS Requirements / Acceptance Test; Software Architecture / Integration & System Test; Detailed Design / Unit Test; Code. These tests should be automated. Advantages of Test Driven Development: • Early definition of acceptance tests reveals incomplete requirements • Early formalization of requirements into automated acceptance tests unearths ambiguities • Flaws in distributed software architectures (there often are many!) are discovered early • Unit tests become precise specifications • Early resolution improves productivity (see next slide). Software Engineering Economics: See: B. Boehm, Software Engineering Economics. Prentice Hall, 1981. An Example: Consider an on-line car dealership. User Story: • I first select a locale to determine the language shown at the user interface. I then select the SUV I want to buy. -

Model-Based Fuzzing Using Symbolic Transition Systems

Model-Based Fuzzing Using Symbolic Transition Systems. Wouter Bohlken, [email protected] January 31, 2021, 48 pages. Academic supervisor: Dr. Ana-Maria Oprescu, [email protected] Daily supervisor: Dr. Machiel van der Bijl, [email protected] Host organisation: Axini, https://www.axini.com Universiteit van Amsterdam, Faculteit der Natuurwetenschappen, Wiskunde en Informatica, Master Software Engineering, http://www.software-engineering-amsterdam.nl Abstract: As software is getting more complex, the need for thorough testing increases at the same rate. Model-Based Testing (MBT) is a technique for thorough functional testing. Model-Based Testing uses a formal definition of a program and automatically extracts test cases. While MBT is useful for functional testing, non-functional security testing is not covered in this approach. Software vulnerabilities, when exploited, can lead to serious damage in industry. Finding flaws in software is a complicated, laborious, and expensive task; therefore, automated security testing is of high relevance. Fuzzing is one of the most popular and effective techniques for automatically detecting vulnerabilities in software. Many different fuzzing approaches have been developed in recent years. Research has shown that there is no single fuzzer that works best on all types of software, and different fuzzers should be used for different purposes. In this research, we conducted a systematic review of state-of-the-art fuzzers and composed a list of candidate fuzzers that can be combined with MBT. We present two approaches on how to combine these two techniques: offline and online. An offline approach fully utilizes an existing fuzzer and automatically extracts relevant information from a model, which is then used for fuzzing. -

Load Testing, Benchmarking, and Application Performance Management for the Web

Published in the 2002 Computer Measurement Group (CMG) Conference, Reno, NV, Dec. 2002. LOAD TESTING, BENCHMARKING, AND APPLICATION PERFORMANCE MANAGEMENT FOR THE WEB. Daniel A. Menascé, Department of Computer Science and E-center of E-Business, George Mason University, Fairfax, VA 22030-4444, [email protected] Web-based applications are becoming mission-critical for many organizations and their performance has to be closely watched. This paper discusses three important activities in this context: load testing, benchmarking, and application performance management. Best practices for each of these activities are identified. The paper also explains how basic performance results can be used to increase the efficiency of load testing procedures. 1. Introduction: Web-based applications are becoming mission-critical to most private and governmental organizations. The ever-increasing number of computers connected to the Internet and the fast growing number of Web-enabled wireless devices create incentives for companies to invest in Web-based infrastructures and the associated personnel to maintain them. By establishing a Web presence, a [...] infrastructure depends on the traffic it expects to see at its site. One needs to spend enough but no more than is required in the IT infrastructure. Besides, resources need to be spent where they will generate the most benefit. For example, one should not upgrade the Web servers if most of the delay is in the database server. So, in order to maximize the ROI, one needs to know when and how to upgrade the IT infrastructure. In other words, not spending at the right time and spending at the wrong place will reduce the cost-benefit of the investment. -

An Analysis of Current Guidance in the Certification of Airborne Software

An Analysis of Current Guidance in the Certification of Airborne Software by Ryan Erwin Berk. B.S., Mathematics, Massachusetts Institute of Technology, 2002; B.S., Management Science, Massachusetts Institute of Technology, 2002. Submitted to the System Design and Management Program in Partial Fulfillment of the Requirements for the Degree of Master of Science in Engineering and Management at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, June 2009. © 2009 Ryan Erwin Berk. All Rights Reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: Ryan Erwin Berk, System Design & Management Program, May 8, 2009. Certified by: Nancy Leveson, Thesis Supervisor, Professor of Aeronautics and Astronautics, Professor of Engineering Systems. Accepted by: Patrick Hale, Director, System Design and Management Program. ABSTRACT The use of software in commercial aviation has expanded over the last two decades, moving from commercial passenger transport down into single-engine piston aircraft. The most comprehensive and recent official guidance on software certification guidelines was approved in 1992 as DO-178B, before the widespread use of object-oriented design and complex aircraft systems integration in general aviation (GA). -

Overview of the SPEC Benchmarks

Chapter 9: Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation. “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October 1988 by Apollo, Hewlett-Packard, MIPS Computer Systems, and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective: Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks: Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

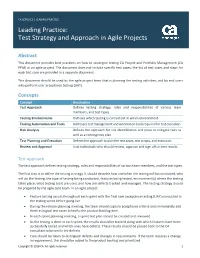

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE. Leading Practice: Test Strategy and Approach in Agile Projects. Abstract: This document provides best practices on how to strategize testing CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT).
Concepts (Concept: Description)
Test Approach: Defines testing strategy, roles and responsibilities of various team members, and test types.
Testing Environments: Outlines which testing is carried out in which environment.
Testing Automation and Tools: Addresses test management and automation tools required for test execution.
Risk Analysis: Defines the approach for risk identification and plans to mitigate risks as well as a contingency plan.
Test Planning and Execution: Defines the approach to plan the test cases, test scripts, and execution.
Review and Approval: Lists individuals who should review, approve and sign off on test results.
Test Approach: The test approach defines testing strategy, roles and responsibilities of various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, features being tested, environment(s) where the testing takes place, what testing tools are used, and how defects are tracked and managed. The testing strategy should be prepared by the agile core team.