A Comparison Between Packrat Parsing and Conventional Shift-Reduce Parsing on Real-World Grammars and Inputs

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

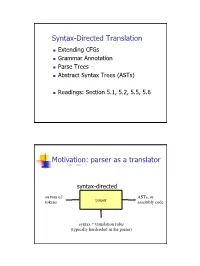

Syntax-Directed Translation, Parse Trees, Abstract Syntax Trees

Syntax-Directed Translation Extending CFGs Grammar Annotation Parse Trees Abstract Syntax Trees (ASTs) Readings: Section 5.1, 5.2, 5.5, 5.6 Motivation: parser as a translator syntax-directed translation stream of ASTs, or tokens parser assembly code syntax + translation rules (typically hardcoded in the parser) 1 Mechanism of syntax-directed translation syntax-directed translation is done by extending the CFG a translation rule is defined for each production given X Æ d A B c the translation of X is defined in terms of translation of nonterminals A, B values of attributes of terminals d, c constants To translate an input string: 1. Build the parse tree. 2. Working bottom-up • Use the translation rules to compute the translation of each nonterminal in the tree Result: the translation of the string is the translation of the parse tree's root nonterminal Why bottom up? a nonterminal's value may depend on the value of the symbols on the right-hand side, so translate a non-terminal node only after children translations are available 2 Example 1: arith expr to its value Syntax-directed translation: the CFG translation rules E Æ E + T E1.trans = E2.trans + T.trans E Æ T E.trans = T.trans T Æ T * F T1.trans = T2.trans * F.trans T Æ F T.trans = F.trans F Æ int F.trans = int.value F Æ ( E ) F.trans = E.trans Example 1 (cont) E (18) Input: 2 * (4 + 5) T (18) T (2) * F (9) F (2) ( E (9) ) int (2) E (4) * T (5) Annotated Parse Tree T (4) F (5) F (4) int (5) int (4) 3 Example 2: Compute type of expr E -> E + E if ((E2.trans == INT) and (E3.trans == INT) then E1.trans = INT else E1.trans = ERROR E -> E and E if ((E2.trans == BOOL) and (E3.trans == BOOL) then E1.trans = BOOL else E1.trans = ERROR E -> E == E if ((E2.trans == E3.trans) and (E2.trans != ERROR)) then E1.trans = BOOL else E1.trans = ERROR E -> true E.trans = BOOL E -> false E.trans = BOOL E -> int E.trans = INT E -> ( E ) E1.trans = E2.trans Example 2 (cont) Input: (2 + 2) == 4 1. -

An Efficient Implementation of the Head-Corner Parser

An Efficient Implementation of the Head-Corner Parser Gertjan van Noord" Rijksuniversiteit Groningen This paper describes an efficient and robust implementation of a bidirectional, head-driven parser for constraint-based grammars. This parser is developed for the OVIS system: a Dutch spoken dialogue system in which information about public transport can be obtained by telephone. After a review of the motivation for head-driven parsing strategies, and head-corner parsing in particular, a nondeterministic version of the head-corner parser is presented. A memorization technique is applied to obtain a fast parser. A goal-weakening technique is introduced, which greatly improves average case efficiency, both in terms of speed and space requirements. I argue in favor of such a memorization strategy with goal-weakening in comparison with ordinary chart parsers because such a strategy can be applied selectively and therefore enormously reduces the space requirements of the parser, while no practical loss in time-efficiency is observed. On the contrary, experiments are described in which head-corner and left-corner parsers imple- mented with selective memorization and goal weakening outperform "standard" chart parsers. The experiments include the grammar of the OV/S system and the Alvey NL Tools grammar. Head-corner parsing is a mix of bottom-up and top-down processing. Certain approaches to robust parsing require purely bottom-up processing. Therefore, it seems that head-corner parsing is unsuitable for such robust parsing techniques. However, it is shown how underspecification (which arises very naturally in a logic programming environment) can be used in the head-corner parser to allow such robust parsing techniques. -

Derivatives of Parsing Expression Grammars

Derivatives of Parsing Expression Grammars Aaron Moss Cheriton School of Computer Science University of Waterloo Waterloo, Ontario, Canada [email protected] This paper introduces a new derivative parsing algorithm for recognition of parsing expression gram- mars. Derivative parsing is shown to have a polynomial worst-case time bound, an improvement on the exponential bound of the recursive descent algorithm. This work also introduces asymptotic analysis based on inputs with a constant bound on both grammar nesting depth and number of back- tracking choices; derivative and recursive descent parsing are shown to run in linear time and constant space on this useful class of inputs, with both the theoretical bounds and the reasonability of the in- put class validated empirically. This common-case constant memory usage of derivative parsing is an improvement on the linear space required by the packrat algorithm. 1 Introduction Parsing expression grammars (PEGs) are a parsing formalism introduced by Ford [6]. Any LR(k) lan- guage can be represented as a PEG [7], but there are some non-context-free languages that may also be represented as PEGs (e.g. anbncn [7]). Unlike context-free grammars (CFGs), PEGs are unambiguous, admitting no more than one parse tree for any grammar and input. PEGs are a formalization of recursive descent parsers allowing limited backtracking and infinite lookahead; a string in the language of a PEG can be recognized in exponential time and linear space using a recursive descent algorithm, or linear time and space using the memoized packrat algorithm [6]. PEGs are formally defined and these algo- rithms outlined in Section 3. -

Modular Logic Grammars

MODULAR LOGIC GRAMMARS Michael C. McCord IBM Thomas J. Watson Research Center P. O. Box 218 Yorktown Heights, NY 10598 ABSTRACT scoping of quantifiers (and more generally focalizers, McCord, 1981) when the building of log- This report describes a logic grammar formalism, ical forms is too closely bonded to syntax. Another Modular Logic Grammars, exhibiting a high degree disadvantage is just a general result of lack of of modularity between syntax and semantics. There modularity: it can be harder to develop and un- is a syntax rule compiler (compiling into Prolog) derstand syntax rules when too much is going on in which takes care of the building of analysis them. structures and the interface to a clearly separated semantic interpretation component dealing with The logic grammars described in McCord (1982, scoping and the construction of logical forms. The 1981) were three-pass systems, where one of the main whole system can work in either a one-pass mode or points of the modularity was a good treatment of a two-pass mode. [n the one-pass mode, logical scoping. The first pass was the syntactic compo- forms are built directly during parsing through nent, written as a definite clause grammar, where interleaved calls to semantics, added automatically syntactic structures were explicitly built up in by the rule compiler. [n the two-pass mode, syn- the arguments of the non-terminals. Word sense tactic analysis trees are built automatically in selection and slot-filling were done in this first the first pass, and then given to the (one-pass) pass, so that the output analysis trees were actu- semantic component. -

Parsing 1. Grammars and Parsing 2. Top-Down and Bottom-Up Parsing 3

Syntax Parsing syntax: from the Greek syntaxis, meaning “setting out together or arrangement.” 1. Grammars and parsing Refers to the way words are arranged together. 2. Top-down and bottom-up parsing Why worry about syntax? 3. Chart parsers • The boy ate the frog. 4. Bottom-up chart parsing • The frog was eaten by the boy. 5. The Earley Algorithm • The frog that the boy ate died. • The boy whom the frog was eaten by died. Slide CS474–1 Slide CS474–2 Grammars and Parsing Need a grammar: a formal specification of the structures allowable in Syntactic Analysis the language. Key ideas: Need a parser: algorithm for assigning syntactic structure to an input • constituency: groups of words may behave as a single unit or phrase sentence. • grammatical relations: refer to the subject, object, indirect Sentence Parse Tree object, etc. Beavis ate the cat. S • subcategorization and dependencies: refer to certain kinds of relations between words and phrases, e.g. want can be followed by an NP VP infinitive, but find and work cannot. NAME V NP All can be modeled by various kinds of grammars that are based on ART N context-free grammars. Beavis ate the cat Slide CS474–3 Slide CS474–4 CFG example CFG’s are also called phrase-structure grammars. CFG’s Equivalent to Backus-Naur Form (BNF). A context free grammar consists of: 1. S → NP VP 5. NAME → Beavis 1. a set of non-terminal symbols N 2. VP → V NP 6. V → ate 2. a set of terminal symbols Σ (disjoint from N) 3. -

Abstract Syntax Trees & Top-Down Parsing

Abstract Syntax Trees & Top-Down Parsing Review of Parsing • Given a language L(G), a parser consumes a sequence of tokens s and produces a parse tree • Issues: – How do we recognize that s ∈ L(G) ? – A parse tree of s describes how s ∈ L(G) – Ambiguity: more than one parse tree (possible interpretation) for some string s – Error: no parse tree for some string s – How do we construct the parse tree? Compiler Design 1 (2011) 2 Abstract Syntax Trees • So far, a parser traces the derivation of a sequence of tokens • The rest of the compiler needs a structural representation of the program • Abstract syntax trees – Like parse trees but ignore some details – Abbreviated as AST Compiler Design 1 (2011) 3 Abstract Syntax Trees (Cont.) • Consider the grammar E → int | ( E ) | E + E • And the string 5 + (2 + 3) • After lexical analysis (a list of tokens) int5 ‘+’ ‘(‘ int2 ‘+’ int3 ‘)’ • During parsing we build a parse tree … Compiler Design 1 (2011) 4 Example of Parse Tree E • Traces the operation of the parser E + E • Captures the nesting structure • But too much info int5 ( E ) – Parentheses – Single-successor nodes + E E int 2 int3 Compiler Design 1 (2011) 5 Example of Abstract Syntax Tree PLUS PLUS 5 2 3 • Also captures the nesting structure • But abstracts from the concrete syntax a more compact and easier to use • An important data structure in a compiler Compiler Design 1 (2011) 6 Semantic Actions • This is what we’ll use to construct ASTs • Each grammar symbol may have attributes – An attribute is a property of a programming language construct -

The Evolution of the Unix Time-Sharing System*

The Evolution of the Unix Time-sharing System* Dennis M. Ritchie Bell Laboratories, Murray Hill, NJ, 07974 ABSTRACT This paper presents a brief history of the early development of the Unix operating system. It concentrates on the evolution of the file system, the process-control mechanism, and the idea of pipelined commands. Some attention is paid to social conditions during the development of the system. NOTE: *This paper was first presented at the Language Design and Programming Methodology conference at Sydney, Australia, September 1979. The conference proceedings were published as Lecture Notes in Computer Science #79: Language Design and Programming Methodology, Springer-Verlag, 1980. This rendition is based on a reprinted version appearing in AT&T Bell Laboratories Technical Journal 63 No. 6 Part 2, October 1984, pp. 1577-93. Introduction During the past few years, the Unix operating system has come into wide use, so wide that its very name has become a trademark of Bell Laboratories. Its important characteristics have become known to many people. It has suffered much rewriting and tinkering since the first publication describing it in 1974 [1], but few fundamental changes. However, Unix was born in 1969 not 1974, and the account of its development makes a little-known and perhaps instructive story. This paper presents a technical and social history of the evolution of the system. Origins For computer science at Bell Laboratories, the period 1968-1969 was somewhat unsettled. The main reason for this was the slow, though clearly inevitable, withdrawal of the Labs from the Multics project. To the Labs computing community as a whole, the problem was the increasing obviousness of the failure of Multics to deliver promptly any sort of usable system, let alone the panacea envisioned earlier. -

An Analysis of the D Programming Language

Journal of International Technology and Information Management Volume 16 Issue 3 Article 7 2007 An Analysis of the D Programming Language Sumanth Yenduri University of Mississippi- Long Beach Louise Perkins University of Southern Mississippi- Long Beach Md. Sarder University of Southern Mississippi- Long Beach Follow this and additional works at: https://scholarworks.lib.csusb.edu/jitim Part of the Business Intelligence Commons, E-Commerce Commons, Management Information Systems Commons, Management Sciences and Quantitative Methods Commons, Operational Research Commons, and the Technology and Innovation Commons Recommended Citation Yenduri, Sumanth; Perkins, Louise; and Sarder, Md. (2007) "An Analysis of the D Programming Language," Journal of International Technology and Information Management: Vol. 16 : Iss. 3 , Article 7. Available at: https://scholarworks.lib.csusb.edu/jitim/vol16/iss3/7 This Article is brought to you for free and open access by CSUSB ScholarWorks. It has been accepted for inclusion in Journal of International Technology and Information Management by an authorized editor of CSUSB ScholarWorks. For more information, please contact [email protected]. Analysis of Programming Language D Journal of International Technology and Information Management An Analysis of the D Programming Language Sumanth Yenduri Louise Perkins Md. Sarder University of Southern Mississippi - Long Beach ABSTRACT The C language and its derivatives have been some of the dominant higher-level languages used, and the maturity has stemmed several newer languages that, while still relatively young, possess the strength of decades of trials and experimentation with programming concepts. While C++ was a major step in the advancement from procedural to object-oriented programming (with a backbone of C), several problems existed that prompted the development of new languages. -

An Analysis of the D Programming Language Sumanth Yenduri University of Mississippi- Long Beach

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by CSUSB ScholarWorks Journal of International Technology and Information Management Volume 16 | Issue 3 Article 7 2007 An Analysis of the D Programming Language Sumanth Yenduri University of Mississippi- Long Beach Louise Perkins University of Southern Mississippi- Long Beach Md. Sarder University of Southern Mississippi- Long Beach Follow this and additional works at: http://scholarworks.lib.csusb.edu/jitim Part of the Business Intelligence Commons, E-Commerce Commons, Management Information Systems Commons, Management Sciences and Quantitative Methods Commons, Operational Research Commons, and the Technology and Innovation Commons Recommended Citation Yenduri, Sumanth; Perkins, Louise; and Sarder, Md. (2007) "An Analysis of the D Programming Language," Journal of International Technology and Information Management: Vol. 16: Iss. 3, Article 7. Available at: http://scholarworks.lib.csusb.edu/jitim/vol16/iss3/7 This Article is brought to you for free and open access by CSUSB ScholarWorks. It has been accepted for inclusion in Journal of International Technology and Information Management by an authorized administrator of CSUSB ScholarWorks. For more information, please contact [email protected]. Analysis of Programming Language D Journal of International Technology and Information Management An Analysis of the D Programming Language Sumanth Yenduri Louise Perkins Md. Sarder University of Southern Mississippi - Long Beach ABSTRACT The C language and its derivatives have been some of the dominant higher-level languages used, and the maturity has stemmed several newer languages that, while still relatively young, possess the strength of decades of trials and experimentation with programming concepts. -

Compiler Construction

UNIVERSITY OF CAMBRIDGE Compiler Construction An 18-lecture course Alan Mycroft Computer Laboratory, Cambridge University http://www.cl.cam.ac.uk/users/am/ Lent Term 2007 Compiler Construction 1 Lent Term 2007 Course Plan UNIVERSITY OF CAMBRIDGE Part A : intro/background Part B : a simple compiler for a simple language Part C : implementing harder things Compiler Construction 2 Lent Term 2007 A compiler UNIVERSITY OF CAMBRIDGE A compiler is a program which translates the source form of a program into a semantically equivalent target form. • Traditionally this was machine code or relocatable binary form, but nowadays the target form may be a virtual machine (e.g. JVM) or indeed another language such as C. • Can appear a very hard program to write. • How can one even start? • It’s just like juggling too many balls (picking instructions while determining whether this ‘+’ is part of ‘++’ or whether its right operand is just a variable or an expression ...). Compiler Construction 3 Lent Term 2007 How to even start? UNIVERSITY OF CAMBRIDGE “When finding it hard to juggle 4 balls at once, juggle them each in turn instead ...” character -token -parse -intermediate -target stream stream tree code code syn trans cg lex A multi-pass compiler does one ‘simple’ thing at once and passes its output to the next stage. These are pretty standard stages, and indeed language and (e.g. JVM) system design has co-evolved around them. Compiler Construction 4 Lent Term 2007 Compilers can be big and hard to understand UNIVERSITY OF CAMBRIDGE Compilers can be very large. In 2004 the Gnu Compiler Collection (GCC) was noted to “[consist] of about 2.1 million lines of code and has been in development for over 15 years”. -

The UNIX Time- Sharing System

1. Introduction There have been three versions of UNIX. The earliest version (circa 1969–70) ran on the Digital Equipment Cor- poration PDP-7 and -9 computers. The second version ran on the unprotected PDP-11/20 computer. This paper describes only the PDP-11/40 and /45 [l] system since it is The UNIX Time- more modern and many of the differences between it and older UNIX systems result from redesign of features found Sharing System to be deficient or lacking. Since PDP-11 UNIX became operational in February Dennis M. Ritchie and Ken Thompson 1971, about 40 installations have been put into service; they Bell Laboratories are generally smaller than the system described here. Most of them are engaged in applications such as the preparation and formatting of patent applications and other textual material, the collection and processing of trouble data from various switching machines within the Bell System, and recording and checking telephone service orders. Our own installation is used mainly for research in operating sys- tems, languages, computer networks, and other topics in computer science, and also for document preparation. UNIX is a general-purpose, multi-user, interactive Perhaps the most important achievement of UNIX is to operating system for the Digital Equipment Corpora- demonstrate that a powerful operating system for interac- tion PDP-11/40 and 11/45 computers. It offers a number tive use need not be expensive either in equipment or in of features seldom found even in larger operating sys- human effort: UNIX can run on hardware costing as little as tems, including: (1) a hierarchical file system incorpo- $40,000, and less than two man years were spent on the rating demountable volumes; (2) compatible file, device, main system software. -

Unix Programmer's Manual

There is no warranty of merchantability nor any warranty of fitness for a particu!ar purpose nor any other warranty, either expressed or imp!ied, a’s to the accuracy of the enclosed m~=:crials or a~ Io ~helr ,~.ui~::~::.j!it’/ for ~ny p~rficu~ar pur~.~o~e. ~".-~--, ....-.re: " n~ I T~ ~hone Laaorator es 8ssumg$ no rO, p::::nS,-,,.:~:y ~or their use by the recipient. Furln=,, [: ’ La:::.c:,:e?o:,os ~:’urnes no ob~ja~tjon ~o furnish 6ny a~o,~,,..n~e at ~ny k:nd v,,hetsoever, or to furnish any additional jnformstjcn or documenta’tjon. UNIX PROGRAMMER’S MANUAL F~ifth ~ K. Thompson D. M. Ritchie June, 1974 Copyright:.©d972, 1973, 1974 Bell Telephone:Laboratories, Incorporated Copyright © 1972, 1973, 1974 Bell Telephone Laboratories, Incorporated This manual was set by a Graphic Systems photo- typesetter driven by the troff formatting program operating under the UNIX system. The text of the manual was prepared using the ed text editor. PREFACE to the Fifth Edition . The number of UNIX installations is now above 50, and many more are expected. None of these has exactly the same complement of hardware or software. Therefore, at any particular installa- tion, it is quite possible that this manual will give inappropriate information. The authors are grateful to L. L. Cherry, L. A. Dimino, R. C. Haight, S. C. Johnson, B. W. Ker- nighan, M. E. Lesk, and E. N. Pinson for their contributions to the system software, and to L. E. McMahon for software and for his contributions to this manual.