Comparing Statistics from the Bibliometric Production Platforms Of

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

In-Text Citation's Frequencies-Based Recommendations of Relevant

In-text citation's frequencies-based recommendations of relevant research papers Abdul Shahid1, Muhammad Tanvir Afzal2, Abdullah Alharbi3, Hanan Aljuaid4 and Shaha Al-Otaibi5 1 Institute of Computing, Kohat University of Science & Technology, Kohat, Pakistan 2 Department of Computer Science, NAMAL Institute, Mianwali, Pakistan 3 Department of Information Technology, College of Computers and Information Technology, Taif University, Taif, Saudi Arabia 4 Computer Sciences Department, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University (PNU), Riyadh, Saudi Arabia 5 Information Systems Department, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia ABSTRACT From the past half of a century, identification of the relevant documents is deemed an active area of research due to the rapid increase of data on the web. The traditional models to retrieve relevant documents are based on bibliographic information such as Bibliographic coupling, Co-citations, and Direct citations. However, in the recent past, the scientific community has started to employ textual features to improve existing models' accuracy. In our previous study, we found that analysis of citations at a deep level (i.e., content level) can play a paramount role in finding more relevant documents than surface level (i.e., just bibliography details). We found that cited and citing papers have a high degree of relevancy when in-text citations frequency of the cited paper is more than five times in the citing paper's text. This paper is an extension of our previous study in terms of its evaluation of a comprehensive dataset. Moreover, the study results are also compared with other state-of-the-art approaches i.e., content, metadata, and bibliography. -

JOURNAL LIST Total Journals: 3751

SCIENCE CITATION INDEX - JOURNAL LIST Total journals: 3751 1. AAPG BULLETIN Monthly ISSN: 0149-1423 AMER ASSOC PETROLEUM GEOLOGIST, 1444 S BOULDER AVE, PO BOX 979, TULSA, USA, OK, 74119-3604 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Physical, Chemical & Earth Sciences 2. ABDOMINAL IMAGING Bimonthly ISSN: 0942-8925 SPRINGER, 233 SPRING ST, NEW YORK, USA, NY, 10013 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Clinical Medicine 4. BIOSIS Previews 3. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY Semiannual ISSN: 0065-7727 AMER CHEMICAL SOC, 1155 16TH ST, NW, WASHINGTON, USA, DC, 20036 1. Science Citation Index 2. Science Citation Index Expanded 3. BIOSIS Previews 4. BIOSIS Reviews Reports And Meetings 4. ACADEMIC EMERGENCY MEDICINE Monthly ISSN: 1069-6563 WILEY-BLACKWELL, 111 RIVER ST, HOBOKEN, USA, NJ, 07030-5774 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Clinical Medicine 5. ACADEMIC MEDICINE Monthly ISSN: 1040-2446 LIPPINCOTT WILLIAMS & WILKINS, 530 WALNUT ST, PHILADELPHIA, USA, PA, 19106-3621 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Clinical Medicine 6. ACCOUNTS OF CHEMICAL RESEARCH Monthly ISSN: 0001-4842 AMER CHEMICAL SOC, 1155 16TH ST, NW, WASHINGTON, USA, DC, 20036 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Life Sciences 4. Current Contents - Physical, Chemical & Earth Sciences 7. ACI MATERIALS JOURNAL Bimonthly ISSN: 0889-325X AMER CONCRETE INST, 38800 COUNTRY CLUB DR, FARMINGTON HILLS, USA, MI, 48331 1. Science Citation Index 2. -

Google Scholar, Web of Science, and Scopus

Journal of Informetrics, vol. 12, no. 4, pp. 1160-1177, 2018. https://doi.org/10.1016/J.JOI.2018.09.002 Google Scholar, Web of Science, and Scopus: a systematic comparison of citations in 252 subject categories Alberto Martín-Martín1, Enrique Orduna-Malea2, Mike Thelwall3, Emilio Delgado López-Cózar1 Version 1.6 March 12, 2019 Abstract Despite citation counts from Google Scholar (GS), Web of Science (WoS), and Scopus being widely consulted by researchers and sometimes used in research evaluations, there is no recent or systematic evidence about the differences between them. In response, this paper investigates 2,448,055 citations to 2,299 English-language highly-cited documents from 252 GS subject categories published in 2006, comparing GS, the WoS Core Collection, and Scopus. GS consistently found the largest percentage of citations across all areas (93%-96%), far ahead of Scopus (35%-77%) and WoS (27%-73%). GS found nearly all the WoS (95%) and Scopus (92%) citations. Most citations found only by GS were from non-journal sources (48%-65%), including theses, books, conference papers, and unpublished materials. Many were non-English (19%-38%), and they tended to be much less cited than citing sources that were also in Scopus or WoS. Despite the many unique GS citing sources, Spearman correlations between citation counts in GS and WoS or Scopus are high (0.78-0.99). They are lower in the Humanities, and lower between GS and WoS than between GS and Scopus. The results suggest that in all areas GS citation data is essentially a superset of WoS and Scopus, with substantial extra coverage. -
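The abstract above reports Spearman correlations between citation counts from different databases. As a rough illustration of what that statistic measures, here is a minimal pure-Python sketch that ranks two lists of counts (ties share the mean rank) and takes the Pearson correlation of the ranks. The function names and the sample counts are hypothetical and are not taken from the paper.

```python
from math import sqrt

def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Hypothetical citation counts for the same five documents in two databases.
gs_counts = [120, 45, 300, 45, 10]
wos_counts = [60, 20, 150, 25, 2]
print(round(spearman(gs_counts, wos_counts), 3))
```

Because only the ordering matters, one database can report far higher absolute counts than another and still correlate strongly with it, which fits the abstract's finding of high correlations despite very different coverage.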

The Journal Impact Factor Denominator Defining Citable (Counted) Items

COMMENTARIES The Journal Impact Factor Denominator Defining Citable (Counted) Items Marie E. McVeigh, MS Stephen J. Mann Over its 30-year history, the journal impact factor has been the subject of much discussion and debate.1 From its first release in 1975, bibliometricians and library scientists discussed its value and its vagaries. In the last decade, discussion has shifted to the way in which impact factor data are used. In an environment eager for objective measures of productivity, relevance, and research value, the impact factor has been applied broadly and indiscriminately.2,3 The impact factor has gone from being a measure of a journal’s citation influence in the broader literature to a surrogate that assesses the scholarly value of work published in that journal.

The items counted in the denominator of the impact factor are identifiable in the Web of Science database by having the index field document type set as “Article,” “Review,” or “Proceedings Paper” (a specialized subset of the article document type). These document types identify the scholarly contribution of the journal to the literature and are counted as “citable items” in the denominator of the impact factor. A journal accepted for coverage in the Thomson Reuters citation database6 is reviewed by experts who consider the bibliographic and bibliometric characteristics of all article types published by that journal (eg, items), which are covered by that journal in the context of other materials in the journal, the subject, and the database as a whole. This journal-specific analysis identifies the journal sections, subsections, or both that contain materials likely to -
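To make the role of the denominator concrete, here is a minimal sketch. It assumes the commonly cited two-year definition of the impact factor (citations received in one year to items from the previous two years, divided by the citable items published in those two years), which is background knowledge and not spelled out in the excerpt. The record layout and function names are hypothetical. Only the three document types named above are counted as citable.

```python
# Document types the excerpt says are counted in the denominator.
CITABLE_TYPES = {"Article", "Review", "Proceedings Paper"}

def count_citable(items):
    """Count items whose document type makes them 'citable items'."""
    return sum(1 for item in items if item["doc_type"] in CITABLE_TYPES)

def impact_factor(citations, items_prior_two_years):
    """Citations to the prior two years' items divided by citable items."""
    citable = count_citable(items_prior_two_years)
    if citable == 0:
        raise ValueError("no citable items in the two-year window")
    return citations / citable

# Hypothetical journal output for the two-year window.
items = [
    {"title": "A", "doc_type": "Article"},
    {"title": "B", "doc_type": "Article"},
    {"title": "C", "doc_type": "Review"},
    {"title": "D", "doc_type": "Editorial Material"},  # not counted
]
print(impact_factor(9, items))  # 9 citations / 3 citable items = 3.0
```

The editorial is excluded from the denominator, so classifying it as an article instead would lower the result from 3.0 to 2.25. This is why the definition of a citable item matters.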

Networks of Reader and Country Status: an Analysis of Mendeley Reader Statistics

Networks of reader and country status: an analysis of Mendeley reader statistics Robin Haunschild1, Lutz Bornmann2 and Loet Leydesdorff3 1 Information Retrieval Service, Max Planck Institute for Solid State Research, Stuttgart, Germany 2 Division for Science and Innovation Studies, Administrative Headquarters of the Max Planck Society, Munich, Germany 3 Amsterdam School of Communication Research (ASCoR), University of Amsterdam, Amsterdam, The Netherlands ABSTRACT The number of papers published in journals indexed by the Web of Science core collection is steadily increasing. In recent years, nearly two million new papers were published each year; somewhat more than one million papers when primary research papers are considered only (articles and reviews are the document types where primary research is usually reported or reviewed). However, who reads these papers? More precisely, which groups of researchers from which (self-assigned) scientific disciplines and countries are reading these papers? Is it possible to visualize readership patterns for certain countries, scientific disciplines, or academic status groups? One popular method to answer these questions is a network analysis. In this study, we analyze Mendeley readership data of a set of 1,133,224 articles and 64,960 reviews with publication year 2012 to generate three different networks: (1) The network based on disciplinary affiliations of Mendeley readers contains four groups: (i) biology, (ii) social sciences and humanities (including relevant computer sciences), (iii) bio-medical sciences, and (iv) natural sciences and engineering. In all four groups, the category with the addition “miscellaneous” prevails. (2) The network of co-readers in terms of professional status shows that a common interest in papers is mainly shared among PhD students, Master’s students, and postdocs. -

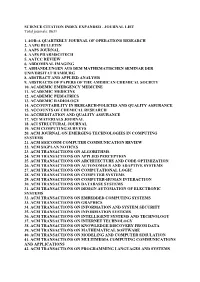

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total Journals: 8631

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total journals: 8631 1. 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH 2. AAPG BULLETIN 3. AAPS JOURNAL 4. AAPS PHARMSCITECH 5. AATCC REVIEW 6. ABDOMINAL IMAGING 7. ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG 8. ABSTRACT AND APPLIED ANALYSIS 9. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY 10. ACADEMIC EMERGENCY MEDICINE 11. ACADEMIC MEDICINE 12. ACADEMIC PEDIATRICS 13. ACADEMIC RADIOLOGY 14. ACCOUNTABILITY IN RESEARCH-POLICIES AND QUALITY ASSURANCE 15. ACCOUNTS OF CHEMICAL RESEARCH 16. ACCREDITATION AND QUALITY ASSURANCE 17. ACI MATERIALS JOURNAL 18. ACI STRUCTURAL JOURNAL 19. ACM COMPUTING SURVEYS 20. ACM JOURNAL ON EMERGING TECHNOLOGIES IN COMPUTING SYSTEMS 21. ACM SIGCOMM COMPUTER COMMUNICATION REVIEW 22. ACM SIGPLAN NOTICES 23. ACM TRANSACTIONS ON ALGORITHMS 24. ACM TRANSACTIONS ON APPLIED PERCEPTION 25. ACM TRANSACTIONS ON ARCHITECTURE AND CODE OPTIMIZATION 26. ACM TRANSACTIONS ON AUTONOMOUS AND ADAPTIVE SYSTEMS 27. ACM TRANSACTIONS ON COMPUTATIONAL LOGIC 28. ACM TRANSACTIONS ON COMPUTER SYSTEMS 29. ACM TRANSACTIONS ON COMPUTER-HUMAN INTERACTION 30. ACM TRANSACTIONS ON DATABASE SYSTEMS 31. ACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMS 32. ACM TRANSACTIONS ON EMBEDDED COMPUTING SYSTEMS 33. ACM TRANSACTIONS ON GRAPHICS 34. ACM TRANSACTIONS ON INFORMATION AND SYSTEM SECURITY 35. ACM TRANSACTIONS ON INFORMATION SYSTEMS 36. ACM TRANSACTIONS ON INTELLIGENT SYSTEMS AND TECHNOLOGY 37. ACM TRANSACTIONS ON INTERNET TECHNOLOGY 38. ACM TRANSACTIONS ON KNOWLEDGE DISCOVERY FROM DATA 39. ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE 40. ACM TRANSACTIONS ON MODELING AND COMPUTER SIMULATION 41. ACM TRANSACTIONS ON MULTIMEDIA COMPUTING COMMUNICATIONS AND APPLICATIONS 42. ACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMS 43. ACM TRANSACTIONS ON RECONFIGURABLE TECHNOLOGY AND SYSTEMS 44. -

Best Practice & Research: Clinical Endocrinology

BEST PRACTICE & RESEARCH: CLINICAL ENDOCRINOLOGY & METABOLISM AUTHOR INFORMATION PACK TABLE OF CONTENTS • Description • Impact Factor • Abstracting and Indexing • Editorial Board • Guide for Authors ISSN: 1521-690X DESCRIPTION Best Practice & Research Clinical Endocrinology & Metabolism is a topical serial publication integrating the results from the latest original research into practical, evidence-based review articles that seek to address the key clinical issues of diagnosis, treatment and patient management. Each issue follows a problem-orientated approach which focuses on the key questions to be addressed, clearly defining what is known and highlighting topics for future research. Management is described in practical terms so that it can be applied to the individual patient. The series is aimed at the physician either in practice or in training. In practical paperback format, each 200-page issue of Best Practice & Research Clinical Endocrinology & Metabolism provides a comprehensive review of clinical practice and thinking within one specific area of endocrinology and metabolism. Each issue, written by an international team of contributors and guest edited by a renowned expert, forms part of a continuous update of current clinical practice. • Attractive format and two-colour text layout • Six issues published annually • Highlighting the latest 'best practice' and 'clinical evidence' • Topic-based, problem-orientated approach • Recommendations on diagnosis, treatment and patient management The objective of the series is to provide the physician with the most up-to-date source of information in the field. IMPACT FACTOR 2020: 4.690 © Clarivate Analytics Journal Citation Reports 2021 AUTHOR INFORMATION PACK 23 Sep 2021 www.elsevier.com/locate/beem ABSTRACTING AND INDEXING -

Endocrinology & Metabolis

Eshragh Research Institute www.isi-isc.com List of ISI journals: endocrinology and metabolism SCIENCE CITATION INDEX - ENDOCRINOLOGY & METABOLISM - JOURNAL LIST 1. ACTA DIABETOLOGICA Bimonthly ISSN: 0940-5429 SPRINGER-VERLAG ITALIA SRL, VIA DECEMBRIO, 28, MILAN, ITALY, 20137 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Life Sciences 4. BIOSIS Previews 2. AMERICAN JOURNAL OF PHYSIOLOGY-ENDOCRINOLOGY AND METABOLISM Monthly ISSN: 0193-1849 AMER PHYSIOLOGICAL SOC, 9650 ROCKVILLE PIKE, BETHESDA, USA, MD, 20814 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Life Sciences 4. BIOSIS Previews 3. ANNALES D ENDOCRINOLOGIE Bimonthly ISSN: 0003-4266 MASSON EDITEUR, 21 STREET CAMILLE DESMOULINS, ISSY, MOULINEAUX CEDEX 9, FRANCE, 92789 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Life Sciences 4. BIOSIS Previews 4. ANNALS OF NUTRITION AND METABOLISM Bimonthly ISSN: 0250-6807 KARGER, ALLSCHWILERSTRASSE 10, BASEL, SWITZERLAND, CH-4009 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Agriculture, Biology & Environmental Sciences 4. Current Contents - Life Sciences 5. BIOSIS Previews 5. ANTIOXIDANTS & REDOX SIGNALING Biweekly ISSN: 1523-0864 MARY ANN LIEBERT, INC, 140 HUGUENOT STREET, 3RD FL, NEW ROCHELLE, USA, NY, 10801 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Life Sciences 4. BIOSIS Previews 6. BEST PRACTICE & RESEARCH CLINICAL ENDOCRINOLOGY & METABOLISM Bimonthly ISSN: 1521-690X ELSEVIER SCI LTD, THE BOULEVARD, LANGFORD LANE, KIDLINGTON, OXFORD, ENGLAND, OXON, OX5 1GB 1. Science Citation Index 2. Science Citation Index Expanded 3. Current Contents - Clinical Medicine 4. Current Contents - Life Sciences 7. -

Introduction to Scientometrics

INTRODUCTION TO SCIENTOMETRICS Farzaneh Aminpour, PhD. [email protected] Ministry of Health and Medical Education Workshop Objectives Scientometrics: Basics Citation Databases Scientometrics Indices H-index, M-index, G-index Journal Impact Factor Journal Citation Reports CiteScore, SNIP, SJR Researcher Profiles Services Scientometric Reports Definition • Scientometrics is the study of measuring and analyzing science, technology and innovation. • Scientometrics is the measurement of scientific output, and the impact of scientific findings. History Modern Scientometrics is mostly based on the work of Eugene Garfield, creator and founder of the Science Citation Index and the Institute for Scientific Information (ISI), which is heavily used for scientometric analysis. Leydesdorff, L. and Milojevic, S., "Scientometrics" in: Lynch, M. (editor), International Encyclopedia of Social and Behavioral Sciences subsection 85030. (2015) Scientometrics Variables • Authors • Publications • References • Citations Importance & Applications Grant / Funding Allocations Benchmarking Research Priorities Scientific Collaboration Models Research Ranking Policy Decisions Science Mapping Academic Promotion Collection management Levels of Study • Microlevel Individuals, Groups • Mesolevel Universities, Institutions, Journals • Macrolevel National, Regional, Global Citation Databases • ISI Web of Science • Scopus • Google Scholar ISI WoS Content Coverage • 12,000 journals • 160,000 conference proceedings • Coverage dating back to 1900 • More -
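The workshop outline above names the H-index and G-index without defining them. The sketch below uses the standard textbook definitions, which do not come from the slides. The h-index is the largest h such that h papers each have at least h citations. The g-index is the largest g such that the g most-cited papers together have at least g² citations, capped here at the number of papers. The sample citation list is hypothetical.

```python
def h_index(citations):
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

def g_index(citations):
    """Largest g such that the top g papers total at least g*g citations."""
    ranked = sorted(citations, reverse=True)
    total, g = 0, 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites
        if total >= rank * rank:
            g = rank
    return g

# Hypothetical citation counts for one researcher's five papers.
papers = [10, 8, 5, 4, 3]
print(h_index(papers))  # 4: four papers have at least 4 citations each
print(g_index(papers))  # 5: all five papers total 30 >= 25 citations
```

The g-index gives more weight to highly cited papers, so for the same record it is never smaller than the h-index.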

A Study of SCI, SSCI, CSCD, and CSSCI Journals

publications Article Scholarly Journals’ Publication Frequency and Number of Articles in 2018–2019: A Study of SCI, SSCI, CSCD, and CSSCI Journals Xiaotian Chen Cullom-Davis Library, Bradley University, Peoria, IL 61625, USA; [email protected] Received: 12 August 2019; Accepted: 4 September 2019; Published: 8 September 2019 Abstract: This study used all-journal data and systematic random sampling data to primarily determine the average number of issues per year and the average number of articles per issue among the Science Citation Index (SCI), Social Sciences Citation Index (SSCI), Chinese Science Citation Database (CSCD) (China), and Chinese Social Sciences Citation Index (CSSCI) (China) journals. The random sampling data were based on 5% SCI and SSCI samples and 10% CSCD and CSSCI samples. The objectives were to have a 2018–2019 record of the journal publication frequency detailing the number of articles per issue and the number of issues per year for the journals on the lists, as well as to compare Chinese journals’ data with those of international journals, in the perspective of scholarly publishing transformations in the world as well as in China during the past two decades. The study found that the average number of issues per year for SCI, SSCI, CSCD, and CSSCI journals was 10.95, 5.18, 9.17, and 7.87, respectively, and that CSCD/CSSCI journals publish more articles than SCI/SSCI, with CSSCI journals publishing significantly more articles per year than SSCI journals. The author fees for non-OA journals in China could play a role in the higher number of articles. -
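The abstract above mentions systematic random sampling at 5% and 10% rates. The excerpt does not describe the exact procedure the study used, so the following is only a sketch of the textbook method: pick a random starting position within the first interval, then take every k-th journal, where k is the reciprocal of the sampling fraction. The journal records and the `issues_per_year` field are hypothetical.

```python
import random

def systematic_sample(items, fraction, rng=None):
    """Take every k-th item after a random start, with k = round(1 / fraction)."""
    rng = rng or random.Random()
    step = round(1 / fraction)
    start = rng.randrange(step)  # random offset within the first interval
    return items[start::step]

def mean_issues_per_year(journals):
    """Average of the (hypothetical) issues_per_year field over a sample."""
    return sum(j["issues_per_year"] for j in journals) / len(journals)

# Hypothetical ordered journal list of 200 titles.
journals = [
    {"title": f"Journal {i}", "issues_per_year": 4 + 2 * (i % 5)}
    for i in range(200)
]

sample = systematic_sample(journals, 0.05, rng=random.Random(42))
print(len(sample))  # 10 journals: a 5% sample of 200
print(mean_issues_per_year(sample))
```

Passing a seeded `random.Random` makes a run repeatable. With a step of 20 and a list of 200 entries, every possible start yields exactly 10 journals.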

OECD Compendium of Bibliometric Science Indicators

Compendium of Bibliometric Science Indicators COMPENDIUM OF BIBLIOMETRIC SCIENCE INDICATORS NOTE FROM THE SECRETARIAT This document contains the final version of the OECD Compendium of Bibliometric Science Indicators. The report brings together a new collection of statistics depicting recent trends and the structure of scientific production across OECD countries and other major economies that supports indicators contained in the 2015 OECD Science, Technology and Industry Scoreboard. This report was prepared in partnership between the OECD Directorate for Science, Technology and Innovation (DSTI) and the SCImago Research Group (CSIC, Spain). It was presented to the Committee for Scientific and Technological Policy (CSTP) and National Experts in Science and Technology Indicators (NESTI) delegates for comment and approval. This paper was approved and declassified by written procedure by the Committee for Scientific and Technological Policy (CSTP) in May 2016 and prepared for publication by the OECD Secretariat. Note to Delegations: This document is also available on OLIS under the reference code: DSTI/EAS/STP/NESTI(2016)8/FINAL This document and any map included herein are without prejudice to the status of or sovereignty over any territory, to the delimitation of international frontiers and boundaries and to the name of any territory, city or area. The statistical data for Israel are supplied by and under the responsibility of the relevant Israeli authorities or third party. The use of such data by the OECD is without prejudice to the status of the Golan Heights, East Jerusalem and Israeli settlements in the West Bank under the terms of international law. Please cite this publication as: OECD and SCImago Research Group (CSIC) (2016), Compendium of Bibliometric Science Indicators. -

Web of Science Core Collection: Looking Back and Ahead

Web of Science Core Collection: Looking Back and Ahead Clarivate Publisher Forum 2 March 2021 Mireia Guardingo, PhD. [email protected] Outline • Our legacy: Objectivity, selectivity and collection dynamics The Web of Science™ Journal Evaluation Process and Selection Criteria • What’s inside the Web of Science Core Collection™? A closer look at Open Access in the Emerging Sources Citation Index • New Release Highlights
SCIE: Science Citation Index Expanded SSCI: Social Sciences Citation Index AHCI: Arts & Humanities Citation Index ESCI: Emerging Sources Citation Index BKCI: Book Citation Index CPCI: Conference Proceedings Citation Index
The Web of Science™ Journal Evaluation Process and Selection Criteria 28 selection criteria in total: 24 quality criteria to select for editorial rigor and publishing best practice, and 4 impact criteria to select for the most impactful journals. Register for an Open House Session. Any journal that fails to meet all the quality criteria will be removed from the Web of Science Core Collection.
The Web of Science™ Journal Evaluation Process and Selection Criteria New evaluations / Re-evaluations: Journals that meet the quality criteria enter ESCI. Journals that meet quality and impact criteria enter SCIE, SSCI or AHCI. ESCI journals that gain impact move to SCIE, SSCI or AHCI. SCIE, SSCI and AHCI journals that decrease in impact move to ESCI.
The Web of Science™ Journal Evaluation Process • 64% of journals evaluated were not accepted for indexing: 53% failed Editorial Triage, 11% failed Quality. • 34% of journals evaluated were added to the Web of Science Core Collection™.