Media Streaming Over the Internet — an Overview of Delivery Technologies

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Download Article (PDF)

Advances in Social Science, Education and Humanities Research, volume 123. 2nd International Conference on Education, Sports, Arts and Management Engineering (ICESAME 2017). The Application of New Media Technology in the Ideological and Political Education of College Students. Hao Lu, Jiangxi Vocational & Technical College of Information Application, Nanchang, China, [email protected]. Keywords: new media; application; ideological and political education. Abstract: With the development of science and technology, new media technology has become more widely used in teaching. In particular, new media technology has an important impact on college students’ ideological and political education, bringing both opportunities and challenges. It is therefore urgent to study college students’ ideological and political education in the new media environment. 1. Introduction. New media technology, as opposed to traditional media technology, is the emerging electronic media technology built on digital technology, internet technology, mobile communication technology, and so on. It mainly comprises Fetion, WeChat, blogs, podcasts, network television, network radio, online games, digital TV, virtual communities, portals, search engines, etc. With the rapid development and wide application of science and technology, new media technology has profoundly affected students’ learning and life, and it brings new challenges and opportunities to college students’ ideological and political education. How to make better use of new media technology to improve that education has therefore become a problem that college moral educators need to solve. 2. The intervention mode of new media technology and its characteristics. New media technology, including blogs, instant messaging tools, streaming media, etc., is a new set of network tools, application modes and instant messaging carriers in the network environment. -

Audience Affect, Interactivity, and Genre in the Age of Streaming TV

Volume 16, Issue 2, November 2019. Navigating the Nebula: Audience affect, interactivity, and genre in the age of streaming TV. James M. Elrod, University of Michigan, USA. Abstract: Streaming technologies continue to shift audience viewing practices. However, aside from addressing how these developments allow for more complex serialized streaming television, not much work has approached concerns of specific genres that fall under the field of digital streaming. How do emergent and encouraged modes of viewing across various SVOD platforms re-shape how audiences affectively experience and interact with genre and generic texts? What happens to collective audience discourses as the majority of viewers’ situated consumption of new serial content becomes increasingly accelerated, adaptable, and individualized? Given the range and diversity of genres and fandoms, which often intersect and overlap despite their current fragmentation across geographies, platforms, and lines of access, why might it be pertinent to reconfigure genre itself as a site or node of affective experience and interactive, collective production? Finally, as studies of streaming television advance within the industry and academia, how might we ponder on a genre-by-genre basis, fandoms’ potential need for time and space to collectively process and interact affectively with generic serial texts – in other words, to consider genres and generic texts themselves as key mediative sites between the contexts of production and those of fans’ interactivity and communal, affective pleasure? This article draws together threads of commentary from the industry, scholars, and culture writers about SVOD platforms, emergent viewing practices, speculative genres, and fandoms to argue for the centrality of genre in interventions into audience studies. -

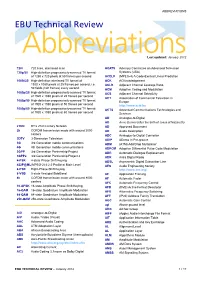

ABBREVIATIONS EBU Technical Review

EBU Technical Review Abbreviations. Last updated: January 2012. ACATS: Advisory Committee on Advanced Television Systems (USA); ACELP: (MPEG-4) A Code-Excited Linear Prediction; ACK: ACKnowledgement; ACLR: Adjacent Channel Leakage Ratio; ACM: Adaptive Coding and Modulation; ACS: Adjacent Channel Selectivity; ACT: Association of Commercial Television in Europe, http://www.acte.be; ACTS: Advanced Communications Technologies and Services; AD: Analogue-to-Digital; AD: Anno Domini (after the birth of Jesus of Nazareth); AD: Approved Document; AD: Audio Description; ADC: Analogue-to-Digital Converter; ADIP: ADress In Pre-groove; ADM: (ATM) Add/Drop Multiplexer; ADPCM: Adaptive Differential Pulse Code Modulation; ADR: Automatic Dialogue Replacement; 720i: 720 lines, interlaced scan; 720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second; 1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second; 1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second; 1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second; 1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second; 21CN: BT’s 21st Century Network; 2k: COFDM transmission mode with around 2000 carriers; 3DTV: 3-Dimension Television; 3G: 3rd Generation mobile communications; 4G: 4th Generation mobile communications; 3GPP: 3rd Generation Partnership Project; 3GPP2: 3rd Generation Partnership -

Faq Av Bridge Va 999 821

Contents: 1 Vaddio IP Streaming Features and Functionality; 1.1 Frequently Asked Questions; 1.2 Streaming Configuration; 1.3 Application Examples; 1.3.1 Distribution Application (Single Stream-to-Multiple Clients); 1.3.2 Recording/Archive Application; 2 Compatibility Summary; 2.1 Vaddio Lab Tested Interoperability; 2.2 Field Test Media Players or Server; 3 Media Player/Server Interoperability; 3.1 Quicktime Media Player; 3.2 VLC Player -

I Feasibility of Streaming Media for Transportation Research And

Feasibility of Streaming Media for Transportation Research and Implementation. Final Report. Prepared by: Drew M. Coleman. July 2007. Research Project SPR-2231. Report No. 2231-F-05-11. Connecticut Department of Transportation, Bureau of Engineering and Highway Operations, Division of Research. Keith R. Lane, P.E., Director of Research and Materials. James M. Sime, P.E., Manager of Research. TECHNICAL REPORT DOCUMENTATION PAGE. 1. Report No.: 2231-F-05-11; 2. Government Accession No.; 3. Recipients Catalog No.; 4. Title and Subtitle: Feasibility of Streaming Media for Transportation Research and Implementation; 5. Report Date: July 2007; 6. Performing Organization Code: SPR-2231; 7. Author(s): Drew M. Coleman; 8. Performing Organization Report No.: 2231-F-05-11; 9. Performing Organization Name and Address: Connecticut Department of Transportation, Division of Research, 280 West Street, Rocky Hill, CT 06067-3502; 10. Work Unit No. (TRIS); 11. Contract or Grant No.: CT Study No. SPR-2231; 12. Sponsoring Agency Name and Address: Connecticut Department of Transportation, 2800 Berlin Turnpike, Newington, CT 06131-7546; 13. Type of Report and Period Covered: Final Report, February 2001-June 2007; 14. Sponsoring Agency Code: SPR-2231; 15. Supplementary Notes: Conducted in cooperation with the U.S. Department of Transportation, Federal Highway Administration. 16. Abstract: This report is intended to serve as a guide for transportation personnel in the development and dissemination of streaming video-based presentations. These were created using streaming media production tools, then delivered via network and Web-based media servers, and finally, viewed from the end-users’ PC desktops. The study focuses on three popular streaming media technology platforms: RealNetworks®, Microsoft® and Apple®. -

![[MS-MSSOD]: Media Streaming Server Protocols Overview](https://docslib.b-cdn.net/cover/7347/ms-mssod-media-streaming-server-protocols-overview-577347.webp)

[MS-MSSOD]: Media Streaming Server Protocols Overview

[MS-MSSOD]: Media Streaming Server Protocols Overview This document provides an overview of the Media Streaming Server Protocols Overview Protocol Family. It is intended for use in conjunction with the Microsoft Protocol Technical Documents, publicly available standard specifications, network programming art, and Microsoft Windows distributed systems concepts. It assumes that the reader is either familiar with the aforementioned material or has immediate access to it. A Protocol System Document does not require the use of Microsoft programming tools or programming environments in order to implement the Protocols in the System. Developers who have access to Microsoft programming tools and environments are free to take advantage of them. Intellectual Property Rights Notice for Open Specifications Documentation . Technical Documentation. Microsoft publishes Open Specifications documentation for protocols, file formats, languages, standards as well as overviews of the interaction among each of these technologies. Copyrights. This documentation is covered by Microsoft copyrights. Regardless of any other terms that are contained in the terms of use for the Microsoft website that hosts this documentation, you may make copies of it in order to develop implementations of the technologies described in the Open Specifications and may distribute portions of it in your implementations using these technologies or your documentation as necessary to properly document the implementation. You may also distribute in your implementation, with or without modification, any schema, IDL's, or code samples that are included in the documentation. This permission also applies to any documents that are referenced in the Open Specifications. No Trade Secrets. Microsoft does not claim any trade secret rights in this documentation. -

Windows Poster 20-12-2013 V3

Microsoft® Discover the Open Specifications technical documents you need for your interoperability solutions. To obtain these technical documents, go to the Open Specifications Interactive Tiles: open specifications poster © 2012-2014 Microsoft Corporation. All rights reserved. http://msdn.microsoft.com/openspecifications/jj128107 Component Object Model (COM+) Technical Documentation Technical Documentation Presentation Layer Services Technical Documentation Component Object Model Plus (COM+) Event System Protocol Active Directory Protocols Overview Open Data Protocol (OData) Transport Layer Security (TLS) Profile Windows System Overview Component Object Model Plus (COM+) Protocol Active Directory Lightweight Directory Services Schema WCF-Based Encrypted Server Administration and Notification Protocol Session Layer Services Windows Protocols Overview Component Object Model Plus (COM+) Queued Components Protocol Active Directory Schema Attributes A-L Distributed Component Object Model (DCOM) Remote Protocol Windows Overview Application Component Object Model Plus (COM+) Remote Administration Protocol Directory Active Directory Schema Attributes M General HomeGroup Protocol Supplemental Shared Abstract Data Model Elements Component Object Model Plus (COM+) Tracker Service Protocol Active Directory Schema Attributes N-Z Peer Name Resolution Protocol (PNRP) Version 4.0 Windows Data Types Services General Application Services Services Active Directory Schema Classes Services Peer-to-Peer Graphing Protocol Documents Windows Error Codes ASP.NET -

Streaming Media Seminar – Effective Development and Distribution Of

Proceedings of the 2004 ASCUE Conference, www.ascue.org, June 6 – 10, 2004, Myrtle Beach, South Carolina. Streaming Media Seminar – Effective Development and Distribution of Streaming Multimedia in Education. Robert Mainhart, Electronic Classroom/Video Production Manager; James Gerraughty, Production Coordinator; Kristine M. Anderson, Distributed Learning Course Management Specialist. CERMUSA - Saint Francis University, P.O. Box 600, Loretto, PA 15940-0600, 814-472-3947, [email protected]. This project is partially supported by the Center of Excellence for Remote and Medically Under-Served Areas (CERMUSA) at Saint Francis University, Loretto, Pennsylvania, under the Office of Naval Research Grant Number N000140210938. Introduction. Concisely defined, “streaming media” is moving video and/or audio transmitted over the Internet for immediate viewing/listening by an end user. However, at Saint Francis University’s Center of Excellence for Remote and Medically Under-Served Areas (CERMUSA), we approach streaming media from a broader perspective. Our working definition includes a wide range of visual electronic multimedia that can be transmitted across the Internet for viewing in real time or saved as a file for later viewing. While downloading and saving a media file for later play is not, strictly speaking, a form of streaming, we include that method in our discussion of multimedia content used in education. There are numerous media types and, within most, multiple hardware and software platforms used to develop and distribute video and audio content across campus or the Internet. Faster, less expensive and more powerful computers and related tools bring the possibility of content creation, editing and distribution into the hands of the content creators. -

Streaming Media for Web Based Training

DOCUMENT RESUME. ED 448 705; IR 020 468. AUTHOR: Childers, Chad; Rizzo, Frank; Bangert, Linda. TITLE: Streaming Media for Web Based Training. PUB DATE: 1999-10-00. NOTE: 7p.; In: WebNet 99 World Conference on the WWW and Internet Proceedings (Honolulu, Hawaii, October 24-30, 1999); see IR 020 454. PUB TYPE: Reports Descriptive (141); Speeches/Meeting Papers (150). EDRS PRICE: MF01/PC01 Plus Postage. DESCRIPTORS: *Computer Uses in Education; Educational Technology; *Instructional Design; *Material Development; *Multimedia Instruction; *Multimedia Materials; Standards; *Training; World Wide Web. IDENTIFIERS: Streaming Audio; Streaming Video; Technology Utilization; *Web Based Instruction. ABSTRACT: This paper discusses streaming media for World Wide Web-based training (WBT). The first section addresses WBT in the 21st century, including the Synchronized Multimedia Integration Language (SMIL) standard that allows multimedia content such as text, pictures, sound, and video to be synchronized for a coherent learning experience. The second section covers definitions, history, and current status. The third section presents SMIL as the best direction for WBT. The fourth section describes building a WBT module using SMIL, including interface design, learning materials content design, and building the RealText[TM], RealPix[TM], and SMIL files. The fifth section addresses technological issues, including encoding content to RealNetworks formats, uploading files to stream server, and hardware requirements. The final section summarizes future directions. (MES) Reproductions supplied by EDRS are the best that can be made from the original document. U.S. DEPARTMENT OF EDUCATION, Office of Educational Research and Improvement, EDUCATIONAL RESOURCES INFORMATION CENTER (ERIC): This document has been reproduced as received from the person or organization. PERMISSION TO REPRODUCE AND DISSEMINATE THIS MATERIAL HAS BEEN GRANTED BY G.H. -

Design of a Platform for Video Streaming and the Synchronization of Multimedia Content

Università degli Studi di Padova, Faculty of Mathematical, Physical and Natural Sciences, Degree in Computer Science. Design of a platform for video streaming and the synchronization of multimedia content. Candidate: Roberto Baldin. Student ID: 561592. Supervisor: Dr. Ombretta Gaggi. Academic year 2008/2009. Contents: 1 Introduction; 1.1 Description of the company QBGROUP; 1.2 Description of the internship; 2 Streaming server; 2.1 Introduction; 2.1.1 The concept of streaming, history and protocols; 2.1.2 Comparison of progressive download, pseudo streaming and streaming; 2.2 Choice of the streaming server; 2.2.1 Hardware and software configuration of the server; 2.2.2 Solutions identified; 2.2.3 Installation of Adobe Flash Media Streaming Server; 2.2.4 Installation of Red5 Media Server; 2.2.5 Installation of Windows Media Services; 2.2.6 Comparison of the solutions and benchmarks; 2.3 Completing the configuration of the streaming server; 2.3.1 Content accessibility issues; 2.3.2 Choice of the client; 2.3.3 Video formats and characteristics; 2.3.4 Automating the conversion procedure; 3 Synchronization of multimedia content; 3.1 Introduction; 3.1.1 Tools and technologies used; 3.1.2 SMIL: Synchronized Multimedia Integration Language; 3.2 Development of a web application for the synchronization of multimedia content; 3.2.1 Development of an application for synchronizing video and slides; 3.2.2 Development of a SMIL player for the web -

Configuring Remote Desktop Features in Horizon 7

Configuring Remote Desktop Features in Horizon 7. OCT 2020. VMware Horizon 7 7.13. You can find the most up-to-date technical documentation on the VMware website at: https://docs.vmware.com/ VMware, Inc., 3401 Hillview Ave., Palo Alto, CA 94304, www.vmware.com. © Copyright 2018-2020 VMware, Inc. All rights reserved. Copyright and trademark information. Contents: 1 Configuring Remote Desktop Features in Horizon 7; 2 Configuring Remote Desktop Features: Configuring Unity Touch; System Requirements for Unity Touch; Configure Favorite Applications Displayed by Unity Touch; Configuring Flash URL Redirection for Multicast or Unicast Streaming; System Requirements for Flash URL Redirection; Verify that the Flash URL Redirection Feature Is Installed; Set Up the Web Pages for Flash URL Redirection; Set Up Client Devices for Flash URL Redirection; Disable or Enable Flash URL Redirection; Configuring Flash Redirection; System Requirements for Flash Redirection; Install and Configure Flash Redirection; Use Windows Registry Settings to Configure Flash Redirection; Configuring HTML5 Multimedia Redirection; System Requirements for HTML5 Multimedia Redirection; Install and Configure HTML5 Multimedia Redirection; Install the VMware Horizon HTML5 Redirection Extension for Chrome; Install the VMware Horizon HTML5 Redirection Extension for Edge; HTML5 Multimedia Redirection Limitations; Configuring Browser Redirection; System Requirements for Browser Redirection -

Navigating Youth Media Landscapes: Challenges and Opportunities for Public Media

Navigating Youth Media Landscapes: Challenges and Opportunities for Public Media. Patrick Davison, Monica Bulger, Mary Madden. Fall 2020. The Joan Ganz Cooney Center at Sesame Workshop. The Corporation for Public Broadcasting. About the Authors: Patrick Davison is the Program Manager for Research Production and Editorial at Data & Society Research Institute. He holds a PhD from NYU’s department of Media, Culture, and Communication, and his research is on the relationship between networked media and culture. Monica Bulger is a Senior Fellow at the Joan Ganz Cooney Center at Sesame Workshop. She studies youth and family media literacy practices and advises policy globally. She has consulted on child online protection for UNICEF since 2012, and her research encompasses 16 countries in Asia, the Middle East, North Africa, South America, North America, and Europe. Monica is an affiliate of the Data & Society Research Institute in New York City where she led the Connected Learning initiative. Monica holds a PhD in Education and was a Research Fellow at the Oxford Internet Institute and the Berkman Klein Center for Internet & Society at Harvard University. Mary Madden is a veteran researcher, writer and nationally-recognized expert on privacy and technology, trends in social media use, and the impact of digital media on teens and parents. She is an Affiliate at the Data & Society Research Institute in New York City, where she most recently directed an initiative to explore the effects of data-centric systems on Americans’ health and well-being and led several studies examining the intersection of privacy and digital inequality. Prior to her role at Data & Society, Mary was a Senior Researcher for the Pew Research Center’s Internet, Science & Technology team in Washington, DC and an Affiliate at the Berkman Klein Center for Internet & Society at Harvard University.