What Is the Set Cover Problem

Recommended publications

Vertex Cover Might Be Hard to Approximate to Within 2 − ε (Subhash Khot, Oded Regev)

Abstract. Based on a conjecture regarding the power of unique 2-prover-1-round games presented in [Khot02], we show that vertex cover is hard to approximate within any constant factor better than 2. We actually show a stronger result, namely, based on the same conjecture, vertex cover on k-uniform hypergraphs is hard to approximate within any constant factor better than k.

1 Introduction. Minimum vertex cover is the problem of finding the smallest set of vertices that touches all the edges in a given graph. This is one of the most fundamental NP-complete problems. A simple 2-approximation algorithm exists for this problem: construct a maximal matching by greedily adding edges and then let the vertex cover contain both endpoints of each edge in the matching. It can be seen that the resulting set of vertices indeed touches all the edges and that its size is at most twice the size of the minimum vertex cover. However, despite considerable efforts, state of the art techniques can only achieve an approximation ratio of 2 − o(1) [16, 21].

Given this state of affairs, one might strongly suspect that vertex cover is NP-hard to approximate within 2 − ε for any ε > 0. This is one of the major open questions in the field of approximation algorithms. In [18], Håstad showed that approximating vertex cover within constant factors less than 7/6 is NP-hard. This factor was recently improved by Dinur and Safra [10] to 1.36.
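The matching-based 2-approximation described in the introduction above can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper; the function name `vertex_cover_2approx` and the edge-list input format are assumptions made for the example.

```python
def vertex_cover_2approx(edges):
    """Return a vertex cover whose size is at most twice the minimum.

    Hypothetical helper: `edges` is an iterable of (u, v) pairs.
    """
    cover = set()
    for u, v in edges:
        # The edge joins the greedy maximal matching only if both of its
        # endpoints are still unmatched; then both endpoints enter the cover.
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover


# A path on five vertices: the optimum cover is {2, 4}.
edges = [(1, 2), (2, 3), (3, 4), (4, 5)]
cover = vertex_cover_2approx(edges)
```

On this path the sketch returns four vertices against an optimum of two, so the factor of 2 is actually reached on some inputs.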

3.1 Matchings and Factors: Matchings and Covers

This copyrighted material is taken from Introduction to Graph Theory, 2nd Ed., by Doug West; and is not for further distribution beyond this course. These slides will be stored in a limited-access location on an IIT server and are not for distribution or use beyond Math 454/553.

Matchings

3.1.1 Definition. A matching in a graph G is a set of non-loop edges with no shared endpoints. The vertices incident to the edges of a matching M are saturated by M (M-saturated); the others are unsaturated (M-unsaturated). A perfect matching in a graph is a matching that saturates every vertex.

[Figure: a perfect matching, and a matching M with its M-saturated and M-unsaturated vertices.]

Perfect Matchings in Complete Bipartite Graphs

The perfect matchings in a complete X,Y-bigraph with |X| = |Y| correspond exactly to the bijections f: X -> Y. Therefore $K_{n,n}$ has n! perfect matchings.

Perfect Matchings in Complete Graphs

The complete graph $K_n$ has a perfect matching iff n is even. So instead of $K_n$ consider $K_{2n}$. We count the perfect matchings in $K_{2n}$ by:
(1) Selecting a vertex v (e.g., with the highest label): one choice.
(2) Selecting a vertex u to match to v: 2n − 1 choices.
(3) Selecting a perfect matching on the rest of the vertices (a copy of $K_{2n-2}$).
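The excerpt stops before the counts are combined. As a hedged sketch, assuming the three steps above multiply into the product (2n − 1)(2n − 3)···1, the following Python checks that product against a brute-force count on a small complete graph. All function names are hypothetical.

```python
from math import factorial


def count_pm_complete(num_vertices):
    """Perfect matchings in the complete graph on `num_vertices` vertices."""
    if num_vertices % 2:
        return 0  # an odd number of vertices cannot all be saturated
    count = 1
    # Matching the highest-labelled vertex leaves (remaining - 1) partner
    # choices, after which a complete graph on two fewer vertices remains.
    for remaining in range(num_vertices, 0, -2):
        count *= remaining - 1
    return count


def count_pm_brute(vertices):
    """Brute force: pair the last vertex with every possible partner."""
    if not vertices:
        return 1
    rest = vertices[:-1]
    return sum(count_pm_brute(rest[:i] + rest[i + 1:]) for i in range(len(rest)))


def count_pm_bipartite(n):
    """Perfect matchings in K_{n,n}: one per bijection X -> Y."""
    return factorial(n)
```

For example, `count_pm_complete(6)` and `count_pm_brute(list(range(6)))` both evaluate to 15, that is 5 · 3 · 1.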

Theoretical Computer Science Efficient Approximation of Min Set

Theoretical Computer Science 410 (2009) 2184–2195. Journal homepage: www.elsevier.com/locate/tcs

Efficient approximation of min set cover by moderately exponential algorithms. N. Bourgeois, B. Escoffier, V.Th. Paschos. LAMSADE, CNRS FRE 3234 and Université Paris-Dauphine, France.

Article history: Received 13 October 2008; received in revised form 30 January 2009; accepted 1 February 2009. Communicated by G. Ausiello.

Keywords: Exponential algorithms; Approximation algorithms; min set cover.

Abstract. We study the approximation of min set cover combining ideas and results from polynomial approximation and from exact computation (with non-trivial worst case complexity upper bounds) for NP-hard problems. We design approximation algorithms for min set cover achieving ratios that cannot be achieved in polynomial time (unless problems in NP could be solved by slightly super-polynomial algorithms) with worst-case complexity much lower (though super-polynomial) than those of an exact computation. © 2009 Elsevier B.V. All rights reserved.

1. Introduction. Given a ground set $C$ of cardinality $n$ and a system $\mathcal{S} = \{S_1, \ldots, S_m\} \subset 2^C$, min set cover consists of determining a minimum size subsystem $\mathcal{S}'$ such that $\bigcup_{S \in \mathcal{S}'} S = C$. min set cover is a famous NP-hard problem dealt with in the seminal paper [22]. For the last ten years, the issue of exact resolution of NP-hard problems by algorithms having provably non-trivial upper time-complexity bounds has been very actively studied (see, for instance, the surveys [16,26,30]).

Set Cover Algorithms for Very Large Datasets

Graham Cormode (AT&T Labs–Research), Howard Karloff (AT&T Labs–Research), Anthony Wirth (Department of Computer Science and Software Engineering, The University of Melbourne)

ABSTRACT. The problem of Set Cover (to find the smallest subcollection of sets that covers some universe) is at the heart of many data and analysis tasks. It arises in a wide range of settings, including operations research, machine learning, planning, data quality and data mining. Although finding an optimal solution is NP-hard, the greedy algorithm is widely used, and typically finds solutions that are close to optimal. However, a direct implementation of the greedy approach, which picks the set with the largest number of uncovered items at each step, does not behave well when the input is very large and disk resident. The greedy algorithm must make many random accesses to disk, which are unpredictable and costly in comparison to linear scans.

1. INTRODUCTION. The problem of Set Cover arises in a surprisingly broad number of places. Although presented as a somewhat abstract problem, it captures many different scenarios that arise in the context of data management and knowledge mining. The basic problem is that we are given a collection of sets, each of which is drawn from a common universe of possible items. The goal is to find a subcollection of sets so that their union includes every item from the universe. Places where Set Cover occurs include:
• In operations research, the problem of choosing where to locate a number of facilities so that all sites are within a certain distance of the closest facility can be
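The "direct implementation of the greedy approach" mentioned in the abstract can be sketched as follows. This is an in-memory illustration only, under the assumption that the input fits in RAM; it is not the disk-friendly variant the paper goes on to develop, and the name `greedy_set_cover` is hypothetical.

```python
def greedy_set_cover(universe, sets):
    """Return indices of chosen sets, picking the largest uncovered gain first."""
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Rescan every set to find the one covering the most uncovered items.
        best = max(range(len(sets)), key=lambda i: len(sets[i] & uncovered))
        if not sets[best] & uncovered:
            raise ValueError("the sets do not cover the universe")
        chosen.append(best)
        uncovered -= sets[best]
    return chosen


universe = range(1, 8)
sets = [{1, 2, 3, 4}, {4, 5, 6}, {1, 5}, {6, 7}, {2, 3, 7}]
chosen = greedy_set_cover(universe, sets)
```

Each iteration rescans all sets against the current uncovered items, which is the access pattern the abstract identifies as costly for disk-resident inputs.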

Approximation Algorithms

Lecture 21: Approximation Algorithms

21.1 Overview. Suppose we are given an NP-complete problem to solve. Even though (assuming P ≠ NP) we can’t hope for a polynomial-time algorithm that always gets the best solution, can we develop polynomial-time algorithms that always produce a “pretty good” solution? In this lecture we consider such approximation algorithms, for several important problems. Specific topics in this lecture include:
• 2-approximation for vertex cover via greedy matchings.
• 2-approximation for vertex cover via LP rounding.
• Greedy O(log n) approximation for set-cover.
• Approximation algorithms for MAX-SAT.

21.2 Introduction. Suppose we are given a problem for which (perhaps because it is NP-complete) we can’t hope for a fast algorithm that always gets the best solution. Can we hope for a fast algorithm that guarantees to get at least a “pretty good” solution? E.g., can we guarantee to find a solution that’s within 10% of optimal? If not that, then how about within a factor of 2 of optimal? Or, anything non-trivial? As seen in the last two lectures, the class of NP-complete problems are all equivalent in the sense that a polynomial-time algorithm to solve any one of them would imply a polynomial-time algorithm to solve all of them (and, moreover, to solve any problem in NP). However, the difficulty of getting a good approximation to these problems varies quite a bit. In this lecture we will examine several important NP-complete problems and look at to what extent we can guarantee to get approximately optimal solutions, and by what algorithms.

Complexity and Approximation Results for the Connected Vertex Cover Problem in Graphs and Hypergraphs Bruno Escoffier, Laurent Gourvès, Jérôme Monnot

Bruno Escoffier, Laurent Gourvès, Jérôme Monnot. Complexity and approximation results for the connected vertex cover problem in graphs and hypergraphs. 2007. HAL Id: hal-00178912, https://hal.archives-ouvertes.fr/hal-00178912. Preprint submitted on 12 Oct 2007.

Laboratoire d'Analyse et Modélisation de Systèmes pour l'Aide à la Décision, CNRS UMR 7024. Cahier du LAMSADE 262, July 2007.

Abstract. We study a variation of the vertex cover problem where it is required that the graph induced by the vertex cover is connected. We prove that this problem is polynomial in chordal graphs, has a PTAS in planar graphs, is APX-hard in bipartite graphs and is 5/3-approximable in any class of graphs where the vertex cover problem is polynomial (in particular in bipartite graphs).

Approximation Algorithms Instructor: Richard Peng Nov 21, 2016

CS 3510 Design & Analysis of Algorithms, Section B, Lecture #34: Approximation Algorithms. Instructor: Richard Peng. Nov 21, 2016.

DISCLAIMER: These notes are not necessarily an accurate representation of what I said during the class. They are mostly what I intend to say, and have not been carefully edited.

Last week we formalized ways of showing that a problem is hard, specifically the definitions of NP, NP-completeness, and NP-hardness. We now discuss ways of saying something useful about these hard problems. Specifically, we show that the answer produced by our algorithm is within a certain fraction of the optimum.

For a maximization problem, suppose now that we have an algorithm A for our problem which, given an instance I, returns a solution with value A(I). The approximation ratio of algorithm A is defined to be

$$\max_{I} \frac{OPT(I)}{A(I)}.$$

This is not restricted to NP-complete problems: we can apply this to matching to get a 2-approximation.

Lemma 0.1. A maximal matching has size at least 1/2 of the optimum.

Proof. Let the maximum matching be $M^*$. Consider the process of building a maximal matching: an edge in $M^*$ becomes ineligible only after one (or both) of its endpoints are picked. Each edge we add to the maximal matching has only 2 endpoints, so it takes at least $|M^*|/2$ edges to make all edges in $M^*$ ineligible.

This ratio is indeed close to tight: consider a graph that consists of many paths of length 3, on which the maximal matching keeps taking the middle edges.
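The tightness example at the end of the notes can be checked on a single path with three edges. The sketch below is illustrative only: it assumes the greedy procedure scans edges in the order given, and the brute-force maximum is meant for tiny graphs. Both function names are hypothetical.

```python
from itertools import combinations


def greedy_maximal_matching(edges):
    """Scan edges in order, keeping each edge whose endpoints are both free."""
    matched, matching = set(), []
    for u, v in edges:
        if u not in matched and v not in matched:
            matching.append((u, v))
            matched.update((u, v))
    return matching


def maximum_matching_size(edges):
    """Brute force over edge subsets, largest first (tiny graphs only)."""
    for size in range(len(edges), 0, -1):
        for subset in combinations(edges, size):
            endpoints = [x for e in subset for x in e]
            if len(endpoints) == len(set(endpoints)):
                return size
    return 0


# Path a - b - c - d with the middle edge listed first.
path = [("b", "c"), ("a", "b"), ("c", "d")]
greedy = greedy_maximal_matching(path)
best = maximum_matching_size(path)
```

Here the greedy pass keeps only the middle edge while the optimum uses the two outer edges, so the ratio OPT(I)/A(I) equals 2 on this instance.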

1 Bipartite Matching and Vertex Covers

ORF 523, Lecture 6. Princeton University. Instructor: A.A. Ahmadi. Scribe: G. Hall. Any typos should be emailed to a a [email protected].

In this lecture, we will cover an application of LP strong duality to combinatorial optimization:
• Bipartite matching
• Vertex covers
• Kőnig's theorem
• Totally unimodular matrices and integral polytopes.

1 Bipartite matching and vertex covers

Recall that a bipartite graph G = (V, E) is a graph whose vertices can be divided into two disjoint sets such that every edge connects one node in one set to a node in the other.

Definition 1 (Matching, vertex cover). A matching is a disjoint subset of edges, i.e., a subset of edges that do not share a common vertex. A vertex cover is a subset of the nodes that together touch all the edges.

Figure 1: Bipartite graphs, matchings, and vertex covers. (a) An example of a bipartite graph. (b) An example of a matching (dotted lines). (c) An example of a vertex cover (grey nodes).

Lemma 1. The cardinality of any matching is less than or equal to the cardinality of any vertex cover.

This is easy to see: consider any matching. Any vertex cover must have nodes that at least touch the edges in the matching. Moreover, a single node can at most cover one edge in the matching because the edges are disjoint. As it will become clear shortly, this property can also be seen as an immediate consequence of weak duality in linear programming.

Theorem 1 (Kőnig). If G is bipartite, the cardinality of the maximum matching is equal to the cardinality of the minimum vertex cover.
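Theorem 1 can be sanity-checked numerically on a small bipartite graph. The sketch below does not use the LP duality argument from the lecture: it swaps in an augmenting-path matching routine and a brute-force cover search, purely as an illustration. The graph and all names are made up for the example.

```python
from itertools import combinations


def max_bipartite_matching(adj, left):
    """Maximum matching size via augmenting paths; adj maps left -> right nodes."""
    match_right = {}

    def try_augment(u, seen):
        for v in adj[u]:
            if v in seen:
                continue
            seen.add(v)
            # Use v if it is free, or if its current partner can be re-routed.
            if v not in match_right or try_augment(match_right[v], seen):
                match_right[v] = u
                return True
        return False

    return sum(try_augment(u, set()) for u in left)


def min_vertex_cover_size(nodes, edges):
    """Smallest cover by exhaustive search (tiny graphs only)."""
    for size in range(len(nodes) + 1):
        for cand in combinations(nodes, size):
            chosen = set(cand)
            if all(u in chosen or v in chosen for u, v in edges):
                return size


adj = {"x1": ["y1", "y2"], "x2": ["y1"], "x3": ["y1"]}
edges = [(u, v) for u, vs in adj.items() for v in vs]
nodes = sorted(set(adj) | {v for vs in adj.values() for v in vs})
matching_size = max_bipartite_matching(adj, list(adj))
cover_size = min_vertex_cover_size(nodes, edges)
```

On this graph both quantities equal 2 (for instance the matching x1-y2, x2-y1 and the cover {x1, y1}), in line with Lemma 1 and Theorem 1.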

1 Vertex Cover on Hypergraphs

15-859FF: Coping with Intractability, CMU, Fall 2019. Lecture #3: Reductions for Problems in FPT. September 11, 2019. Lecturer: Venkatesan Guruswami. Scribe: Anish Sevekari.

1 Vertex Cover on Hypergraphs

We define a d-regular hypergraph $H = (V, \mathcal{F})$ where $\mathcal{F}$ is a family of subsets of V of size d. The vertex cover problem on hypergraphs is to find the smallest set S such that every edge $X \in \mathcal{F}$ intersects S at least once. We parameterize the vertex cover problem by the size of the optimum solution. The parameterized version of the problem is then: given a hypergraph H and an integer k, does H have a vertex cover of size k? We will now give a $d^k \cdot \mathrm{poly}(n)$ algorithm that solves the vertex cover problem and an $O(d^2 d!\, k^d)$ kernel for the d-regular Hypergraph Vertex Cover problem.

1.1 An algorithm for Hypergraph Vertex Cover

The algorithm is a simple generalization of the $2^k \cdot \mathrm{poly}(n)$ algorithm for vertex cover over graphs. We pick any edge, then branch on which vertex of this edge is in the vertex cover.

Algorithm 1 VertexCover(H = (V, F), k)
    if |F| = 0 then
        return ∅
    end if
    if k = 0 and |F| > 0 then
        return ⊥
    end if
    Pick any edge e ∈ F.
    for v ∈ e do
        H′ = (V \ {v}, F \ {f ∈ F : v ∈ f})
        if VertexCover(H′, k − 1) ≠ ⊥ then
            return {v} ∪ VertexCover(H′, k − 1)
        end if
    end for
    return ⊥

Note that the recursion depth of this algorithm is k, and we branch on at most d points inside each call.
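A runnable Python sketch of the branching algorithm follows. It rests on two assumptions about the intended pseudocode: an empty edge family returns the empty cover, and the recursive call uses budget k − 1. The function name and the frozenset representation of edges are illustrative choices.

```python
def hypergraph_vertex_cover(edges, k):
    """Return a vertex cover of size <= k as a set, or None if none exists."""
    if not edges:
        return set()   # nothing left to cover
    if k == 0:
        return None    # edges remain but the budget is spent
    edge = edges[0]    # pick any edge; some vertex of it must be in the cover
    for v in edge:
        # Choosing v covers every edge containing v; recurse on the rest.
        remaining = [f for f in edges if v not in f]
        sub = hypergraph_vertex_cover(remaining, k - 1)
        if sub is not None:
            return {v} | sub
    return None


# A 3-uniform hypergraph in which every vertex lies in exactly two edges.
edges = [frozenset(e) for e in ({1, 2, 3}, {3, 4, 5}, {5, 6, 1}, {2, 4, 6})]
cover = hypergraph_vertex_cover(edges, 2)
```

With budget 1 the search fails on this instance, since no single vertex meets all four edges; with budget 2 it returns a two-element cover such as {1, 4}. Each call branches on at most d vertices and the depth is at most k, giving the $d^k$ factor quoted above.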

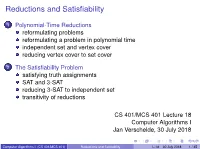

Reductions and Satisfiability

CS 401/MCS 401 Computer Algorithms I, Lecture 18. Jan Verschelde, 30 July 2018.

Outline:
1 Polynomial-Time Reductions: reformulating problems; reformulating a problem in polynomial time; independent set and vertex cover; reducing vertex cover to set cover
2 The Satisfiability Problem: satisfying truth assignments; SAT and 3-SAT; reducing 3-SAT to independent set; transitivity of reductions

reformulating problems

The Ford-Fulkerson algorithm computes maximum flow. By reduction to a flow problem, we could solve the following problems: bipartite matching, circulation with demands, survey design, and airline scheduling. Because Ford-Fulkerson is an efficient algorithm, all those problems can be solved efficiently as well. Our plan for the remainder of the course is to explore computationally hard problems.

imagine a meeting with your boss ...

From Computers and Intractability: A Guide to the Theory of NP-Completeness by Michael R. Garey and David S. Johnson, Bell Laboratories, 1979.

what you want to say is

From Computers and intractability.

Covering Problems with Hard Capacities

Julia Chuzhoy, Joseph (Seffi) Naor. Computer Science Department, Technion, Haifa 32000, Israel. E-mail: {cjulia,naor}@cs.technion.ac.il.

Abstract. We consider the classical vertex cover and set cover problems with the addition of hard capacity constraints. This means that a set (vertex) can only cover a limited number of its elements (adjacent edges) and the number of available copies of each set (vertex) is bounded. This is a natural generalization of the classical problems that also captures resource limitations in practical scenarios. We obtain the following results. For the unweighted vertex cover problem with hard capacities we give a […]-approximation algorithm which is based on randomized rounding with alterations. We prove that the weighted version is at least as hard as the set cover problem. This is

[…] A cover is a multi-set of input sets that can cover all the elements, while it contains at most a bounded number of copies of each set, and each copy covers at most a bounded number of elements. We assume that the capacity constraints are hard, i.e., the number of copies and the capacity of a set cannot be exceeded. The capacitated set cover problem is a natural generalization of a basic and well-studied problem that captures practical scenarios where resource limitations are present. A special case of the capacitated set cover problem that we consider is the capacitated vertex cover problem, defined as follows. An undirected graph is given and each vertex is associated with a cost, a capacity, and a multiplicity.

Fractional Set Cover in the Streaming Model∗

Piotr Indyk, Sepideh Mahabadi, Ronitt Rubinfeld, Jonathan Ullman, Ali Vakilian, and Anak Yodpinyanee

Affiliations: CSAIL, MIT, Cambridge, MA, USA (Indyk, Mahabadi, Vakilian, Yodpinyanee); CSAIL, MIT and TAU, Cambridge, MA, USA (Rubinfeld); CCIS, Northeastern University, Boston, MA, USA (Ullman).

Abstract. We study the Fractional Set Cover problem in the streaming model. That is, we consider the relaxation of the set cover problem over a universe of n elements and a collection of m sets, where each set can be picked fractionally, with a value in [0, 1]. We present a randomized (1 + ε)-approximation algorithm that makes p passes over the data, and uses $\tilde{O}(m n^{O(1/p\varepsilon)} + n)$ memory space. The algorithm works in both the set arrival and the edge arrival models. To the best of our knowledge, this is the first streaming result for the fractional set cover problem. We obtain our results by employing the multiplicative weights update framework in the streaming settings.

1998 ACM Subject Classification: F.2.2 Nonnumerical Algorithms and Problems

Keywords and phrases: Streaming Algorithms, Fractional Set Cover, LP relaxation, Multiplicative Weight Update

Digital Object Identifier: 10.4230/LIPIcs.APPROX/RANDOM.2017.12

1 Introduction. Set Cover is one of the classical NP-hard problems in combinatorial optimization. In this problem the input consists of a set (universe) of n elements $U = \{e_1, \cdots, e_n\}$ and a collection of m sets $F = \{S_1, \cdots, S_m\}$.
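The excerpt ends before the relaxation is written out. As a hedged reconstruction, assuming the standard unweighted formulation and using an assumed variable $x_S$ for the fraction of set $S$ that is picked, the fractional problem described in the abstract is the following linear program:

```latex
\begin{align*}
\text{minimize}   \quad & \sum_{S \in F} x_S \\
\text{subject to} \quad & \sum_{S \in F \,:\, e \in S} x_S \ge 1 && \text{for every } e \in U, \\
                        & 0 \le x_S \le 1                       && \text{for every } S \in F.
\end{align*}
```

Restricting each $x_S$ to $\{0, 1\}$ recovers the integral Set Cover problem, so the optimum of this program is a lower bound on the size of any set cover.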