Tips from a Maestro of the Spray Can 3 History Lesson in Abstraction

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The World at the Time of Messel: Conference Volume

THOMAS LEHMANN & STEPHAN F.K. SCHAAL (eds) The World at the Time of Messel: Puzzles in Palaeobiology, Palaeoenvironment, and the History of Early Primates. 22nd International Senckenberg Conference, Frankfurt am Main, 15th – 19th November 2011. Conference Volume. ISBN 978-3-929907-86-5. Senckenberg Gesellschaft für Naturforschung. IMPRINT Publisher: PROF. DR. DR. H.C. VOLKER MOSBRUGGER, Senckenberg Gesellschaft für Naturforschung, Senckenberganlage 25, 60325 Frankfurt am Main, Germany. Editors: DR. THOMAS LEHMANN & DR. STEPHAN F.K. SCHAAL, Senckenberg Research Institute and Natural History Museum Frankfurt, Senckenberganlage 25, 60325 Frankfurt am Main, Germany. Language editors: JOSEPH E.B. HOGAN & DR. KRISTER T. SMITH. Layout: JULIANE EBERHARDT & ANIKA VOGEL. Cover illustration: EVELINE JUNQUEIRA. Print: Rhein-Main-Geschäftsdrucke, Hofheim-Wallau, Germany. Citation: LEHMANN, T. & SCHAAL, S.F.K. (eds) (2011). The World at the Time of Messel: Puzzles in Palaeobiology, Palaeoenvironment, and the History of Early Primates. 22nd International Senckenberg Conference. 15th – 19th November 2011, Frankfurt am Main. Conference Volume. Senckenberg Gesellschaft für Naturforschung, Frankfurt am Main. pp. 203.

LCROSS (Lunar Crater Observation and Sensing Satellite) Observation Campaign: Strategies, Implementation, and Lessons Learned

Space Sci Rev DOI 10.1007/s11214-011-9759-y LCROSS (Lunar Crater Observation and Sensing Satellite) Observation Campaign: Strategies, Implementation, and Lessons Learned Jennifer L. Heldmann · Anthony Colaprete · Diane H. Wooden · Robert F. Ackermann · David D. Acton · Peter R. Backus · Vanessa Bailey · Jesse G. Ball · William C. Barott · Samantha K. Blair · Marc W. Buie · Shawn Callahan · Nancy J. Chanover · Young-Jun Choi · Al Conrad · Dolores M. Coulson · Kirk B. Crawford · Russell DeHart · Imke de Pater · Michael Disanti · James R. Forster · Reiko Furusho · Tetsuharu Fuse · Tom Geballe · J. Duane Gibson · David Goldstein · Stephen A. Gregory · David J. Gutierrez · Ryan T. Hamilton · Taiga Hamura · David E. Harker · Gerry R. Harp · Junichi Haruyama · Morag Hastie · Yutaka Hayano · Phillip Hinz · Peng K. Hong · Steven P. James · Toshihiko Kadono · Hideyo Kawakita · Michael S. Kelley · Daryl L. Kim · Kosuke Kurosawa · Duk-Hang Lee · Michael Long · Paul G. Lucey · Keith Marach · Anthony C. Matulonis · Richard M. McDermid · Russet McMillan · Charles Miller · Hong-Kyu Moon · Ryosuke Nakamura · Hirotomo Noda · Natsuko Okamura · Lawrence Ong · Dallan Porter · Jeffery J. Puschell · John T. Rayner · J. Jedadiah Rembold · Katherine C. Roth · Richard J. Rudy · Ray W. Russell · Eileen V. Ryan · William H. Ryan · Tomohiko Sekiguchi · Yasuhito Sekine · Mark A. Skinner · Mitsuru Sôma · Andrew W. Stephens · Alex Storrs · Robert M. Suggs · Seiji Sugita · Eon-Chang Sung · Naruhisa Takatoh · Jill C. Tarter · Scott M. Taylor · Hiroshi Terada · Chadwick J. Trujillo · Vidhya Vaitheeswaran · Faith Vilas · Brian D. Walls · Jun-ihi Watanabe · William J. Welch · Charles E. Woodward · Hong-Suh Yim · Eliot F. Young Received: 9 October 2010 / Accepted: 8 February 2011 © The Author(s) 2011. -

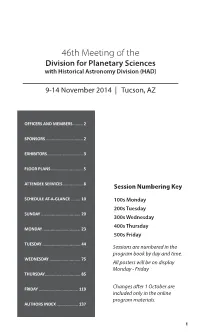

PDF Program Book

46th Meeting of the Division for Planetary Sciences with Historical Astronomy Division (HAD), 9-14 November 2014 | Tucson, AZ. Contents: Officers and Members; Sponsors; Exhibitors; Floor Plans; Attendee Services; Schedule At-a-Glance; Sunday; Monday; Tuesday; Wednesday; Thursday; Friday; Authors Index. Session Numbering Key: 100s Monday, 200s Tuesday, 300s Wednesday, 400s Thursday, 500s Friday. Sessions are numbered in the program book by day and time. All posters will be on display Monday - Friday. Changes after 1 October are included only in the online program materials. DPS OFFICERS AND MEMBERS Current DPS Officers: Heidi Hammel, Chair; Bonnie Buratti, Vice-Chair; Athena Coustenis, Secretary; Andrew Rivkin, Treasurer; Nick Schneider, Education and Public Outreach Officer; Vishnu Reddy, Press Officer. Current DPS Committee Members: Rosaly Lopes (term expires November 2014); Robert Pappalardo (term expires November 2014); Ralph McNutt (term expires November 2014); Ross Beyer (term expires November 2015); Paul Withers (term expires November 2015); Julie Castillo-Rogez (term expires October 2016); Jani Radebaugh (term expires October 2016). SPONSORS EXHIBITORS Platinum Exhibitor, Silver Exhibitors. EXHIBIT BOOTH ASSIGNMENTS 206 Applied

Primates, Adapiformes) Skull from the Uintan (Middle Eocene) of San Diego County, California

AMERICAN JOURNAL OF PHYSICAL ANTHROPOLOGY 98:447-470 (1995) New Notharctine (Primates, Adapiformes) Skull From the Uintan (Middle Eocene) of San Diego County, California GREGG F. GUNNELL Museum of Paleontology, University of Michigan, Ann Arbor, Michigan 48109-1079 KEY WORDS Californian primates, Cranial morphology, Haplorhine-strepsirhine dichotomy ABSTRACT A new genus and species of notharctine primate, Hesperolemur actius, is described from Uintan (middle Eocene) aged rocks of San Diego County, California. Hesperolemur differs from all previously described adapiforms in having the anterior third of the ectotympanic anulus fused to the internal lateral wall of the auditory bulla. In this feature Hesperolemur superficially resembles extant cheirogaleids. Hesperolemur also differs from previously known adapiforms in lacking bony canals that transmit the internal carotid artery through the tympanic cavity. Hesperolemur, like the later occurring North American cercamoniine Mahgarita stevensi, appears to have lacked a stapedial artery. Evidence from newly discovered skulls of Notharctus and Smilodectes, along with Hesperolemur, Mahgarita, and Adapis, indicates that the tympanic arterial circulatory pattern of these adapiforms is characterized by stapedial arteries that are smaller than promontory arteries, a feature shared with extant tarsiers and anthropoids and one of the characteristics often used to support the existence of a haplorhine-strepsirhine dichotomy among extant primates. The existence of such a dichotomy among Eocene primates is not supported by any compelling evidence. Hesperolemur is the latest occurring notharctine primate known from North America and is the only notharctine represented among a relatively diverse primate fauna from southern California. The coastal lowlands of southern California presumably served as a refuge area for primates during the middle and later Eocene as climates deteriorated in the continental interior.

Evolutionary History of Lorisiform Primates

Evolution: Reviewed Article Folia Primatol 1998;69(suppl 1):250–285 Evolutionary History of Lorisiform Primates D. Tab Rasmussen, Kimberley A. Nekaris Department of Anthropology, Washington University, St. Louis, Mo., USA Key Words Lorisidae · Strepsirhini · Plesiopithecus · Mioeuoticus · Progalago · Galago · Vertebrate paleontology · Phylogeny · Primate adaptation Abstract We integrate information from the fossil record, morphology, behavior and molecular studies to provide a current overview of lorisoid evolution. Several Eocene prosimians of the northern continents, including both omomyids and adapoids, have been suggested as possible lorisoid ancestors, but these cannot be substantiated as true strepsirhines. A small-bodied primate, Anchomomys, of the middle Eocene of Europe may be the best candidate among putative adapoids for status as a true strepsirhine. Recent finds of Eocene primates in Africa have revealed new prosimian taxa that are also viable contenders for strepsirhine status. Plesiopithecus teras is a Nycticebus-sized, nocturnal prosimian from the late Eocene, Fayum, Egypt, that shares cranial specializations with lorisoids, but it also retains primitive features (e.g. four premolars) and has unique specializations of the anterior teeth excluding it from direct lorisiform ancestry. Another unnamed Fayum primate resembles modern cheirogaleids in dental structure and body size. Two genera from Oman, Omanodon and Shizarodon, also reveal a mix of similarities to both cheirogaleids and anchomomyin adapoids. Resolving the phylogenetic position of these African primates of the early Tertiary will surely require more and better fossils. By the early to middle Miocene, lorisoids were well established in East Africa, and the debate about whether these represent lorisines or galagines is reviewed.

The Oldest Asian Record of Anthropoidea

The oldest Asian record of Anthropoidea Sunil Bajpai*, Richard F. Kay†‡, Blythe A. Williams‡, Debasis P. Das*, Vivesh V. Kapur§, and B. N. Tiwari¶ *Department of Earth Sciences, Indian Institute of Technology, Roorkee 247 667, India; ‡Department of Evolutionary Anthropology, Duke University, Durham, NC 27708; §2815 Sector 40-C Chandigarh, India; and ¶Wadia Institute of Himalayan Geology, Dehradun, 248001, India Edited by Alan Walker, Pennsylvania State University, University Park, PA, and approved June 19, 2008 (received for review May 2, 2008) Undisputed anthropoids appear in the fossil record of Africa and Asia by the middle Eocene, about 45 Ma. Here, we report the discovery of an early Eocene eosimiid anthropoid primate from India, named Anthrasimias, that extends the Asian fossil record of anthropoids by 9–10 million years. A phylogenetic analysis of 75 taxa and 343 characters of the skull, postcranium, and dentition of Anthrasimias and living and fossil primates indicates the basal placement of Anthrasimias among eosimiids, confirms the anthropoid status of Eosimiidae, and suggests that crown haplorhines (tarsiers and monkeys) are the sister clade of Omomyoidea of the Eocene, not nested within an omomyoid clade. ... (14), which is outside the range of extant insectivorous primates (15). Body mass reconstruction of 1 kg for ancestral primates tends to rule out the visual predation hypothesis and supports, by implication, an alternative hypothesis that links novel primate adaptations with the coevolution of angiosperms (16). In addition to Anthrasimias, four other basal primates are known from the same stratigraphic level in the Vastan mine and represent Omomyoidea (Vastanomys, Suratius, compare with Omomyoidea) and Adapoidea (Marcgodinotius and Asiadapis) (4–6). Reconstructions of body mass and diet for these and other

Survivability of Copper Projectiles During Hypervelocity Impacts in Porous

Icarus 268 (2016) 102–117 Contents lists available at ScienceDirect Icarus journal homepage: www.journals.elsevier.com/icarus Survivability of copper projectiles during hypervelocity impacts in porous ice: A laboratory investigation of the survivability of projectiles impacting comets or other bodies K.H. McDermott, M.C. Price, M. Cole, M.J. Burchell Centre for Astrophysics and Planetary Science, School of Physical Sciences, University of Kent, Canterbury, Kent CT2 7NH, United Kingdom Article history: Received 27 October 2014; Revised 4 December 2015; Accepted 11 December 2015; Available online 6 January 2016 Keywords: Impact processes; Cratering; Mars; Meteorites; Comet Tempel-1 Abstract During hypervelocity impact (> a few km s⁻¹) the resulting cratering and/or disruption of the target body often outweighs interest on the outcome of the projectile material, with the majority of projectiles assumed to be vaporised. However, on Earth, fragments, often metallic, have been recovered from impact sites, meaning that metallic projectile fragments may survive a hypervelocity impact and still exist within the wall, floor and/or ejecta of the impact crater post-impact. The discovery of the remnant impactor composition within the craters of asteroids, planets and comets could provide further information regarding the impact history of a body. Accordingly, we study in the laboratory the survivability of 1 and 2 mm diameter copper projectiles fired onto ice at speeds between 1.00 and 7.05 km s⁻¹. The projectile was recovered intact at speeds up to 1.50 km s⁻¹, with no ductile deformation, but some surface pitting was observed. At 2.39 km s⁻¹, the projectile showed increasing ductile deformation and broke into two parts.

LCROSS (Lunar Crater Observation and Sensing Satellite) Observation Campaign: Strategies, Implementation, and Lessons Learned

Space Sci Rev (2012) 167:93–140 DOI 10.1007/s11214-011-9759-y LCROSS (Lunar Crater Observation and Sensing Satellite) Observation Campaign: Strategies, Implementation, and Lessons Learned Jennifer L. Heldmann · Anthony Colaprete · Diane H. Wooden · Robert F. Ackermann · David D. Acton · Peter R. Backus · Vanessa Bailey · Jesse G. Ball · William C. Barott · Samantha K. Blair · Marc W. Buie · Shawn Callahan · Nancy J. Chanover · Young-Jun Choi · Al Conrad · Dolores M. Coulson · Kirk B. Crawford · Russell DeHart · Imke de Pater · Michael Disanti · James R. Forster · Reiko Furusho · Tetsuharu Fuse · Tom Geballe · J. Duane Gibson · David Goldstein · Stephen A. Gregory · David J. Gutierrez · Ryan T. Hamilton · Taiga Hamura · David E. Harker · Gerry R. Harp · Junichi Haruyama · Morag Hastie · Yutaka Hayano · Phillip Hinz · Peng K. Hong · Steven P. James · Toshihiko Kadono · Hideyo Kawakita · Michael S. Kelley · Daryl L. Kim · Kosuke Kurosawa · Duk-Hang Lee · Michael Long · Paul G. Lucey · Keith Marach · Anthony C. Matulonis · Richard M. McDermid · Russet McMillan · Charles Miller · Hong-Kyu Moon · Ryosuke Nakamura · Hirotomo Noda · Natsuko Okamura · Lawrence Ong · Dallan Porter · Jeffery J. Puschell · John T. Rayner · J. Jedadiah Rembold · Katherine C. Roth · Richard J. Rudy · Ray W. Russell · Eileen V. Ryan · William H. Ryan · Tomohiko Sekiguchi · Yasuhito Sekine · Mark A. Skinner · Mitsuru Sôma · Andrew W. Stephens · Alex Storrs · Robert M. Suggs · Seiji Sugita · Eon-Chang Sung · Naruhisa Takatoh · Jill C. Tarter · Scott M. Taylor · Hiroshi Terada · Chadwick J. Trujillo · Vidhya Vaitheeswaran · Faith Vilas · Brian D. Walls · Jun-ihi Watanabe · William J. Welch · Charles E. Woodward · Hong-Suh Yim · Eliot F. Young Received: 9 October 2010 / Accepted: 8 February 2011 / Published online: 18 March 2011 © The Author(s) 2011. -

The Paromomyidae (Primates, Mammalia): Systematics, Evolution, and Ecology

The Paromomyidae (Primates, Mammalia): Systematics, Evolution, and Ecology by Sergi López-Torres A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy Department of Anthropology University of Toronto © Copyright by Sergi López-Torres 2017 Abstract Plesiadapiforms represent the first radiation of Primates, appearing near the Cretaceous-Paleogene boundary. Eleven families of plesiadapiforms are recognized, including the Paromomyidae. Questions surrounding this family explored in this thesis include its pattern of extinction, its phylogeny and migration, and its dietary ecology. Firstly, there is a record of misclassifying small-sized omomyoid euprimates as late-occurring paromomyid plesiadapiforms. Here, a new omomyoid from the Uintan of California is described. This material was previously thought to pertain to a paromomyid, similar to previously named supposed paromomyids Phenacolemur shifrae and Ignacius mcgrewi. The new Californian species, Ph. shifrae, and I. mcgrewi are transferred to Trogolemurini, a tribe of omomyoids. The implications of this taxonomic change are that no paromomyids are found between the early Bridgerian and the Chadronian, suggesting that the group suffered near-extinction during a period of particularly warm climate. Secondly, migration of paromomyids between North America and Europe is poorly understood. The European material (genus Arcius) is taxonomically reassessed, including emended diagnoses for all four previously named species, and description of two new Arcius species. A cladistic analysis of the European paromomyids resolves Arcius as monophyletic, implying that the European radiation of paromomyids was a product of a single migration event from North America.

Impact Impacts on the Moon, Mercury, and Europa

IMPACT IMPACTS ON THE MOON, MERCURY, AND EUROPA A dissertation submitted to the graduate division of the University of Hawaiʻi at Mānoa in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in GEOLOGY AND GEOPHYSICS March 2020 By Emily S. Costello Dissertation Committee: Paul G. Lucey, Chairperson; S. Fagents; B. Smith-Konter; N. Frazer; P. Gorham © Copyright 2020 by Emily S. Costello All Rights Reserved This dissertation is dedicated to the Moon, who has fascinated our species for millennia and fascinates us still. Acknowledgements I wish to thank my family, who have loved and nourished me, and Dr. Rebecca Ghent for opening the door to planetary science to me, for teaching me courage and strength through example, and for being a true friend. I wish to thank my advisor, Dr. Paul Lucey for mentoring me in the art of concrete impressionism. I could not have hoped for a more inspiring mentor. I finally extend my heartfelt thanks to Dr. Michael Brodie, who erased the line between "great scientist" and "great artist" that day in front of a room full of humanities students - erasing, in my mind, the barrier between myself and the beauty of physics. Abstract In this work, I reconstitute and improve upon an Apollo-era statistical model of impact gardening (Gault et al. 1974) and validate the model against the gardening implied by remote sensing and analysis of Apollo cores. My major contribution is the modeling and analysis of the influence of secondary crater-forming impacts, which dominate impact gardening.

Evidence for Exposed Water Ice in the Moon's

Icarus 255 (2015) 58–69 Contents lists available at ScienceDirect Icarus journal homepage: www.elsevier.com/locate/icarus Evidence for exposed water ice in the Moon’s south polar regions from Lunar Reconnaissance Orbiter ultraviolet albedo and temperature measurements Paul O. Hayne a, Amanda Hendrix b, Elliot Sefton-Nash c, Matthew A. Siegler a, Paul G. Lucey d, Kurt D. Retherford e, Jean-Pierre Williams c, Benjamin T. Greenhagen a, David A. Paige c a Jet Propulsion Laboratory, California Institute of Technology, Pasadena, CA 91109, United States b Planetary Science Institute, Pasadena, CA 91106, United States c University of California, Los Angeles, CA 90095, United States d University of Hawaii, Manoa, HI 96822, United States e Southwest Research Institute, Boulder, CO 80302, United States Article history: Received 25 July 2014; Revised 26 March 2015; Accepted 30 March 2015; Available online 3 April 2015 Keywords: Moon; Ices; Ices, UV spectroscopy Abstract We utilize surface temperature measurements and ultraviolet albedo spectra from the Lunar Reconnaissance Orbiter to test the hypothesis that exposed water frost exists within the Moon’s shadowed polar craters, and that temperature controls its concentration and spatial distribution. For locations with annual maximum temperatures Tmax greater than the H2O sublimation temperature of 110 K, we find no evidence for exposed water frost, based on the LAMP UV spectra. However, we observe a strong change in spectral behavior at locations perennially below 110 K, consistent with cold-trapped ice on the surface. In addition to the temperature association, spectral evidence for water frost comes from the following spectral features: (a) decreasing Lyman-α albedo, (b) decreasing ‘‘on-band’’ (129.57–155.57 nm) albedo, and (c) increasing ‘‘off-band’’ (155.57–189.57 nm) albedo.

Observing the Lunar Libration Zones

Observing the Lunar Libration Zones Alexander Vandenbohede 2005 "Many Look, Few Observe" (Harold Hill, 1991) Table of Contents: Introduction; 1 Libration and libration zones; 2 Mare Orientale; 3 South Pole; 4 Mare Australe; 5 Mare Marginis and Mare Smithii; 6 Mare Humboldtianum; 7 North Pole; 8 Oceanus Procellarum; Appendix I: Observational Circumstances and Equipment; Appendix II: Time Stratigraphical Table of the Moon and the Lunar Geological Map; Appendix III: Bibliography. Introduction – Why Observe the Libration Zones? You might think that, because the Moon always keeps the same hemisphere turned towards the Earth as a consequence of its captured rotation, we always see the same 50% of the lunar surface. Well, this is not true. Because of the complicated motion of the Moon (see chapter 1) we can see a little bit around the east and west limb and over the north and south poles. The result is that we can observe 59% of the lunar surface. This extra 9% of lunar soil is called the libration zones because the motion, a gentle wobbling of the Moon in the Earth’s sky responsible for this, is called libration. In spite of the remainder of the lunar Earth-faced side, observing and even the basic task of identifying formations in the libration zones is not easy. The formations are foreshortened and seen highly edge-on. Obviously, you will need to know when libration favours which part of the lunar limb and how much you can look around the Moon’s limb.