Evaluating Search Engines and Defining a Consensus Implementation Ahmed Kamoun, Patrick Maillé, Bruno Tuffin



Recommended publications


Evaluating Exploratory Search Engines : Designing a Set of User-Centered Methods Based on a Modeling of the Exploratory Search Process Emilie Palagi

Emilie Palagi. Evaluating exploratory search engines: designing a set of user-centered methods based on a modeling of the exploratory search process. Human-Computer Interaction [cs.HC]. Université Côte d'Azur, 2018. English. NNT: 2018AZUR4116. tel-01976017v3. HAL Id: tel-01976017, https://tel.archives-ouvertes.fr/tel-01976017v3. Submitted on 20 Feb 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. DOCTORAL THESIS: Evaluation des moteurs de recherche exploratoire : Elaboration d'un corps de méthodes centrées utilisateurs, basées sur une modélisation du processus de recherche exploratoire. Emilie PALAGI, Wimmics (Inria, I3S) and Data Science (EURECOM). Presented for the degree of Doctor of Computer Science of Université Côte d'Azur. Before the jury composed of: Christian Bastien, Professor, Université de Lorraine; Pierre De Loor, Professor, ENIB. Supervised by: Fabien Gandon

Final Study Report on CEF Automated Translation Value Proposition in the Context of the European LT Market/Ecosystem

Final study report on CEF Automated Translation value proposition in the context of the European LT market/ecosystem. FINAL REPORT. A study prepared for the European Commission DG Communications Networks, Content & Technology. Digital Single Market. This study was carried out for the European Commission by Luc MEERTENS, Khalid CHOUKRI, Stefania AGUZZI and Andrejs VASILJEVS. Internal identification: Contract number: 2017/S 108-216374; SMART number: 2016/0103. DISCLAIMER: By the European Commission, Directorate-General of Communications Networks, Content & Technology. The information and views set out in this publication are those of the author(s) and do not necessarily reflect the official opinion of the Commission. The Commission does not guarantee the accuracy of the data included in this study. Neither the Commission nor any person acting on the Commission’s behalf may be held responsible for the use which may be made of the information contained therein. ISBN 978-92-76-00783-8, doi: 10.2759/142151. © European Union, 2019. All rights reserved. Certain parts are licensed under conditions to the EU. Reproduction is authorised provided the source is acknowledged. CONTENTS: Table of figures; List of tables.

Appendix I: Search Quality and Economies of Scale

Appendix I: search quality and economies of scale. Introduction. 1. This appendix presents some detailed evidence concerning how general search engines compete on quality. It also examines network effects, scale economies and other barriers to search engines competing effectively on quality and other dimensions of competition. 2. This appendix draws on academic literature, submissions and internal documents from market participants. Comparing quality across search engines. 3. As set out in Chapter 3, the main way that search engines compete for consumers is through various dimensions of quality, including relevance of results, privacy and trust, and social purpose or rewards. Of these dimensions, the relevance of search results is generally considered to be the most important. 4. In this section, we present consumer research and other evidence regarding how search engines compare on the quality and relevance of search results. Evidence on quality from consumer research. 5. We reviewed a range of consumer research produced or commissioned by Google or Bing, to understand how consumers perceive the quality of these and other search engines. The following evidence concerns consumers’ overall quality judgements and preferences for particular search engines: 6. Google submitted the results of its Information Satisfaction tests. Google’s Information Satisfaction tests are quality comparisons that it carries out in the normal course of its business. In these tests, human raters score unbranded search results from mobile devices for a random set of queries from Google’s search logs. • Google submitted the results of 98 tests from the US between 2017 and 2020. Google outscored Bing in each test; the gap stood at 8.1 percentage points in the most recent test and 7.3 percentage points on average over the period.

The Digital Markets Act: European Precautionary Antitrust

The Digital Markets Act: European Precautionary Antitrust. AURELIEN PORTUESE | MAY 2021. The European Commission has set out to ensure digital markets are “fair and contestable.” But in a paradigm shift for antitrust enforcement, its proposal would impose special regulations on a narrowly defined set of “gatekeepers.” Contrary to its intent, this will deter innovation, and hold back small and medium-sized firms, to the detriment of the economy. KEY TAKEAWAYS
▪ The Digital Markets Act (DMA) arbitrarily distinguishes digital from non-digital markets, even though digital distribution is just one of many ways firms reach end users. It should assess competition comprehensively instead of discriminating.
▪ The DMA’s nebulous concept of a digital “gatekeeper” entrenches large digital firms and discourages them from innovating to compete, and it creates a threshold effect for small and mid-sized firms, because it deters successful expansion.
▪ This represents a paradigm shift from ex post antitrust enforcement toward ex ante regulatory compliance (albeit for a narrowly selected set of companies) and a seminal victory for the precautionary principle over innovation.
▪ By distorting innovation incentives instead of enhancing them, the DMA’s model of “precautionary antitrust” threatens the vitality, dynamism, and competitive fairness of Europe’s economy to the detriment of consumers and firms of all sizes.
▪ Given its fundamental flaws, the DMA can only be improved at the margins. The first steps should be leveling the playing field with reforms that apply to all firms, not just “digital” markets, and eliminating the nebulous “gatekeeper” concept.
▪ Authorities in charge of market-investigation rules need to be separated from antitrust enforcers; they need guidance and capacity for evidence-based fact-finding; and they should analyze competition issues dynamically, focusing on the long term.

Written Statement for the Record by Megan Gray, General Counsel And

Written Statement for the Record by Megan Gray, General Counsel and Policy Advocate for DuckDuckGo, for a hearing entitled "Online Platforms and Market Power, Part 2: Innovation and Entrepreneurship" before The House Judiciary Subcommittee on Antitrust, Commercial and Administrative Law. Rep. David Cicilline, Chair; Rep. James Sensenbrenner, Ranking Member. Tuesday, July 16, 2019. DuckDuckGo is a privacy technology company that helps consumers stay more private online. DuckDuckGo has been competing in the U.S. search market for over a decade, and it is currently the 4th largest search engine in this market (see market share section below). From the vantage point of a company vigorously trying to compete, DuckDuckGo can hopefully provide useful background on the U.S. search market. Features of Competitive General Search Engines: A competitive U.S. general search engine in 2019 must have a set of high-quality search features, and ensure none are substandard or shown at the wrong times. This set of mandatory high-quality search features includes: an up-to-date index of most all of the English web pages on the Internet (referred to as “organic links”); maps; local business answers (e.g., restaurant addresses and phone numbers); news; images; videos; products/shopping; definitions; Wikipedia reference; quick answers (calculator, conversions, etc.). Additional niche features may also be necessary to be competitive with particular consumer segments, such as: up-to-date indexes of web pages in other languages; sports scores; airplane flight information; question/answer reference (e.g., for computer programming); lyrics. When DuckDuckGo launched in 2008, this list was much smaller, and arguably just one item was a required feature: organic links (sometimes referred to as “the ten blue links”).

Evaluating the Suitability of Web Search Engines As Proxies for Knowledge Discovery from the Web

Available online at www.sciencedirect.com. ScienceDirect. Procedia Computer Science 96 (2016) 169–178. 20th International Conference on Knowledge Based and Intelligent Information and Engineering Systems, KES2016, 5-7 September 2016, York, United Kingdom. Evaluating the suitability of Web search engines as proxies for knowledge discovery from the Web. Laura Martínez-Sanahuja*, David Sánchez. UNESCO Chair in Data Privacy, Department of Computer Engineering and Mathematics, Universitat Rovira i Virgili, Av. Països Catalans, 26, 43007 Tarragona, Catalonia, Spain. Abstract: Many researchers use the Web search engines’ hit count as an estimator of the Web information distribution in a variety of knowledge-based (linguistic) tasks. Even though many studies have been conducted on the retrieval effectiveness of Web search engines for Web users, few of them have evaluated them as research tools. In this study we analyse the currently available search engines and evaluate the suitability and accuracy of the hit counts they provide as estimators of the frequency/probability of textual entities. From the results of this study, we identify the search engines best suited to be used in linguistic research. © 2016 The Authors. Published by Elsevier B.V. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/). Peer-review under responsibility of KES International. Keywords: Web search engines; hit count; information distribution; knowledge discovery; semantic similarity; expert systems. 1. Introduction. Expert and knowledge-based systems rely on data to build the knowledge models required to perform inferences or answer questions.

OSINT Handbook September 2020

OPEN SOURCE INTELLIGENCE TOOLS AND RESOURCES HANDBOOK 2020. Aleksandra Bielska, Noa Rebecca Kurz, Yves Baumgartner, Vytenis Benetis. Foreword: I am delighted to share with you the 2020 edition of the OSINT Tools and Resources Handbook. Once again, the Handbook has been revised and updated to reflect the evolution of this discipline, and the many strategic, operational and technical challenges OSINT practitioners have to grapple with. Given the speed of change on the web, some might question the wisdom of pulling together such a resource. What’s wrong with the Top 10 tools, or the Top 100? There are only so many resources one can bookmark after all. Such arguments are not without merit. My fear, however, is that they are also shortsighted. I offer four reasons why. To begin, a shortlist betrays the widening spectrum of OSINT practice. Whereas OSINT was once the preserve of analysts working in national security, it now embraces a growing class of professionals in fields as diverse as journalism, cybersecurity, investment research, crisis management and human rights. A limited toolkit can never satisfy all of these constituencies. Second, a good OSINT practitioner is someone who is comfortable working with different tools, sources and collection strategies. The temptation toward narrow specialisation in OSINT is one that has to be resisted. Why? Because no research task is ever as tidy as the customer’s requirements are likely to suggest. Third is the inevitable realisation that good tool awareness is equivalent to good source awareness. Indeed, the right tool can determine whether you harvest the right information.

TII Internet Intelligence Newsletter - November 2013 Edition

TII Internet Intelligence Newsletter - November 2013 Edition. http://us7.campaign-archive1.com/?u=1e8eea4e4724fcca7714ef623... “The success of every one of our customers is our priority and we work diligently to produce and maintain the very best training materials in a format that is effective, enjoyable, interesting and easily understood.” Julie Clegg, President, Toddington International Inc. Toddington International Inc. Online Open Source Intelligence Newsletter, NOVEMBER 2013 EDITION. IN THIS EDITION: TOR TAILS: ONLINE ANONYMITY AND SECURITY TAKEN TO THE NEXT LEVEL; FINDING UNKNOWN FACEBOOK AND TWITTER ACCOUNTS WITH KNOWN EMAIL ADDRESSES AND MOBILE PHONE NUMBERS; SUGGESTING A NEW MONEY LAUNDERING MODEL; USEFUL SITES AND RESOURCES FOR ONLINE INVESTIGATORS; ...ALSO OF INTEREST. WELCOME TO THE NOVEMBER EDITION OF THE TII ONLINE RESEARCH AND INTELLIGENCE NEWSLETTER. This month we are pleased to bring you articles by guest authors Allan Rush and Mike Ryan as well as our regular listings of Research Resources and articles of interest to the Open Source Intelligence professional. The last 6 weeks have been busy with open and bespoke courses being delivered to private and public sector clients in North America, Europe and Asia. We’ve been seeing an increase in enrollment on our Using the Internet as an Investigative Research Tool™ and the Introduction to Intelligence Analysis e-Learning programs, and interest in our new courses relating to Financial Crime, Online Security and OSINT Project Management has been high, with clients now booking in-house training courses in these subject areas. We’re pleased to be speaking at a number of conferences over the next few weeks, including the 7th Annual HTCIA Asia Pacific Training Conference running December 2nd - 4th in Hong Kong and the Breaking the Silence: A Violence Against Women Prevention and Response Symposium hosted by the Ending Violence Association of British Columbia, November 27th - 29th in Richmond, BC.

Package ‘RWsearch’: Lazy Search in R Packages, Task Views, CRAN, the Web. All-in-One Download

Package ‘RWsearch’, June 5, 2021. Title: Lazy Search in R Packages, Task Views, CRAN, the Web. All-in-One Download. Description: Search by keywords in R packages, task views, CRAN, the web and display the results in the console or in txt, html or pdf files. Download the package documentation (html index, README, NEWS, pdf manual, vignettes, source code, binaries) with a single instruction. Visualize the package dependencies and CRAN checks. Compare the package versions, unload and install the packages and their dependencies in a safe order. Explore CRAN archives. Use the above functions for task view maintenance. Access web search engines from the console thanks to 80+ bookmarks. All functions accept standard and non-standard evaluation. Version: 4.9.3. Date: 2021-06-05. Depends: R (>= 3.4.0). Imports: brew, latexpdf, networkD3, sig, sos, XML. Suggests: ctv, cranly, findR, foghorn, knitr, pacman, rmarkdown, xfun. Author: Patrice Kiener [aut, cre] (<https://orcid.org/0000-0002-0505-9920>). Maintainer: Patrice Kiener <[email protected]>. License: GPL-2. Encoding: UTF-8. LazyData: false. NeedsCompilation: no. Language: en-GB. VignetteBuilder: knitr. RoxygenNote: 7.1.1. Repository: CRAN. Date/Publication: 2021-06-05 13:20:08 UTC. R topics documented: RWsearch-package, archivedb, checkdb, cnsc, crandb, cranmirrors, e_check, funmaintext, f_args, f_pdf, h_direct, h_engine, h_R, h_ttp, p_archive, p_check, p_deps, p_display, p_down, p_graph, p_html, p_inst, p_inun, p_table2pdf, p_text2pdf, p_unload_all

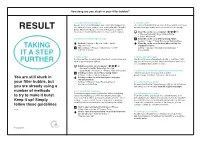

How Deep Are You Stuck in Your Filter Bubble?

How deep are you stuck in your filter bubble? Result: taking it a step further.
1. Use different search engines. Google stores your information and creates a tracking profile. Use different search engines, such as DuckDuckGo, MetaGer, Gexsi, Qwant, Startpage or Ecosia. Safety, privacy and no tracking are of primary importance to those search engines.
2. Disable personalised ads for apps. Android: Settings → Google → Ads → Select ‘Opt out of Ads’. iOS: Settings → Privacy → Advertising → Enable ‘Limit Ad Tracking’.
3. Delete cookies. Cookies are files stored by web sites which contain information such as personal page settings. Deleting cookies on a computer: Chrome/Firefox/MS Edge: Ctrl+Shift+Del; Safari: Menu → Preferences → Privacy → ‘Block All Cookies’. Deleting cookies on an iPhone using Safari:
5. Clear the cache. The cache is the buffer memory on a PC. If you want to erase your movements in your web browser, you should clear it regularly. Clear the cache on a computer: Chrome/Firefox/MS Edge: Ctrl+Shift+Del; Safari: Alt+Cmd+E. Clear the cache on an iPhone using Safari: Settings → Safari → Clear History and Website Data. Clear the cache on an Android phone using the standard browser: Settings → Storage → Internal Shared Storage → Cached Data.
6. Explore alternative apps. Use Forgotify as an alternative to Spotify or YouTube. It will help you discover music that others don’t listen to and that is not classified by the number of clicks.
7. Enable the ‘Do not track’ option in your web browser. Tell web sites not to track your web activities: Settings

Doc Demo-Day

DBS Digital Business Strategy, Feb 7-9, 2019. GLOBAL PITCH: We are a company named _______ and we need to present an MVP (minimum viable product) plus all the marketing material to investors. We have three days to prepare the show. DEMOS: This is the list of demos done during the class. WordPress.org / vs .com Collaborative Work Alternative: AWS, Gandi TRELLO / Scrumblr xMind Scrumblr on Github MindMapping Slack Taxonomy of digital tools Alternative: Jira Atlassian Alternative: mindmeister Kick off Gantt / GoogleDrive (Gantter) SurveyMonkey TIPS Find a name QrCode / Unitag Read with Chrome for iOS Android Alternative: Google Forms Made with Unitag.io Mail Chimp (ESP) Alternative: made with a chrome Why use an ESP? extension First email URL Shortener: goo.gl Doodle Project work Browser (Chrome / Firefox) Omnibox GoogleTrends Search Zone Key Words Choice helper SEOspike.com Search Engine Qwant, Baidu, Yandex, Naver, Bing, Whois Registrar FTP Yahoo!, Yahoo Japan, seznam.cz … and OVH Google FileZilla chatbots messenger SNCF & Meetic Alternative: Gandi Search tips CMS & web hosting Site: OVH Filetype: Canva / Pixlr W tab Sketch3 / iDraw OFFICE : PPT (masque / master) / Word (style) XLS pivot table Excel (TCD) Presentation PASTE (53) / Prezi / Slide Programming Xcode Advertising Swift programming MyBusiness / GoogleMaps HTML CSS JS Google AdWords / Google 360 Template: SalesForce http://html5doctor.com/ http://www.w3schools.com/ Merkato Android Studio Curation Tools API Scoop.it IFTTT ZAPIER Pocket SquareUp / Stripe / Twilio Storify Pearltrees TXT

Privacy-Handbuch Windows (PDF)

Privacy-Handbuch: Low-trace browsing with Mozilla Firefox, e-mail with Thunderbird, using anonymization services and encrypting data, for WINDOWS. 2 October 2021. Table of contents: 1 Scroogled; 2 Attacks on privacy; 2.1 Big Data - the customer is whoever pays; 2.1.1 Google; 2.1.2 Other data collectors; 2.2 Techniques of the data collectors; 2.3 Trends in the field of tracking; 2.4 Crypto War 3.0; 2.5 Fake news debate; 2.5.1 The fight against fake news; 2.5.2 Fake news examples; 2.5.3 Media literacy training; 2.5.4 Fake news or propaganda - what is the difference?; 2.6 Geotagging; 2.7 Communication analysis; 2.8 Surveillance on the Internet; 2.9 Terrorism and the expansion of surveillance; 2.10 But I have nothing to hide; 3 Digital Aikido; 3.1 Thinking it through; 3.2 An example; 3.3 Downsides of anonymity; 3.4 Effective use of cryptography; 4 Low-trace browsing; 4.1 Choosing the web browser