Neurotribes: the Legacy of Autism and the Future of Neurodiversity

Recommended publications

Neuro Tribes

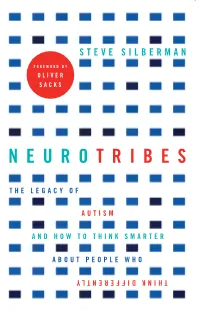

NeuroTribes: The Legacy of Autism and How to Think Smarter About People Who Think Differently. Steve Silberman. Foreword by Oliver Sacks. ‘NeuroTribes is a sweeping and penetrating history, presented with a rare sympathy and sensitivity ... it will change how you think of autism.’ (From the foreword by Oliver Sacks.) What is autism: a devastating developmental disorder, a lifelong disability, or a naturally occurring form of cognitive difference akin to certain forms of genius? In truth, it is all of these things and more, and the future of our society depends on our understanding it. Following on from his groundbreaking article ‘The Geek Syndrome’, Wired reporter Steve Silberman unearths the secret history of autism, long suppressed by the same clinicians who became famous for identifying it, and discovers why the number of diagnoses has soared in recent years. Going back to the earliest autism research and chronicling the brave and lonely journey of autistic people and their families through the decades, Silberman provides long-sought solutions to the autism puzzle, while mapping out a path towards a more humane world in which people with learning differences have access to the resources they need to live happier and more meaningful lives. He reveals the untold story of Hans Asperger, whose ‘little professors’ were targeted by the darkest social-engineering experiment in human history; exposes the covert campaign by child psychiatrist Leo Kanner to suppress knowledge of the autism spectrum for fifty years; and casts light on the growing movement of ‘neurodiversity’ activists seeking respect, accommodations in the workplace and education, and the right to self-determination for those with cognitive differences.

Autism Entangled – Controversies Over Disability, Sexuality, and Gender in Contemporary Culture

Autism Entangled – Controversies over Disability, Sexuality, and Gender in Contemporary Culture. Toby Atkinson BA, MA. This thesis is submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy. Sociology Department, Lancaster University, February 2021. Declaration: I declare that this thesis is my own work and has not been submitted in substantially the same form for the award of a higher degree elsewhere. Furthermore, I declare that the word count of this thesis, 76940 words, does not exceed the permitted maximum. Toby Atkinson, February 2021. Acknowledgements: I want to thank my supervisors Hannah Morgan, Vicky Singleton, and Adrian Mackenzie for the invaluable support they offered throughout the writing of this thesis. I am grateful as well to Celia Roberts and Debra Ferreday for reading earlier drafts of material featured in several chapters. The research was made possible by financial support from Lancaster University and the Economic and Social Research Council. I also want to thank the countless friends, colleagues, and family members who have supported me during the research process over the last four years. Contents: DECLARATION; ACKNOWLEDGEMENTS; ABSTRACT; PART ONE: ...

Autism Society History

Feature | Autism Society History. Where We’ve Been and Where We’re Going: The Autism Society’s Proud History. As the nation’s oldest grassroots autism organization, the Autism Society has been working to improve the lives of all affected by autism for 46 years. When the organization was founded in 1965, the autism community was very different than it is today: the term “autism” was not widely known, and doctors often blamed the condition on “refrigerator mothers,” or parents who were cold and unloving to their children – a theory that we now know to be completely false. Perhaps even more discouraging than the blame and guilt placed upon parents of children with autism at this time was the complete lack of treatment options. Parents were often told that their child would never improve, and that he or she should be institutionalized. “All practitioners we saw had one characteristic in common – none of them was able to undertake treatment,” wrote Rosalind Oppenheim, mother to a then-6-year-old son with autism, in an article in the June 17, 1961, Saturday Evening Post. “‘When will you stop running?’ one well-meaning guidance counselor asked us along the way. When indeed? After eighteen costly, heartbreaking months we felt that we had exhausted all the local medical resources.” Oppenheim’s article garnered many letters from other parents of children with autism who had had similar experiences. She sent them on to Dr. Bernard Rimland, another parent of a child with autism who was also a psychologist. Not long after, Dr. Rimland published the landmark book Infantile Autism, the first work to argue for a physical, not psychological, cause of autism.

Autism and New Media: Disability Between Technology and Society

Autism and new media: Disability between technology and society. Amit Pinchevski (lead author), Department of Communication and Journalism, The Hebrew University of Jerusalem, Israel; John Durham Peters, Department of Communication Studies, University of Iowa, USA. Abstract: This paper explores the elective affinities between autism and new media. Autistic Spectrum Disorder (ASD) provides a uniquely apt case for considering the conceptual link between mental disability and media technology. Tracing the history of the disorder through its various media connections and connotations, we propose a narrative of the transition from impaired sociability in person to fluent social media by network. New media introduce new affordances for people with ASD: The Internet provides habitat free of the burdens of face-to-face encounters; high-tech industry fares well with the purported special abilities of those with Asperger’s syndrome; and digital technology offers a rich metaphorical depository for the condition as a whole. Running throughout is a gender bias that brings communication and technology into the fray of biology versus culture. Autism invites us to rethink our assumptions about communication in the digital age, accounting for both the pains and possibilities it entails. Keywords: Autism (ASD), Asperger’s Syndrome, new media, disability studies, interaction and technology, computer-mediated communication, gender and technology. Authors’ bios: Amit Pinchevski is a senior lecturer in the Department of Communication and Journalism at the Hebrew University, Israel. He is the author of By Way of Interruption: Levinas and the Ethics of Communication (2005) and co-editor of Media Witnessing: Testimony in the Age of Mass Communication (with Paul Frosh, 2009) and Ethics of Media (with Nick Couldry and Mirca Madianou, 2013).

Becoming Autistic: How Do Late Diagnosed Autistic People

Becoming Autistic: How do Late Diagnosed Autistic People Assigned Female at Birth Understand, Discuss and Create their Gender Identity through the Discourses of Autism? Emily Violet Maddox. Submitted in accordance with the requirements for the degree of Master of Philosophy. The University of Leeds, School of Sociology and Social Policy, September 2019. Table of Contents: ACKNOWLEDGEMENTS; ABSTRACT; ABBREVIATIONS; CHAPTER ONE: INTRODUCTION; 1.1 RESEARCH OBJECTIVES; 1.2 TERMINOLOGY; 1.3 OUTLINE OF CHAPTERS

Best Kept Secret a Film by Samantha Buck

POV Community Engagement & Education Discussion Guide. Best Kept Secret: A Film by Samantha Buck. www.pbs.org/pov. LETTER FROM THE FILMMAKER: The first question I get about Best Kept Secret is usually “What is your personal relationship to autism?” Until making this film, I always thought the answer was “none.” What I learned is that we are all connected to it. Autism is part of who we are as a society. Across the country, young adults who turn 21 are pushed out of the school system. They often end up with nowhere to go; they simply disappear from productive society. This is what educators call “falling off the cliff.” While I was on the festival circuit with my last feature documentary, 21 Below, I saw many films about young children with autism. These films were moving and important, but they only spoke of a limited population, predominately Caucasians from financially stable families. But what happens to children with autism who grow up in other circumstances? I began to research public schools in inner-city areas all over the United States, and the best kind of accident of fate brought me to JFK in Newark, N.J. and Janet Mino, a force of nature who changed my life. She has been a constant reminder to have faith, value every member of society and believe in people’s potential. Best Kept Secret on the surface could seem like a straightforward vérité film, but we tried to accomplish something different: a subtle and layered story that takes on issues of race, class, poverty and disability through a different lens than the one to which many people are accustomed.

Autism: a Generation at Threat Review Article

April 2009 JIMA. Review Article. Autism: A Generation at Threat. Sabeeha Rehman, MPS, FACHE, President and Founding Member of National Autism Association New York Metro Chapter, New York, New York; Fellow of American College of Healthcare Executives. Abstract: A generation of children is at risk as the incidence of autism increases. No consensus exists on the causes of autism or its treatment. A wide range of potential factors such as genetics, vaccines, and environmental toxins is being explored. Some clinicians offer a combination of behavioral, educational, and pharmaceutical treatments, whereas allied health professionals stress diet, biomedical interventions, and chelation. With a lack of an organized approach to diagnosis and treatment and a lack of trained professionals, children are not receiving timely interventions. Curing autism has to be made a national priority. National programs should conduct research, standardize treatments, and offer public and professional education. Key words: Autism, autism spectrum disorders, health planning. Purpose: The incidence of autism spectrum disorders (ASD) is becoming an epidemic. This phenomenon, not unique to the United States or Europe, is fast emerging as a worldwide problem. This paper reviews the gains made and obstacles faced in the diagnosis and treatment of ASD and proposes a comprehensive plan to deal with this phenomenon. ... impact the normal development of the brain in the areas of social interaction, communication skills, and cognitive function. Individuals with ASD typically have difficulties in verbal and nonverbal communication, social interactions, and leisure or play activities. Individuals with ASD often suffer from numerous physical ailments, which may include, in part,

Disability in an Age of Environmental Risk by Sarah Gibbons a Thesis

Disablement, Diversity, Deviation: Disability in an Age of Environmental Risk, by Sarah Gibbons. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Doctor of Philosophy in English. Waterloo, Ontario, Canada, 2016. © Sarah Gibbons 2016. I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Abstract: This dissertation brings disability studies and postcolonial studies into dialogue with discourse surrounding risk in the environmental humanities. The central question that it investigates is how critics can reframe and reinterpret existing threat registers to accept and celebrate disability and embodied difference without passively accepting the social policies that produce disabling conditions. It examines the literary and rhetorical strategies of contemporary cultural works that one, promote a disability politics that aims for greater recognition of how our environmental surroundings affect human health and ability, but also two, put forward a disability politics that objects to devaluing disabled bodies by stigmatizing them as unnatural. Some of the major works under discussion in this dissertation include Marie Clements’s Burning Vision (2003), Indra Sinha’s Animal’s People (2007), Gerardine Wurzburg’s Wretches & Jabberers (2010) and Corinne Duyvis’s On the Edge of Gone (2016). The first section of this dissertation focuses on disability, illness, industry, and environmental health to consider how critics can discuss disability and environmental health in conjunction without returning to a medical model in which the term ‘disability’ often designates how closely bodies visibly conform or deviate from definitions of the normal body.

The Impact of a Diagnosis of Autism Spectrum Disorder on Nonmedical Treatment Options in the Learning Environment from the Perspectives of Parents and Pediatricians

St. John Fisher College, Fisher Digital Publications. Education Doctoral, Ralph C. Wilson, Jr. School of Education, 12-2017. The Impact of a Diagnosis of Autism Spectrum Disorder on Nonmedical Treatment Options in the Learning Environment from the Perspectives of Parents and Pediatricians. Cecilia Scott-Croff, St. John Fisher College, [email protected]. Follow this and additional works at: https://fisherpub.sjfc.edu/education_etd. Part of the Education Commons. How has open access to Fisher Digital Publications benefited you? Recommended Citation: Scott-Croff, Cecilia, "The Impact of a Diagnosis of Autism Spectrum Disorder on Nonmedical Treatment Options in the Learning Environment from the Perspectives of Parents and Pediatricians" (2017). Education Doctoral. Paper 341. Please note that the Recommended Citation provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/education_etd/341 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. Abstract: The purpose of this qualitative study was to identify the impact of a diagnosis of autism spectrum disorder on treatment options available, within the learning environment, at the onset of a diagnosis of autism spectrum disorder (ASD) from the perspective of parents and pediatricians. Utilizing a qualitative methodology to identify codes, themes, and sub-themes through semi-structured interviews, the research captures the lived experiences of five parents with children on the autism spectrum and five pediatricians who cared for those children and families.

The Diagnosis of Autism: from Kanner to DSM‑III to DSM‑5 and Beyond

Journal of Autism and Developmental Disorders, https://doi.org/10.1007/s10803-021-04904-1. S:I AUTISM IN REVIEW: 1980-2020: 40 YEARS AFTER DSM-III. The Diagnosis of Autism: From Kanner to DSM‑III to DSM‑5 and Beyond. Nicole E. Rosen · Catherine Lord · Fred R. Volkmar. Accepted: 27 January 2021. © The Author(s) 2021. Abstract: In this paper we review the impact of DSM-III and its successors on the field of autism, both in terms of clinical work and research. We summarize the events leading up to the inclusion of autism as a “new” official diagnostic category in DSM-III, the subsequent revisions of the DSM, and the impact of the official recognition of autism on research. We discuss the uses of categorical vs. dimensional approaches and the continuing tensions around broad vs. narrow views of autism. We also note some areas of current controversy and directions for the future. Keywords: Autism · History · Dimensional · Categorical · DSM. It has now been nearly 80 years since Leo Kanner’s (1943) classic description of infantile autism. Official recognition of this condition took almost 40 years; several lines of evidence became available in the 1970s that demonstrated the validity of the diagnostic concept, clarified early misperceptions about autism, and illustrated the need for clearer approaches ... first described by Kanner has changed across the past few decades. When we refer to the concept in general, we will use the term autism, and when we refer to particular, earlier diagnostic constructs, we will use more specific terms like autism spectrum disorder, infantile autism, and autistic disorder.

Educational Inclusion for Children with Autism in Palestine. What Opportunities Can Be Found to Develop Inclusive Educational Pr

EDUCATIONAL INCLUSION FOR CHILDREN WITH AUTISM IN PALESTINE. What opportunities can be found to develop inclusive educational practice and provision for children with autism in Palestine; with special reference to the developing practice in two educational settings? by ELAINE ASHBEE. A thesis submitted to the University of Birmingham for the degree of DOCTOR OF PHILOSOPHY. School of Education, University of Birmingham, November 2015. University of Birmingham Research Archive, e-theses repository. This unpublished thesis/dissertation is copyright of the author and/or third parties. The intellectual property rights of the author or third parties in respect of this work are as defined by The Copyright Designs and Patents Act 1988 or as modified by any successor legislation. Any use made of information contained in this thesis/dissertation must be in accordance with that legislation and must be properly acknowledged. Further distribution or reproduction in any format is prohibited without the permission of the copyright holder. Amendments to names used in thesis: The Amira Basma Centre is now known as Jerusalem Princess Basma Centre. Friends Girls School is now known as Ramallah Friends Lower School. ABSTRACT: This study investigates inclusive educational understandings, provision and practice for children with autism in Palestine, using a qualitative, case study approach and a dimension of action research together with participants from two educational settings. In addition, data about the wider context was obtained through interviews, visits, observations and focus group discussions. Despite the extraordinarily difficult context, education was found to be highly valued and Palestinian educators, parents and decision-makers had achieved impressive progress. The research found that autism is an emerging field of interest with a widespread desire for better understanding.

Building Empathy Autism and the Workplace Katie Gaudion

Building Empathy: Autism and the Workplace. Katie Gaudion. About the research partners. The Kingwood Trust: Kingwood is a registered charity providing support for adults and young people with autism. Its mission is to pioneer best practice which acknowledges and promotes the potential of people with autism and to disseminate this practice and influence the national agenda. Kingwood is an independent charity and company limited by guarantee. www.kingwood.org.uk The Helen Hamlyn Centre for Design, Royal College of Art: The Helen Hamlyn Centre for Design provides a focus for people-centred design research and innovation at the Royal College of Art, London. Originally founded in 1991 to explore the design implications of an ageing society, the centre now works to advance a socially inclusive approach to design through practical research and projects with industry. Its Research Associates programme teams new RCA graduates with business and voluntary sector partners. www.hhcd.rca.ac.uk BEING: BEING was commissioned by The Kingwood Trust to shape and manage this groundbreaking project with the Helen Hamlyn Centre for Design. BEING is a specialist business consultancy that helps organisations in the public, private or charitable sectors achieve their goals through the effective application and management of design. www.beingdesign.co.uk Contents: Foreword; Introduction; Context: Autism and work; Research Methods; Co-creation Workshop; Autism and Empathy; Findings: