Large Scale Computing and Storage Requirements for Basic Energy Sciences: Target 2017 Report of the NERSC Requirements Review Conducted October 8–9, 2013

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Copyrighted Material

PART I METHODS COPYRIGHTED MATERIAL CHAPTER 1 Overview of Thermochemistry and Its Application to Reaction Kinetics ELKE GOOS Institute of Combustion Technology, German Aerospace Center (DLR), Stuttgart, Germany ALEXANDER BURCAT Faculty of Aerospace Engineering, Technion - Israel Institute of Technology, Haifa, Israel 1.1 HISTORY OF THERMOCHEMISTRY Thermochemistry deals with energy and enthalpy changes accompanying chemical reactions and phase transformations and gives a first estimate of whether a given reaction can occur. To our knowledge, the field of thermochemistry started with the experiments done by Malhard and Le Chatelier [1] with gunpowder and explosives. The first of their two papers of 1883 starts with the sentence: “All combustion is accompanied by the release of heat that increases the temperature of the burned bodies.” In 1897, Berthelot [2], who also experimented with explosives, published his two-volume monograph Thermochimie in which he summed up 40 years of calori- metric studies. The first textbook, to our knowledge, that clearly explained the principles of thermochemical properties was authored by Lewis and Randall [3] in 1923. Thermochemical data, actually heats of formation, were gathered, evaluated, and published for the first time in the seven-volume book International Critical Tables of Numerical Data, Physics, Chemistry and Technology [4] during 1926–1930 (and the additional index in 1933). In 1932, the American Chemical Society (ACS) monograph No. 60 The Free Energy of Some Organic Compounds [5] appeared. Rate Constant Calculation for Thermal Reactions: Methods and Applications, Edited by Herbert DaCosta and Maohong Fan. Ó 2012 John Wiley & Sons, Inc. Published 2012 by John Wiley & Sons, Inc. 3 4 OVERVIEW OF THERMOCHEMISTRY AND ITS APPLICATION TO REACTION KINETICS In 1936 was published The Thermochemistry of the Chemical Substances [6] where the authors Bichowsky and Rossini attempted to standardize the available data and published them at a common temperature of 18C (291K) and pressure of 1 atm. -

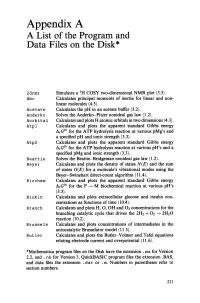

Appendix a a List of the Program and Data Files on the Disk*

Appendix A A List of the Program and Data Files on the Disk* 2dnrnr Simulates a IH COSY two-dimensional NMR plot (5.5). Abc Calculates principal moments of inertia for linear and non linear molecules (4.5). Acetate Calculates the pH in an acetate buffer (3.2). Anderko Solves the Anderko-Pitzer nonideal gas law (1.2). Aorbital Calculates and plots H atomic orbitals in two dimensions (4.3). Atpl Calculates and plots the apparent standard Gibbs energy I:irGo 1 for the ATP hydrolysis reaction at various pMg's and a specified pH and ionic strength (3.3). Atp2 Calculates and plots the apparent standard Gibbs energy I:irGo 1 for the ATP hydrolysis reaction at various pH's and a specified pMg and ionic strength (3.3). Beattie Solves the Beattie-Bridgeman nonideal gas law (1.2). Beyer Calculates and plots the density of states N(E) and the sum of states G(E) for a molecule's vibrational modes using the Beyer-Swinehart direct-count algorithm (11.4). Biochern Calculates and plots the apparent standard Gibbs energy I:irGo 1 for the F -+ M biochemical reaction at various pH's (3.3). Biokin Calculates and plots extracellular glucose and insulin con centrations as functions of time (10.4). Branch Calculates and plots H, 0, OH and 02 concentrations for the branching catalytic cycle that drives the 2H2 + 02 -+ 2H20 reaction (10.2). Brussels Calculates and plots concentrations of intermediates in the autocatalytic Brusselator model (11.5). Butler Calculates and plots the Butler-Volmer and Tafel equations relating electrode current and overpotential (11.6). -

Clefs CEA N°60

No. 60 clefsSummer 2011 Chemistry is everywhere No. 60 - Summer 2011 clefs Chemistry is everywhere www.cea.fr No. 60 Summer 2011 clefs Chemistry is everywhere Chemistry 2 Foreword, by Valérie Cabuil is everywhere I. NUCLEAR CHEMISTRY Clefs CEA No. 60 – SUMMER 2011 4 Introduction, by Stéphane Sarrade Main cover picture Dyed polymers for photovoltaic cells. 6 Advances in the separation For many years, CEA has been applying chemistry of actinides, all aspects of chemistry, in all its forms. Chemistry is at the very heart of all its by Pascal Baron major programs, whether low-carbon 10 The chemical specificities energies (nuclear energy and new energy technologies), biomedical and of actinides, environmental technologies or the by Philippe Moisy information technologies. 11 Uranium chemistry: significant P. Avavian/CEA – C. Dupont/CEA advances, Inset by Marinella Mazzanti top: Placing corrosion samples in a high-temperature furnace. 12 Chemistry and chemical P. Stroppa/CEA engineering, the COEX process, by Stéphane Grandjean bottom: Gas sensors incorporating “packaged” NEMS. P. Avavian/CEA 13 Supercritical fluids in chemical Pictogram on inside pages processes, © Fotolia by Audrey Hertz and Frédéric Charton Review published by CEA Communication Division 14 The chemistry of corrosion, Bâtiment Siège by Damien Féron, Christophe Gallé 91191 Gif-sur-Yvette Cedex (France) and Stéphane Gin Phone: + 33 (0)1 64 50 10 00 Fax (editor’s office): + 33 (0)1 64 50 17 22 14 17 Focus A Advances in modeling Executive publisher Xavier Clément in chemistry, by Philippe Guilbaud, Editor in chief Jean-Pierre Dognon, Didier Mathieu, 21 Understanding the chemical Marie-José Loverini (until 30/06/2011) Christophe Morell, André Grand mechanisms of radiolysis and Pascale Maldivi by Gérard Baldacchino Deputy editor Martine Trocellier [email protected] Scientific committee Bernard Bonin, Gilles Damamme, Céline Gaiffier, Étienne Klein, II. -

Chemical File Format Conversion Tools : a N Overview

International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 Vol. 3 Issue 2, February - 2014 Chemical File Format Conversion Tools : A n Overview Kavitha C. R Dr. T Mahalekshmi Research Scholar, Bharathiyar University Principal Dept of Computer Applications Sree Narayana Institute of Technology SNGIST Kollam, India Cochin, India Abstract— There are a lot of chemical data stored in large different chemical file formats. Three types of file format databases, repositories and other resources. These data are used conversion tools are discussed in section III. And the by different researchers in different applications in various areas conclusion is given in section IV followed by the references. of chemistry. Since these data are stored in several standard chemical file formats, there is a need for the inter-conversion of II. CHEMICAL FILE FORMATS chemical structures between different formats because all the formats are not supported by various software and tools used by A chemical is a collection of atoms bonded together the researchers. Therefore it becomes essential to convert one file in space. The structure of a chemical makes it unique and format to another. This paper reviews some of the chemical file gives it its physical and biological characteristics. This formats and also presents a few inter-conversion tools such as structure is represented in a variety of chemical file formats. Open Babel [1], Mol converter [2] and CncTranslate [3]. These formats are used to represent chemical structure records and its associated data fields. Some of the file Keywords— File format Conversion, Open Babel, mol formats are CML (Chemical Markup Language), SDF converter, CncTranslate, inter- conversion tools. -

Development of a High-Density Initiation Mechanism for Supercritical Nitromethane Decomposition

The Pennsylvania State University The Graduate School DEVELOPMENT OF A HIGH-DENSITY INITIATION MECHANISM FOR SUPERCRITICAL NITROMETHANE DECOMPOSITION A Thesis in Mechanical Engineering by Christopher N. Burke © 2020 Christopher N. Burke Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science August 2020 The thesis of Christopher N. Burke was reviewed and approved by the following: Richard A. Yetter Professor of Mechanical Engineering Thesis Co-Advisor Adrianus C. van Duin Professor of Mechanical Engineering Thesis Co-Advisor Jacqueline A. O’Connor Professor of Mechanical Engineering Karen Thole Professor of Mechanical Engineering Mechanical Engineering Department Head ii Abstract: This thesis outlines the bottom-up development of a high pressure, high- density initiation mechanism for nitromethane decomposition. Using reactive molecular dynamics (ReaxFF), a hydrogen-abstraction initiation mechanism for nitromethane decomposition that occurs at initial supercritical densities of 0.83 grams per cubic centimeter was investigated and a mechanism was constructed as an addendum for existing mechanisms. The reactions in this mechanism were examined and the pathways leading from the new initiation set into existing mechanism are discussed, with ab-initio/DFT level data to support them, as well as a survey of other combustion mechanisms containing analogous reactions. C2 carbon chemistry and soot formation pathways were also included to develop a complete high-pressure mechanism to compare to the experimental results of Derk. C2 chemistry, soot chemistry, and the hydrogen-abstraction initiation mechanism were appended to the baseline mechanism used by Boyer and analyzed in Chemkin as a temporal, ideal gas decomposition. The analysis of these results includes a comprehensive discussion of the observed chemistry and the implications thereof. -

Daniel I. Pineda, Ph.D. Curriculum Vitae

Daniel I. Pineda, Ph.D. Curriculum vitae CONTACT Assistant Professor j Department of Mechanical Engineering j INFORMATION E-mail: [email protected] The University of Texas at San Antonio j Web: www.danielipineda.com One UTSA Circle j San Antonio, TX 78249 j RESEARCH Energy Conversion, Spectroscopy, Propulsion, Chemical Kinetics, Air Quality and Public Health INTERESTS EDUCATION University of California, Berkeley, Berkeley, CA Ph.D., Mechanical Engineering (Combustion) May 2017 Minors: Chemistry, Environmental Engineering M.S., Mechanical Engineering (Energy Science & Technology) December 2014 The University of Texas at Austin, Austin, TX B.S., Mechanical Engineering (Thermal-Fluid Systems), with Honors May 2012 RESEARCH The University of Texas at San Antonio, San Antonio, TX EXPERIENCE Assistant Professor Aug 2020 – Present University of California, Los Angeles, Los Angeles, CA Postdoctoral Scholar Jul 2017 – Jul 2020 University of California, Berkeley, Berkeley, CA Graduate Student Researcher Aug 2012 – Jul 2017 TEACHING The University of Texas at San Antonio, San Antonio, TX EXPERIENCE Instructor, Propulsion Aug 2020 – Dec 2020 University of California, Los Angeles, Los Angeles, CA Instructor, Combustion and Energy Systems Jan 2019 – Mar 2019 Postdoctoral Mentor, The Rocket Project at UCLA Jul 2017 – Jul 2020 University of California, Berkeley, Berkeley, CA Graduate Student Instructor, Combustion Processes Aug 2015 – Dec 2016 Graduate Student Instructor, Advanced Combustion Jan 2014 – May 2015 AWARDS Editor’s Pick, Applied Optics -

Open Babel Documentation

Open Babel Documentation Geoffrey R Hutchison Chris Morley Craig James Chris Swain Hans De Winter Tim Vandermeersch Noel M O’Boyle (Ed.) Mar 26, 2021 Contents 1 Introduction 3 1.1 Goals of the Open Babel project.....................................3 1.2 Frequently Asked Questions.......................................4 1.3 Thanks..................................................7 2 Install Open Babel 11 2.1 Install a binary package......................................... 11 2.2 Compiling Open Babel.......................................... 11 3 obabel - Convert, Filter and Manipulate Chemical Data 19 3.1 Synopsis................................................. 19 3.2 Options.................................................. 19 3.3 Examples................................................. 22 3.4 Format Options.............................................. 24 3.5 Append property values to the title.................................... 24 3.6 Generating conformers for structures.................................. 24 3.7 Filtering molecules from a multimolecule file.............................. 25 3.8 Substructure and similarity searching.................................. 28 3.9 Sorting molecules............................................ 28 3.10 Remove duplicate molecules....................................... 28 3.11 Aliases for chemical groups....................................... 29 3.12 Forcefield energy and minimization................................... 30 3.13 Aligning molecules or substructures.................................. -

Rule-Based Programming and Strategies for Automated Generation of Detailed Kinetic Models for Gas Phase Combustion of Polycyclic Hydrocarbon Molecules

Departement de formation doctorale en informatique Boole doctorale IAEM Lorraine Polytechnique de Lorraine Programmation par regies et strategies pour la generation automatique de mecanismes de combustion d’hydrocarbures polycycliques THESE presentee et soutenue publiquement le 14 juin 2004 pour Vobtention du Doctorat de l’Institut National Polytechnique de Lorraine (spdcialitd informatique) par Liliana Ibanescu Composition du jury Preaideut ; Gerard Scacchi, Professeur, ENSIC-INPL, Prance Ropporteura Jean-Louis Giavitto, Charge de Recherche, Habilite, LaMI, CNRS, Prance Narciso Marti-Oliet, Professeur, Universidad Complutense Madrid, Espagne Erommoteura ; Olivier Bournez, Charge de Recherche, LORIA, INRIA, Prance Helene Kirchner, Directeur de Recherche, LORIA, CNRS, Prance Amedeo Napoli, Directeur de Recherche, LORIA, CNRS, Prance Gaelic Pengloan, Ingenieur, PSA Peugeot Citroen Automobiles Laboratoire Lorrain de Recherche en Informatique et ses Applications — UMR 7503 Mis en page avec la classe thloria. 1 A mes parents ii iii Remerciements Je voudrais tout d'abord remercier spScialement rues encadrants, au sens large, HSlSne Kirchner, Olivier Boumez, Guy-Marie Cbme et Valerie Conraud pour m'avoir guide pendant ces trois dernitres annSes. Cette these a pu Stre rSalisSe gr&ce & leurs immenses competence et soutien. Je te remercie, Helene, pour avoir accepte de m'encadrer pendant cette these, pour m'avoir toujours encouragee, et pour m'avoir guidee dans 1'apprentissage de la recherche. Je te remercie d'autant plus vivement que je t'ai toujours sentie & mes cOtSs, et ce malgre tes lourdes responsabilites au sein du laboratoire. Je te remercie, Olivier, pour ton immense disponibilite, ton ecoute et la patience dont tu as su faire preuve pour m'enseigner le metier de chercheur. -

Electric Field Effects in Combustion with Non-Thermal Plasma by Tiernan

Electric field effects in combustion with non-thermal plasma by Tiernan Albert Casey A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering - Mechanical Engineering and the Designated Emphasis in Computational and Data Science and Engineering in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Jyh-Yuan Chen, Chair Professor Carlos Fernandez-Pello Professor Phillip Colella Spring 2016 Electric field effects in combustion with non-thermal plasma Copyright 2016 by Tiernan Albert Casey 1 Abstract Electric field effects in combustion with non-thermal plasma by Tiernan Albert Casey Doctor of Philosophy in Engineering - Mechanical Engineering with Designated Emphasis in Computational and Data Science and Engineering University of California, Berkeley Professor Jyh-Yuan Chen, Chair Chemically reacting zones such as flames act as sources of charged species and can thus be considered as weakly-ionized plasmas. As such, the action of an externally applied elec- tric field has the potential to affect the dynamics of reaction zones by enhancing transport, altering the local chemical composition, activating reaction pathways, and by providing ad- ditional thermal energy through the interaction of electrons with neutral molecules. To investigate these effects, one-dimensional simulations of reacting flows are performed includ- ing the treatment of charged species transport and non-thermal electron chemistry using a modified reacting fluid solver. A particular area of interest is that of plasma assisted ignition, which is investigated in a canonical one-dimensional configuration. An incipient ignition kernel, formed by localized energy deposition into a lean mixture of methane and air at atmospheric pressure, is subjected to sub-breakdown electric fields by applied volt- ages across the domain, resulting in non-thermal behavior of the electron sub-fluid formed during the discharge. -

Computational Systems Chemistry with Rigorous Uncertainty Quantification

DISS ETH NO. 24842 Computational Systems Chemistry with Rigorous Uncertainty Quantification A thesis submitted to attain the degree of DOCTOR OF SCIENCES of ETH ZÜRICH (Dr. sc. ETH Zürich) presented by Jonny Proppe Master of Science (M.Sc.) Chemistry University of Hamburg born on August 18, 1987 citizen of Germany accepted on the recommendation of Prof. Dr. Markus Reiher, examiner Prof. Dr. Sereina Riniker, co-examiner ETH Zürich Zürich, Switzerland 2018 Jonny Proppe: Computational Systems Chemistry with Rigorous Uncertainty Quantification Dissertation ETH Zürich No. 24842, 2018. To Louisa. Contents Abstract vii Zusammenfassung ix Acknowledgments xi Abbreviations xiii 1 Complexity and Uncertainty: Challenges for Computational Chemistry 1 2 Computational Chemistry from a Network Perspective 5 2.1 Exploration of Chemical Reaction Networks ................ 6 2.2 Determination of Rate Constants from First Principles . 12 2.3 Kinetic Modeling of Reactive Chemical Systems . 14 3 Computational Chemistry from a Statistical Perspective 25 3.1 Statistical Calibration of Parametric Property Models . 25 3.2 Uncertainty Classification ........................... 27 3.3 Prediction Uncertainty from Resampling Methods . 31 4 Mechanism Deduction from Noisy Chemical Reaction Net- works 37 4.1 Kinetic Modeling of Complex Chemical Reaction Networks . 38 4.2 Overview of the KiNetX Meta-Algorithm . 40 4.3 Automatic Network Generation ....................... 41 4.4 Hierarchical Network Reduction ....................... 42 4.5 Propagation of Free-Energy Uncertainty . 48 4.6 Analysis of Kinetic Sensitivity to Free-Energy Uncertainty . 49 4.7 Integration of Stiff Ordinary Differential Equations . 51 4.8 Exemplary Workflow of the KiNetX Meta-Algorithm . 52 4.9 Accuracy and Efficiency of KiNetX-Based Kinetic Modeling . -

Open Talamantes Thesis May 2019

The Pennsylvania State University The Graduate School College of Engineering CHARACTERIZATION OF POLYOXYMETHYLENE AS A HIGH-DENSITY FUEL FOR USE IN HYBRID ROCKET APPLICATIONS A Thesis in Mechanical Engineering by Gerardo Talamantes Jr. 2019 Gerardo Talamantes Jr. Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science May 2019 ii The thesis of Gerardo Talamantes was reviewed and approved* by the following: Richard Yetter Professor of Mechanical Engineering Director of the High Pressure Combustion Laboratory Thesis Advisor Stefan Thynell Professor of Mechanical Engineering Daniel Haworth Professor of Mechanical Engineering Associate Department Head of Mechanical and Nuclear Engineering Graduate Programs *Signatures are on file in the Graduate School iii ABSTRACT Experimental testing was conducted to investigate the behavior of polyoxymethylene (POM) for consideration as the solid fuel component in an upper stage hybrid rocket engine using high-density fuel and oxidizer. The regression rate of POM was experimentally measured using an opposed flow burner to determine the influence of color, oxidizer type, and nozzle separation distance on regression rate. The experimental measurements of regression rate on POM were compared to numerical findings that were obtained using a kinetic model developed for POM combustion in a counter-flowing geometry. It was found that natural vs. black POM does not influence the regression rate when measured in the opposed flow burner. The numerical findings were found to be consistent with the observed trends for all strain rates showing the fuel regression rate to increase with increasing oxidizer flow velocity. However, at smaller separation distances the experimental and numerical regression rates varied due to non-uniform burning surface characteristics breaking down the 1-D assumptions of the model. -

WHAT INFLUENCE WOULD a CLOUD BASED SEMANTIC LABORATORY NOTEBOOK HAVE on the DIGITISATION and MANAGEMENT of SCIENTIFIC RESEARCH? by Samantha Kanza

UNIVERSITY OF SOUTHAMPTON Faculty of Physical Sciences and Engineering School of Electronics and Computer Science What Influence would a Cloud Based Semantic Laboratory Notebook have on the Digitisation and Management of Scientific Research? by Samantha Kanza Thesis for the degree of Doctor of Philosophy 25th April 2018 UNIVERSITY OF SOUTHAMPTON ABSTRACT FACULTY OF PHYSICAL SCIENCES AND ENGINEERING SCHOOL OF ELECTRONICS AND COMPUTER SCIENCE Doctor of Philosophy WHAT INFLUENCE WOULD A CLOUD BASED SEMANTIC LABORATORY NOTEBOOK HAVE ON THE DIGITISATION AND MANAGEMENT OF SCIENTIFIC RESEARCH? by Samantha Kanza Electronic laboratory notebooks (ELNs) have been studied by the chemistry research community over the last two decades as a step towards a paper-free laboratory; sim- ilar work has also taken place in other laboratory science domains. However, despite the many available ELN platforms, their uptake in both the academic and commercial worlds remains limited. This thesis describes an investigation into the current ELN landscape, and its relationship with the requirements of laboratory scientists. Market and literature research was conducted around available ELN offerings to characterise their commonly incorporated features. Previous studies of laboratory scientists examined note-taking and record-keeping behaviours in laboratory environments; to complement and extend this, a series of user studies were conducted as part of this thesis, drawing upon the techniques of user-centred design, ethnography, and collaboration with domain experts. These user studies, combined with the characterisation of existing ELN features, in- formed the requirements and design of a proposed ELN environment which aims to bridge the gap between scientists' current practice using paper lab notebooks, and the necessity of publishing their results electronically, at any stage of the experiment life cycle.