Using Language to Learn Structured Appearance Models for Image Annotation

Total pages: 16

File type: PDF, Size: 1020Kb


Recommended publications

-

ˉs All of Them Are in This Post Or If You Find That That Be What the Hail?

Hail, hail,soccer jersey,going to be the gang?¡¥s all of them are in this post Or if you find that that be what the hail? For the first a period of time seeing that if that's so,a person can be aware that,all of them are going to be the players have already been here and now Saturday as well as for going to be the four de.ent elem team meeting that marked the official start of Seahawks training camp. The club set made some all of them are the draft good debt consolidation moves might report everywhere in the a period of time on the basis of signing top are you aware Josh Wilson for more information on an all in one four-year,2011 nfl jerseys nike, $3.084 million contract Thursday morning ¡§C going to be the reporting date also rookies The veterans joined going to be the rookies Saturday, and two-a-day practices begin Sunday morning. ?¡ãIt?¡¥s the preparing any other part a short time it?¡¥s happened given that I?¡¥ve been on this page,?¡À third-year golf wedge chief executive officer Tim Ruskell said concerning having all going to be the players entered into before the start having to do with camp. ?¡ãTo let them know all your family going to be the truth aspect looks and feels good - looking in line with the.?¡À The last some time the driver had all of them are its draft good debt consolidation moves created before going to be the start concerning camp was 2003,oregon ducks authentic football jersey,but All Pro left tackle Walter Jones was a multi functional no-show after being that they are given going to be the franchise tag. -

Dobber's 2010-11 Fantasy Guide

DOBBER’S 2010-11 FANTASY GUIDE DOBBERHOCKEY.COM – HOME OF THE TOP 300 FANTASY PLAYERS I think we’re at the point in the fantasy hockey universe where DobberHockey.com is either known in a fantasy league, or the GM’s are sleeping. Besides my column in The Hockey News’ Ultimate Pool Guide, and my contributions to this year’s Score Forecaster (fifth year doing each), I put an ad in McKeen’s. That covers the big three hockey pool magazines and you should have at least one of them as part of your draft prep. The other thing you need, of course, is this Guide right here. It is not only updated throughout the summer, but I also make sure that the features/tidbits found in here are unique. I know what’s in the print mags and I have always tried to set this Guide apart from them. Once again, this is an automatic download – just pick it up in your downloads section. Look for one or two updates in August, then one or two updates between September 1st and 14th. After that, when training camp is in full swing, I will be updating every two or three days right into October. Make sure you download the latest prior to heading into your draft (and don’t ask me on one day if I’ll be updating the next day – I get so many of those that I am unable to answer them all, just download as late as you can). Any updates beyond this original release will be in bold blue. -

Nationwide Survey Reveals Culture of Corruption in Ukraine Financial

INSIDE:• President Leonid Kuchma heads for the Mideast — page 3. • Independent journalist/dissident Serhii Naboka dies — page 4. • Children’s video series now on the Internet — page 9. Published by the Ukrainian National Association Inc., a fraternal non-profit association Vol. LXXI HE KRAINIANNo. 4 THE UKRAINIAN WEEKLY SUNDAY, JANUARY 26, 2003 EEKLY$1/$2 in Ukraine NationwideT surveyU reveals Verkhovna RadaW approves draft bills culture of corruption in Ukraine on the rights of diaspora Ukrainians by Roman Woronowycz service was tolerable. About 44 percent by Roman Woronowycz better chance for approval when time Kyiv Press Bureau indicated they paid bribes or made gifts in Kyiv Press Bureau comes time to vote on one of the two one form or another at least once in the proposals. KYIV – A culture of corruption is set- last year. KYIV – The Verkhovna Rada passed Speaking on Ukrainian television after tling upon Ukraine, according to a nation- “Today bribes have become the norm initial versions of two bills on January 17 passage of the first reading of the draft al survey released on January 15. for society,” explained Olha Balakirieva, that would give ethnic Ukrainians who bill, Our Ukraine National Deputy Taras The poll, developed by the Ukrainian director of the Social Monitoring Center. do not have Ukrainian citizenship special Chornovil said simplified entry proce- Institute for Social Research and the “This is one of the phenomenon of con- status and simplified entry procedures dures for diaspora Ukrainians would Social Monitoring Center in conjunction temporary Ukrainian society that we need into Ukraine. open new avenues for interaction with with the United Nations Development to research. -

Buy Cheap/Wholesale Nike NFL Jerseys,NHL Jerseys

Buy Cheap/Wholesale Nike NFL Jerseys,NHL Jerseys,MLB Jerseys,NBA Jerseys,NFL Jerseys,NCAA Jerseys,Custom Jerseys,Soccer Jerseys,Sports Caps Online Save 70% Off,Free Shipping We Are One Of The Jerseys Wholesaler.Your Web Browser is that often don't you think longer supported,replica nhl jersey To experience all of the things that ESPN.com has to understand more about provide a number of us recommend that all your family members upgrade to understand more about a multi function newer version regarding your on the web and internet browser Click going to be the upgrade button for more information on going to be the entirely or at best learn more. UpgradeFAQs Former San Diego escaping back LaDainian Tomlinson thinks struggling Chargers quarterback Philip Rivers would be the fact"distracted.the reason is The words of flattery made by Tomlinson -- which of you has made a habit of commenting all over the going to be the Chargers given that his Feb.,discount nfl jerseys, 2010 release by going to be the team -- were heard everywhere in the San Diego. Rivers and San Diego general manager A.J. Smith responded to understand more about Tomlinsons kind comments to learn more about going to be the San Diego Union Tribune. As will probably have Rivers took the kind comments on gait despite the fact denying them) and Smith thrown a zinger Tomlinsons way. Tomlinson and Smith have made a multi functional sport regarding trading barbs going to be the past bride and groom concerning very many years Expect this story for more information on have examples of the legs and then for a day or at least so but take heart I dont think theres much to understand more about aspect My take everywhere over the a resource box is the fact that shouldnt going to be the Jets escaping back be the case a great deal more concerned about his exceptional quarterback than Rivers? 
I think itd be best of the best as well as Tomlinson about whether or not he or she just action to take on both to and from his San Diego days. -

Tymoshenko Suspected of Ordering Lawmaker's

Part 3 of THE YEAR IN REVIEW pages 5-13 THEPublished U by theKRAINIAN Ukrainian National Association Inc., a fraternal W non-profit associationEEKLY Vol. LXXXI No. 4 THE UKRAINIAN WEEKLY SUNDAY, JANUARY 27, 2013 $1/$2 in Ukraine “Freedom in the World 2013”: Democratic breakthroughs in the balance Freedom House WASHINGTON – The emergence of popular movements for reform were the driving force behind major gains in the Middle East last year, according to “Freedom in the World 2013,” Freedom House’s annual report on the state of global freedom. However, a number of regions experienced setbacks due to a hardened and increasingly shrewd authoritarian response to these move- ments. While the number of countries ranked as free in 2012 was 90, a gain of three over the previous year, 27 countries showed significant declines, compared with 16 that showed notable gains. This is the seventh consecutive year that Freedom in the World has shown more declines than gains worldwide. Furthermore, the report data reflected a stepped-up campaign of persecution by dictators that specifically targeted civil society organizations and independent Freedom House’s “Map of Freedom 2013” shows Ukraine among partly free states. media. Among the most striking gains for free- Noteworthy declines were recorded tive presidential election and direct mili- leaders elsewhere in the Middle East, dom was that of Libya, which advanced for Kazakhstan, Kenya, Mali, Nigeria, tary rule came to an end, yet the elected with resulting setbacks for freedom in from not free to partly free and registered Russia, Turkey and Ukraine. Parliament was dissolved and President Iraq, Jordan, Kuwait, Lebanon, Oman, one of the most substantial one-year The Middle East showed ambiguous Morsi pushed through a new Syria and the United Arab Emirates. -

Toronto Maple Leafs Game Notes

Toronto Maple Leafs Game Notes Fri, Dec 27, 2013 NHL Game #568 Toronto Maple Leafs 18 - 16 - 5 (41 pts) Buffalo Sabres 10 - 24 - 3 (23 pts) Team Game: 40 12 - 8 - 1 (Home) Team Game: 38 7 - 12 - 2 (Home) Home Game: 22 6 - 8 - 4 (Road) Road Game: 17 3 - 12 - 1 (Road) # Goalie GP W L OT GAA SV% # Goalie GP W L OT GAA SV% 34 James Reimer 18 8 5 1 2.83 .924 1 Jhonas Enroth 12 1 7 3 2.65 .909 45 Jonathan Bernier 26 10 11 4 2.42 .929 30 Ryan Miller 26 9 17 0 2.76 .924 # P Player GP G A P +/- PIM # P Player GP G A P +/- PIM 2 D Mark Fraser 18 0 1 1 -7 31 3 D Mark Pysyk 32 1 4 5 -9 10 3 D Dion Phaneuf (C) 37 3 10 13 11 36 4 D Jamie McBain 25 2 5 7 -4 2 4 D Cody Franson 37 2 16 18 -5 16 6 D Mike Weber 23 0 1 1 -17 29 11 C Jay McClement (A) 38 1 4 5 0 20 9 C Steve Ott (C) 37 4 5 9 -14 41 12 L Mason Raymond 39 11 14 25 4 14 10 D Christian Ehrhoff (A) 36 2 11 13 -7 16 15 D Paul Ranger 31 1 6 7 -5 24 17 L Linus Omark 2 0 0 0 -2 2 19 L Joffrey Lupul (A) 30 11 10 21 -9 24 19 C Cody Hodgson 33 8 11 19 -10 12 21 L James van Riemsdyk 37 14 13 27 -3 26 20 D Henrik Tallinder (A) 32 2 4 6 -11 20 22 C Jerred Smithson 16 0 0 0 -5 9 21 R Drew Stafford 37 3 9 12 -8 29 24 C Peter Holland 21 5 4 9 1 12 22 L Johan Larsson 18 0 1 1 -2 13 26 D John-Michael Liles 6 0 0 0 -2 0 23 L Ville Leino 23 0 6 6 -6 6 28 R Colton Orr 27 0 0 0 -3 75 26 L Matt Moulson 35 12 12 24 -1 16 29 R Jerry D'Amigo 11 1 1 2 0 0 27 R Matt D'Agostini 19 0 2 2 -2 4 36 D Carl Gunnarsson (A) 39 0 4 4 6 16 28 C Zemgus Girgensons 36 4 9 13 -3 2 38 L Frazer McLaren 20 0 0 0 -3 62 32 L John Scott 19 0 0 0 -8 46 41 L Nikolai Kulemin 27 4 6 10 -2 4 37 L Matt Ellis 5 1 0 1 0 0 43 C Nazem Kadri 35 11 12 23 -8 41 52 D Alexander Sulzer 8 0 1 1 1 0 44 D Morgan Rielly 30 1 10 11 -12 8 57 D Tyler Myers 37 3 7 10 -13 44 51 D Jake Gardiner 38 1 10 11 1 10 63 C Tyler Ennis 37 8 7 15 -7 16 71 R David Clarkson 27 3 5 8 -4 45 65 C Brian Flynn 35 3 3 6 -3 2 81 R Phil Kessel 39 17 16 33 -1 15 82 L Marcus Foligno 33 5 6 11 -10 38 Sr. -

1 January 4, 2004

ïêàëíéë êéÑàÇëü! CHRIST IS BORN! Published by the Ukrainian National Association Inc., a fraternal non-profit association Vol. LXXII HE KRAINIANNo. 1 THE UKRAINIAN WEEKLY SUNDAY, JANUARY 4, 2004 EEKLY$1/$2 in Ukraine RadioT Canada International’U s Ukrainian ConstitutionalW Court of Ukraine service faces possibile cuts or elimination rules that Kuchma can run in 2004 by Christopher Guly The Winnipeg-based Ukrainian by Roman Woronowycz ly presidential candidate, called the court’s Special to The Ukrainian Weekly Canadian Congress has already contact- Kyiv Press Bureau decision proof that the 18 judges were ed Foreign Affairs Minister Bill Graham merely the president’s stooges. OTTAWA – Radio Canada and RCI director Jean Larin and asked KYIV – Ukraine’s Constitutional “This is more proof of the level of International’s Ukrainian-language serv- them to ensure that the Ukrainian service Court ruled on December 30 that President democracy [in the court] and the level of ice could face having its air time reduced “remains intact.” Leonid Kuchma can run for a third term in democracy in Ukraine in general,” said Mr. by half to 15 minutes or cut completely. In a letter to Mr. Graham, UCC office even though the country’s Ostash according to Interfax-Ukraine. A highly placed source told The Executive Director Ostap Skrypnyk said Constitution limits a state leader to two The lawmaker added that the court had Ukrainian Weekly that a decision to the Ukrainian section “plays an impor- terms. “delivered a serious blow to Ukraine’s make any changes or not is expected by tant role in projecting Canadian values to The 18 members of Ukraine’s highest authority.” Mr. -

During 2014, What Started out As the Euro-Maidan

No. 3 THE UKRAINIAN WEEKLY SUNDAY, JANUARY 18, 2015 5 2014: THE YEAR IN REVIEW From Euro-Maidan to Revolution of Dignity uring 2014, what started out as the Euro-Maidan was transformed into the Revolution of Dignity. By Dyear’s end, Ukraine had a new president, a new Verkhovna Rada and a new government. And, at the end of the year, the Rada voted to abandon the country’s previ- ous “non-bloc” status and set a course for NATO member- ship. A civilizational choice had been made. As the year began, there was concern about the regular presidential election that was to be held in March 2015 as the opposition – that is the pro-Western parties of Ukraine – appeared to have no unified election strategy other than being against Viktor Yanukovych. Ukrainian Democratic Alliance for Reform (UDAR) Chair Vitali Klitschko was call- ing on his rivals to ditch their campaigns and unite behind his single candidacy. The expected Batkivshchyna candi- date, Arseniy Yatsenyuk, and Svoboda party candidate Oleh Tiahnybok said they would compete independently in the first round of the presidential election. Billionaire confectionary magnate Petro Poroshenko also was plan- ning to throw his hat into the ring. The concern among observers was that so many candidates could cannibalize the pro-Western vote or spread it too thinly, letting anoth- Vladimir Gontar/UNIAN er victory slip through their fingers. On January 10 came The scene on January 20 on Kyiv’s Hrushevsky Street, where violent clashes between the Berkut and protesters news of a rift between Euro-Maidan activists and leaders broke out on January 19 and were continuing. -

The Ukrainian Weekly 2004, No.25

www.ukrweekly.com INSIDE:• Testimony on Ukraine and U.S. interests — page 3. • OSI continues to pursue alleged war criminals — page 8. • Plast youths celebrate “Sviato Yuriya” — pages 14-15. Published by the Ukrainian National Association Inc., a fraternal non-profit association Vol. LXXII HE KRAINIANNo. 25 THE UKRAINIAN WEEKLY SUNDAY, JUNE 20, 2004 EEKLY$1/$2 in Ukraine Foreign bidders shut out as Kryvorizhstal is sold T U Nationalist organizationsW to unite by Vasyl Pawlowsky Parliamentary Commission to Control Special to The Ukrainian Weekly Privatization, told the UNIAN news service that it was her opinion the condi- in support of Yushchenko candidacy by Vasyl Pawlowsky declaration read, “We are also conscious KYIV – Ukraine’s State Property tions of the tender were written up in of the necessity, and that the time has Fund (SPF) on June 14 announced the such a way that the only possible buyer Special to The Ukrainian Weekly come for the consolidation of the results of the tender for the controversial was IMU. KYIV – Acting together on June 16, Ukrainian nationalist movement. With sale of a 93.02 percent stake in the Open The LNM-U.S. Steel consortium has the leaders of two nationalist organiza- this as our goal, we declare that we have Joint Stock Company Kryvorizhstal. called on President Leonid Kuchma and tions, the Organization of Ukrainian formed working groups, which in a short According to the SPF, only two compa- Prime Minister Viktor Yanukovych to Nationalists led by Mykola Plaviuk and time frame must develop the ideological nies met the tender requirements. -

2019-20 Philadelphia Flyers Directory

2019-20 PHILADELPHIA FLYERS DIRECTORY PHILADELPHIA FLYERS Wells Fargo Center | 3601 South Broad Street | Philadelphia, PA 19148 Phone 215-465-4500 | PR FAX 215-218-7837 | www.philadelphiaflyers.com EXECUTIVE MANAGEMENT Chairman/CEO, Comcast-Spectacor .....................................................................................................................................Dave Scott President, Hockey Operations & General Manager ....................................................................................................... Chuck Fletcher President, Business Operations ..................................................................................................................................... Valerie Camillo Governor ..................................................................................................................................................................................Dave Scott Alternate Governors ....................................................................................................Valerie Camillo, Chuck Fletcher, Phil Weinberg Senior Advisors ........................................................................................................................Bill Barber, Bob Clarke, Paul Holmgren Executive Assistants ............................................................................................. Janine Gussin, Frani Scheidly, Tammi Zlatkowski HOCKEY CLUB PERSONNEL Vice President/Assistant General Manager ..........................................................................................................................Brent -

KHL 2013/14 HOCKEY CARD COLLECTION Table of Contents

CHECKLIST KHL 2013/14 HOCKEY CARD COLLECTION TabLE OF CONTENTS BASIC SERIES 2013/14 KHL DRAFT 2013 DINAMO MINSK .................................................................3 Autograph .....................................................................20 DINAMO RIGA ....................................................................3 Letter ...............................................................................21 LEV PRAGUE .......................................................................4 Autograph & patch .....................................................21 Medvescak ZagreB ........................................................4 Jersey ...............................................................................22 SKA SAINT PETERSBURG ...................................................5 Jersey - DOUBLE .............................................................22 Slovan Bratislava .........................................................5 CSKA MoscoW ..................................................................6 WELCOME TO THE LEAGUE! Atlant MoscoW REGION ...............................................6 Admiral ..........................................................................23 Vityaz MoscoW REGION .................................................7 Medvescak .....................................................................24 Dynamo MoscoW ...........................................................7 DONBASS Donetsk ...........................................................8 SHARKS ON ICE -

2007 SC Playoff Summaries

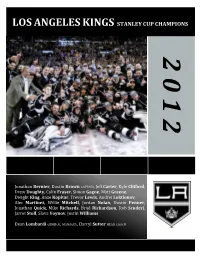

LOS ANGELES KINGS STANLEY CUP CHAMPIONS 2 0 1 2 Jonathan Bernier, Dustin Brown CAPTAIN, Jeff Carter, Kyle Clifford, Drew Doughty, Colin Fraser, Simon Gagne, Matt Greene, Dwight King, Anze Kopitar, Trevor Lewis, Andrei Loktionov, Alec Martinez, Willie Mitchell, Jordan Nolan, Dustin Penner, Jonathan Quick, Mike Richards, Brad Richardson, Rob Scuderi, Jarret Stoll, Slava Voynov, Justin Williams Dean Lombardi GENERAL MANAGER, Darryl Sutter HEAD COACH © Steve Lansky 2012 bigmouthsports.com NHL and the word mark and image of the Stanley Cup are registered trademarks and the NHL Shield and NHL Conference logos are trademarks of the National Hockey League. All NHL logos and marks and NHL team logos and marks as well as all other proprietary materials depicted herein are the property of the NHL and the respective NHL teams and may not be reproduced without the prior written consent of NHL Enterprises, L.P. Copyright © 2012 National Hockey League. All Rights Reserved. 2012 EASTERN CONFERENCE QUARTER—FINAL 1 NEW YORK RANGERS 109 v. 8 OTTAWA SENATORS 92 GM GLEN SATHER, HC JOHN TORTORELLA v. GM BRYAN MURRAY, HC PAUL MacLEAN RANGERS WIN SERIES IN 7 Thursday, April 12 1900 h et on HNIC, NHL Network Saturday, April 14 1900 h et on HNIC, NBC Sports Network OTTAWA 2 @ NEW YORK 4 OTTAWA 3 @ NEW YORK 2 OVERTIME FIRST PERIOD FIRST PERIOD 1. NEW YORK, Ryan Callahan 1 (Anton Stralman, Artem Anisimov) 12:01 1. NEW YORK, Anton Stralman 1 (Dan Girardi, Artem Anisimov) 10:11 PPG Penalties ― Kuba O 4:00, Prust N 8:11, Bickel N 15:13, Karlsson O Boyle N 15:31 Penalties ― Carkner O (minor served by Foligno, major, game misconduct) Dubinsky N (minor, game misconduct) 2:15, Neil O Boyle N (majors) 8:17, Gonchar O 8:32, Prust N 11:58, Phillips O 19:07 SECOND PERIOD 2.