Introduction to Broadcast Media

Recommended publications

32PW9615 NICAM

Preliminary information WideScreen Television 32PW9615 NICAM Product highlights • 100Hz Digital Scan with Digital Natural Motion • Digital CrystalClear with Dynamic Contrast and LTP2 • Full PAL plus (Colour Plus incl.) / WideScreen plus • Dolby Digital and MPEG Multichannel • Wireless FM surround speakers • TXT/NEXTVIEW DualScreen • NEXTVIEW (type 3) • 440 pages EasyText teletext (level 2.5) • Active control • Improved Graphical User Interface • Zoom (16x)

TS 101 499 V2.2.1 (2008-07) Technical Specification

ETSI TS 101 499 V2.2.1 (2008-07) Technical Specification Digital Audio Broadcasting (DAB); MOT SlideShow; User Application Specification European Broadcasting Union Union Européenne de Radio-Télévision EBU·UER 2 ETSI TS 101 499 V2.2.1 (2008-07) Reference RTS/JTC-DAB-57 Keywords audio, broadcasting, DAB, digital, PAD ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp Copyright Notification No part may be reproduced except as authorized by written permission. -

United States Patent 5,526,129 (Ko), Date of Patent: Jun. 11, 1996

US005526129A [19] United States Patent, Ko. [11] Patent Number: 5,526,129. [45] Date of Patent: Jun. 11, 1996. [54] TIME-BASE-CORRECTION IN VIDEO RECORDING USING A FREQUENCY-MODULATED CARRIER. [75] Inventor: Jeong-Wan Ko, Suwon, Rep. of Korea. [73] Assignee: Samsung Electronics Co., Ltd., Suwon, Rep. of Korea. [21] Appl. No.: 203,029. [22] Filed: Feb. 28, 1994. Related U.S. Application Data: [63] Continuation-in-part of Ser. No. 755,537, Sep. 6, 1991, abandoned. [30] Foreign Application Priority Data: Nov. 19, 1990 (KR) Rep. of Korea 90/18736. [51] Int. Cl.: H04N 9/79. [52] U.S. Cl.: 358/320; 358/323; 358/330; 358/337; 360/36.1; 360/33.1; 348/571; [56] References Cited, U.S. PATENT DOCUMENTS: 4,672,470 6/1987 Morimoto et al. 358/323; 5,062,005 10/1991 Kitaura et al. 358/320; 5,142,376 8/1992 Ogura 358/310; 5,218,449 6/1993 Ko et al. 358/320; 5,231,507 7/1993 Sakata et al. 358/320; 5,412,481 5/1995 Ko et al. 359/320. Primary Examiner: Thai Q. Tran. Assistant Examiner: Khoi Truong. Attorney, Agent, or Firm: Robert E. Bushnell. [57] ABSTRACT: A circuit for recording and reproducing a TBC reference signal for use in video recording/reproducing systems includes a circuit for adding to a video signal to be recorded a TBC reference signal in which the TBC reference signal has a period adaptively varying corresponding to a synchronizing variation of the video signal; and a circuit for extracting and reproducing a TBC reference signal from a video signal read-out from the recording medium in order to correct time-base errors in the video signal.

Electronic Services Capability Listing

425 BAYVIEW AVENUE, AMITYVILLE, NEW YORK 11701-0455 TEL: 631-842-5600 FSCM 26269 www.usdynamicscorp.com e-mail: [email protected] ELECTRONIC SERVICES CAPABILITY LISTING OEM P/N USD P/N FSC NIIN NSN DESCRIPTION NHA SYSTEM PLATFORM(S) 00110201-1 5998 01-501-9981 5998-01-501-9981 150 VDC POWER SUPPLY CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110204-1 5998 01-501-9977 5998-01-501-9977 FILAMENT POWER SUPPLY CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110213-1 5998 01-501-5907 5998-01-501-5907 IGBT SWITCH CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110215-1 5820 01-501-5296 5820-01-501-5296 RADIO TRANSMITTER MODULATOR AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 004088768 5999 00-408-8768 5999-00-408-8768 HEAT SINK MSP26F/TRANSISTOR HEAT EQUILIZER B-1B 0050-76522 * * *-* DEGASSER ENDCAP * * 01-01375-002 5985 01-294-9788 5985-01-294-9788 ANTENNA 1 ELECTRONICS ASSEMBLY AN/APG-68 F-16 01-01375-002A 5985 01-294-9788 5985-01-294-9788 ANTENNA 1 ELECTRONICS ASSEMBLY AN/APG-68 F-16 015-001-004 5895 00-964-3413 5895-00-964-3413 CAVITY TUNED OSCILLATOR AN/ALR-20A B-52 016024-3 6210 01-023-8333 6210-01-023-8333 LIGHT INDICATOR SWITCH F-15 01D1327-000 573000 5996 01-097-6225 5996-01-097-6225 SOLID STATE RF AMPLIFIER AN/MST-T1 MULTI-THREAT EMITTER SIMULATOR 02032356-1 570722 5865 01-418-1605 5865-01-418-1605 THREAT INDICATOR ASSEMBLY C-130 02-11963 2915 00-798-8292 2915-00-798-8292 COVER, SHIPPING J-79-15/17 ENGINE 025100 5820 01-212-2091 5820-01-212-2091 RECEIVER TRANSMITTER (BASEBAND -

ODIBILOOP Antenna by I0ZAN for SWL – BCL (Part 1), by I0ZAN Florenzio Zannoni

Panorama radiofonico internazionale (International Radio Panorama) no. 30. On the side of radio listening since 1982. Online magazine self-published by AIR, Associazione Italiana Radioascolto, c.p. 1338 - 10100 Torino AD, www.air-radio.it. Editorial: On 10-11 May the customary annual AIR Meeting will be held in Turin. To make the first day more modern and interesting, we have decided to change the format. Presentations will be kept to a minimum. There will be a venue suited to radio listening, receiving antennas available for experiments, and benches with receivers, accessories and listening aids available to everyone, with presentations of practical experience in real time. Everything will be rebroadcast as always via Internet, radio and television, and recorded so that it can later be made available on the AIR website. The event will be called EXPO AIR. Bruno Pecolatto, AIR Secretary. Masthead: radiorama, PANORAMA RADIOFONICO INTERNAZIONALE, official organ of the A.I.R., Associazione Italiana Radioascolto. Editorial address: radiorama - C. P. 1338 - 10100 TORINO AD, e-mail: [email protected]. AIR - radiorama: head of the official organ: Giancarlo VENTURI; head of radiorama layout: Claudio RE; responsible for the AIR-radiorama blog: the individual authors; head of the website: Emanuele PELICIOLI. This issue of radiorama is self-published online by AIR, Associazione Italiana Radioascolto, through the Aruba server located at località Palazzetto, 4 - 52011 Bibbiena Stazione (AR). It is not a registered news publication, is not periodical, and is updated as materials become available and can be obtained. It therefore cannot be considered in any way an editorial product under Law no. 62 of 7.03.2001. Responsibility for what is published lies exclusively with the individual authors.

Replacing Digital Terrestrial Television with Internet Protocol?

This is a repository copy of The short future of public broadcasting: Replacing digital terrestrial television with internet protocol?. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/94851/ Version: Accepted Version Article: Ala-Fossi, M and Lax, S orcid.org/0000-0003-3469-1594 (2016) The short future of public broadcasting: Replacing digital terrestrial television with internet protocol? International Communication Gazette, 78 (4). pp. 365-382. ISSN 1748-0485 https://doi.org/10.1177/1748048516632171 Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ The Short Future of Public Broadcasting: Replacing DTT with IP? Marko Ala-Fossi & Stephen Lax School of Communication, School of Media and Communication Media and Theatre (CMT) University of Leeds 33014 University of Tampere Leeds LS2 9JT Finland UK [email protected] [email protected] Keywords: Public broadcasting, terrestrial television, switch-off, internet protocol, convergence, universal service, data traffic, spectrum scarcity, capacity crunch. -

(12) United States Patent (10) Patent No.: US 8,073,418 B2 Lin et al.

US008073418B2 (12) United States Patent, Lin et al. (10) Patent No.: US 8,073,418 B2. (45) Date of Patent: Dec. 6, 2011. (54) RECEIVING SYSTEMS AND METHODS FOR AUDIO PROCESSING. (75) Inventors: Chien-Hung Lin, Kaohsiung (TW); Hsing-Ju Wei, Keelung (TW). (73) Assignee: Mediatek Inc., Science-Based Industrial Park, Hsin-Chu (TW). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 793 days. (21) Appl. No.: 12/185,778. (22) Filed: Aug. 4, 2008. (65) Prior Publication Data: US 2010/0029240 A1, Feb. 4, 2010. (51) Int. Cl.: H04B 1/10 (2006.01); H04N 5/455 (2006.01). (52) U.S. Cl.: 455/312; 455/337; 348/726. (58) Field of Classification Search ... (56) References Cited, U.S. PATENT DOCUMENTS: 4,414,571 A * 11/1983 Kureha et al. 348/554; 5,012,516 A * 4/1991 Walton et al. 381/3; 5,418,815 A * 5/1995 Ishikawa et al. 375/216; 6,714,259 B2 * 3/2004 Kim 348/706; 7,436,914 B2 * 10/2008 Lin 375/347; 2009/0262246 A1 * 10/2009 Tsai et al. 348/604. * Cited by examiner. Primary Examiner: Sonny Trinh. (74) Attorney, Agent, or Firm: Winston Hsu; Scott Margo. (57) ABSTRACT: A receiving system for audio processing includes a first demodulation unit and a second demodulation unit. The first demodulation unit is utilized for receiving an audio signal and generating a first demodulated audio signal. The second demodulation unit is utilized for selectively receiving the audio signal or the first demodulated audio signal according to a setting of a television audio system which the receiving

PROFESSIONAL VIDEO 315 800-947-1175 | 212-444-6675 Blackmagic • Canon

PROFESSIONAL VIDEO 800-947-1175 | 212-444-6675 Blackmagic • Canon. Canon XA10 Professional HD Camcorder: Ultra-compact, the XA10 shares nearly all the functionality of the XF100, but in an even smaller, run-and-gun form factor. 64GB internal flash drive and two SDXC-compatible card slots allow non-stop recording. Able to capture AVCHD video at bitrates up to 24Mbps, the camcorder’s native 1920 x 1080 CMOS sensor also lets you choose 60i, 24p, PF30, and PF24 frame rates for customizing the look of your footage. Blackmagic Pocket Cinema Camera: The Pocket Cinema Camera is a true Super 16 digital film camera that’s small enough to keep with you at all times. Remarkably compact (5 x 2.6 x 1.5”) and lightweight (12.5 oz) with a magnesium alloy chassis, it features 13 stops of dynamic range, Super 16 sensor size, and records 1080HD lossless CinemaDNG RAW and Apple ProRes 422 (HQ) files to fast SDXC cards, so you can immediately edit or color correct your media on your laptop. Active Micro Four Thirds lens mount can [...] VIDEO TAPE. Fuji Film: PRO-T120 VHS Video Cassette (FUPROT120) 3.29; DVC-60 Mini DV Cassette (FUDVC60) 3.35; DVC-80 Mini DV Cassette (FUDVC80) 7.99; HDV Cassette, 63 Minute (FUHDVDVM63) 6.99; DV141HD63S HDV (FUDV14163S) 7.95. Maxell: DV-60 Mini DV Cassette (MADVM60SE) 3.99; M-DV63PRO Mini DV Cassette (MADVM63PRO) 5.50; T-120 VHS Cassette (MAGXT120) 2.39; STD-160 VHS Cassette (MAGXT160) 2.69; STD-180 VHS Cassette (MAGXT180) 3.09; HG-T120 VHS Cassette (MAHGT120) 1.99; HG-T160 VHS Video Cassette (MAHGT160) 2.59.

Stability and Possible Change in the Japanese Broadcast Market: a Comparative Institutional Analysis

econstor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics). Nishioka, Yoko. Conference Paper: Stability and Possible Change in the Japanese Broadcast Market: A Comparative Institutional Analysis. 14th Asia-Pacific Regional Conference of the International Telecommunications Society (ITS): "Mapping ICT into Transformation for the Next Information Society", Kyoto, Japan, 24th-27th June, 2017. Provided in Cooperation with: International Telecommunications Society (ITS). Suggested Citation: Nishioka, Yoko (2017): Stability and Possible Change in the Japanese Broadcast Market: A Comparative Institutional Analysis, 14th Asia-Pacific Regional Conference of the International Telecommunications Society (ITS): "Mapping ICT into Transformation for the Next Information Society", Kyoto, Japan, 24th-27th June, 2017, International Telecommunications Society (ITS), Calgary. This Version is available at: http://hdl.handle.net/10419/168526. Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), the usage rights granted in the licence named there apply in place of these terms of use.

A Look at SÉCAM III

Viewer License Agreement You Must Read This License Agreement Before Proceeding. This Scroll Wrap License is the Equivalent of a Shrink Wrap ⇒ Click License, A Non-Disclosure Agreement that Creates a “Cone of Silence”. By viewing this Document you Permanently Release All Rights that would allow you to restrict the Royalty Free Use by anyone implementing in Hardware, Software and/or other Methods in whole or in part what is Defined and Originates here in this Document. This Agreement particularly Enjoins the viewer from: Filing any Patents (À La Submarine?) on said Technology & Claims and/or the use of any Restrictive Instrument that prevents anyone from using said Technology & Claims Royalty Free and without any Restrictions. This also applies to registering any Trademarks including but not limited to those being marked with “™” that Originate within this Document. Trademarks and Intellectual Property that Originate here belong to the Author of this Document unless otherwise noted. Transferring said Technology and/or Claims defined here without this Agreement to another Entity for the purpose of but not limited to allowing that Entity to circumvent this Agreement is Forbidden and will NOT release the Entity or the Transfer-er from Liability. Failure to Comply with this Agreement is NOT an Option if access to this content is desired. This Document contains Technology & Claims that are a Trade Secret: Proprietary & Confidential and cannot be transferred to another Entity without that Entity agreeing to this “Non-Disclosure Cone of Silence” V.L.A. Wrapper. Combining Other Technology with said Technology and/or Claims by the Viewer is an acknowledgment that [s]he is automatically placing Other Technology under the Licenses listed below making this License Self-Enforcing under an agreement of Confidentiality protected by this Wrapper. -

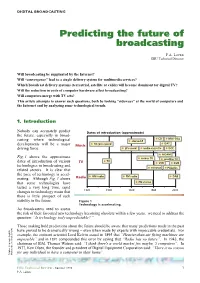

Predicting the Future of Broadcasting

DIGITAL BROADCASTING. Predicting the future of broadcasting. P.A. Laven, EBU Technical Director. Will broadcasting be supplanted by the Internet? Will “convergence” lead to a single delivery system for multimedia services? Which broadcast delivery systems (terrestrial, satellite or cable) will become dominant for digital TV? Will the reduction in costs of computer hardware affect broadcasting? Will computers merge with TV sets? This article attempts to answer such questions, both by looking “sideways” at the world of computers and the Internet and by analyzing some technological trends. 1. Introduction. Nobody can accurately predict the future, especially in broadcasting where technological developments will be a major driving force. Fig. 1 shows the approximate dates of introduction of various technologies in broadcasting and related sectors. It is clear that the pace of technology is accelerating. Although Fig. 1 shows that some technologies have lasted a very long time, rapid changes in technology mean that there is little prospect of such stability in the future. [Figure 1: Technology is accelerating. Approximate dates of introduction on a 1920 to 2000 time axis. Music: 78 rpm record, LP record, stereo LP, audio cassette, CD, DAT, Mini-Disc, DCC. TV: colour TV, VCR, teletext, satellite TV, NICAM, PALplus, DVB. Radio: AM radio, FM radio, FM stereo, DAB.] As broadcasters need to assess the risk of their favoured new technology becoming obsolete within a few years, we need to address the question: “Is technology truly unpredictable?” Those making bold predictions about the future should be aware that many predictions made in the past have proved to be dramatically wrong, even when made by experts with impeccable credentials.

Television and Sound Broadcasting Regulations, 1996

BROADCASTING AND RADIO RE-DIFFUSION. THE BROADCASTING AND RADIO RE-DIFFUSION ACT. REGULATIONS (under section 23 ( f 1). THE TELEVISION AND SOUND BROADCASTING REGULATIONS, 1996 (Made by the Broadcasting Commission on the 14th day of May, 1996). LN 25iW 91,W. Preliminary. 1. These Regulations may be cited as the Television and Sound Broadcasting Regulations, 1996. 2. In these Regulations: "adult programmes" means programmes which depict or display sexual organs or conduct in an explicit and offensive manner; "authorized person" means a person authorized by the Commission to perform duties pursuant to these Regulations; "broadcasting station" means any premises from which broadcast programmes originate; "licensee" means a person who is licensed under the Act; "zone" means a zone established pursuant to regulation 27. Licences. 3.-(1) Every person who is desirous of (a) engaging in commercial broadcasting, non-commercial broadcasting or offering subscriber television service shall make an application to the Commission on the appropriate application form set out in the First Schedule; (b) establishing, maintaining or operating a radio rediffusion system shall make application to the Commission in such form as the Commission may determine. (2) Every application shall be accompanied by a non-refundable fee of one hundred and ten thousand dollars. (3) The Commission may, on receipt of an application, require the applicant to furnish the Commission