Linking Programs in a Single Address Space

Recommended publications

Virtual Memory

Chapter 4: Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1, or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1, or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.

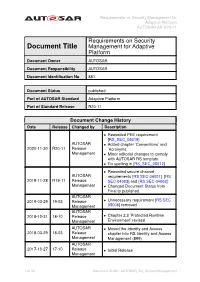

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform, AUTOSAR AP R20-11

| Field | Value |
|---|---|
| Document Title | Requirements on Security Management for Adaptive Platform |
| Document Owner | AUTOSAR |
| Document Responsibility | AUTOSAR |
| Document Identification No | 881 |
| Document Status | published |
| Part of AUTOSAR Standard | Adaptive Platform |
| Part of Standard Release | R20-11 |

Document Change History

| Date | Release | Changed by | Description |
|---|---|---|---|
| 2020-11-30 | R20-11 | AUTOSAR Release Management | Reworded PEE requirement [RS_SEC_05019]; added chapter 'Conventions' and 'Acronyms'; minor editorial changes to comply with AUTOSAR RS template |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | Fix spelling in [RS_SEC_05012]; reworded secure channel requirements [RS SEC 04001], [RS SEC 04003] and [RS SEC 04003]; changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | Unnecessary requirement [RS SEC 05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | Chapter 2.3 'Protected Runtime Environment' revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | Initial Release |

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer: This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

SecGOT: Secure Global Offset Tables in ELF Executables

Proceedings of the 2nd International Conference on Computer Science and Electronics Engineering (ICCSEE 2013). SecGOT: Secure Global Offset Tables in ELF Executables. Chao Zhang, Lei Duan, Tao Wei, Wei Zou. Beijing Key Laboratory of Internet Security Technology, Institute of Computer Science and Technology, Peking University, Beijing, China. {chao.zhang, lei_duan, wei_tao, zou_wei}@pku.edu.cn

Abstract: Global Offset Table (GOT) is an important feature to support library sharing in Executable and Linkable Format (ELF) applications. The addresses of external modules’ global variables and functions are resolved at runtime, stored in the GOT, and then used by the program. If attackers tamper with the function pointers in the GOT, they can hijack the program’s control flow and execute arbitrary malicious code. Current research pays little attention to this threat (i.e. the GOT hijacking attack). In this paper, we proposed and implemented a protection mechanism, SecGOT, to randomize the GOT at load time, and thus prevent attackers from guessing the GOT’s position and tampering with the function pointers. SecGOT is evaluated against 101 binaries in the /bin directory for Linux.

[Introduction, excerpt] library code for these two processes are different). This problem also restrains the code sharing feature of libraries. A solution called PIC (Position Independent Code [3]) is proposed for the ELF (Executable and Linkable Format [4]) executable binaries which are common in Linux. In libraries or main modules supporting PIC, the code section does not reference any absolute addresses in order to support code sharing between processes. However, absolute addresses are unavoidable in programs. As a result, a GOT table (Global Offset Table [4]) is introduced in the library. This table resides in the data section and is not shared

Foundation API Client Library PHP – Usage Examples By: Juergen Rolf Revision Version: 1.2

Foundation API Client Library PHP – Usage Examples. By: Juergen Rolf. Revision Version: 1.2. Revision Date: 2019-09-17. Company Unrestricted.

Document Information

| Field | Value |
|---|---|
| File Name | MDG Foundation API Client Library PHP - Usage Examples_v1_2 external.docx |
| Contents | Usage Examples and Tutorial introduction for the Foundation API Client Library - PHP |
| Author | Juergen Rolf |
| Version | 1.2 |
| Date | 2019-09-17 |

Intended Audience: This document provides a few examples helping the reader to understand the necessary mechanisms to request data from the Market Data Gateway (MDG). The intended audience are application developers who want to get a feeling for the way they can request and receive data from the MDG.

Revision History

| Revision Date | Version | Notes | Author | Status |
|---|---|---|---|---|
| 2017-12-04 | 1.0 | Initial Release | J. Rolf | Released |
| 2018-03-27 | 1.1 | Adjustments for external release | J. Rolf | Released |
| 2019-09-17 | 1.2 | Minor bugfixes | J. Ockel | Released |

References

| No. | Document | Version | Date |
|---|---|---|---|
| 1. | Quick Start Guide - Market Data Gateway (MDG) APIs external | 1.1 | 2018-03-27 |
| 2. | MDG Foundation API Client Library PHP – Installation Guide external | 1.2 | 2019-09-17 |

Copyright © 2018 FactSet Digital Solutions GmbH. All rights reserved. www.factset.com

Table of Contents: Document Information

Virtual Memory - Paging

Virtual memory - Paging. Johan Montelius, KTH, 2020.

The process: [Figure: memory layout for a 32-bit Linux process; labels: code (.text), data, heap, stack, kernel; addresses 0x00000000, 0xC0000000, 0xffffffff]

Segments, a possible solution: [Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory]

One problem: External fragmentation: free areas of physical memory that are hard to utilize. Solution: allocate larger segments ... internal fragmentation.

Another problem: We’re reserving physical memory that is not used. [Figure: virtual space (used, code) mapped to physical memory (not used?)]

Let’s try again: It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU: [Figure: the virtual address is split into a segment index and an offset; the offset is checked against the bounds in the segment table (exception if not within bounds) and added to the base to form the physical address]

The paging MMU: [Figure: the virtual address is split into a VPN and an offset; the page table is checked for whether the page is available (exception if not) and the physical address is formed from the page table entry and the offset]

The MMU: [Figure: virtual address, then segmentation (exception if not within bounds), then linear address, then paging (exception if page not available), then physical address]

A note on the x86 architecture: The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data, or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.

Advanced X86

Advanced x86: BIOS and System Management Mode Internals: Input/Output. Xeno Kovah && Corey Kallenberg, LegbaCore, LLC.

All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/ Attribution condition: You must indicate that derivative work "Is derived from John Butterworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at http://opensecuritytraining.info/IntroBIOS.html”

Input/Output (I/O): I/O, I/O, it’s off to work we go…

2 Types of I/O
1. Memory-Mapped I/O (MMIO)
2. Port I/O (PIO), also called Isolated I/O or port-mapped I/O (PMIO)
• x86 systems employ both types of I/O
• Both methods map peripheral devices
• Address space of each is accessed using instructions
  – typically requires Ring 0 privileges
  – Real-Addressing mode has no implementation of rings, so no privilege escalation needed
• I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both
  – Example: PCI configuration space in a PCIe system, both memory-mapped and accessible via port I/O. We’ll learn about that in the next section
• The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space

Memory-Mapped I/O
• Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space
• Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory:
  – Any of the processor’s instructions

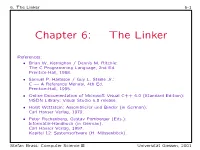

Chapter 6: the Linker

Chapter 6: The Linker. Stefan Brass: Computer Science III, Universität Giessen, 2001.

References:
• Brian W. Kernighan / Dennis M. Ritchie: The C Programming Language, 2nd Ed. Prentice-Hall, 1988.
• Samuel P. Harbison / Guy L. Steele Jr.: C: A Reference Manual, 4th Ed. Prentice-Hall, 1995.
• Online Documentation of Microsoft Visual C++ 6.0 (Standard Edition): MSDN Library: Visual Studio 6.0 release.
• Horst Wettstein: Assemblierer und Binder (in German). Carl Hanser Verlag, 1979.
• Peter Rechenberg, Gustav Pomberger (Eds.): Informatik-Handbuch (in German). Carl Hanser Verlag, 1997. Kapitel 12: Systemsoftware (H. Mössenböck).

Overview
1. Introduction (Overview)
2. Object Files, Libraries, and the Linker
3. Make
4. Dynamic Linking

Introduction (1)
• Often, a program consists of several modules which are separately compiled. Reasons are:
  – The program is large. Even with fast computers, editing and compiling a single file with a million lines leads to unnecessary delays.
  – The program is developed by several people. Different programmers cannot easily edit the same file at the same time. (There is software for collaborative work that permits that, but it is still a research topic.)
  – A large program is easier to understand if it is divided into natural units. E.g. each module defines one data type with its operations.

Introduction (2)
• Reasons for splitting a program into several source files (continued):
  – The same module might be used in different programs (e.g.

CSc 453 Linking and Loading

CSc 453: Linking and Loading. Saumya Debray, The University of Arizona, Tucson.

Tasks in Executing a Program
1. Compilation and assembly. Translate source program to machine language. The result may still not be suitable for execution, because of unresolved references to external and library routines.
2. Linking. Bring together the binaries of separately compiled modules. Search libraries and resolve external references.
3. Loading. Bring an object program into memory for execution. Allocate memory, initialize environment, maybe fix up addresses.

Contents of an Object File
- Header information: overall information about the file and its contents.
- Object code and data.
- Relocations (may be omitted in executables): information to help fix up the object code during linking.
- Symbol table (optional): information about symbols defined in this module and symbols to be imported from other modules.
- Debugging information (optional).

Example: ELF Files (x86/Linux)
[Figure: ELF file layout shown in two views, linkable sections and executable segments; labels: ELF Header, Program Header Table, sections/segments, Section Header Table, with each table marked either as describing the contents or as "optional, ignored" depending on the view]

ELF Files: cont'd
ELF Header structure:
- 16 bytes: ELF file identifying information (magic no., addr size, byte order)
- 2 bytes: object file type (relocatable, executable, shared object, etc.)
- 2 bytes: machine info
- 4 bytes: object file version
- 4 bytes: entry point (address where execution begins)
- 4 bytes: offset of program header table
- 4 bytes: offset of section header table
- 4 bytes: processor-specific flags
- 2 bytes: ELF header size (in bytes)
- 2 bytes: size of each entry in program header table
- 2 bytes: no.

Virtual Memory in x86

Fall 2017 :: CSE 306. Virtual Memory in x86. Nima Honarmand.

x86 Processor Modes
• Real mode – walks and talks like a really old x86 chip
  • State at boot
  • 20-bit address space, direct physical memory access
  • 1 MB of usable memory
  • No paging
  • No user mode; processor has only one protection level
• Protected mode – Standard 32-bit x86 mode
  • Combination of segmentation and paging
  • Privilege levels (separate user and kernel)
  • 32-bit virtual address
  • 32-bit physical address
  • 36-bit if Physical Address Extension (PAE) feature enabled
• Long mode – 64-bit mode (aka amd64, x86_64, etc.)
  • Very similar to 32-bit mode (protected mode), but bigger address space
  • 48-bit virtual address space
  • 52-bit physical address space
  • Restricted segmentation use
• Even more obscure modes we won’t discuss today

xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses)

Virt. & Phys. Addr. Spaces in x86
• Both RAM and hardware devices (disk, NIC, etc.) connected to system bus
• Mapped to different parts of the physical address space by the BIOS
• You can talk to a device by performing read/write operations on its physical addresses
• Devices are free to interpret reads/writes in any way they want (driver knows)
[Figure: the processor core issues virtual addresses to the MMU, which sends physical addresses through the cache to the system interconnect (bus) connecting DRAM (memory), network card, ..., and disk; the legend distinguishes the region where all addresses are virtual from the region where all addresses are physical]

Virt-to-Phys Translation in x86
[Figure: virtual address 0xdeadbeef, translated by segmentation to linear address 0x0eadbeef, translated by paging to physical address 0x6eadbeef; protected/long mode only]
• Segmentation cannot be disabled!
• But can be made a no-op (a.k.a.

Message from the Editor

Article Reading Qualitative Studies Margarete Sandelowski University of North Carolina at Chapel Hill Chapel Hill, North Carolina, USA Julie Barroso University of North Carolina at Chapel Hill Chapel Hill, North Carolina, USA © 2002 Sandelowski et al. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract In this article, the authors hope to shift the debate in the practice disciplines concerning quality in qualitative research from a preoccupation with epistemic criteria toward consideration of aesthetic and rhetorical concerns. They see epistemic criteria as inevitably including aesthetic and rhetorical concerns. The authors argue here for a reconceptualization of the research report as a literary technology that mediates between researcher/writer and reviewer/reader. The evaluation of these reports should thus be treated as occasions in which readers seek to make texts meaningful, rather than for the rigid application of standards and criteria. The authors draw from reader-response theories, literature on rhetoric and representation in science, and findings from an on-going methodological research project involving the appraisal of a set of qualitative studies. Keywords: Reader-response, reading, representation, rhetoric, qualitative research, quality criteria, writing Acknowledgments: We thank the members of -

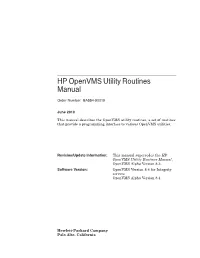

HP OpenVMS Utility Routines Manual

HP OpenVMS Utility Routines Manual. Order Number: BA554-90019. June 2010.

This manual describes the OpenVMS utility routines, a set of routines that provide a programming interface to various OpenVMS utilities.

Revision/Update Information: This manual supersedes the HP OpenVMS Utility Routines Manual, OpenVMS Alpha Version 8.3.
Software Version: OpenVMS Version 8.4 for Integrity servers; OpenVMS Alpha Version 8.4.

Hewlett-Packard Company, Palo Alto, California. © Copyright 2010 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. Intel and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. ZK4493

The HP OpenVMS documentation set is available on CD. This document was prepared using DECdocument, Version 3.3-1B.

Contents
Preface
1 Introduction to Utility Routines
2 Access Control List (ACL) Editor Routine
2.1 Introduction to the ACL Editor Routine
2.2 Using the ACL Editor Routine: An Example
2.3 ACL Editor Routine
ACLEDIT$EDIT

The Programming Language Concurrent Pascal

IEEE TRANSACTIONS ON SOFTWARE ENGINEERING, VOL. SE-1, NO. 2, JUNE 1975, 199. The Programming Language Concurrent Pascal. PER BRINCH HANSEN.

Abstract: The paper describes a new programming language for structured programming of computer operating systems. It extends the sequential programming language Pascal with concurrent programming tools called processes and monitors. Section I explains these concepts informally by means of pictures illustrating a hierarchical design of a simple spooling system. Section II uses the same example to introduce the language notation. The main contribution of Concurrent Pascal is to extend the monitor concept with an explicit hierarchy of access rights to shared data structures that can be stated in the program text and checked by a compiler.

Index Terms: Abstract data types, access rights, classes, concurrent processes, concurrent programming languages, hierarchical operating systems, monitors, scheduling, structured multiprogramming.

I. THE PURPOSE OF CONCURRENT PASCAL

A. Background

Since 1972 I have been working on a new programming language for structured programming of computer operating systems. This language is called Concurrent Pascal. It extends the sequential programming language Pascal with concurrent programming tools called processes and monitors [1]-[3]. This is an informal description of Concurrent Pascal.

[Fig. 1. Process communication: a producer process and a consumer process connected by a disk buffer.]

[Fig. 2. Process: access rights, private data, sequential program.]

The next picture shows a process component in more detail (Fig. 2). A process consists of a private data structure and a sequential program that can operate on the data. One process cannot operate on the private data of another