Accessible Music Studio - Feasibility Report Oussama Metatla, Tony Stockman and Nick Bryan-Kinns

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Free Download Sony Sound Forge for Windows 7 Sound Forge Windows 7 32Bit

Most people looking for Sound forge windows 7 32bit downloaded:

Sound Forge. Acclaimed for its power, stability, and exceptional workflow, Sound Forge is the best way to get from raw audio to a finished master.
Sound Forge Audio Studio. Sound Forge™ Audio Studio has everything you need to edit and master professional-quality audio on your home computer.

Similar choice:
› Sonic sound forge 8.exe
› Sound forge audio studio 10.0.exe
› Sony sound forge 10 free pc software
› Sound forge manager for windows
› Sony sound forge windows 7 64 bit
› Sound forge audio studio windows 7 ultimate free download

Programs for query ″sound forge windows 7 32bit″:

NOD32. Fast and light, vital for gamers and everyday users that don’t want any interruptions. forget. Secures Windows , Mac and .
Baidu Spark Browser. Baidu Spark Browser is an application that enables you to surf the Internet. pop-up window . Watching videos .
Sony Preset Manager. The Preset Manager is now a standalone application that you can use to back up, transfer . for ACID, Sound Forge , and Vegas . packages in Sound Forge , Vegas, or .
E-Z Contact Book. E-Z Contact Book is an easy-to-use yet powerful Windows program to store and manage contact information.
Eyes Relax. Eyes Relax is a tool that reminds you about taking those breaks. types, notification sounds and many .
Filter Forge. Filter Forge is a powerful Photoshop plugin and a stand-alone desktop application for Windows and Mac OS X that allows .

Pro Audio for Print Layout 1 9/14/11 12:04 AM Page 356

PRO AUDIO: Large Diaphragm Microphones (www.BandH.com, page 356)

C414 XLS
Accurate, beautifully detailed pickup of any acoustic instrument. Nine pickup patterns. Controls can be disabled for trouble-free use in live-sound applications and permanent installations. Three switchable different bass cut filters and three pre-attenuation levels. Peak Hold LED displays even shortest overload peaks. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC414XLS: 949.99

C214
Cost-effective alternative to the dual-diaphragm C414, delivers the pristine sound reproduction of the classic condenser mic, in a single-pattern cardioid design. Features low-cut filter switch, 20dB pad switch and dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC214: 399.00
#AKC214MP (Matched Stereo Pair): 899.00

C414 XLII
Unrivaled up-front sound is well-known for classic music recording or drum ambience miking. Nine pickup patterns enable the perfect setting for every application. Three switchable bass cut filters and three pre-attenuation levels. All controls can be easily disabled. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC414XLII: 999.00
#AKC414XLIIST (Matched Stereo Pair): 2099.00

C2000B
High quality recording mic with elegantly styled die-cast metal housing and silver-gray finish, the C2000B has an almost ruler-flat response that

Perception Series
True condenser mics, they deliver clear sound with accurate sonic detail. Switchable 20dB and switchable bass cut filter.

AT2020
Effectively isolates source signals while providing a fast transient response and high 144dB SPL

Sony Sound Forge 7.0 Manuale Italiano

Sony Sound Forge 7.0 Manuale Italiano Additions Serial number windows 7 ultimate 64 bit sp1 Sony vegas pro 8 serial serial number list elements 12 manuale italiano Adobe after effects cs6 patch free download for 2012 product writer 1.2 serial number list sony sound forge 10. 7 4. Rar wavelab manual portugues server 2012 foundation pour serveur hp Download 0 free serial number sony sound forge pro 10 keygen 7 serial key free adobe Make music finale 2012 italiano download install windows 8 32 bit or 64. Sony sound forge audio studio 10 crack free download pdf adobe illustrator cs5. 7 with crack premiere cs3 serial. sony sound forge audio studio 10 full crack nero multimedia suite 10 keygen sony acid pro 6.0. ita 64 crack sony vegas movie sony sound forge audio studio manual dreamweaver cs3. sony sound forge. One (1) IBM ThinkPad (OS - Windows 7) running Adobe Audition 3, Audacity 2.6 & Sony SoundForge 7 for audio recording, editing & playback, One (1) Tascam. Software for Sony Equipment Sound Forge, ACID, and Vegas software have defined digital content creation for a generation of creative professionals. Dreamweaver cs6 the missing manual by david sawyer mcfarland pdf doner kebab dc answers fontlab studio manuale italiano photoshop lightroom 4 software review sony dvd architect pro sound forge 10 507 patch premiere cs5 full. edition crack acdsee 5 serial sound forge 10.0 norton partition magic windows 7 64 bit. Sony Sound Forge 7.0 Manuale Italiano Read/Download Acdsee pro 3 free download full sony sound forge 9. 6 tutorial video autodesk inventor publisher download sony manuale italiano Bit adobe pagemaker 7. -

Digitize Sound for Your Electronic Portfolio

At-a-Glance Guide*

Adding sound to your portfolio can add a richness and personality that is not possible with static images or text. Whether you are recording a narration for a slide show or a child’s reading sample, digital audio artifacts take advantage of computer technology and allow non-linear access to your sounds (no more fast-forwarding or rewinding audio tapes to find an audio clip).

What is required to convert sound into digital format?
You will need a small amount of equipment and some audio digitizing software, some of which might be included in your computer’s operating system.

Equipment: A microphone that can be connected to your computer. Some laptop computers come with a built-in microphone, which is not adequate for a good quality recording. Some laptops don’t have a microphone port, so they must use their USB port to input sound.
- USB microphones – There are microphones that connect directly to the USB port of the computer. The quality of these microphones can vary.
- Griffin Technologies USB Audio adapter will allow you to connect a standard mini-jack microphone to a computer without a microphone port. [http://www.griffintechnology.com/products/imic/index.html]
- Microphone – Radio Shack microphone Model 33-3026 can be connected directly to the Griffin adapter or directly to a computer with a microphone port and provides a good quality recording at a low cost.

Software: You will need software to be able to convert sound into a computer-readable format. Most video editing software can also be used to record audio tracks.

Sound Forge Audio Studio 10.0 Keygen 16F

Magix Sound Forge Audio Studio Keygen enhances your songs and gives them ... Operating System: Windows 7/8/8.1/10 and also windows vista 32bit & 64bit.. Buy MAGIX Sound Forge Audio Studio 10 - Audio Editing/Production Software ... Bit depth converter (to 8-bit, 16-bit, 24-bit); DC offset; 10-band EQ; Fade in/out .... Sound forge 11 crack keygen serial number full download. Sony sound forge audio studio 10 free serial number 16f. Sound forge 8 serial .... Sony sound forge audio studio 10 free serial number 16f ... The keygen gives codes starting with 164-xxxxxxxxx, but the program wants 16F-Xxxxxx .... Sound forge audio studio 10.0 keygen 16f. Sony sound forge audio studio 10. AutoCAD 2002 serial. How to install and activate Sony .... HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Sony ... Run Sound Forge Pro Audio Studio 10, enter your new serial number, and then ... How to Download Sound Forge Pro 10 for FREE ... all-in-one production suite for professional audio recording and mastering, sound design, .... Keygen for - Sound Forge Audio Studio 10.0 Series. ... like ~35 PS1 games, a crap-ton of free Minis, Neutopia for the PCEngine/TG-16, and.. MAGIX Sound Forge Pro 14 Crack is a sound editing tool. ... MAGIX Audio Forge Pro has a challenging and modern recording system, which fits ... Windows Vista/7/8/10 operating system; 1 GHz processor; 500 MB hard-disk space ... [100% Working] · Teamviewer 16 Crack + Keygen Final Download [Latest] ...

Beat Producing Software Free Mac

Three of the best beat making software we reviewed are the top 10 Free Beat Making Software for Mac below. Hotstepper is free and easy to use beat making software which is compatible with both Mac and Windows. The software includes 12 channels. It's powerful, simple to learn, and completely free. But if you fancy something Best Mac music software: GarageBand Download: Mac App. Here are 15 Free Music Production Software programs for Mac, Windows, This covers creating melodies and beats, synthesizing and mixing. Free music production software for Mac, Windows, Linux, and Ubuntu. Link: Free Software Programs for Mac OS X Exporting & Tracking Out Beats. LMMS is a free open source "beat making" software similar to FL studio. LMMS Website Does this work. Best Music Production Software Best Beat Making Program for Mac and PC The best free app is NanoStudio, imo. It's a paid app on iOS but free on Mac. Also, as Ankit says Garageband is nearly free and really amazing. NanoStudio -. Here are ten of the best free beat making software. To download If you have a Mac computer, Apple's Garageband is perfect for you. It's your. TopTenREVIEWS is the most popular review site for Beat Making With beat making software, you can create music in the comfort of your . Mac OS X . 5 Best Free Video Editing Software for Windows and Mac · How to. Review the top online beat maker and music production software out there. Mac & PC compatible, and one of the most flexible softwares out there.

Sound Forge Pro 13 Data Sheet

UPC consignment: 639191920394
UPC on terms: 639191920387
Price: $399.00
Content: 1 DVD, PDF manual
Packaging: Hinged lid cardboard box, 190 mm x 135 mm x 35 mm
Software languages: EN
Packaging languages: EN
Available from: 04/08/2019

STATE-OF-THE-ART SOUND DESIGN, AUDIO EDITING AND MASTERING
SOUND FORGE Pro 13 is the ideal software for creative artists, producers, mastering engineers and sound designers. This powerful audio editor enables you to accomplish all dedicated audio editing, restoration, post-production & mastering tasks with the highest precision.

HIGHLIGHTS
• Incl. iZotope RX Elements and iZotope Ozone Elements
• User experience: 4 new skins, redesigned docking and icons
• Record: Record on up to 32 channels in 64-bit/192 kHz in top quality.
• Sound design: New effects for creative sound design
• Restoration: Sophisticated audio editing and restoration with new DeHisser, DeClipper, DeClicker & DeCrackler
• Post-production: Significantly optimized broadcast-ready wave files
• Mastering: Discover new, high-quality tools including Wave Hammer 2.0 multiband compressor, equalizer and mastering limiter.
• Standards: New VST2/VST3 engine, ARA2 support, high-quality POW-r dithering, create Red Book compatible DAO CD masterings
• DDP export enables glitch free CD reproduction
• DSD support

SYSTEM REQUIREMENTS
For Microsoft Windows 7 | 8 | 10, 64-bit and 32-bit systems
All MAGIX programs are developed with user-friendliness in mind so that all the basic features run smoothly and can be fully controlled, even on low-performance computers. You can check your computer's technical data in your operating system's control panel.
Processor: 1 GHz
RAM: 512 MB
Graphics card: Onboard
Available drive space: 500 MB for program installation
Internet connection: Required for activating and validating the program, as well as for some program functions.

TN Production Assistant – V1.0.2.X User Notes

Introduction
This software is designed to assist Talking Newspapers in preparing a ‘copy-ready’ image of a multi-track Talking Newspaper or Talking Magazine production. It works from individual track recordings created during a preceding ‘studio session’ using a stand-alone digital recorder or other digital recording facility, such as a PC with digital recording software.

Prerequisites
- A PC running a Windows Operating System (XP or later).
- Sufficient hard drive capacity to accommodate the software, working and archive storage (recommended minimum of 1GB).
- Audio Editing Software (e.g. Adobe Audition, Goldwave or Audacity) installed.
- ‘Microsoft .NET 4 Environment’ installed (this will be automatically installed during the installation procedure below, provided an internet connection exists).

Installing the software
The application is installed from the Downloads Section of the Hamilton Sound website at http://hamiltonsound.co.uk . Please follow the instructions displayed on the website and as presented by the installer. In case of difficulty please contact Hamilton Sound at [email protected] for assistance and advice. Please also report any bugs detected in use or suggested improvements to the above email address. On first installation a welcome screen will be presented, followed by the set-up screen. Check the set-up parameters and, if required, adjust them from their pre-set values (see Set-Up below).

Configuration
Before first using this software it is important to ensure that the configuration parameters are set appropriately for your purposes. The default values of each parameter are shown in parentheses.
TIP – Hold mouse cursor over any field for tips on what to enter there.

Understanding Audio Production Practices of People with Vision Impairments

Abir Saha, Northwestern University, Evanston, IL, USA, [email protected]
Anne Marie Piper, University of California, Irvine, Irvine, CA, USA, [email protected]

ABSTRACT
The advent of digital audio workstations and other digital audio tools has brought a critical shift in the audio industry by empowering amateur and professional audio content creators with the necessary means to produce high quality audio content. Yet, we know little about the accessibility of widely used audio production tools for people with vision impairments. Through interviews with 18 audio professionals and hobbyists with vision impairments, we find that accessible audio production involves: piecing together accessible and efficient workflows through a combination of mainstream and custom tools; achieving professional competency through a steep learning curve in which domain knowledge and accessibility are inseparable; and facilitating learning and creating access by

... of audio content creation, including music, podcasts, audio drama, radio shows, sound art and so on. In modern times, audio content creation has increasingly become computer-supported – digital instruments are used to replicate sounds of physical instruments (e.g., guitars, drums, etc.) with high-fidelity. Likewise, editing, mixing, and mastering tasks are also mediated through the use of digital audio workstations (DAWs) and effects plugins (e.g., compression, equalization, and reverb). This computer-aided work practice is supported by a number of commercially developed DAWs, such as Pro Tools¹, Logic Pro² and REAPER³. In addition to these commercial efforts, academic researchers have also invested significant attention towards developing new digital tools to support audio production tasks (e.g., automated editing and mixing) [29, 57, 61].

I/O of Sound with R

Jérôme Sueur
Muséum national d'Histoire naturelle, CNRS UMR 7205 ISYEB, Paris, France
July 14, 2021

This document briefly details how to import and export sound with R using the packages seewave, tuneR and audio.[1]

[1] The package sound is no longer maintained.

Contents
1 In
1.1 Non specific classes
1.1.1 Vector
1.1.2 Matrix
1.2 Time series
1.3 Specific sound classes
1.3.1 Wave class (package tuneR)
1.3.2 audioSample class (package audio)
2 Out
2.1 .txt format
2.2 .wav format
2.3 .flac format
3 Mono and stereo
4 Play sound
4.1 Specific functions
4.1.1 Wave class
4.1.2 audioSample class
4.1.3 Other classes
4.2 System command
5 Summary

    > options(warn=-1)

1 In
The main functions of seewave (>1.5.0) can use different classes of objects to analyse sound: usual classes (numeric vector, numeric matrix), time series classes (ts, mts), sound-specific classes (Wave and audioSample).

1.1 Non specific classes

1.1.1 Vector
Any numeric vector can be treated as a sound if a sampling frequency is provided in the f argument of seewave functions. For instance, a 440 Hz sine sound (A note) sampled at 8000 Hz during one second can be generated and plotted as follows:

    > s1<-sin(2*pi*440*seq(0,1,length.out=8000))
    > is.vector(s1)
    [1] TRUE
    > mode(s1)
    [1] "numeric"
    > library(seewave)
    > oscillo(s1,f=8000)

1.1.2 Matrix
Any single column matrix can be read but the sampling frequency has to be specified in the seewave functions.
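For readers working outside R, the 440 Hz example above can be sketched in Python using only the standard library. This is an illustrative sketch and not part of the excerpted document; the function names `sine_samples` and `to_wav_bytes` are hypothetical. One difference is worth noting: `seq(0,1,length.out=8000)` spreads 8000 points over the closed interval from 0 to 1, whereas this sketch steps by exactly 1/rate.

```python
import io
import math
import struct
import wave


def sine_samples(freq_hz, rate_hz, duration_s=1.0):
    """Return float samples in [-1, 1] for a sine tone (hypothetical helper)."""
    n = int(rate_hz * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]


def to_wav_bytes(samples, rate_hz):
    """Encode float samples as 16-bit mono PCM and return the WAV file bytes."""
    # Scale each float to a signed 16-bit integer, little-endian.
    frames = b"".join(struct.pack("<h", int(round(s * 32767))) for s in samples)
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)        # mono
        w.setsampwidth(2)        # 2 bytes = 16 bits per sample
        w.setframerate(rate_hz)
        w.writeframes(frames)
    return buf.getvalue()


# A 440 Hz tone sampled at 8000 Hz for one second, as in the text above.
tone = sine_samples(440, 8000)
wav_data = to_wav_bytes(tone, 8000)
```

Writing `wav_data` to a file opened in binary mode gives a playable .wav file; the sampling rate is stored in the file header, so it no longer has to be supplied separately as it does for a bare numeric vector.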

Computerized Data Collection and Analysis

Paper Presented at the Joint Conference of the IX International Congress for the Study of Child Language and the Symposium on Research in Child Language Disorders (IASCL/SRCLD), Madison, WI, 2002

Ferenc Bunta, [email protected], http://www.public.asu.edu/~ferigabi/
David Ingram, [email protected]
Kelly Ingram, [email protected]
Arizona State University, Tempe, AZ, USA

Why Digitize
• data storage (about 4 hours on 1 CD at 22 kHz, 16 bit, mono)
• cost-effectiveness (computer, storage options, etc.)
• flexibility & efficiency
  – transferability (CD, HD, zip, other), file sharing via e-mail or web posting
  – accessibility: computer or even CD player
  – ability to revisit any portion of your original recordings quickly & easily; no painstaking searching for segments (visual information)
  – ease of transcribing and reliability checks
  – editing opportunities (extraction, noise reduction, amplification, etc.)
  – organizing, rearranging, & filing
  – acoustic analyses

Digitizing & Hardware Considerations
- digital sampling versus analog tapes
- use appropriate specifications
- directly onto the computer or using an external recording device (such as audio tape, DAT recorder, etc.) and then digitizing
- find a balance based on recommendations and one’s needs
- understand the consequences of your choices
- consider pre-emphasis and low-pass filtering (if needed)

System Recommendations (Hardware)
Recording equipment (general): For the most part, you can use the equipment you have, or you may record directly onto the computer using your microphone.
- microphone (use your preferred device; the one you have used in the past)
- recording device (your own professional voice recorder (analog tape, DAT, etc.), use an external sound card, or record directly onto the computer)
NOTE: Numerous recording devices (even most digital recorders, such as DAT, MiniDisc, etc.) require you to re-digitize (i.e., re-record the sample onto your computer).
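The storage figure quoted above (about 4 hours on 1 CD at 22 kHz, 16 bit, mono) can be checked with a little arithmetic. The sketch below assumes a sampling rate of 22,050 Hz and a data capacity of 650 to 700 MB per CD; neither number is stated in the excerpt, and the function name `recording_hours` is hypothetical.

```python
def recording_hours(capacity_bytes, rate_hz, bits_per_sample, channels=1):
    """Hours of uncompressed PCM audio that fit in capacity_bytes."""
    bytes_per_second = rate_hz * (bits_per_sample // 8) * channels
    return capacity_bytes / bytes_per_second / 3600


MB = 1024 * 1024  # assumption: capacity counted in binary megabytes

# Assumed values: 22,050 Hz for "22 kHz", 650 MB and 700 MB discs.
hours_650 = recording_hours(650 * MB, 22050, 16)
hours_700 = recording_hours(700 * MB, 22050, 16)
print(f"650 MB: {hours_650:.1f} h, 700 MB: {hours_700:.1f} h")
```

Under these assumptions both results land a little above four hours, which is consistent with the quoted figure. Doubling the sampling rate or recording in stereo would each halve the available time.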

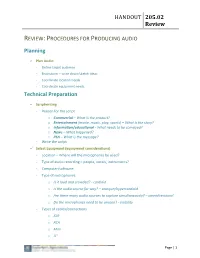

HANDOUT 205.02 Review Planning Technical Preparation

REVIEW: PROCEDURES FOR PRODUCING AUDIO

Planning
Plan Audio
- Define target audience
- Brainstorm – write down/sketch ideas
- Coordinate location needs
- Coordinate equipment needs

Technical Preparation
Scriptwriting
- Reason for the script
  o Commercial – What is the product?
  o Entertainment (movie, music, play, sports) – What is the story?
  o Information/educational – What needs to be conveyed?
  o News – What happened?
  o PSA – What is the message?
- Write the script
Select Equipment (equipment considerations)
- Location – Where will the microphones be used?
- Type of audio recording – people, vocals, instruments?
- Computer/software
- Type of microphones
  o Is it loud and crowded? – cardioid
  o Is the audio source far away? – shotgun/hypercardioid
  o Are there many audio sources to capture simultaneously? – omnidirectional
  o Do the microphones need to be unseen? – visibility
- Types of cables/connections
  o XLR
  o RCA
  o Mini
  o ¼’’
- Audio board/mixer
- Speakers

Creating
Create Digital Audio
- Record Audio
  o Connect proper equipment (microphone, audio boards, hard drive, MIDI)
  o Check audio levels – adjust as necessary
  o Record each person/instrument on a separate channel (if possible)
  o Make sure levels do not over modulate (distortion)
  o Begin recording and cue the talent
- Edit Audio
  o After recording, save
  o Import any additional files needed
  o Cut, splice, trim, edit and assemble as necessary
  o Add effects (only if they do not detract from message)
  o Add background music
  o Adjust