A Survey of Some Virtual Reality Tools and Resources


Recommended publications


Proceedings 2005

LAC2005 Proceedings. 3rd International Linux Audio Conference, April 21 – 24, 2005, ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany. Published by ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany, April 2005. All copyright remains with the authors. www.zkm.de/lac/2005

Content

Preface
Staff

Thursday, April 21, 2005 – Lecture Hall
11:45 AM Peter Brinkmann: MidiKinesis – MIDI controllers for (almost) any purpose
01:30 PM Victor Lazzarini: Extensions to the Csound Language: from User-Defined to Plugin Opcodes and Beyond
02:15 PM Albert Gräf: Q: A Functional Programming Language for Multimedia Applications
03:00 PM Stéphane Letz, Dominique Fober and Yann Orlarey: jackdmp: Jack server for multi-processor machines
03:45 PM John ffitch: On The Design of Csound5
04:30 PM Pau Arumí and Xavier Amatriain: CLAM, an Object Oriented Framework for Audio and Music

Friday, April 22, 2005 – Lecture Hall
11:00 AM Ivica Ico Bukvic: “Made in Linux” – The Next Step
11:45 AM Christoph Eckert: Linux Audio Usability Issues
01:30 PM Marije Baalman: Updates of the WONDER software interface for using Wave Field Synthesis
02:15 PM Georg Bönn: Development of a Composer’s Sketchbook

Saturday, April 23, 2005 – Lecture Hall
11:00 AM Jürgen Reuter: SoundPaint – Painting Music
11:45 AM Michael Schüepp, Rene Widtmann, Rolf “Day” Koch and Klaus Buchheim: System design for audio record and playback with a computer using FireWire
01:30 PM John ffitch and Tom Natt: Recording all Output from a Student Radio Station

Catalogo De Apliciones Para Gnu/Linux

Universidad Luterana Salvadoreña. Free Software: Catalog of Applications for GNU/Linux.

Authors: Ruben Ernesto Mejia Cortez, Marvin Fernando Ramirez, David Armando Cornejo

Contents
Introduction
Objectives
What is free software?
Text editors
Browsers
Email
Audio editors
Audio players
Office software
Multimedia players
Video editors
Compression tools
CD creation tools

Copyrighted Material

Contents at a Glance

Introduction

Part I: Planning Out a Podcast
Practice 1: Selecting the Right Topic for Your Podcast
Practice 2: Keeping Up with the Joneses
Practice 3: Staffing Your Podcast for Success
Practice 4: Podcast Studio Considerations
Practice 5: Stick to the Script!
Practice 6: Transitions, Timing, and Cues
Practice 7: Reviewing Your Podcast with a Critical Eye
Practice 8: T-Minus Five Episodes

Part II: Going for a Professional Sound
Practice 9: Upgrading Your Headphones
Practice 10: Selecting the Right Microphone
Practice 11: Upgrading Your Software
Practice 12: Creating a Quiet, Happy Place
Practice 13: Eliminating Ambient Noise
Practice 14: One-Take Wonders
Practice 15: Multiplicity: Recording Multiple Takes

Practice 21: Editing Audio after Editing the Session
Practice 22: Taking Your Audio File into the Home Stretch
Practice 23: Creating a Perfect mp3 File
Practice 24: Enhanced Podcasting

Part IV: The Final Steps Before Episode #0
Practice 25: Creating and Editing ID3 Tags
Practice 26: Adding a Blog to Your Podcast
Practice 27: Validating Your RSS Feed
Practice 28: Submitting to Podcast Directories

Part V: Building Your Audience
Practice 29: Creating a Promotional Plan
Practice 30: Tell Me About It: Recording Promos and Quickcasts
Practice 31: Advertising to Attract Listeners
Practice 32: Networking with Other Podcasters and Bloggers
Practice 33: Spreading the Word

Informatique Et MAO 1 : Configurations MAO (1)

This file is the “digital sound” course material for the Sound Engineer (Régisseur Son), Multi-skilled Technician and MAO (computer-assisted music) training programs at GRIM-EDIF in Lyon. It is posted online only as an aid for those students and cannot be considered a course in itself. It uses illustrations collected over the years on the Internet, unfortunately without keeping the links. Please excuse me for this, or contact me... For any question: [email protected]

Part 4: Computing and MAO

1: MAO configurations (1)

Diagram labels: audio interface with analog inputs/outputs; stereo or surround monitoring speakers; microphone(s); computer; multitrack, editing, processing and synthesis software, plugins, etc. (+ CD/DVD/Blu-ray reader/burner); control surface; MIDI keyboard.

All operations are carried out in the computer:
- the audio interface must allow low latencies for instrumental playing, but it does not need many analog inputs/outputs
- the RAM must be able to hold many plugins (and large quantities of samples)
- the processor must be able to compute many processing operations in real time
- the storage space must be large and fast
- control peripherals are kept to a minimum, and the total cost is limited

2: MAO configurations (2)

Diagram labels: stereo or surround monitoring speakers; microphones; audio interface with many analog inputs/outputs; instruments; computer; effects; multitrack, editing and processing software, plugins (+

Command-Line Sound Editing Wednesday, December 7, 2016

21m.380 Music and Technology: Recording Techniques & Audio Production
Workshop: Command-line sound editing
Wednesday, December 7, 2016

1 Student presentation (pa1)

2 Subject evaluation

3 Group picture

4 Why edit sound on the command line?

Figure 1. Graphical representation of sound

• We are used to editing sound graphically.
• But for many operations, we do not actually need to see the waveform!

4.1 Potential applications

4.2 Advantages

• No visual belief system (what you hear is what you hear)
• Faster (no need to load GUIs or waveforms)
• Efficient batch-processing (applying editing sequence to multiple files)
• Self-documenting (simply save an editing sequence to a script)
• Imaginative (might give you different ideas of what’s possible)
• Way cooler (let’s face it)

4.3 Software packages

On Debian-based GNU/Linux systems (e.g., Ubuntu), install any of the below packages via apt, e.g., sudo apt-get install mplayer.

Table 1. Command-line programs for playing, converting, and editing media files

Program            .deb package          Function
mplayer            mplayer               Play any media file
sndfile-info       sndfile-programs      Metadata retrieval
sndfile-convert    sndfile-programs      Bit depth conversion
sndfile-resample   samplerate-programs   Resampling
lame               lame                  Mp3 encoder
flac               flac                  Flac encoder
oggenc             vorbis-tools          Ogg Vorbis encoder
ffmpeg             ffmpeg                Media conversion tool
mencoder           mencoder              Media conversion tool
sox                sox                   Sound editor
ecasound           ecasound              Sound editor

4.4 Real-world

Digidesign Coreaudio Driver Usage Guide

Digidesign CoreAudio Driver Usage Guide
Version 7.0 for Pro Tools|HD® and Pro Tools LE™ Systems on Mac OS X 10.4 (“Tiger”) Only

Introduction

This document covers two versions of the CoreAudio Driver, as follows:

Digidesign CoreAudio Driver: The Digidesign CoreAudio Driver works with Pro Tools|HD, Digi 002®, Digi 002 Rack™, and original Mbox™ systems only. See “Digidesign CoreAudio Driver” on page 1.

Mbox 2™ CoreAudio Driver: The Mbox 2 CoreAudio Driver works with Mbox 2 systems only. See “Mbox 2 CoreAudio Driver” on page 8.

Digidesign CoreAudio Driver

The Digidesign CoreAudio Driver is a multi-client, multichannel sound driver that allows CoreAudio-compatible applications to record and play back through the following Digidesign hardware:
• Pro Tools|HD audio interfaces
• Digi 002
• Digi 002 Rack
• Mbox

Full-duplex recording and playback of 24-bit audio is supported at sample rates up to 96 kHz, depending on your Digidesign hardware and CoreAudio client application.

The Digidesign CoreAudio Driver will provide up to 18 channels of input and output, depending on your Pro Tools system:
• Up to 8 channels of I/O with Pro Tools|HD systems
• Up to 18 channels of I/O with Digi 002 and Digi 002 Rack systems
• Up to 2 channels of I/O with Mbox systems

For Pro Tools|HD systems with more than one card and multiple I/Os, only the primary I/O connected to the first (core) card can be used with CoreAudio.

Check the Digidesign Web site (www.digidesign.com) for the latest third-party drivers for Pro Tools hardware, as well as current known issues.

Photo Editing

All recommendations are from: http://www.mediabistro.com/10000words/7-essential-multimedia-tools-and-their_b376

Photo Editing

Paid: Photoshop

Free:

Splashup: Photoshop may be the industry leader when it comes to photo editing and graphic design, but Splashup, a free online tool, has many of the same capabilities at a much cheaper price. Splashup has lots of the tools you’d expect to find in Photoshop and has a similar layout, which is a bonus for those looking to get started right away. Requires free registration; Flash-based interface; resize; crop; layers; flip; sharpen; blur; color effects; special effects

Fotoflexer/Photobucket: Crop; resize; rotate; flip; hue/saturation/lightness; contrast; various Photoshop-like effects

Photoshop Express: Requires free registration; 2 GB storage; crop; rotate; resize; auto correct; exposure correction; red-eye removal; retouching; saturation; white balance; sharpen; color correction; various other effects

Picnik: “Auto-fix”; rotate; crop; resize; exposure correction; color correction; sharpen; red-eye correction

Pic Resize: Resize; crop; rotate; brightness/contrast; conversion; other effects

Snipshot: Resize; crop; enhancement features; exposure, contrast, saturation, hue and sharpness correction; rotate; grayscale

rsizr: For quick cropping and resizing

EasyCropper: For quick cropping and resizing

Pixenate: Enhancement features; crop; resize; rotate; color effects

FlauntR: Requires free registration; resize; rotate; crop; various effects

LunaPic: Similar to Microsoft Paint; many features including crop, scale

Pro Audio for Print Layout 1 9/14/11 12:04 AM Page 356

PRO AUDIO: Large Diaphragm Microphones
www.BandH.com

C414 XLS
Accurate, beautifully detailed pickup of any acoustic instrument. Nine pickup patterns. Controls can be disabled for trouble-free use in live-sound applications and permanent installations. Three switchable different bass cut filters and three pre-attenuation levels. Peak Hold LED displays even shortest overload peaks. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC414XLS 949.99

C214
Cost-effective alternative to the dual-diaphragm C414, delivers the pristine sound reproduction of the classic condenser mic, in a single-pattern cardioid design. Features low-cut filter switch, 20dB pad switch and dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC214 399.00
#AKC214MP (Matched Stereo Pair) 899.00

C414 XLII
Unrivaled up-front sound is well-known for classic music recording or drum ambience miking. Nine pickup patterns enable the perfect setting for every application. Three switchable bass cut filters and three pre-attenuation levels. All controls can be easily disabled. Dynamic range of 152 dB. Includes case, pop filter, windscreen, and shockmount.
#AKC414XLII 999.00
#AKC414XLIIST (Matched Stereo Pair) 2099.00

Perception Series
True condenser mics, they deliver clear sound with accurate sonic detail. Switchable 20dB and switchable bass cut filter.

C2000B
High quality recording mic with elegantly styled die-cast metal housing and silver-gray finish, the C2000B has an almost ruler-flat response that

AT2020
Effectively isolates source signals while providing a fast transient response and high 144dB SPL

Datapond – Data Driven Music Builder

DataPond – Data Driven Music Builder

Over the past couple of months I have been attempting to teach myself to read music. This is something that I have been meaning to do for a long time. In high school the music teachers seemed to have no interest in students other than those with existing natural talent. The rest of us, they just taught appreciation of Bob Dylan. I can't stand Bob Dylan. So I never learned to read music or play an instrument.

Since then I have discovered the enjoyment of making stringed instruments such as the papier-mâché sitar, the Bad-Arse Mountain Dulcimer, a Windharp (inspired by this one), and a couple of other odd instruments. More recently I made a Lap Steel Slide guitar based on the electronics described in David Eric Nelson's great book “Junkyard Jam Band” and on Shane Speal's guide on building Lapsteels. I built mine out of waste pallet wood, and it connects to an old computer speaker system I pulled out of my junk box and turned to the purpose. It ended up sounding pretty good, to my ear at least.

Anyway, I have instruments-a-plenty, but didn't know how to play'em, so I decided to teach myself music. The biggest early challenge was reading music. I'm sure it would have been easier if a mathematician had been involved in designing the notation system. To teach myself, I decided that reading sheet music and transcribing it into the computer would be a good way of doing it.

Downloads PC Christophe Fantoni Downloads PC Tous Les Fichiers

Downloads PC, Christophe Fantoni. Downloads PC: all files

DirectX 8.1 for Windows 9X/Me
Essential for certain programs to run properly. It is best to have DirectX installed on your machine. This is the French version for Windows 95, 98 and Millennium. Also available for Windows NT and Windows 2000.
http://www.christophefantoni.com/fichier_pc.php?id=46

DirectX 8.1 for Windows NT/2000
Essential for certain programs to run properly. It is best to have DirectX installed on your machine. This is the French version for Windows NT and Windows 2000. Also available for Windows 95, 98 and Millennium.
http://www.christophefantoni.com/fichier_pc.php?id=47

Aspi Check
Detects whether an ASPI layer is present on your system and, if one is, reports its version number. Essential.
http://www.christophefantoni.com/fichier_pc.php?id=49

Aspi 4.60
This freeware installs an ASPI layer (version 4.60) on your operating system. Note that if you have trouble installing this original version, another version of this software layer is also available on the site. In addition, Windows XP has its own version of the ASPI layer, which you will also find for download on the site. Absolutely essential.
http://www.christophefantoni.com/fichier_pc.php?id=50

DVD2AVI 1.76 Fr
This is the very first version of the best frame server available on PC. Self-installing version, in French, supplied with its manual, also in French. All of it was translated or written by me.

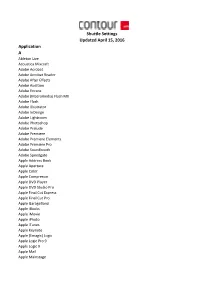

Shuttle-Settings-NEW-Marketing-V2-2

Shuttle Settings
Updated April 15, 2016

Application

A: Ableton Live; Acoustica Mixcraft; Adobe Acrobat; Adobe Acrobat Reader; Adobe After Effects; Adobe Audition; Adobe Encore; Adobe (Macromedia) Flash MX; Adobe Flash; Adobe Illustrator; Adobe InDesign; Adobe Lightroom; Adobe Photoshop; Adobe Prelude; Adobe Premiere; Adobe Premiere Elements; Adobe Premiere Pro; Adobe Soundbooth; Adobe Speedgate; Apple Address Book; Apple Aperture; Apple Color; Apple Compressor; Apple DVD Player; Apple DVD Studio Pro; Apple Final Cut Express; Apple Final Cut Pro; Apple GarageBand; Apple iBooks; Apple iMovie; Apple iPhoto; Apple iTunes; Apple Keynote; Apple (Emagic) Logic; Apple Logic Pro 9; Apple Logic X; Apple Mail; Apple Mainstage; Apple Motion; Apple Numbers; Apple Pages; Apple Quicktime Player; Apple Safari; Apple Soundtrack; Apple TextEdit; AppleWorks; Audacity; AutoDesk AutoCAD 2014; AutoDesk Maya; AutoDesk SketchBook; Avid Liquid 7; Avid Media Composer 5-7; Avid (Digidesign) ProTools; Avid Pro Tools 11; Avid MC Adrenaline; Avid Studio; Avid XDV Pro; Avid Xpress; Avid Xpress Pro

B: bias Deck; bias Peak; Bitwig Studio; Boris FX Keyframer; Boris FX Media 100 Suite; Boris FX Media Suite Acquire; Boris Graffiti Keyframer; Boris RED Keyframer; Boris RED 5

C: CakeWalk Guitar Tracks Pro; CakeWalk Home Studio 2000_XL; CakeWalk Music Creator; CakeWalk Plasma; CakeWalk Project 5; CakeWalk Sonar; CakeWalk Sonar Platinum; Camtasia Studio; Camtasia Studio 7; Canopus DV Edius; Canopus DV Rex Pro; Canopus DV Rex RT; Canopus DV Storm; Cappella; CINEMA 4D; Cockos Reaper; Corel VideoStudio Pro X5; Corel VideoStudio Pro; Cyberlink PowerDirector

D: Dartech

TN Production Assistant – V1.0.2.X User Notes

TN Production Assistant – v1.0.2.x User Notes

Introduction

This software is designed to assist Talking Newspapers in the preparation of a ‘copy-ready’ image of a multi-track Talking Newspaper or Talking Magazine production from individual track recordings created during a preceding ‘studio session’ using a stand-alone digital recorder or other digital recording facility such as a PC with digital recording software.

Prerequisites

A PC running a Windows operating system (XP or later).
Sufficient hard drive capacity to accommodate the software, working and archive storage (recommended minimum of 1GB).
Audio editing software (e.g. Adobe Audition, Goldwave or Audacity) installed.
‘Microsoft .NET 4 Environment’ installed (this will be automatically installed during the installation procedure below, provided an internet connection exists).

Installing the software

The application is installed from the Downloads Section of the Hamilton Sound website at http://hamiltonsound.co.uk. Please follow the instructions displayed on the website and as presented by the installer. In case of difficulty please contact Hamilton Sound at [email protected] for assistance and advice. Please also report any bugs detected in use or suggested improvements to the above email address.

On first installation a welcome screen will be presented, followed by the set-up screen. Check and, if required, adjust the set-up parameters from their pre-set values (see Set-Up below).

Configuration

Before first using this software it is important to ensure that the configuration parameters are set appropriately for your purposes. The default values of each parameter are shown in parentheses.

TIP – Hold the mouse cursor over any field for tips on what to enter there.