Spoken Language Translation for Polish



Recommended publications

-

Baby Girl Names Registered in 2018

Page 1 of 46 Baby Girl Names Registered in 2018 Frequency Name Frequency Name Frequency Name 8 Aadhya 1 Aayza 1 Adalaide 1 Aadi 1 Abaani 2 Adalee 1 Aaeesha 1 Abagale 1 Adaleia 1 Aafiyah 1 Abaigeal 1 Adaleigh 4 Aahana 1 Abayoo 1 Adalia 1 Aahna 2 Abbey 13 Adaline 1 Aaila 4 Abbie 1 Adallynn 3 Aaima 1 Abbigail 22 Adalyn 3 Aaira 17 Abby 1 Adalynd 1 Aaiza 1 Abbyanna 1 Adalyne 1 Aaliah 1 Abegail 19 Adalynn 1 Aalina 1 Abelaket 1 Adalynne 33 Aaliyah 2 Abella 1 Adan 1 Aaliyah-Jade 2 Abi 1 Adan-Rehman 1 Aalizah 1 Abiageal 1 Adara 1 Aalyiah 1 Abiela 3 Addalyn 1 Aamber 153 Abigail 2 Addalynn 1 Aamilah 1 Abigaille 1 Addalynne 1 Aamina 1 Abigail-Yonas 1 Addeline 1 Aaminah 3 Abigale 2 Addelynn 1 Aanvi 1 Abigayle 3 Addilyn 2 Aanya 1 Abiha 1 Addilynn 1 Aara 1 Abilene 66 Addison 1 Aaradhya 1 Abisha 3 Addisyn 1 Aaral 1 Abisola 1 Addy 1 Aaralyn 1 Abla 9 Addyson 1 Aaralynn 1 Abraj 1 Addyzen-Jerynne 1 Aarao 1 Abree 1 Adea 2 Aaravi 1 Abrianna 1 Adedoyin 1 Aarcy 4 Abrielle 1 Adela 2 Aaria 1 Abrienne 25 Adelaide 2 Aariah 1 Abril 1 Adelaya 1 Aarinya 1 Abrish 5 Adele 1 Aarmi 2 Absalat 1 Adeleine 2 Aarna 1 Abuk 1 Adelena 1 Aarnavi 1 Abyan 2 Adelin 1 Aaro 1 Acacia 5 Adelina 1 Aarohi 1 Acadia 35 Adeline 1 Aarshi 1 Acelee 1 Adéline 2 Aarushi 1 Acelyn 1 Adelita 1 Aarvi 2 Acelynn 1 Adeljine 8 Aarya 1 Aceshana 1 Adelle 2 Aaryahi 1 Achai 21 Adelyn 1 Aashvi 1 Achan 2 Adelyne 1 Aasiyah 1 Achankeng 12 Adelynn 1 Aavani 1 Achel 1 Aderinsola 1 Aaverie 1 Achok 1 Adetoni 4 Aavya 1 Achol 1 Adeyomola 1 Aayana 16 Ada 1 Adhel 2 Aayat 1 Adah 1 Adhvaytha 1 Aayath 1 Adahlia 1 Adilee 1 -

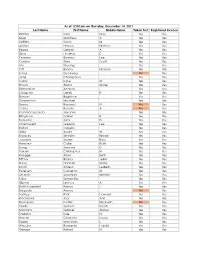

Last Name First Name Middle Name Taken Test Registered License

As of 12:00 am on Thursday, December 14, 2017 Last Name First Name Middle Name Taken Test Registered License Richter Sara May Yes Yes Silver Matthew A Yes Yes Griffiths Stacy M Yes Yes Archer Haylee Nichole Yes Yes Begay Delores A Yes Yes Gray Heather E Yes Yes Pearson Brianna Lee Yes Yes Conlon Tyler Scott Yes Yes Ma Shuang Yes Yes Ott Briana Nichole Yes Yes Liang Guopeng No Yes Jung Chang Gyo Yes Yes Carns Katie M Yes Yes Brooks Alana Marie Yes Yes Richardson Andrew Yes Yes Livingston Derek B Yes Yes Benson Brightstar Yes Yes Gowanlock Michael Yes Yes Denny Racheal N No Yes Crane Beverly A No Yes Paramo Saucedo Jovanny Yes Yes Bringham Darren R Yes Yes Torresdal Jack D Yes Yes Chenoweth Gregory Lee Yes Yes Bolton Isabella Yes Yes Miller Austin W Yes Yes Enriquez Jennifer Benise Yes Yes Jeplawy Joann Rose Yes Yes Harward Callie Ruth Yes Yes Saing Jasmine D Yes Yes Valasin Christopher N Yes Yes Roegge Alissa Beth Yes Yes Tiffany Briana Jekel Yes Yes Davis Hannah Marie Yes Yes Smith Amelia LesBeth Yes Yes Petersen Cameron M Yes Yes Chaplin Jeremiah Whittier Yes Yes Sabo Samantha Yes Yes Gipson Lindsey A Yes Yes Bath-Rosenfeld Robyn J Yes Yes Delgado Alonso No Yes Lackey Rick Howard Yes Yes Brockbank Taci Ann Yes Yes Thompson Kaitlyn Elizabeth No Yes Clarke Joshua Isaiah Yes Yes Montano Gabriel Alonzo Yes Yes England Kyle N Yes Yes Wiman Charlotte Louise Yes Yes Segay Marcinda L Yes Yes Wheeler Benjamin Harold Yes Yes George Robert N Yes Yes Wong Ann Jade Yes Yes Soder Adrienne B Yes Yes Bailey Lydia Noel Yes Yes Linner Tyler Dane Yes Yes -

ABSTRACT HAMMERSEN, LAUREN ALEXANDRA MICHELLE. The

ABSTRACT HAMMERSEN, LAUREN ALEXANDRA MICHELLE. The Control of Tin in Southwestern Britain from the First Century AD to the Late Third Century AD. (Under the direction of Dr. S. Thomas Parker.) An accurate understanding of how the Romans exploited mineral resources of the empire is an important component in determining the role Romans played in their provinces. Tin, both because it was extremely rare in the ancient world and because it remained very important from the first to third centuries AD, provides the opportunity to examine that topic. The English counties of Cornwall and Devon were among the few sites in the ancient world where tin was found. Archaeological evidence and ancient historical sources prove tin had been mined extensively in that region for more than 1500 years before the Roman conquest. During the period of the Roman occupation of Britain, tin was critical to producing bronze and pewter, which were used extensively for both functional and decorative items. Despite the knowledge that tin was found in very few places, that tin had been mined in the southwest of Britain for centuries before the Roman invasion, and that tin remained essential during the period of the occupation, for more than eighty years it has been the opinion of historians such as Aileen Fox and Sheppard Frere that the extensive tin mining of the Bronze Age was discontinued in Roman Britain until the late third or early fourth centuries. The traditional belief has been that the Romans were instead utilizing the tin mines of Spain (i.e., the Roman province of Iberia). -

Fee-Alexandra Haase the Concept of 'Rhetoric' in a Linguistic Perspective: Historical, Systematic, and Theoretical Aspects O

Fee-Alexandra Haase The Concept of ‘Rhetoric’ in a Linguistic Perspective: Historical, Systematic, and Theoretical Aspects of Rhetoric as Formal Language Usage Res Rhetorica nr 1, 27-45 2014 FEE-ALEXANDRA HAASE UNIVERSITY OF NIZWA, OMAN [email protected] The Concept of ‘Rhetoric’ in a Linguistic Perspective: Historical, Systematic, and Theoretical Aspects of Rhetoric as Formal Language Usage Abstract Rhetoric is commonly known as an old discipline for the persuasive usage of language in linguistic communication acts. In this article we examine the concept ‘rhetoric’ from 1. the diachronic perspective of historical linguistics showing that the concept ‘rhetoric’ is linguistically present in various Indo-European roots and exists across several language families and 2. the theoretical perspective towards the concept ‘rhetoric’ with a contemporary defi nition and model in the tradition of rhetorical theory. The historical and systematic approaches allow us to describe the features of the conceptualization of ‘rhetoric’ as the process in theory and empirical language history. The aim of this article is a formal description of the concept ‘rhetoric’ as a result of a theoretical process of this conceptualization, the rhetorization, and the historical documentation of the process of the emergence of the concept ‘rhetoric’ in natural languages. We present as the concept ‘rhetoric’ a specifi c mode of linguistic communication in ‘rhetoricized’ expressions of a natural language. Within linguistic communicative acts ‘rhetoricized language’ is a process of forming structured linguistic expressions. Based on traditional rhetorical theory we will in a case study present ‘formalization,’ ‘structuralization,’ and ‘symbolization’ as the three principle processes, which are parts of this process of rhetorization in rhetorical theory. -

2017 Purge List LAST NAME FIRST NAME MIDDLE NAME SUFFIX

2017 Purge List LAST NAME FIRST NAME MIDDLE NAME SUFFIX AARON LINDA R AARON-BRASS LORENE K AARSETH CHEYENNE M ABALOS KEN JOHN ABBOTT JOELLE N ABBOTT JUNE P ABEITA RONALD L ABERCROMBIA LORETTA G ABERLE AMANDA KAY ABERNETHY MICHAEL ROBERT ABEYTA APRIL L ABEYTA ISAAC J ABEYTA JONATHAN D ABEYTA LITA M ABLEMAN MYRA K ABOULNASR ABDELRAHMAN MH ABRAHAM YOSEF WESLEY ABRIL MARIA S ABUSAED AMBER L ACEVEDO MARIA D ACEVEDO NICOLE YNES ACEVEDO-RODRIGUEZ RAMON ACEVES GUILLERMO M ACEVES LUIS CARLOS ACEVES MONICA ACHEN JAY B ACHILLES CYNTHIA ANN ACKER CAMILLE ACKER PATRICIA A ACOSTA ALFREDO ACOSTA AMANDA D ACOSTA CLAUDIA I ACOSTA CONCEPCION 2/23/2017 1 of 271 2017 Purge List ACOSTA CYNTHIA E ACOSTA GREG AARON ACOSTA JOSE J ACOSTA LINDA C ACOSTA MARIA D ACOSTA PRISCILLA ROSAS ACOSTA RAMON ACOSTA REBECCA ACOSTA STEPHANIE GUADALUPE ACOSTA VALERIE VALDEZ ACOSTA WHITNEY RENAE ACQUAH-FRANKLIN SHAWKEY E ACUNA ANTONIO ADAME ENRIQUE ADAME MARTHA I ADAMS ANTHONY J ADAMS BENJAMIN H ADAMS BENJAMIN S ADAMS BRADLEY W ADAMS BRIAN T ADAMS DEMETRICE NICOLE ADAMS DONNA R ADAMS JOHN O ADAMS LEE H ADAMS PONTUS JOEL ADAMS STEPHANIE JO ADAMS VALORI ELIZABETH ADAMSKI DONALD J ADDARI SANDRA ADEE LAUREN SUN ADKINS NICHOLA ANTIONETTE ADKINS OSCAR ALBERTO ADOLPHO BERENICE ADOLPHO QUINLINN K 2/23/2017 2 of 271 2017 Purge List AGBULOS ERIC PINILI AGBULOS TITUS PINILI AGNEW HENRY E AGUAYO RITA AGUILAR CRYSTAL ASHLEY AGUILAR DAVID AGUILAR AGUILAR MARIA LAURA AGUILAR MICHAEL R AGUILAR RAELENE D AGUILAR ROSANNE DENE AGUILAR RUBEN F AGUILERA ALEJANDRA D AGUILERA FAUSTINO H AGUILERA GABRIEL -

Queen Alexandra-Complete-Revised

Queen Alexandra: The Anomaly of a Sovereign Jewish Queen in the Second Temple Period Thesis submitted for the degree of “Doctor of Philosophy” by Etka Liebowitz Submitted to the Senate of the Hebrew University of Jerusalem November 2011 This work was carried out under the supervision of: Professor Daniel R. Schwartz Acknowledgements This dissertation commenced almost a decade ago with a conversation I had with the late Professor Hanan Eshel, of blessed memory. His untimely passing was a great loss to all. It continued at the Hebrew University of Jerusalem, where I was privileged to study with the leading scholars in the field of Jewish History. First and foremost, I wish to thank my supervisor, Professor Daniel Schwartz, for the generous amount of time and effort he invested in seeing this dissertation to fruition. I learned much from his constructive suggestions and valuable insights, from our meetings, and from his outstanding classes on Josephus at the Hebrew University. I would also like to express my deep gratitude to the other members of my supervisory committee for their guidance, encouragement and useful critiques: Professor Lee Levine, who initially served as my supervisor until his retirement, and Professor Tal Ilan, whose pioneering feminist studies on the topic of Queen Alexandra led the way for my research. Professor Ilan devoted much time to this project, and has become a valued colleague, mentor and friend. My grateful thanks are also extended to Rivkah Fishman-Duker and Dr. Shaul Bauman for their comments, suggestions and invaluable assistance with various chapters. Finally, I wish to express my gratitude to my beloved family for their understanding, encouragement and support throughout my studies – my husband, Moshe, my children Shlomo, Tzipi and Naomi, and my mother, Rifka, who was an independent (and anomalous) woman many years before the feminist movement. -

Language Names and Norms in Bosnia and Herzegovina by Kirstin J. Swagman a Dissertation Submitted in Partial Fulfillment Of

Language Names and Norms in Bosnia and Herzegovina by Kirstin J. Swagman A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Anthropology) in the University of Michigan 2011 Doctoral Committee Associate Professor Alaina M. Lemon, Chair Professor Judith T. Irvine Professor Sarah G. Thomason Associate Professor Barbra A. Meek Acknowledgements This dissertation owes its existence to countless people who provided intellectual, emotional, and financial support to me during the years I spent preparing for, researching, and writing it. To my dear friends and colleagues at the University of Michigan, I owe a debt that can hardly be put into words. To the ladies at Ashley Mews, who were constant interlocutors in my early engagements with anthropology and remained steady sources of encouragement, inspiration, and friendship throughout my fieldwork and writing, this dissertation grew out of conversations we had in living rooms, coffee shops, and classrooms. I owe the greatest thanks to my Bosnian interlocutors, who graciously took me into their homes and lives and tolerated my clumsy questions. Without them, this dissertation would not exist. I want to thank the many teachers and students who shared the details of their professional and personal lives with me, and often went above and beyond by befriending me and making time in their busy schedules to explain the seemingly obvious to a curious anthropologist. I owe the greatest debt to Luljeta, Alexandra, Sanjin, and Mirzana, who all offered support, encouragement, and insight in countless ways large and small. Daniel, Peter, Tony, Marina, and Emira were also great sources of support during my fieldwork. -

Alexandra Domike Blackman

ALEXANDRA DOMIKE BLACKMAN Contact Stanford University email: [email protected] Information Department of Political Science web: www.alexandrablackman.com 616 Serra Mall, Encina Hall West Stanford, CA 94305 Education Stanford University, Stanford, CA Ph.D. Political Science Expected: 2019 The American University in Cairo, Cairo, Egypt Full-year Fellow, Center for Arabic Study Abroad (CASA) 2010-2011 Tufts University, Medford, MA B.A. International Relations (Minor in Arabic) 2010 Honors: Summa Cum Laude, Phi Beta Kappa Dissertation The Politicization of Faith: Colonialism, education, and political identity in Tunisia Project Abstract: My dissertation examines two main questions: How did French colonial rule transform the religious endowment (waqf) and educational systems in Tunisia? What impact did state control over these local institutions have on political mobilization in the run-up to independence? I explore how French colonization created demand for new French educational institutions and undermined the existing waqf-based education system. The transformation of these local institutions had important implications for the later appearance of local secular-nationalist or Islamic-nationalist mobilization around the moment of independence. The variation in local political identities persisted into the post- independence period, creating the social bases of the main nationalist party (the Neo-Destour and later the Socialist Destourian Party), and helps explain contemporary partisanship in post-revolutionary Tunisia. Committee: Lisa Blaydes (co-chair), Anna Grzymala-Busse, Kenneth Scheve (co-chair) Peer-Reviewed 2018. \Religion and Foreign Aid." Politics & Religion 11: 522-552. Publications 2018. \Voting in Transition: Evidence from Egypt's 2012 Presidential Election." Middle East Law & Governance 10(1): 25-58. (with Caroline Abadeer and Scott Williamson). -

Alexandra Domike Blackman

ALEXANDRA DOMIKE BLACKMAN Contact Cornell University email: [email protected] Information Department of Government, White Hall web: www.alexandrablackman.com Ithaca, New York Academic Cornell University, Ithaca, New York Appointments Assistant Professor, Department of Government 2020- New York University | Abu Dhabi, Abu Dhabi, United Arab Emirates Post-Doctoral Associate, Social Sciences Division 2019-2020 Education Stanford University, Stanford, California Ph.D. Political Science 2019 The American University in Cairo, Cairo, Egypt Full-year Fellow, Center for Arabic Study Abroad (CASA) 2010-2011 Tufts University, Medford, Massachusetts B.A. International Relations (Minor in Arabic) 2010 Honors: Summa Cum Laude, Phi Beta Kappa Book Project The Politicization of Faith: Settler Colonialism, Education, and Political Identity in Tunisia Peer-Reviewed 2019. \Gender Stereotypes, Political Leadership, and Voting Behavior in Tunisia." Political Behavior Publications (with Marlette Jackson). 2018. \Religion and Foreign Aid." Politics & Religion 11: 522-552. 2018. \Voting in Transition: Evidence from Egypt's 2012 Presidential Election." Middle East Law & Governance 10(1): 25-58. (with Caroline Abadeer and Scott Williamson). Book Reviews Book review of: Finding Faith in Foreign Policy: Religion and American Diplomacy in a Postsecular World and Religious Freedom in Islam: The Fate of a Universal Human Right in the Muslim World Today. Perspectives on Politics, Forthcoming. Policy Briefs & \Taking space seriously: the use of geographic methods in the study of MENA." APSA MENA Politics Other Writing Section Newsletter 4(1) (with Lama Mourad), Spring 2021. \Applications of Automated Text Analysis in the Middle East and North Africa." APSA MENA Politics Section Newsletter 2(2), Fall 2019. \Prison reform and drug decriminalization in Tunisia." Social Policy in the Middle East North Africa, Project on Middle East Political Science (POMEPS) Studies 31, October 2018. -

Language Classification and Manipulation in Romania and Moldova Chase Faucheux Louisiana State University and Agricultural and Mechanical College, [email protected]

Louisiana State University LSU Digital Commons LSU Master's Theses Graduate School 2006 Language classification and manipulation in Romania and Moldova Chase Faucheux Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_theses Part of the Linguistics Commons Recommended Citation Faucheux, Chase, "Language classification and manipulation in Romania and Moldova" (2006). LSU Master's Theses. 1405. https://digitalcommons.lsu.edu/gradschool_theses/1405 This Thesis is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Master's Theses by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. LANGUAGE CLASSIFICATION AND MANIPULATION IN ROMANIA AND MOLDOVA A Thesis Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Master of Arts in The Interdepartmental Program in Linguistics By Chase Faucheux B.A., Tulane University, 2004 August 2006 TABLE OF CONTENTS ABSTRACT ………………………………………………………………….……...…..iii 1 INTRODUCTION: AIMS AND GOALS …………………………………….……….1 2 LINGUISTIC CLASSIFICATION ……………………………………………………3 2.1 Genealogical Classification ……...………………………………………………4 2.2 Language Convergence and Areal Classification ………………………….…….9 2.3 Linguistic Typology ………………………………………………………….…16 3 THE BALKAN SPRACHBUND -

Fee-Alexandra Haase1

Revista de Divulgação Científica em Língua Portuguesa, Linguística e Literatura Ano 06 n.13 - 2º Semestre de 2010 - ISSN 1807-5193 ‘THE STATE OF THE ART’ AS AN EXAMPLE FOR A TEXTUAL LINGUISTIC ‘GLOBALIZATION EFFECT’. Code Switching, Borrowing, and Change of Meaning as Conditions of Cross- cultural Communication. Fee-Alexandra Haase1 ABSTRACT: In general, code switching is a linguistic term referring to using more than one language or variety in conversation. Bilinguals have the ability to use elements of both languages, when conversing with another. Here code switching is the syntactically appropriate use of multiple varieties of languages. Code switching can occur at the level of single words, terms, and limited phrases and can reach extension of the replacement of whole grammatical units at the level of full sentences. In the simplest definition, we can define code switching as the change of a set of a lexicon of an oral or written communication unit where one language is added by elements of a language. We will discuss the functions of the implementation of a second language in the langue of a communicated writing with the example of the expression ´state of the art´ used both in English texts and other languages showing that the borrowed expression is a bearer of encoded semiotic meanings with a specific set of meanings connected, when used in writings of other languages. KEYWORDS: code switching, bilinguals, second language I Introduction Research Positions In semiotics a code is a set of conventions or sub-codes used in order to communicate meaning. The most common is language. -

Chapter 3 Compounding in Polish and the Absence of Phrasal Compounding Bogdan Szymanek John Paul II Catholic University of Lublin

Chapter 3 Compounding in Polish and the absence of phrasal compounding Bogdan Szymanek John Paul II Catholic University of Lublin In Polish, as in many other languages, phrasal compounds of the type found in English do not exist. Therefore, the following questions are worth considering: Why are phrasal compounds virtually unavailable in Polish? What sort of struc- tures function in Polish as equivalents of phrasal compounds? Are there any other types of structures that (tentatively) could be regarded as “phrasal compounds”, depending on the definition of the concept in question? Discussion of these issues is preceded by an outline of nominal compounding in Polish. Another question addressed in the article is the following: How about phrasal compounds in other Slavic languages? A preliminary investigation that I have conducted reveals that, just like in Polish, phrasal compounds are not found in other Slavic languages. The only exception seems to be Bulgarian where a new word-formation pattern is on the rise, which ultimately derives from English phrasal compounds. 1 Introduction In the Polish language, there are no phrasal compounds comparable to English forms like a scene-of-the-crime photograph etc., with a non-head phrase-level con- stituent. Instead, phrases are used. For instance: (1) a. a scene-of-the-crime photograph fotografia (z) miejsca przestępstwa photograph (from) scene.gen crime.gen ‘photograph from/of the scene of the crime’ Bogdan Szymanek. Compounding in Polish and the absence of phrasal com- pounding. In Carola Trips & Jaklin Kornfilt (eds.), Further investigations into the nature of phrasal compounding, 49–79. Berlin: Language Science Press.