Direct Integration of Collaboration Into Broadcast Content Formats

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Man at the Crossroads, Looking with Hope and High Vision to a New and Better Future

Man at the Crossroads, Looking with Hope and High Vision to a New and Better Future, by Benjamin Max Wood. B.F.A., Digital Media, San Francisco Art Institute, 2003. Submitted to the Department of Architecture on May 11, 2007, in Partial Fulfillment of the Requirements for the Degree of Master of Science in Visual Studies at the Massachusetts Institute of Technology, June 2007. © 2007 Benjamin Max Wood. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in any medium now known or hereafter created. Signature of Author: Department of Architecture, May 11, 2007. Certified by: Krzysztof Wodiczko, Professor of Visual Arts, Thesis Supervisor.

ABSTRACT: I am an artist. My work is doing the research, bringing together perspectives, ideas, and people, and expressing something that will remain silent if I do not say it. 73 years ago an artist, Diego Rivera, was trying to say something and he was abruptly interrupted; perhaps the story is not finished. Because of past work, my experiences in California, and my exposure to Rivera, I have become fascinated with the many issues behind his art, behind the murals. The thesis is a contemporary reawakening of a landmark moment in art history, when Nelson Rockefeller covered and destroyed a Diego Rivera mural.

Popmusik Musikgruppe & Musisk Kunstner Listen

List of pop music groups and musical artists
Stacy https://da.listvote.com/lists/music/artists/stacy-3503566/albums
The Idan Raichel Project https://da.listvote.com/lists/music/artists/the-idan-raichel-project-12406906/albums
Mig 21 https://da.listvote.com/lists/music/artists/mig-21-3062747/albums
Donna Weiss https://da.listvote.com/lists/music/artists/donna-weiss-17385849/albums
Ben Perowsky https://da.listvote.com/lists/music/artists/ben-perowsky-4886285/albums
Ainbusk https://da.listvote.com/lists/music/artists/ainbusk-4356543/albums
Ratata https://da.listvote.com/lists/music/artists/ratata-3930459/albums
Labvēlīgais Tips https://da.listvote.com/lists/music/artists/labv%C4%93l%C4%ABgais-tips-16360974/albums
Deane Waretini https://da.listvote.com/lists/music/artists/deane-waretini-5246719/albums
Johnny Ruffo https://da.listvote.com/lists/music/artists/johnny-ruffo-23942/albums
Tony Scherr https://da.listvote.com/lists/music/artists/tony-scherr-7823360/albums
Camille Camille https://da.listvote.com/lists/music/artists/camille-camille-509887/albums
Idolerna https://da.listvote.com/lists/music/artists/idolerna-3358323/albums
Place on Earth https://da.listvote.com/lists/music/artists/place-on-earth-51568818/albums
In-Joy https://da.listvote.com/lists/music/artists/in-joy-6008580/albums
Gary Chester https://da.listvote.com/lists/music/artists/gary-chester-5524837/albums
Hilde Marie Kjersem https://da.listvote.com/lists/music/artists/hilde-marie-kjersem-15882072/albums

The Equinox Vol. I No. 9

A Collection of Sacred Magick | The Esoteric Library | www.sacred-magick.com

[This page is reserved for Official Pronouncements by the Chancellor of the A∴A∴]

Persons wishing for information, assistance, further interpretation, etc., are requested to communicate with THE CHANCELLOR OF THE A∴A∴, c/o THE EQUINOX, 33 Avenue Studios, 76 Fulham Road, South Kensington, S.W. Telephone: 2632, KENSINGTON, or to call at that address by appointment. A representative will be there to meet them.

THE Chancellor of the A∴A∴ wishes to warn readers of THE EQUINOX against accepting instructions in his name from an ex-Probationer, Captain J.F.C. Fuller, whose motto was “Per Ardua.” This person never advanced beyond the Degree of Probationer, never sent in a record, and has presumably neither performed practices nor obtained results. He has not, and never has had, authority to give instructions in the name of the A∴A∴.

THE Chancellor of the A∴A∴ considers it desirable to make a brief statement of the financial position, as the time has now arrived to make an effort to spread the knowledge to the ends of the earth. The expenses of the propaganda are roughly estimated as follows:
Maintenance of Temple, and service: £200 p.a.
Publications: £200 p.a.
Advertising, electrical expenses, etc.: £200 p.a.
Maintenance of an Hermitage where poor Brethren may make retirements: £200 p.a.
Total: £800 p.a.

As in the past, the persons responsible for the movement will give the whole of their time and energy, as well as their worldly wealth, to the service of the A∴A∴. Unfortunately, the sums at their disposal do not at present suffice for the contemplated advance, and the Chancellor consequently appeals for assistance to those who have found in the instructions of the A∴A∴ a sure means to the end they sought.

Tallinn Annual Report 2014

Tallinn annual report 2014

CONTENTS
Foreword by the Mayor
Tallinn - the capital of the Republic of Estonia: Facts; Population; Economy; Managing the city
Tallinn has a productive economy
Tallinn and its diverse array of activities and experiences
Educated, skilled, and open Tallinn: Education; Sports; Youth work
Tallinn resident cared for, protected, and helped - safe Tallinn: Social welfare; Safety; Health
Tallinn as a cosy, inspiring, and environmentally sustainable city space: City transport and road safety; Landscaping and property maintenance; City economy; City planning; Water and sewerage; Environmental protection
Overview of budget implementation of Tallinn city in 2014: Income; Operating costs; Investment activity; Financing activities and cash flow

FOREWORD BY THE MAYOR

In their dreams and wishes, people must move faster than life dictates because this is when we desire to go on and only then we shall one day have the chance to ensure that something has been completed again.

Tallinn is accustomed to setting big goals for itself - taking responsibility for ensuring that its residents receive high quality services and … tion of the Haabersti intersection in cooperation with the private sector. Concurrently with the construction of new tram tracks, the section of … proven itself to be a stable city and shelter for many people who would have otherwise been forced to search abroad for jobs.

Karaoke Mietsystem Songlist

Karaoke Mietsystem Songlist. A karaoke system from Showtronic Solutions AG in cooperation with Karafun. Karaoke catalog updated on: 13/10/2020. Sing online at www.karafun.de. Complete catalog.

TOP 50
Shallow - A Star is Born
Take Me Home, Country Roads - John Denver
Skandal im Sperrbezirk - Spider Murphy Gang
Griechischer Wein - Udo Jürgens
Verdammt, Ich Lieb' Dich - Matthias Reim
Dancing Queen - ABBA
Dance Monkey - Tones and I
Breaking Free - High School Musical
In The Ghetto - Elvis Presley
Angels - Robbie Williams
Hulapalu - Andreas Gabalier
Someone Like You - Adele
99 Luftballons - Nena
Tage wie diese - Die Toten Hosen
Ring of Fire - Johnny Cash
Lemon Tree - Fool's Garden
Ohne Dich (schlaf' ich heut' nacht nicht ein) - Münchener Freiheit
You Are the Reason - Calum Scott
Perfect - Ed Sheeran
Stand by Me - Ben E. King
Im Wagen Vor Mir - Henry Valentino And Uschi
Let It Go - Idina Menzel
Can You Feel The Love Tonight - The Lion King
Atemlos durch die Nacht - Helene Fischer
Roller - Apache 207
Someone You Loved - Lewis Capaldi
I Want It That Way - Backstreet Boys
Über Sieben Brücken Musst Du Gehn - Peter Maffay
Summer Of '69 - Bryan Adams
Cordula grün - Die Draufgänger
Tequila - The Champs
...Baby One More Time - Britney Spears
All of Me - John Legend
Barbie Girl - Aqua
Chasing Cars - Snow Patrol
My Way - Frank Sinatra
Hallelujah - Alexandra Burke
Aber Bitte Mit Sahne - Udo Jürgens
Bohemian Rhapsody - Queen
Wannabe - Spice Girls
Schrei nach Liebe - Die Ärzte
Can't Help Falling In Love - Elvis Presley
Country Roads - Hermes House Band
Westerland - Die Ärzte
Warum hast du nicht nein gesagt - Roland Kaiser
Ich war noch niemals in New York - Ich War Noch Niemals In New York
Marmor, Stein Und Eisen Bricht - Drafi Deutscher
Zombie - The Cranberries
Ich wollte nie erwachsen sein (Nessajas Lied) - …
Don't Stop Believing - Journey

EXPLICIT: May contain lyrics that are not suitable for children and adolescents.

Svenska Låtar - Sorterade Efter Artist

Swedish songs, sorted by artist
A-teens - Promised myself
Abba - Mama Mia
Ace of base - Life is a flower
Afro Dite - Never let it go
After Dark - La Dolce Vita
Agnes - On and on
Agnes - Right here right now
Ainbusk Singers - Älska mig
Alcazar - Alcastar
Alcazar - Not a sinner nor a saint
Amanda Jensen - Amarula Tree
Amy Diamond - Shooting Star
Amy Diamond - Whats in it for me
Amy Diamond - Welcome to the city
Angel - Sommaren i city
Anita Lindbom - Sånt e livet
Ann-Christine Bärnsten - Ska vi plocka körsbär i min trädgård
Anna Bergendahl - This is my life
Anna Book - ABC
Annelie Rydé - En sån karl
Arne Quick - Rosen
Arvingarna - Eloise
Attack - Ooa hela natten
Barbados - Allt som jag ser
Barbados - Bye Bye
Barbados - Kom
Jill Johnsson - Crazy in love
Jimmy Jansson - Flickan i det blå
Jimmy Jansson - Vi kan Gunga
Johan Becker - Let me love you
Jokkmokks-Jocke - GulliGullan
Just D med Thorleifs - Tre Gringos
Kajsa Stina Åkerström - Av längtan till dig
Kicki Danielsson - Bra vibrationer
Kicki Danielsson - Dag efter dag
Lasse Berghagen - Stockholm i mitt hjärta
Lasse Berghagen - Teddybjörnen Fredriksson
Lasse Holm & Monica Törnell - Är det det här du kallar kärlek
Lena Ph - Dansa I neon
Lena Ph - Det gör ont
Lena Ph - Kärleken är evig
Lena Ph - Lena Anthem
Lill Babs - En tuff brud i lyxförpacking
Lill Babs - Leva livet
Lill Lindfors - Du är den ende
Lilli & Sussi - Oh Mama
Linda Bengtzing - Alla Flickor
Linda Bengtzing - Hur svårt kan det va
Linda Bengtzing - E det fel på mig
Linda Bengtzing & Velvet - Victorious
Lisa Ekdahl - Vem vet

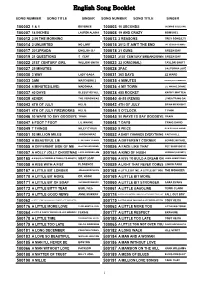

English Song Booklet

SONG NUMBER, SONG TITLE, SINGER
100002 1 & 1 BEYONCE
100003 10 SECONDS JAZMINE SULLIVAN
100007 18 INCHES LAUREN ALAINA
100008 19 AND CRAZY BOMSHEL
100012 2 IN THE MORNING
100013 2 REASONS TREY SONGZ,TI
100014 2 UNLIMITED NO LIMIT
100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ
100017 2012PRADA ENGLISH DJ
100018 21 GUNS GREEN DAY
100019 21 QUESTIONS 5 CENT
100021 21ST CENTURY BREAKDOWN GREEN DAY
100022 21ST CENTURY GIRL WILLOW SMITH
100023 22 (ORIGINAL) TAYLOR SWIFT
100027 25 MINUTES
100028 2PAC CALIFORNIA LOVE
100030 3 WAY LADY GAGA
100031 365 DAYS ZZ WARD
100033 3AM MATCHBOX 2
100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE
100034 4 MINUTES(LIVE) MADONNA
100036 4 MY TOWN LIL WAYNE,DRAKE
100037 40 DAYS BLESSTHEFALL
100038 455 ROCKET KATHY MATTEA
100039 4EVER THE VERONICAS
100040 4H55 (REMIX) LYNDA TRANG DAI
100043 4TH OF JULY KELIS
100042 4TH OF JULY BRIAN MCKNIGHT
100041 4TH OF JULY FIREWORKS KELIS
100044 5 O'CLOCK T PAIN
100046 50 WAYS TO SAY GOODBYE TRAIN
100045 50 WAYS TO SAY GOODBYE TRAIN
100047 6 FOOT 7 FOOT LIL WAYNE
100048 7 DAYS CRAIG DAVID
100049 7 THINGS MILEY CYRUS
100050 9 PIECE RICK ROSS,LIL WAYNE
100051 93 MILLION MILES JASON MRAZ
100052 A BABY CHANGES EVERYTHING FAITH HILL
100053 A BEAUTIFUL LIE 3 SECONDS TO MARS
100054 A DIFFERENT CORNER GEORGE MICHAEL
100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND
100056 A FACE LIKE THAT PET SHOP BOYS
100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM
500164 A KIND OF HUSH HERMAN'S HERMITS
500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF
500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG
100058 A KISS WITH A FIST FLORENCE
100059 A LIGHT THAT NEVER COMES LINKIN PARK
500167 A LITTLE BIT LONGER JONAS BROTHERS
500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES
500170 A LITTLE BIT MORE DR.

Inte Bara Bögarnas Fest” En Queer Kulturstudie Av Melodifestivalen

”Inte bara bögarnas fest”: En queer kulturstudie av Melodifestivalen ("Not just the gays' party": A queer cultural study of Melodifestivalen). Department of Ethnology, History of Religions and Gender Studies. Degree project, 30 credits. Master's Programme in Gender Studies, 120 credits. Spring term 2018. Author: Olle Jilkén. Supervisor: Kalle Berggren.

Abstract: The thesis examines how the TV programme Melodifestivalen relates to its gay-cultural status by studying the programme's heteronormative framing and its portrayal of queerness. The theoretical framework is grounded in representation theory, cultural studies, gay studies and queer theory. The analysis finds that the heteronormative framing presents heterosexuality as a given norm in the programme. This is shown, among other things, by an implicit heterosexual male gaze that sexualises women's bodies and treats undressed men, queerness and femininity as something comic. This complicates the picture given by earlier studies of song contests as mainly invested in and accepting of queer subjects. The queer representation consists primarily of gay men. Gay male identity is not rendered as uniform, and it breaks with heteronormative ideals to varying degrees. Some of the ways in which gay male identity is portrayed are as artificial, feminine, non-monogamous, childish and invested in schlager. The analysis points out that the programme has queer content despite its commercial presentation and normative ideals.

Keywords: Melodifestivalen, schlager, gender studies, gay culture, gay studies, heteronormativity.

Table of contents: 1. A loved and hated "homophile festival"

Eurovision Karaoke

Eurovision Karaoke

Albania
ALB 06 Zjarr e ftohtë
ALB 07 Hear My Plea
ALB 10 It's All About You
ALB 12 Suus
ALB 13 Identitet
ALB 14 Hersi - One Night's Anger
ALB 15 I’m Alive
ALB 16 Fairytale

Andorra
AND 07 Salvem el món

Armenia
ARM 07 Anytime you need
ARM 08 Qele Qele
ARM 09 Nor Par (Jan Jan)
ARM 10 Apricot Stone
ARM 11 Boom Boom
ARM 13 Lonely Planet
ARM 14 Aram Mp3 - Not Alone
ARM 15 Face the Shadow
ARM 16 LoveWave

Azerbaijan
AZE 08 Day after day
AZE 09 Always
AZE 14 Start The Fire
AZE 15 Hour of the Wolf
AZE 16 Miracle

Austria
AUT 89 Nur ein Lied
AUT 90 Keine Mauern mehr
AUT 04 Du bist
AUT 07 Get a life - get alive
AUT 11 The Secret Is Love
AUT 12 Woki Mit Deim Popo
AUT 13 Shine
AUT 14 Conchita Wurst - Rise Like a Phoenix
AUT 15 I Am Yours
AUT 16 Loin d’Ici

Australia
AUS 15 Tonight Again
AUS 16 Sound of Silence

Belgium
BEL 86 J'aime la vie
BEL 87 Soldiers of love
BEL 89 Door de wind
BEL 98 Dis oui
BEL 06 Je t'adore
BEL 12 Would You?
BEL 15 Rhythm Inside
BEL 16 What’s the Pressure

Bosnia and Herzegovina
BOS 99 Putnici
BOS 06 Lejla
BOS 07 Rijeka bez imena
BOS 08 D Pokušaj DUET VERSION
BOS 08 S Pokušaj
BOS 11 Love In Rewind
BOS 12 Korake Ti Znam
BOS 16 Ljubav Je

United Kingdom
UKI 83 I'm never giving up
UKI 96 Ooh aah..
UKI 10 That Sounds Good To Me
UKI 11 I Can
UKI 12 Love Will Set You Free
UKI 13 Believe in Me
UKI 14 Molly - Children of the Universe
UKI 15 Still in Love with You
UKI 16 You’re Not Alone

Bulgaria
BUL 05 Lorraine
BUL 07 Water
BUL 12 Love Unlimited
BUL 13 Samo Shampioni
BUL 16 If Love Was a Crime

Denmark
DEN 97 Stemmen i mit liv
DEN 00 Fly on the wings of love
DEN 06 Twist of love
DEN 07 Drama queen
DEN 10 New Tomorrow
DEN 12 Should've Known Better
DEN 13 Only Teardrops

Karaoke Catalog Updated On: 11/01/2019 Sing Online on in English Karaoke Songs

Karaoke catalog Updated on: 11/01/2019 Sing online on www.karafun.com In English Karaoke Songs 'Til Tuesday What Can I Say After I Say I'm Sorry The Old Lamplighter Voices Carry When You're Smiling (The Whole World Smiles With Someday You'll Want Me To Want You (H?D) Planet Earth 1930s Standards That Old Black Magic (Woman Voice) Blackout Heartaches That Old Black Magic (Man Voice) Other Side Cheek to Cheek I Know Why (And So Do You) DUET 10 Years My Romance Aren't You Glad You're You Through The Iris It's Time To Say Aloha (I've Got A Gal In) Kalamazoo 10,000 Maniacs We Gather Together No Love No Nothin' Because The Night Kumbaya Personality 10CC The Last Time I Saw Paris Sunday, Monday Or Always Dreadlock Holiday All The Things You Are This Heart Of Mine I'm Not In Love Smoke Gets In Your Eyes Mister Meadowlark The Things We Do For Love Begin The Beguine 1950s Standards Rubber Bullets I Love A Parade Get Me To The Church On Time Life Is A Minestrone I Love A Parade (short version) Fly Me To The Moon 112 I'm Gonna Sit Right Down And Write Myself A Letter It's Beginning To Look A Lot Like Christmas Cupid Body And Soul Crawdad Song Peaches And Cream Man On The Flying Trapeze Christmas In Killarney 12 Gauge Pennies From Heaven That's Amore Dunkie Butt When My Ship Comes In My Own True Love (Tara's Theme) 12 Stones Yes Sir, That's My Baby Organ Grinder's Swing Far Away About A Quarter To Nine Lullaby Of Birdland Crash Did You Ever See A Dream Walking? Rags To Riches 1800s Standards I Thought About You Something's Gotta Give Home Sweet Home -

Monitor 5. Maí 2011

MONITOR, issue 18, volume 2, Thursday 5 May 2011. Morgunblaðið | mbl.is. Free copy. Music, films, television, theatre, arts, sports, food and everything else.

DEAR PASSENGERS! THIS IS YOUR DJ SPEAKING. PLEASE FASTEN YOUR SEAT BELTS AND PUT ON YOUR HEADPHONES. THE FUN IS IN THE AIR!

Icelandair is proud that Margeir Ingólfsson, DJ Margeir, has joined the company and selects all the music played on board. Special emphasis will be placed on Icelandic music and on the artists performing at the Iceland Airwaves festival. Part of this collaboration is a novelty: the world premiere, in the skies, of the new Gus Gus album, Arabian Horse, which goes on general sale on 23 May; by then Icelandair customers will have had almost two months to enjoy it, before anyone else.

First & foremost: Is Einar Bárðarson responsible for this?

Monitor recommends

FOR THE VERSLUNARMANNAHELGI WEEKEND: Many are eagerly awaiting Þjóðhátíð in the Westman Islands, and many have already booked their passage there. Icelanders now have a good opportunity to secure festival tickets at a good price, because advance ticket sales start on the N1 website on Friday 6 May, and N1 cardholders get the ticket four thousand krónur cheaper.

FOR THE TUMMY: The VOX lunch buffet is one of the very best in the business. A great selection of hot and cold dishes along with delicious desserts and exceptionally good sushi. The buffet costs 3,150 krónur, so one might not go there every day, but …

ICELAND'S HONOUR, ITS SWORD AND... SHIELD!

Dimission in style: Students of FSU recently celebrated the approaching end of school by holding their dimission.

IN RAPID WATER IT IS A SHORT WAY TO THE BOTTOM

Eurovision Karaoke

Eurovision Karaoke

Albania
ALB 07 Hear My Plea
ALB 10 It's All About You
ALB 12 Suus
ALB 13 Identitet
ALB 14 Hersi - One Night's Anger

Armenia
ARM 07 Anytime you need
ARM 08 Qele Qele
ARM 09 Nor Par (Jan Jan)
ARM 10 Apricot Stone
ARM 11 Boom Boom
ARM 13 Lonely Planet
ARM 14 Aram Mp3 - Not Alone

Azerbaijan
AZE 08 Day after day
AZE 09 Always
AZE 14 Start The Fire

Austria
AUS 89 Nur ein Lied
AUS 90 Keine Mauern mehr
AUS 04 Du bist
AUS 07 Get a life - get alive
AUS 11 The Secret Is Love
AUS 12 Woki Mit Deim Popo
AUS 13 Shine
AUS 14 Conchita Wurst - Rise Like a Phoenix

Belgium
BEL 06 Je t'adore
BEL 12 Would You?
BEL 86 J'aime la vie
BEL 87 Soldiers of love
BEL 89 Door de wind
BEL 98 Dis oui

Bosnia and Herzegovina
BOS 99 Putnici
BOS 06 Lejla
BOS 07 Rijeka bez imena
BOS 11 Love In Rewind
BOS 12 Korake Ti Znam

United Kingdom
UKI 83 I'm never giving up
UKI 96 Ooh aah... just a little bit
UKI 04 Hold onto our love
UKI 07 Flying the flag (for you)
UKI 10 That Sounds Good To Me
UKI 11 I Can
UKI 12 Love Will Set You Free
UKI 13 Believe in Me
UKI 14 Molly - Children of the Universe

Bulgaria
BUL 05 Lorraine
BUL 07 Water
BUL 12 Love Unlimited
BUL 13 Samo Shampioni

Denmark
DEN 97 Stemmen i mit liv
DEN 00 Fly on the wings of love
DEN 06 Twist of love
DEN 07 Drama queen
DEN 10 New Tomorrow
DEN 12 Should've Known Better
DEN 13 Only Teardrops

Finland
FIN 13 Marry Me
FIN 84 Hengaillaan

France
FRA 69 Un jour, un enfant
FRA 93 Mama Corsica
FRA 03 Monts et merveilles
FRA 09 Et S'il Fallait Le Faire
FRA 11 Sognu