Explaining Box Office Performance from the Bottom Up: Data, Theories and Models

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

9780367508234 Text.Pdf

Development of the Global Film Industry The global film industry has witnessed significant transformations in the past few years. Regions outside the USA have begun to prosper while non-traditional production companies such as Netflix have assumed a larger market share and online movies adapted from literature have continued to gain in popularity. How have these trends shaped the global film industry? This book answers this question by analyzing an increasingly globalized business through a global lens. Development of the Global Film Industry examines the recent history and current state of the business in all parts of the world. While many existing studies focus on the internal workings of the industry, such as production, distribution and screening, this study takes a “big picture” view, encompassing the transnational integration of the cultural and entertainment industry as a whole, and pays more attention to the coordinated development of the film industry in the light of influence from literature, television, animation, games and other sectors. This volume is a critical reference for students, scholars and the public to help them understand the major trends facing the global film industry in today’s world. Qiao Li is Associate Professor at Taylor’s University, Selangor, Malaysia, and Visiting Professor at the Université Paris 1 Panthéon-Sorbonne. He has a PhD in Film Studies from the University of Gloucestershire, UK, with expertise in Chinese-language cinema. He is a PhD supervisor, a film festival jury member, and an enthusiast of digital filmmaking with award-winning short films. He is the editor of Migration and Memory: Arts and Cinemas of the Chinese Diaspora (Maison des Sciences et de l’Homme du Pacifique, 2019). -

June 1st, 2010 Mr. Gary Gensler Chairman Commodity Futures

30 Alice Lane Smithtown, NY 11787 June 1st, 2010 Mr. Gary Gensler Chairman Commodity Futures Trading Commission Three Lafayette Centre 1155 21st Street, NW Washington, DC 20581 Dear Chairman Gensler, My name is Bill Bonfanti and I own and operate a movie-based website, FilmGo.net, and I fully support the trading of box office futures. Let me give you a little history as to who I am and what I do. I was a stockbroker on the floor of the New York Stock Exchange for 13 years and as such I understand the volatility associated with trading stocks and other financial instruments. I currently serve as box office analyst and film reviewer for the site. One of the main arguments presented by the MPAA in opposition to trading box office receipts is that box office futures would begin to negatively impact the actual box office receipts of their underlying films due to possible negative buzz associated with the price of a contract. This is a complete falsehood and in fact the opposite is true. If anything, a box office futures exchange will increase interest and public appetite for films. If you look at any newsstand, you’ll see that most of the magazines on the rack cater to our obsession with movies, TV and celebrities. There are numerous websites and television shows that also cater to this obsession. All of these spread both negative and positive buzz about films from the second a project is announced to the time it is actually playing in theatres nationwide. Critics review films every week and even their esteemed opinions don’t have any overall effect on the box office. -

How the CFTC Is Using Virtual Currencies to Expand Its Jurisdiction

Arkansas Law Review Volume 73 Number 2 Article 1 August 2020 New Things Under the Sun: How the CFTC is Using Virtual Currencies to Expand Its Jurisdiction James Michael Blakemore University of Michigan Law School Follow this and additional works at: https://scholarworks.uark.edu/alr Part of the Banking and Finance Law Commons, Law and Economics Commons, Secured Transactions Commons, and the Securities Law Commons Recommended Citation James M. Blakemore, New Things Under the Sun: How the CFTC is Using Virtual Currencies to Expand Its Jurisdiction, 73 Ark. L. Rev. 205 (2020). Available at: https://scholarworks.uark.edu/alr/vol73/iss2/1 This Article is brought to you for free and open access by ScholarWorks@UARK. It has been accepted for inclusion in Arkansas Law Review by an authorized editor of ScholarWorks@UARK. For more information, please contact [email protected]. NEW THINGS UNDER THE SUN: HOW THE CFTC IS USING VIRTUAL CURRENCIES TO EXPAND ITS JURISDICTION James Michael Blakemore* INTRODUCTION A decade has passed since Bitcoin solved a fundamental problem plaguing virtual currencies:1 How to ensure, without resort to financial intermediaries or other trusted central authorities, that a unit of digital currency can be spent only once.2 In that * Partner at Ketsal PLLC. Adjunct Professor, University of Michigan Law School. For thoughtful comments and conversations, I would like to thank Connie Chang, Joshua Garcia, Zachary Fallon, Diego Zambrano, Pandora Chang, participants in the Arkansas Law Review Symposium on the Evolving Regulation of Crypto, and the editors of the Arkansas Law Review. The views expressed here are my own and do not necessarily reflect the views of Ketsal. -

MICHAEL BONVILLAIN, ASC Director of Photography

MICHAEL BONVILLAIN, ASC Director of Photography official website FEATURES (partial list) OUTSIDE THE WIRE Netflix Dir: Mikael Håfström AMERICAN ULTRA Lionsgate Dir: Nima Nourizadeh Trailer MARVEL ONE-SHOT: ALL HAIL THE KING Marvel Entertainment Dir: Drew Pearce ONE NIGHT SURPRISE Cathay Audiovisual Global Dir: Eva Jin HANSEL & GRETEL: WITCH HUNTERS Paramount Pictures Dir: Tommy Wirkola Trailer WANDERLUST Universal Pictures Dir: David Wain ZOMBIELAND Columbia Pictures Dir: Ruben Fleischer Trailer CLOVERFIELD Paramount Pictures Dir: Matt Reeves A TEXAS FUNERAL New City Releasing Dir: W. Blake Herron THE LAST MARSHAL Filmtown Entertainment Dir: Mike Kirton FROM DUSK TILL DAWN 3 Dimension Films Dir: P.J. Pesce AMONGST FRIENDS Fine Line Features Dir: Rob Weiss TELEVISION (partial list) PEACEMAKER (Season 1) HBO Max DIR: James Gunn WAYS & MEANS (Season 1) CBS EP: Mike Murphy, Ed Redlich HAP AND LEONARD (Season 3) Sundance TV, Netflix EP: Jim Mickle, Nick Damici, Jeremy Platt Trailer WESTWORLD (Utah; Season 1, 4 Episodes.) Bad Robot, HBO EP: Lisa Joy, Jonathan Nolan CHANCE (Pilot) Fox 21, Hulu EP: Michael London, Kem Nunn, Brian Grazer Trailer THE SHANNARA CHRONICLES MTV EP: Al Gough, Miles Millar, Jon Favreau (Pilot & Episode 102) FROM DUSK TIL DAWN (Season 1) Entertainment One EP: Juan Carlos Coto, Robert Rodriguez COMPANY TOWN (Pilot) CBS EP: Taylor Hackford, Bill Haber, Sera Gamble DIR: Taylor Hackford REVOLUTION (Pilot) NBC EP: Jon Favreau, Eric Kripke, Bryan Burk, J.J. Abrams DIR: Jon Favreau UNDERCOVERS (Pilot) NBC EP: J.J. Abrams, Bryan Burk, Josh Reims DIR: J.J. Abrams OUTLAW (Pilot) NBC EP: Richard Schwartz, Amanda Green, Lukas Reiter DIR: Terry George *FRINGE (Pilot) Fox Dir: J.J. -

How the Motion Picture Industry Miscalculates Box Office Receipts

How the motion picture industry miscalculates box office receipts S. Eric Anderson, Loma Linda University Stewart Albertson, Loma Linda University David Shavlik, Loma Linda University INTRODUCTION Box office grosses, once of interest only to movie industry executives, are now widely publicized and immediately reported by movie industry tracking companies. The instantaneous tracking and reporting hurts movies with weak openings, but helps movies with big openings become even bigger as people flock to see what all the fuss is about. Due to inflation, the highest grossing movies tend to be the more recent releases, which the motion picture industry is taking full advantage of when promoting new movies. As a result, the motion picture industry has developed “highest grossing” movie lists from almost every angle imaginable - opening day, opening weekend, opening day non-weekend, opening day during the fall, winter and spring, opening day Memorial weekend, second weekend of release, fewest screens, etc. ... when movie grosses are adjusted for inflation, the Sound of Music was a more popular movie than Titanic even though the box office gross was over $400 million less. So why is it then that box office grosses are often the only numbers reported, when the numbers have little meaning? The motion picture industry, aware that inflation helps movies grow bigger, has little interest in reporting highest grossing box office numbers with inflation-adjusted dollars that will show the motion picture industry is stagnant at best. They are able to get away with it since most don’t know how to handle those inflation-adjusting calculations. Inflation-adjusted gross calculations are inaccurate Some tracking companies have begun reporting box office grosses with the less -

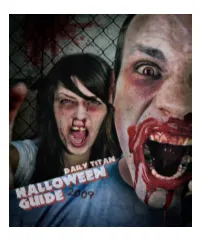

2009-10-05-Halloween Guide.Pdf

The Daily Titan, Halloween Guide 2009. Movie Reviews by Jonathan Montgomery. Zombieland: Remember mad cow disease? What if it had actually escalated into a global epidemic, spreading infected zombies into your surrounding cities? (Yeah, zombies! Not those wimpy vampires that have been running around lately.) Well, apparently, deceitful, young attractive girls, a Michael Cera clone, Jesse Eisenberg, a Twinkie-obsessed Woody Harrelson and Bill Murray would be among the survivors. ... the movie really focuses on the character development of lone survivors. It is everyone’s selfishness and adherence to self-interest that kept them alive thus far. But as their time spent together lengthens, an imminent family bond grows between them. With that said, this isn’t just a sappy story. It is still a zombie movie, and it follows most of the zombie film rules. Similar to “Shaun of the Dead,” the movie is hilari... Jennifer’s Body: Typically, “scary” is the last word that comes to my mind when I think of Megan Fox (“Transformers,” “Transformers: Revenge of the Fallen”). However, “Jennifer’s Body” really presents her in a way I’m not accustomed to: a blood-sucking, flesh-eating, boy-killing kind of gal. Directed by Karyn Kusama (“Aeon Flux”) and written by Diablo Cody ... Jennifer is openly, well, open); she turns into some sort of boy-hating vampire, and her quest for blood begins. Between the gruesome killings and brief moments of comedic relief, the movie itself never really commits to either genre. That is not to say the movie doesn’t shine in either respects. Diablo Cody delivers some expected clever one-liners and memorable quirky -

County Eyes $900,000 Transfer for Workhouse

TUESDAY 162nd YEAR • No. 75 JULY 26, 2016 CLEVELAND, TN 16 PAGES • 50¢ County eyes $900,000 transfer for workhouse. By BRIAN GRAVES, Banner Staff Writer. The Bradley County Commission Finance Committee voted Monday to recommend transferring $900,000 from reserve funds to finance construction of the new workhouse facility through the first of the year. The action is now on the County Commission voting agenda for Aug. 1, after Finance Chairman Milan Blake noted the amount was “too great for the consent agenda.” “This is where we had a reserve fund that has accumulated quite a bit over the years,” said County Mayor D. Gary Davis. “Our plan from the beginning was to use this for the first part of payment for the workhouse.” Davis said by statute, the money in the reserve was collected for use of law enforcement items. Blake asked Davis how long the funds would last, “because once we use these up, we’ll have to issue the bonds.” The mayor said the plan is to bond out the workhouse balance, roughly $2 million, at the same time bonds are let on a $3 million commitment for Spring Branch Industrial Park infrastructure. “If we get past Wednesday’s court date, it’s a simple process with the state trustee and we might just do the $3 million for the industrial park,” Davis said. “We probably won’t need this money until the end of the calendar year.” The court date Davis spoke of is one scheduled for Wednesday morning when Chancellor Jerri S. Bryant will hear from County Attorney Crystal Freiberg, who under advisement from officials, will formally present the plan to borrow funds from the proceeds of the sale of Bradley Memorial Hospital. State statutes allowed that process (See WORKHOUSE, Page 16) Craft beer restaurant gets first Council approval. Second reading set in 2 weeks. By JOYANNA LOVE, Banner Senior Staff Writer. Inside Today: Back in pads. The Cleveland Blue Raiders put the pads on for the first time Monday in preparation for the upcoming football season. -

Why Hollywood Isn't As Liberal As We Think and Why It Matters

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2019 Why Hollywood Isn't As Liberal As We Think and Why It Matters Amanda Daily Claremont McKenna College Recommended Citation Daily, Amanda, "Why Hollywood Isn't As Liberal As We Think and Why It Matters" (2019). CMC Senior Theses. 2230. https://scholarship.claremont.edu/cmc_theses/2230 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. Claremont McKenna College Why Hollywood Isn’t As Liberal As We Think And Why It Matters Submitted to Professor Jon Shields by Amanda Daily for Senior Thesis Fall 2018 and Spring 2019 April 29, 2019 Abstract Hollywood has long had a reputation as a liberal institution. Especially in 2019, it is viewed as a highly polarized sector of society sometimes hostile to those on the right side of the aisle. But just because the majority of those who work in Hollywood are liberal, that doesn’t necessarily mean our entertainment follows suit. I argue in my thesis that entertainment in Hollywood is far less partisan than people think it is and moreover, that our entertainment represents plenty of conservative themes and ideas. In doing so, I look at a combination of markets and artistic demands that restrain the politics of those in the entertainment industry and even create space for more conservative productions. Although normally art and markets are thought to be in tension with one another, in this case, they conspire to make our entertainment less one-sided politically. -

The Determinants of Box Office Revenue: a Case Based Study: Thirty, Low Budget, Highest ROI Films Vs. Thirty, Big Budget, Highes

The determinants of box office revenue: a case based study: thirty, low budget, highest ROI films vs. thirty, big budget, highest grossing Hollywood films Yasemin Bozdogan To cite this version: Yasemin Bozdogan. The determinants of box office revenue: a case based study: thirty, low budget, highest ROI films vs. thirty, big budget, highest grossing Hollywood films. Economics and Finance. 2013. dumas-00909948 HAL Id: dumas-00909948 https://dumas.ccsd.cnrs.fr/dumas-00909948 Submitted on 27 Nov 2013 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Université Paris1 UFR 02 Sciences Economiques Master 2 Recherche M2R Economie Appliquee THE DETERMINANTS OF BOX OFFICE REVENUE: A CASE BASED STUDY Thirty, Low Budget, highest ROI Films Vs. Thirty, Big Budget, Highest Grossing Hollywood Films Supervised by: Professor Xavier GREFF Presented and defended by: Yasemin BOZDOGAN Date of defense: 10 June 2013 Table of Contents I. Abstract II. Introduction and Motivation III. Literature review and Hypothesis Development IV. Case Specific Study IV.I ROI Sample IV.II Hollywood Sample V. Methodology, The model, Descriptive Statistics VI. Regression and Results VII. Discussions VIII. Conclusion Remarks IX. Appendix X. -

A Critical Look at the Film Futures

BACK TO THE FUTURE[S]: A CRITICAL LOOK AT THE FILM FUTURES BAN
INTRODUCTION
I. THE HISTORY OF FILM FUTURES: FROM INCEPTION TO ARMAGEDDON
II. BACKGROUND AND DEFINITIONS
A. A Brief History of Futures Trading
B. Commodity Futures Trading, Generally
C. Film Futures, Specifically
D. Who Would Invest in Film Futures?
III. IRON [B]AN – WHY CONGRESS BANNED FILM FUTURES TRADING
A. “Motion Picture Box Office Receipts are not a ‘Commodity’”
B. “Speculators will ‘Game’ the Futures Exchange”
C. “Film Futures Trading is Just Legalized Gambling”
D. “Film Futures Resemble the Risky Financial Instruments that Caused the Financial Crisis”
IV. TO KILL A MOCKINGBIRD – WHY FILM FUTURES TRADING WOULD HELP, NOT HARM, THE MOTION PICTURE INDUSTRY
A. The CFTC’s Authority and the Significance of its Authorization of Film Futures Trading
1. The CFTC’s Authority
2. The Failure of Congress’s Ban on the Trading of Onion -

MADE in HOLLYWOOD, CENSORED by BEIJING the U.S

MADE IN HOLLYWOOD, CENSORED BY BEIJING The U.S. Film Industry and Chinese Government Influence TABLE OF CONTENTS: EXECUTIVE SUMMARY; INTRODUCTION; REPORT METHODOLOGY; PART I: HOW (AND WHY) BEIJING IS ABLE TO INFLUENCE HOLLYWOOD; PART II: THE WAY THIS INFLUENCE PLAYS OUT; PART III: ENTERING THE CHINESE MARKET; PART IV: LOOKING TOWARD SOLUTIONS; RECOMMENDATIONS; ACKNOWLEDGEMENTS; ENDNOTES. EXECUTIVE SUMMARY Made in Hollywood, Censored by Beijing describes the ways in which the Chinese government and its ruling Chinese Communist Party successfully influence Hollywood films, warns how this type of influence has increasingly become normalized in Hollywood, and explains the implications of this influence on freedom of expression and on the types of stories that global audiences are exposed to on the big screen. Hollywood is one of the world’s most significant storytelling centers, a cinematic powerhouse whose movies are watched by millions across the globe. ... system is inconsistent with international norms of artistic freedom. There are countless stories to be told about China, and those that are non-controversial from Beijing’s perspective are no less valid. But there are also stories to be told about the ongoing crimes against humanity in Xinjiang, the ongoing struggle of Tibetans to maintain their language and culture in the face of both societal changes and government policy, the prodemocracy movement in Hong Kong, and honest, everyday stories about how government policies -

Alice in Zombieland SUMMARY

Alice in Zombieland Gena Showalter Harlequin Enterprises Limited, 2012 404 pages SUMMARY: After a car crash kills her parents and younger sister, sixteen-year-old Alice Bell learns that her dad was right. The monsters are real. Now, Ali must learn to fight the monsters in order to stay alive. IF YOU LIKED THIS BOOK TRY… Wake, Lisa McMann; Through the Zombie Glass, Gena Showalter; Infinity, Sherrilyn Kenyon; School Spirits, Rachel Hawkins WEBSITES: White Rabbit Chronicles, http://www.wrchronicles.com Official website of Gena Showalter, http://genashowalter.com BOOKTALK: Disclaimer: If (because of the book’s title) you are expecting a re-telling of Lewis Carroll’s Alice in Wonderland, you will be disappointed. However, don’t let that stop you from picking up this book. It’s a great read with a different twist on the whole zombie concept. Yes, there are zombies in the book, but they’re not your typical zombies. Instead of being undead humans that crave human flesh, Showalter’s zombies are really infected spirits that eat the spirits of humans, which then causes the humans to become infected. Not everyone can see the zombies. Sixteen-year-old Alice Bell doesn’t see them until after a car crash that kills her parents and younger sister. Alice moves in with her grandparents and attends a different high school. At her new school, Ali hooks up with a group of kids who turn out to be zombie slayers. Cole, the leader of the group, just happens to be the most gorgeous “bad boy” that Ali has ever seen.