Automated Classification of Piano–Guitar Notes

Recommended publications

Joshua Bynum, Trombone Anatoly Sheludyakov, Piano Joshua Bynum

Presents a Faculty Recital Joshua Bynum, trombone Anatoly Sheludyakov, piano October 16, 2019 6:00 pm, Edge Recital Hall Radiant Spheres David Biedenbender (b. 1984) I. Fluttering, Fast, Precise II. for me, time moves both more slowly and more quickly III. Radiant Spheres Someone to Watch Over Me George Gershwin (1898-1937) Arr. Joseph Turrin Simple Song Leonard Bernstein (1918-1990) Zion’s Walls Aaron Copland (1900-1990) -intermission- I Was Like Wow! JacobTV (b. 1951) Red Sky Anthony Barfield (b. 1981) **Out of respect for the performer, please silence all electronic devices throughout the performance. Thank you for your cooperation. ** For information on upcoming concerts, please see our website: music.uga.edu. Join our mailing list to receive information on all concerts and recitals, music.uga.edu/enewsletter Dr. Joshua Bynum is Associate Professor of Trombone at the University of Georgia and trombonist Dr. -

Recommended Solos and Ensembles Tenor Trombone Solos Sång Till

Recommended Solos and Ensembles Tenor Trombone Solos Sång till Lotta, Jan Sandström. Edition Tarrodi: Stockholm, Sweden, 1991. Trombone and piano. Requires modest range (F – g flat1), well-developed lyricism, and musicianship. There are two versions of this piece, this and another that is scored a minor third higher. Written dynamics are minimal. Although phrases and slurs are not indicated, it is a SONG…encourage legato tonguing! Stephan Schulz, bass trombonist of the Berlin Philharmonic, gives a great performance of this work on YouTube - http://www.youtube.com/watch?v=Mn8569oTBg8. A Winter’s Night, Kevin McKee, 2011. Available from the composer, www.kevinmckeemusic.com. Trombone and piano. Explores the relative minor of three keys, easy rhythms, keys, range (A – g1, ossia to b flat1). There is a fine recording of this work on his web site. Trombone Sonata, Gordon Jacob. Emerson Edition: Yorkshire, England, 1979. Trombone and piano. There are no real difficult rhythms or technical considerations in this work, which lasts about 7 minutes. There is tenor clef used throughout the second movement, and it switches between bass and tenor in the last movement. Range is F – b flat1. Recorded by Dr. Ron Babcock on his CD Trombone Treasures, and available at Hickey’s Music, www.hickeys.com. Divertimento, Edward Gregson. Chappell Music: London, 1968. Trombone and piano. Three movements, range is modest (G-g#1, ossia a1), bass clef throughout. Some mixed meter. Requires a mute, glissandi, and ad. lib. flutter tonguing. Recorded by Brett Baker on his CD The World of Trombone, volume 1, and can be purchased at http://www.brettbaker.co.uk/downloads/product=download-world-of-the-trombone-volume-1-brett-baker. -

Working List of Repertoire for Tenor Trombone Solo and Bass Trombone Solo by People of Color/People of the Global Majority (POC/PGM) and Women Composers

Working List of Repertoire for tenor trombone solo and bass trombone solo by People of Color/People of the Global Majority (POC/PGM) and Women Composers Working list v.2.4 ~ May 30, 2021 Prepared by Douglas Yeo, Guest Lecturer of Trombone Wheaton College Conservatory of Music Armerding Center for Music and the Arts www.wheaton.edu/conservatory 520 E. Kenilworth Avenue Wheaton, IL 60187 www.wheaton.edu/academics/faculty/douglas-yeo Works by People of Color/People of the Global Majority (POC/PGM) Composers: Tenor trombone • Amis, Kenneth Preludes 1–5 (with piano) www.kennethamis.com • Barfield, Anthony Meditations of Sound and Light (with piano) Red Sky (with piano/band) Soliloquy (with trombone quartet) www.anthonybarfield.com • Baker, David Concert Piece (with string orchestra) - Lauren Keiser Publishing • Chavez, Carlos Concerto (with orchestra) - G. Schirmer • Coleridge-Taylor, Samuel (arr. Ralph Sauer) Gypsy Song & Dance (with piano) www.cherryclassics.com • DaCosta, Noel Four Preludes (with piano) Street Calls (unaccompanied) • Davis, Nathaniel Cleophas (arr. Aaron Hettinga) Oh Slip It Man (with piano) Mr. Trombonology (with piano) Miss Trombonism (with piano) Master Trombone (with piano) Trombone Francais (with piano) www.cherryclassics.com • John Duncan Concerto (with orchestra) Divertimento (with string quartet) Three Proclamations (with string quartet) library.umkc.edu/archival-collections/duncan • Hailstork, Adolphus Cunningham John Henry’s Big (Man vs. Machine) (with piano) - Theodore Presser • Hong, Sungji Feromenis pnois (unaccompanied) • Lam, Bun-Ching Three Easy Pieces (with electronics) • Lastres, Doris Magaly Ruiz Cuasi Danzón (with piano) Tres Piezas (with piano) • Ma, Youdao Fantasia on a Theme of Yada Meyrien (with piano/orchestra) - Jinan University Press (ISBN 9787566829207) • McCeary, Richard Deming Jr. -

Portable Digital Musical Instruments 2009 — 2010

PORTABLE DIGITAL MUSICAL INSTRUMENTS 2009–2010 music.yamaha.com/homekeyboard For details please contact: This document is printed with soy ink. Printed in Japan How far do you want to go with your music? (Pages 4-7) The sky's the limit. Our Digital Workstations are jam-packed with advanced features, exceptionally realistic sounds and expressive performance functions that give you the power to create, arrange and perform in any style or situation. What kind of music do you want to play? (Pages 8-11) If the piano is your thing, Yamaha has a range of compact piano-oriented instruments that have amazingly realistic sound and wonderfully expressive playability–just like having a real piano in your house, with a fraction of the space. What are your creative inclinations? (Pages 12-13) We've got Digital Keyboards of all types to help players of every stripe achieve their full potential. Whether you're just starting out or are an experienced expert, our instrument lineup provides just what you need to get your creative juices flowing. Got rhythm? (Page 14) If drums and percussion are your strong forte, our Digital Percussion unit gives you exceptionally dynamic and realistic sounds, letting you pound out your own beats–in live performance, in rehearsal or in recording. Recommended models, as listed across the four sections: Tyros3, PSR-OR700, NP-30, PSR-E323, EZ-200, DD-65, PSR-S910, PSR-S550B, YPG series, PSR-E223, PSR-S710, PSR-E413. Yamaha’s Premier Music Workstation – Unsurpassed Quality, Features and Performance Ultimate Realism Limitless Creative Potential Interactive -

The Lion's Roar

The Lion’s Roar A Thorne Student Publication Winter 2019 Still Free Elf Day Pg 3, Mania Album Review, Pg 3, Winter Concert Pg 4, The Arts Op Ed, Pg 4, Art Pg 5, Poetry Pg 6, Investigative Spotlight-Social Media Pg 8 Chinchillas Kara Gallagher As you may know, the 7th-grade science classes have adorable, soft chinchillas, but do you really know anything about those fuzzy, eye-watering creatures? Well, if you don’t, you should definitely read this article. I will write about chinchilla fun facts, and caring for a pet chinchilla. Thorne Giving Back By: Kristen Gallagher We all know the holidays are a great time of year. Opening our presents on Christmas morning with beaming smiles is always a joy, but what about those who aren’t as fortunate as us? I am a member of Thorne’s peer leaders, and we were invited to help out with a holiday toy drive that benefits Middletown residents. When I heard of this volunteer opportunity, I was interested right away. Approximately twenty other Thorne students participated. Fortnite Allegedly Dying, Streamer Protests Notion By Liam Perez Since its initial release on September 26, 2017, the immensely popular online game known as Fortnite: Battle Royale has captured the hearts, minds, and wallets of over 200 million players worldwide (pcgamesn.com, 2018). However, speculation that Fortnite: Battle Royale’s time in the public eye is drawing to a close, or that it is ‘dead.’ This leads to the questions of “is this true?” and “if it is, what’s next?”, -

FRENCH CONNECTIONS with Avery Gagliano, Piano

FRENCH CONNECTIONS with Avery Gagliano, piano February 13, 2021 | 7:30 PM Welcome to OMP's Virtual Concert Hall! Bonjour et bienvenue! Thank you to our loyal donors and season subscribers for your continued support, and a warm welcome to those who are joining us in our "Virtual Concert Hall" for the first time. Your contributions have made it possible for OMP to present our third virtual chamber orchestra performance in HD audio and video! We hope you enjoy this programme musical français featuring First Prize and Best Concerto Prize winner of the 2020 10th National Chopin Piano Competition Avery Gagliano! THANK YOU TO THESE GENEROUS GRANTING ORGANIZATIONS: Get the Premium Experience! Level A Subscription Seat Access to Virtual Premium Special Concerts and additional OMP content or concerts. Receive "Kelly's Recommendation" in headphones: Bose® SoundLink around-ear headphones II with contactless shipping Select a personally autographed CD or DVD of "A Virtual Recital", featuring 2015 Young Soloist Competition Winner John Fawcett, violin and Kelly Kuo, piano. Complimentary, contactless wine delivery upon virtual reception advanced reservation Current 2020-21 Subscribers can use the amount of their tickets as a credit toward their purchase of an OMP Premium Experience Package! Give the gift of music with OMP and Bose at oregonmozartplayers.org/tickets Orchestra Kelly Kuo, Artistic Director & Conductor VIOLIN Jenny Estrin, acting concertmaster Yvonne Hsueh, principal 2nd violin Stephen Chong Della Davies Sponsored by Nancy & Brian Davies Julia Frantz Sponsored by James & Paula Salerno Nathan Lowman Claudia Miller Sponsored by Jeffrey Morey & Gail Harris Sophie Therrell Sponsored by W. Mark & Anne Dean Alwyn Wright* VIOLA Arnaud Ghillebaert principal viola Lauren Elledge Kimberly Uwate* CELLO Dale Bradley acting principal cello Sponsored by Larissa Ennis & Lindsay Braun Eric Alterman Noah Seitz BASS Nicholas Burton, principal bass HARPSICHORD/PIANO Thank you to our additional musician sponsors: John Jantzi, Theodore W. -

A Brief History of Piano Action Mechanisms*

Advances in Historical Studies, 2020, 9, 312-329 https://www.scirp.org/journal/ahs ISSN Online: 2327-0446 ISSN Print: 2327-0438 A Brief History of Piano Action Mechanisms* Matteo Russo, Jose A. Robles-Linares Faculty of Engineering, University of Nottingham, Nottingham, UK How to cite this paper: Russo, M., & Robles-Linares, J. A. (2020). A Brief History of Piano Action Mechanisms. Advances in Historical Studies, 9, 312-329. https://doi.org/10.4236/ahs.2020.95024 Received: October 30, 2020 Accepted: December 5, 2020 Published: December 8, 2020 Copyright © 2020 by author(s) and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY 4.0). http://creativecommons.org/licenses/by/4.0/ Abstract The action mechanism of keyboard musical instruments with strings, such as pianos, transforms the motion of a depressed key into hammer swing or jack lift, which generates sound by striking the string of the instrument. The mechanical design of the key action influences many characteristics of the musical instrument, such as keyboard responsiveness, heaviness, or lightness, which are critical playability parameters that can “make or break” an instrument for a pianist. Furthermore, the color of the sound, as well as its volume, given by the shape and amplitude of the sound wave respectively, are both influenced by the key action. The importance of these mechanisms is highlighted by centuries of studies and efforts to improve them, from the simple rigid lever mechanism of 14th-century clavichords to the modern key action that can be found in concert grand pianos, with dozens of bodies and compliant elements. -

The Broadway Musical

HESSTON COLLEGE THEATRE PRESENTS The Broadway Musical Director - Laura Kraybill Music Director - Matt Schloneger Book - Allan Knee Lyrics - Mindi Dickstein Music - Jason Howland Based on the novel by Louisa May Alcott Little Women The Broadway Musical Book by Allan Knee Music by Jason Howland Lyrics by Mindi Dickstein Based on the novel by Louisa May Alcott LITTLE WOMEN is presented through special arrangement with Music Theatre International (MTI). All authorized performance materials are also supplied by MTI. 421 West 54th Street, New York, NY 10019 Phone: 212-541-4684 Fax: 212-397-4684 www.MTIShows.com The videotaping or other video or audio recording of this production is strictly prohibited. CAST Jo March: Taylor Zehr; Professor Bhaer: Galed Krisjayanta; Amy March: Emma Roth; Meg March: Meredith Spicher; Beth March: Eleya Raim; Marmee March: Anna Martin; Mr. Laurence: Micah Raber; Laurie Laurence: Nathan Patron; Aunt March: Abbie -

Piano Proficiency

PIANO PROFICIENCY All music majors, except those with a keyboard major, must pass a piano proficiency examination demonstrating functional use of the piano. Students with a primary applied area in keyboard will not take the piano proficiency but will cover functional piano material in their course of primary applied study. Before being recommended for student teaching or attempting the senior recital hearing, every non-keyboard major must pass the piano proficiency. This examination should be completed by the end of the fourth semester of enrollment in secondary piano class (MU262). It must be attempted each semester thereafter until passed. Enrollment in secondary piano class is necessary each semester until the piano proficiency requirement is completed. Students who pass the piano proficiency exam before completing four hours of secondary class piano may take the remaining credit hours in either piano or organ. Students enrolled in secondary piano classes may pass portions of the piano proficiency material. In order to attempt a portion of the proficiency material in jury before the final semester, the student must attempt at least three (3) sections of the exam. The student will only be allowed to attempt a portion of the proficiency exam with the permission of the class piano instructor. Students who complete the prepared literature portion of the exam will be expected to continue preparing literature pieces in subsequent secondary piano classes. The MU262 Final Exam is the Piano Proficiency. It is a jury exam and is held during final exam week. It must be passed completely in this fourth semester in order to receive a passing grade for the semester. -

An Interpretive Analysis of George Antheil's Sonata for Trumpet And

AN INTERPRETIVE ANALYSIS OF GEORGE ANTHEIL’S SONATA FOR TRUMPET AND PIANO Mark Fenderson, B.A., M.M. Dissertation Prepared for the Degree of DOCTOR OF MUSICAL ARTS UNIVERSITY OF NORTH TEXAS August 2008 APPROVED: Keith Johnson, Major Professor Mike Steinel, Minor Professor John Holt, Committee Member Terri Sundberg, Chair of the Division of Instrumental Studies Graham Phipps, Director of Graduate Studies in the College of Music James Scott, Dean of the College of Music Sandra L. Terrell, Dean of the Robert B. Toulouse School of Graduate Studies Fenderson, Mark. An Interpretive Analysis of George Antheil’s Sonata for Trumpet and Piano. Doctor of Musical Arts (Performance), August 2008, 44 pp., references, 19 titles. American composer George Antheil’s Sonata for Trumpet and Piano was written in 1951. This dissertation provides historical and theoretical information that gives insight into the interpretation of this sonata. Reasons why the piece deserves greater attention with respect to the standard twentieth century trumpet literature are also given. Antheil’s music was influential in the development of classical music in the first half of the 20th century and, more specifically, contributed to the establishment of an American style of classical music. Composed near the end of his life, this sonata has its roots in this heritage. The understanding of Antheil’s history, motivations, and compositional techniques is intended to help bring a performance of this sonata to its full potential. Copyright 2008 by Mark Fenderson TABLE OF CONTENTS -

The Percussion Family

The Percussion Family The percussion family is the largest family in the orchestra. Percussion instruments include any instrument that makes a sound when it is hit, shaken, or scraped. It's not easy to be a percussionist because it takes a lot of practice to hit an instrument with the right amount of strength, in the right place and at the right time. Some percussion instruments are tuned and can sound different notes, like the xylophone, timpani or piano, and some are untuned with no definite pitch, like the bass drum, cymbals or castanets. Percussion instruments keep the rhythm, make special sounds and add excitement and color. Unlike most of the other players in the orchestra, a percussionist will usually play many different instruments in one piece of music. The most common percussion instruments in the orchestra include the timpani, xylophone, cymbals, triangle, snare drum, bass drum, tambourine, maracas, gongs, chimes, celesta, and piano. The piano is a percussion instrument. You play it by hitting its 88 black and white keys with your fingers, which suggests it belongs in the percussion family. The piano has the largest range of any instrument in the orchestra. It is a tuned instrument, and you can play many notes at once using both your hands. Within the orchestra the piano usually supports the harmony, but it has another role as a solo instrument (an instrument that plays by itself), playing both melody and harmony. Timpani look like big polished bowls or upside-down teakettles, which is why they're also called kettledrums. They are big copper pots with drumheads made of calfskin or plastic stretched over their tops. -

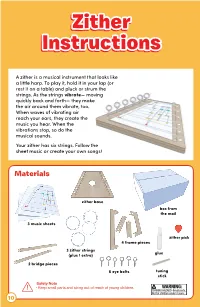

Zither Instructions

Zither Instructions A zither is a musical instrument that looks like a little harp. To play it, hold it in your lap (or rest it on a table) and pluck or strum the strings. As the strings vibrate, moving quickly back and forth, they make the air around them vibrate, too. When waves of vibrating air reach your ears, they create the music you hear. When the vibrations stop, so do the musical sounds. Your zither has six strings. Follow the sheet music or create your own songs! Build the Zither Connect the four frame pieces. Clear away any excess debris from the holes. Squeeze glue along the outside edges of the frame, avoiding the holes. Carefully place the zither base on top of the frame. If glue gets into any of the holes, wipe it away with a toothpick. Place a heavy book on the frame and let it dry for 30 minutes. Squeeze glue into the slots near the edges of the zither base. Place the bridge pieces into the slots and let them dry for 10 minutes. Insert eye bolts into the two bottom-left holes. Twist the bolts clockwise until they’re halfway into the bottom piece of the frame. Starting from the back, thread the ends of one string through the holes on the opposite sides of the bolts. Pull the ends until the string lengths are even. Materials: zither base, box from the mail, 3 music sheets, zither pick, 4 frame pieces, 3 zither strings (plus 1 extra), glue, 2 bridge pieces, 6 eye bolts, tuning stick. Safety Note WARNING: • Keep small parts and string out of reach of young children.