Conditional Level Generation and Game Blending

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Multi-Domain Level Generation and Blending with Sketches Via Example

MULTI-DOMAIN LEVEL GENERATION AND BLENDING WITH SKETCHES VIA EXAMPLE-DRIVEN BSP AND VARIATIONAL AUTOENCODERS Sam Snodgrass Anurag Sarkar modl.ai Northeastern University Copenhagen, Denmark Boston, MA, USA [email protected] [email protected] June 18, 2020 ABSTRACT Procedural content generation via machine learning (PCGML) has demonstrated its usefulness as a content and game creation approach, and has been shown to be able to support human creativity. An important facet of creativity is combinational creativity or the recombination, adaptation, and reuse of ideas and concepts between and across domains. In this paper, we present a PCGML approach for level generation that is able to recombine, adapt, and reuse structural patterns from several domains to approximate unseen domains. We extend prior work involving example-driven Binary Space Partitioning for recombining and reusing patterns in multiple domains, and incorporate Variational Autoencoders (VAEs) for generating unseen structures. We evaluate our approach by blending across 7 domains and subsets of those domains. We show that our approach is able to blend domains together while retaining structural components. Additionally, by using different groups of training domains our approach is able to generate both 1) levels that reproduce and capture features of a target domain, and 2) levels that have vastly different properties from the input domain. Keywords procedural content generation, level blending, ize across several domains. These methods either try to level generation, binary space partitioning, variational supplement a new domain’s training data with examples autoencoder, PCGML from other domains [25], build multiple models and blend them together [7, 20], or directly build a model trained on multiple domains [21]. -

Das Nintendo-Schummelbuch

AÄ«i8®Ä' INHALTSVERZEICHNIS VORWORT 1 TAUSEND DANK 2 DER AUTOR 2 CAME BOY CHEATS 4 ADDAMS FAMILY 4 DEAD HEAT SCRAMB 14 ADVENTURE ISLAND 4 DR. FRANKEN 15 ADVENTURES OF LOLO 4 DR. FRANKEN II 15 AFTERBURST 4 DYNABLASTER 15 ALLEYWAY 4 ELEVATOR ACTION 15 BART VS THE JUGGERNAUTS 5 F-15 STRIKE EAGLE 15 BATMAN 5 FACEBALL 2000 |6 BATTLE BULL 5 FERRARI GRAND PRIX CHALLENGE |6 BATTLE PING PONG 6 FINAL FANTASY LEGEND II 17 BATTLETOADS 6 FLAPPY SPECIAL 17 BATTLE UNIT ZEOTH 6 FORTRESS OF FEAR i8 BEST OF THE BEST 7 GARFIELD i8 BILL £ TED’S EXCELLENT GARGOYLE’S QUEST 19 ADVENTURE 7 GODZILLA 19 BLASTER MASTER BOY 7 GOLF 20 BOMBERBOY 7 HUDSON HAWK 20 BOMBERMAN 8 HUMANS 20 BOOMERS ADVENTURE 8 JAMES BOND JR. 21 BUBBLE BOBBLE 9 KID DRACULA 21 BUGS BUNNY 10 KID ICARUS 21 BURAI FIGHTER 10 KING OF THE ZOO 21 BURAI FIGHTER DELUXE II KIRBY’S DREAMLAND 22 BURGER TIME DELUXE II KIRBY’S PINBALL LAND 22 CAPTAIN PLANET II KLAX 22 CAPTAIN SKYHAWK 12 KRUSTY’S FUNHOUSE 22 CASTLEVANIA 12 LEMMINGS 23 CASTLEVANIA II 12 LOCK’N’CHASE 23 CATRAP 12 LOONEY TUNES 24 CHESSMASTER 13 MEGA MAN 24 CHOPLIFTER II '3 MEGA MAN 2 24 CRAZY CASTLE 2 '3 MERCENARY FORCE 25 DEADALIAN OPUS 14 METAL GEAR 25 AAICKEY AAOUSE 25 TINY TOONS 2 40 MICKEY’S DANGEROUS CHASE 25 TOM 8 JERRY 40 NARC 26 TOP GUN 40 NINJA BOY 26 TOP GUN II 40 NINJA GAIDEN 26 TURRICAN 41 OPERATION C 26 ULTIMA 41 PARODIUS 26 WHO FRAMED ROGER RABBIT 41 PIPE DREAM 27 WIZARDS & WARRIORS - POWER MISSION 27 THE FORTRESS OF FEAR 41 PRINCE OF PERSIA 27 WORLD BEACH VOLLEY 42 PROBOTECTOR 28 WORLD CUP SOCCER 42 PUZZNIC 28 ZELDA - LINKS -

Nintendo Co., Ltd

Nintendo Co., Ltd. Financial Results Briefing for the Six-Month Period Ended September 2010 (Briefing Date: 2010/10/29) Supplementary Information [Note] Forecasts announced by Nintendo Co., Ltd. herein are prepared based on management's assumptions with information available at this time and therefore involve known and unknown risks and uncertainties. Please note such risks and uncertainties may cause the actual results to be materially different from the forecasts (earnings forecast, dividend forecast and other forecasts). Nintendo Co., Ltd. Semi-Annual Consolidated Statements of Income Transition million yen FY3/2007 FY3/2008 FY3/2009 FY3/2010 FY3/2011 Apr.-Sept.'06 Apr.-Sept.'07 Apr.-Sept.'08 Apr.-Sept.'09 Apr.-Sept.'10 Net sales 298,817 694,803 836,879 548,058 363,160 Cost of sales 162,974 413,048 469,831 341,759 214,369 Gross profit 135,842 281,754 367,048 206,298 148,791 (Gross profit ratio) (45.5%) (40.6%) (43.9%) (37.6%) (41.0%) Selling, general, and administrative expenses 68,730 92,969 114,864 101,937 94,558 Operating income 67,111 188,784 252,183 104,360 54,232 (Operating income ratio) (22.5%) (27.2%) (30.1%) (19.0%) (14.9%) Non-operating income 27,958 27,259 20,528 7,990 4,849 (of which foreign exchange gains) (10,057) (2,149) ( - ) ( - ) ( - ) Non-operating expenses 393 666 35,404 1,737 63,234 (of which foreign exchange losses) ( - ) ( - ) (33,584) (664) (62,175) Ordinary income 94,676 215,376 237,306 110,613 -4,152 (Ordinary income ratio) (31.7%) (31.0%) (28.4%) (20.2%) (-1.1%) Extraordinary income 253 3,885 1,959 4,311 190 Extraordinary loss 1 1,623 92 2,306 18 Income before income taxes and minority interests 94,929 217,639 239,172 112,618 -3,981 Income taxes 40,602 85,294 94,329 43,107 -1,960 Income before minority interests - - - - -2,020 Minority interests in income -18 -76 15 18 -9 Net income 54,345 132,421 144,828 69,492 -2,011 (Net income ratio) (18.2%) (19.1%) (17.3%) (12.7%) (-0.6%) - 1 - Nintendo Co., Ltd. -

Mario Level Generation from Mechanics Using Scene Stitching

Mario Level Generation From Mechanics Using Scene Stitching Michael Cerny Green* Luvneesh Mugrai* Ahmed Khalifa Julian Togelius Game Innovation Lab Game Innovation Lab Game Innovation Lab Game Innovation Lab New York University New York University New York University New York University Brooklyn, USA Brooklyn, USA Brooklyn, USA Brooklyn, USA [email protected] [email protected] [email protected] [email protected] Abstract—Video game tutorials allow players to gain mastery Evolving entire levels to be individually personalized is over game skills and mechanics. To hone players’ skills, it is not trivial. In this paper we propose a method of multi-step beneficial from practicing in environments that promote individ- evolution. Mini-level “scenes” that showcase varying subsets ual player skill sets. However, automatically generating environ- ments which are mechanically similar to one-another is a non- of game mechanics were evolved in previous research [8] using trivial problem. This paper presents a level generation method a Constrained Map-Elites algorithm [7] and stored within for Super Mario by stitching together pre-generated “scenes” a publicly available library*. The system uses these scenes that contain specific mechanics, using mechanic-sequences from with an FI-2Pop [9] optimizer to evolve the best “sequence agent playthroughs as input specifications. Given a sequence of of scenes,” with the target being to exactly match a specific mechanics, the proposed system uses an FI-2Pop algorithm and a corpus of scenes to perform automated level authoring. The player’s mechanical playtrace. proposed system outputs levels that can be beaten using a similar By breaking this problem down into smaller parts, the mechanical sequence to the target mechanic sequence but with system is to focus more on the bigger picture of full level a different playthrough experience. -

Female Fighters

Press Start Female Fighters Female Fighters: Perceptions of Femininity in the Super Smash Bros. Community John Adams High Point University, USA Abstract This study takes on a qualitative analysis of the online forum, SmashBoards, to examine the way gender is perceived and acted upon in the community surrounding the Super Smash Bros. series. A total of 284 comments on the forum were analyzed using the concepts of gender performativity and symbolic interactionism to determine the perceptions of femininity, reactions to female players, and the understanding of masculinity within the community. Ultimately, although hypermasculine performances were present, a focus on the technical aspects of the game tended to take priority over any understanding of gender, resulting in a generally ambiguous approach to femininity. Keywords Nintendo; Super Smash Bros; gender performativity; symbolic interactionism; sexualization; hypermasculinity Press Start Volume 3 | Issue 1 | 2016 ISSN: 2055-8198 URL: http://press-start.gla.ac.uk Press Start is an open access student journal that publishes the best undergraduate and postgraduate research, essays and dissertations from across the multidisciplinary subject of game studies. Press Start is published by HATII at the University of Glasgow. Adams Female Fighters Introduction Examinations of gender in mainstream gaming circles typically follow communities surrounding hypermasculine games, in which members harass those who do not conform to hegemonic gender norms (Consalvo, 2012; Gray, 2011; Pulos, 2011), but do not tend to reach communities surrounding other types of games, wherein their less hypermasculine nature shapes the community. The Super Smash Bros. franchise stands as an example of this less examined type of game community, with considerably more representation of women and a colorful, simplified, and gore-free style. -

Nintendo Co., Ltd

Nintendo Co., Ltd. Earnings Release for the Three-Month Period Ended June 2010 Supplementary Information [Note] Forecasts announced by Nintendo Co., Ltd. herein are prepared based on management's assumptions with information available at this time and therefore involve known and unknown risks and uncertainties. Please note such risks and uncertainties may cause the actual results to be materially different from the forecasts (earnings forecast, dividend forecast and other forecasts). Nintendo Co., Ltd. Consolidated Statements of Income Transition million yen FY3/2007 FY3/2008 FY3/2009 FY3/2010 FY3/2011 Apr.-June'06 Apr.-June'07 Apr.-June'08 Apr.-June'09 Apr.-June'10 Net sales 130,919 340,439 423,380 253,498 188,646 Cost of sales 67,876 201,336 243,691 161,940 118,298 Gross profit 63,043 139,103 179,688 91,558 70,348 (Gross profit ratio) (48.2%) (40.9%) (42.4%) (36.1%) (37.3%) Selling, general, and administrative expenses 34,241 48,471 60,496 51,156 47,005 Operating income 28,802 90,631 119,192 40,401 23,342 (Operating income ratio) (22.0%) (26.6%) (28.2%) (15.9%) (12.4%) Non-operating income 7,708 41,144 58,251 24,761 2,169 (of which foreign exchange gains) ( - ) (29,032) (47,844) (20,088) ( - ) Non-operating expenses 3,840 326 551 338 71,567 (of which foreign exchange losses) (3,462) ( - ) ( - ) ( - ) (70,594) Ordinary income 32,670 131,449 176,892 64,824 -46,055 (Ordinary income ratio) (25.0%) (38.6%) (41.8%) (25.6%) (-24.4%) Extraordinary income 6 649 3,625 5,227 134 Extraordinary loss 123 15 52 2,308 17 Income before income taxes and minority interests 32,553 132,084 180,464 67,743 -45,938 Income taxes 17,012 51,898 73,101 25,367 -20,687 Income before minority interests - - - - -25,250 Minority interests in income -10 -66 96 58 -33 Net income 15,551 80,251 107,267 42,316 -25,216 (Net income ratio) (11.9%) (23.6%) (25.3%) (16.7%) (-13.4%) - 1 - Nintendo Co., Ltd. -

Nintendo Co., Ltd

Nintendo Co., Ltd. Financial Results Briefing for Fiscal Year Ended March 2011 (Briefing Date: 2011/4/26) Supplementary Information [Note] Forecasts announced by Nintendo Co., Ltd. herein are prepared based on management's assumptions with information available at this time and therefore involve known and unknown risks and uncertainties. Please note such risks and uncertainties may cause the actual results to be materially different from the forecasts (earnings forecast, dividend forecast and other forecasts). Nintendo Co., Ltd. Consolidated Statements of Income Transition million yen FY3/2007 FY3/2008 FY3/2009 FY3/2010 FY3/2011 Net sales 966,534 1,672,423 1,838,622 1,434,365 1,014,345 Cost of sales 568,722 972,362 1,044,981 859,131 626,379 Gross profit 397,812 700,060 793,641 575,234 387,965 (Gross profit ratio) (41.2%) (41.9%) (43.2%) (40.1%) (38.2%) Selling, general, and administrative expenses 171,787 212,840 238,378 218,666 216,889 Operating income 226,024 487,220 555,263 356,567 171,076 (Operating income ratio) (23.4%) (29.1%) (30.2%) (24.9%) (16.9%) Non-operating income 63,830 48,564 32,159 11,082 8,602 (of which foreign exchange gains) (25,741) ( - ) ( - ) ( - ) ( - ) Non-operating expenses 1,015 94,977 138,727 3,325 51,577 (of which foreign exchange losses) ( - ) (92,346) (133,908) (204) (49,429) Ordinary income 288,839 440,807 448,695 364,324 128,101 (Ordinary income ratio) (29.9%) (26.4%) (24.4%) (25.4%) (12.6%) Extraordinary income 1,482 3,934 339 5,399 186 Extraordinary loss 720 10,966 902 2,282 353 Income before income taxes and minority interests 289,601 433,775 448,132 367,442 127,934 Income taxes 115,348 176,532 169,134 138,896 50,262 Income before minority interests - - - - 77,671 Minority interests in income -37 -99 -91 -89 50 Net income 174,290 257,342 279,089 228,635 77,621 (Net income ratio) (18.0%) (15.4%) (15.2%) (15.9%) (7.7%) - 1 - Nintendo Co., Ltd. -

The BG News April 15, 2019

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 4-15-2019 The BG News April 15, 2019 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation State University, Bowling Green, "The BG News April 15, 2019" (2019). BG News (Student Newspaper). 9096. https://scholarworks.bgsu.edu/bg-news/9096 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Book is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. FOR THE KIDS bg Over $255k raised at annual news Dance Marathon | PAGE 11 An independent student press serving the campus and surrounding community, ESTABLISHED 1920 Bowling Green State University Monday, April 15, 2019 Volume 98, Issue 49 PHOTO BY ABBY SHIFLEY FOR RENT GREAT SELECTION CLOSE TO CAMPUS SIGNING LEASES GREAT PRICES FOR SUMMER Complete Rental Listings Available AND FALL 2019 in our rental office or online Monday to Friday - 8:30 to 5:30 OPPORTUNITY Saturday - 9:00 to 3:00 419-354-2260 319 E. WOOSTER ST. BOWLING GREEN BE SMART. STUDENT LEGAL SERVICES BE RESPONSIBLE. BE COVERED. REAL LAWYERS | REAL RESULTS just $9/semester [email protected] | 419-372-2951 www.bgsu.edu/sls BG NEWS April 15, 2019 | PAGE 2 Students being more cautious with Uber, Lyft Mary Ross would rather get an Uber to travel home in the junior adolescent and young adult education both and I’ve gotten the description and the Columnist dark than walk home in the dark.” major Moriah Garcia is more motivated to be plates on both of them. -

Blending Levels from Different Games Using Lstms

Blending Levels from Different Games using LSTMs Anurag Sarkar and Seth Cooper Northeastern University, Boston, Massachusetts, USA [email protected], [email protected] Abstract well as blending existing levels (Guzdial and Riedl 2016b). Moreover, Gow and Corneli (2015) proposed a theoretical Recent work has shown machine learning approaches to be framework for generating new games by blending not just effective in training models on existing game data for in- forming procedural generation of novel content. Specifically, levels but entire games, showing that it is possible to blend ML techniques have successfully used existing game levels two games to create a third whose aesthetics and mechanics to train models for generating new levels and blending exist- are combinations of those of the original two games. ing ones. Such blending of not just levels, but entire games, In this work, we take a step towards implementing this has also been proposed as a possible means of generating framework by leveraging PCGML and concept blending. new games. In this paper, we build on these works by train- Specifically, we train Long Short Term Memory recurrent ing Long Short Term Memory recurrent neural networks on neural networks (LSTMs) on existing levels of the plat- level data for two separate platformer games—Super Mario formers Super Mario Bros. and Kid Icarus. We then sample Bros. and Kid Icarus—and use these models to create new from the trained models to generate new levels that encode levels that are blends of levels from both games, thus creating structural characteristics and properties of levels from both a level generator for a novel, third game. -

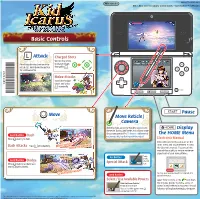

Basic Controls

MAA-CTR-AKDP-UKV NINTENDO 3DS SOFTWARE QUICK GUIDE – KID ICARUS™: UPRISING Basic Controls Attack Charged Shots Wait for the reticle Pit will attack in the direction of the to change shape, reticle . Hold down the button then press . for continuous fi re. Melee Attacks Stand close to your target and press repeatedly. Pause Move Move Reticle / Camera Slide the stylus across the Touch Screen to move Display the reticle. During Land Battles, this will also rotate the camera around Pit. To face in a different di- the HOME Menu Land Battles Dash rection quickly, try fl icking with the stylus! Move quickly to dash. Electronic Manual Select the icon for this software on the Dash Attacks Press while dashing. More range! HOME Menu and touch MANUAL to view More fi repower! the electronic manual. Please read this manual thoroughly to ensure maximum enjoyment of your new software. Touch Air Battles a fully- Land Battles Dodge Special Attack charged Move quickly just before an icon. enemy attack connects. Graceful evasion! You may also need to consult the Nintendo 3DS Land Battles Operations Manual. Select / Use Available Powers Select HOW TO PLAY or the icon from Slide the Power Palette / the in-game menus to view a series of Touch an icon to use videos in which the main characters, Pit and You can also select powers with Palutena, will explain how to play the game. and use them with . This software title includes an electronic manual to reduce the amount of paper used in its packaging. For support, please consult the electronic manual, the Nintendo 3DS Operations Manual or the Nintendo website. -

Nintendo Fun Club News Issue 03 Fall 1987

N 1 N T D 0 Jl JN iiiiji rail! \sW5 Vol. 1 No. 3 Fall 1987 1 The Legend of’ New Maps, Hot Tips Password Vi Punch -Out! !, Rad L Sneak PeeflT mm Now you can bring home your favorite smash arcade hits for play on the Nintendo Entertainment System;.. and bring out excitement, action and all the adventure you dare! We’ve got the thrills, the chills and the challenge you and your friends won’t want to miss! / [(Nintendo)' 1 i of America TAITO AMERICA CORPORATION 1987 Taito America Corporation. All rights reserved. Elevator Action,” Arkanoid.” The Legend of Kage” and Renegade” are trademarks of Taito America Corporation. C Taito. , Nintendo Fun Club News Fall 1987 PAGE 3 The Name of The Game is Challenge! An important message from master gamesman and President ofthe Nintendo Fun Club— Howard Phillips This is our third issue of the Nintendo Fun Club News. If you’re an established member, you’ll see it has expanded. It has more news, more color, and looks more like a magazine If you’re a new member, welcome to the Fun Club! We think you’ll enjoy this issue and many more to come. Thanks to your support, letters, and enthusiasm, we are rapidly expanding the Nintendo Fun Club. I think the main reason for our success is the fun of the video game challenge. Our games are increasing in sophistication. And that gives you a chance to develop more game skills, master tougher challenges, and have more fun than ever. Special game features such as a “password” which allows you to con- tinue play where you left off, and new products like the NES Advantage joystick are just a couple of examples of how we are always finding new ways to challenge your skill and add to your fun. -

The Official Nintendo Player's Guide (1987)

The Official Nintendo Player's Guide fl complete review of over 90 games for your N.E.5. The Player’s Choice JJONKEYKONG BASEBALL TENNIS * MIKE TYSON'S DUCK HUNT PUNCH OUT!! HOGAN'S ALLEY 10-YARD FJGHJ li/elcome You are probably already familiar with many of the great video games produced by Nintendo and other manufacturers who make game paks that can be played on your Nintendo® Entertainment System™. But The'Official Nintendo Player's Guide™ is designed to help make you a video game expert! This exciting new guide is packed with the kind of information #that will help you maximize your game skills. You'll see how to play faster, conquer your enemies with all-new secrets and tricks, and send your video game scores into the stratosphere! To help you add more power to your game play, we have included in-depth reviews of 24 hot video titles plus a section covering all Nintendo games (90 at present) with concise game reviews which give you the most important facts, figures, and action moves at a glance. Full color game screens are shown in all reviews, and color coding is used throughout to help identify each different series. See our Color Guide on Page Four. At Nintendo, we're committed to providing you with innovative new video games that are as challenging as they are fun. And we've expanded the action of game play with space-age accessories like the new NES MAX™ Joy Pad, the Zapper®, a revolutionary light-sensing video gun, and the NES Advantage™ Joystick which gives you the power, handling, and performance just like arcade joysticks.