Multi-Domain Level Generation and Blending with Sketches Via Example

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Illuminating the Space of Beatable Lode Runner Levels Produced by Various Generative Adversarial Networks

Kirby Steckel and Jacob Schrum, Southwestern University, Georgetown, Texas, USA. [email protected], [email protected]

ABSTRACT: Generative Adversarial Networks (GANs) are capable of generating convincing imitations of elements from a training set, but the distribution of elements in the training set affects the difficulty of properly training the GAN and the quality of the outputs it produces. This paper looks at six different GANs trained on different subsets of data from the game Lode Runner. The quality diversity algorithm MAP-Elites was used to explore the set of quality levels that could be produced by each GAN, where quality was defined as being beatable and having the longest solution path possible. Interestingly, a GAN trained on only 20 levels generated the largest set of diverse beatable levels while a GAN trained on 150 levels generated the [...]

[...] the training set influences the results. Specifically, the quality diversity algorithm MAP-Elites [11] is used to illuminate the search spaces induced by several GANs, to determine the diversity they can produce, and see how many of those levels are actually beatable. Out of the original 150 levels in the game, training sets of the first 5, 20, 50, 100, and 150 levels were created, as well as a group of 13 levels that are similar in that their layouts depict words and letters. Contrary to expectations, the GAN trained on all 150 Lode Runner levels did not produce many beatable levels, and was middling in terms of the diversity of the levels produced. Although the diversity of the full training set was larger, the GAN seemed more prone to mode collapse [20] as a result.
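The MAP-Elites search mentioned in this excerpt can be illustrated with a minimal sketch. It is not the authors' implementation: the names `map_elites`, `toy_sample`, `toy_mutate`, and `toy_evaluate` are hypothetical, a 2-D vector stands in for the GAN latent input, a toy score stands in for the solution-path length, and the 10% random-restart rate is an arbitrary simplification.

```python
import random


def map_elites(evaluate, sample, mutate, iterations=1000, seed=0):
    """Minimal MAP-Elites loop: keep the fittest solution seen in each behavior bin."""
    rng = random.Random(seed)
    archive = {}  # bin key -> (fitness, solution)
    for _ in range(iterations):
        if archive and rng.random() < 0.9:
            # Usually mutate an elite drawn uniformly from the archive.
            _, parent = rng.choice(list(archive.values()))
            candidate = mutate(parent, rng)
        else:
            # Otherwise draw a fresh random solution.
            candidate = sample(rng)
        fitness, bin_key = evaluate(candidate)
        if fitness is None:
            continue  # e.g. a generated level that is not beatable
        if bin_key not in archive or fitness > archive[bin_key][0]:
            archive[bin_key] = (fitness, candidate)
    return archive


# Hypothetical stand-ins: a 2-D "latent vector" replaces the GAN input, and a
# toy score replaces the solution-path length of a generated level.
def toy_sample(rng):
    return (rng.uniform(-1, 1), rng.uniform(-1, 1))


def toy_mutate(z, rng):
    # Gaussian perturbation, clamped to the [-1, 1] range.
    return tuple(max(-1.0, min(1.0, v + rng.gauss(0, 0.1))) for v in z)


def toy_evaluate(z, bins=5):
    # Behavior bin: which cell of a bins x bins grid the vector falls in.
    key = tuple(min(int((v + 1) / 2 * bins), bins - 1) for v in z)
    fitness = 1.0 - abs(z[0] * z[1])
    return fitness, key


archive = map_elites(toy_evaluate, toy_sample, toy_mutate)
print(len(archive), "of 25 bins filled")
```

In a setting like the one described, `evaluate` would presumably generate a level from the latent vector, run a solver, and return `None` as the fitness for unbeatable levels, so that only beatable levels enter the archive.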

Lode Runner 2 Is the Latest Addition to the Family

WELCOME In the late eighties the arcades were seeing their bleakest days, and those of us who couldn’t get enough video junk bought home computers for a quick fix. It was during one of these episodes in 1987 on my Apple IIgs that I first discovered Lode Runner. Ironically, I was a late bloomer to the whole Lode Runner scene. The game first appeared four years earlier on the Apple II; I owned an Apple IIgs at the time and could only run genuine Apple II software in a downgraded state called, ‘emulation mode.’ My gs and its 7 MHz of power were state of the art. Who was I to trifle with this silly, outdated game called ‘Lode Runner’ when I could harvest some testosterone with 4 bit graphics and stereo sound? Why waste my time on such antiquated trivialities? Too much bother for me and my supreme gs! Bah! Lode Schmode! Well, I did bother, and thanks be to God that I did. I think about three weeks after I booted the dumb thing I got up to go to the bathroom. I remember dreaming more than once during that period that I was the Lode Runner. The incessant ‘peyoo-peyoo’ of the digging sound effect was more etched into my brain than the ever-popular Thompson Twins of the time (another thanks-be-to-God on that one). It was mythologized on UUnet that I could dig a five-tiered wall and live to tell about it. I was this game and this game was I. -

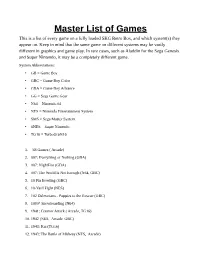

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games. This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800)

Video Game Archive: Nintendo 64

An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese, Janelle Knight, Edward Matava, and Matthew Hurlbut-Coke. Date: 22nd March 2021. Report submitted to: Professor Dean O’Donnell, Worcester Polytechnic Institute.

This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review.

Abstract: This project was an attempt to expand and document the Gordon Library’s Video Game Archive, more specifically the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64.

Table of Contents: Abstract; Table of Contents; Table of Figures; Acknowledgements; Executive Summary; 1 - Introduction; 2 - Background; 2.1 - A Brief History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996; 2.2 - The Console and its Competitors; Development of the Console

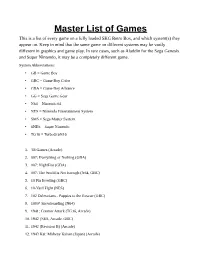

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

View List of Games Here

Game List SUPER MARIO BROS MARIO 14 SUPER MARIO BROS3 DR MARIO MARIO BROS TURTLE1 TURTLE FIGHTER CONTRA 24IN1 CONTRA FORCE SUPER CONTRA 7 KAGE JACKAL RUSH N ATTACK ADVENTURE ISLAND ADVENTURE ISLAND2 CHIP DALE1 CHIP DALE3 BUBBLE BOBBLE PART2 SNOW BROS MITSUME GA TOORU NINJA GAIDEN2 DOUBLE DRAGON2 DOUBLE DRAGON3 HOT BLOOD HIGH SCHOOL HOT BLOOD WRESTLE ROBOCOP MORTAL KOMBAT IV SPIDER MAN 10 YARD FIGHT TANK A1990 THE LEGEND OF KAGE ALADDIN3 ANTARCTIC ADVENTURE ARABIAN BALLOON FIGHT BASE BALL BINARY LAND BIRD WEEK BOMBER MAN BOMB SWEEPER BRUSH ROLLER BURGER TIME CHAKN POP CHESS CIRCUS CHARLIE CLU CLU LAND FIELD COMBAT DEFENDER DEVIL WORLD DIG DUG DONKEY KONG DONKEY KONG JR DONKEY KONG3 DONKEY KONG JR MATH DOOR DOOR EXCITEBIKE EXERION F1 RACE FORMATION Z FRONT LINE GALAGA GALAXIAN GOLF RAIDON BUNGELING BAY HYPER OLYMPIC HYPER SPORTS ICE CLIMBER JOUST KARATEKA LODE RUNNER LUNAR BALL MACROSS JEWELRY 4 MAHJONG MAHJONG MAPPY NUTS MILK MILLIPEDE MUSCLE NAITOU9 DAN SHOUGI H NIBBLES NINJA 1 NINJA3 ROAD FIGHTER OTHELLO PAC MAN PINBALL POOYAN POPEYE SKY DESTROYER Space ET STAR FORCE STAR GATE TENNIS URBAN CHAMPION WARPMAN YIE AR KUNG FU ZIPPY RACE WAREHOUSE BOY 1942 ARKANOID ASTRO ROBO SASA B WINGS BADMINGTON BALTRON BOKOSUKA WARS MIGHTY BOMB JACK PORTER CHUBBY CHERUB DESTROYI GIG DUG2 DOUGH BOY DRAGON TOWER OF DRUAGA DUCK ELEVATOR ACTION EXED EXES FLAPPY FRUITDISH GALG GEIMOS GYRODINE HEXA ICE HOCKEY LOT LOT MAGMAX PIKA CHU NINJA 2 QBAKE ONYANKO TOWN PAC LAND PACHI COM PRO WRESTLING PYRAMID ROUTE16 TURBO SEICROSS SLALOM SOCCER SON SON SPARTAN X SPELUNKER -

Das Nintendo-Schummelbuch

TABLE OF CONTENTS: FOREWORD; MANY THANKS; THE AUTHOR; GAME BOY CHEATS.

GAME BOY CHEATS: ADDAMS FAMILY, ADVENTURE ISLAND, ADVENTURES OF LOLO, AFTERBURST, ALLEYWAY, BART VS THE JUGGERNAUTS, BATMAN, BATTLE BULL, BATTLE PING PONG, BATTLETOADS, BATTLE UNIT ZEOTH, BEST OF THE BEST, BILL & TED’S EXCELLENT ADVENTURE, BLASTER MASTER BOY, BOMBERBOY, BOMBERMAN, BOOMERS ADVENTURE, BUBBLE BOBBLE, BUGS BUNNY, BURAI FIGHTER, BURAI FIGHTER DELUXE, BURGER TIME DELUXE, CAPTAIN PLANET, CAPTAIN SKYHAWK, CASTLEVANIA, CASTLEVANIA II, CATRAP, CHESSMASTER, CHOPLIFTER II, CRAZY CASTLE 2, DAEDALIAN OPUS, DEAD HEAT SCRAMB, DR. FRANKEN, DR. FRANKEN II, DYNABLASTER, ELEVATOR ACTION, F-15 STRIKE EAGLE, FACEBALL 2000, FERRARI GRAND PRIX CHALLENGE, FINAL FANTASY LEGEND II, FLAPPY SPECIAL, FORTRESS OF FEAR, GARFIELD, GARGOYLE’S QUEST, GODZILLA, GOLF, HUDSON HAWK, HUMANS, JAMES BOND JR., KID DRACULA, KID ICARUS, KING OF THE ZOO, KIRBY’S DREAMLAND, KIRBY’S PINBALL LAND, KLAX, KRUSTY’S FUNHOUSE, LEMMINGS, LOCK’N’CHASE, LOONEY TUNES, MEGA MAN, MEGA MAN 2, MERCENARY FORCE, METAL GEAR, MICKEY MOUSE, MICKEY’S DANGEROUS CHASE, NARC, NINJA BOY, NINJA GAIDEN, OPERATION C, PARODIUS, PIPE DREAM, POWER MISSION, PRINCE OF PERSIA, PROBOTECTOR, PUZZNIC, TINY TOONS 2, TOM & JERRY, TOP GUN, TOP GUN II, TURRICAN, ULTIMA, WHO FRAMED ROGER RABBIT, WIZARDS & WARRIORS - THE FORTRESS OF FEAR, WORLD BEACH VOLLEY, WORLD CUP SOCCER, ZELDA - LINKS

Game Developer Index 2010 Foreword

SWEDISH GAME INDUSTRY’S REPORTS 2011. Game Developer Index 2010. Foreword.

It’s hard to imagine an industry where change is as rapid as in the games industry. Just a few years ago massive online games like World of Warcraft dominated, then came the breakthrough for party games like Singstar and Guitar Hero. Three years ago, Nintendo turned the gaming world upside-down with the Wii and motion controls, and shortly thereafter came the Facebook games and Farmville, which garnered over 100 million users. Today, apps for both the iPhone and Android dominate the evolution. Technology, business models, game design and marketing are changing almost every year, and above all the public seems to quickly embrace and follow all these trends. Where will tomorrow’s earnings come from? How can one make even better games for the new platforms? How will the relationship between creator and audience change? These and many other issues are discussed intensively at conferences, in forums and in the specialist press.

Swedish success isn’t lacking in the new channels, with Minecraft’s unprecedented success or Battlefield Heroes to name two examples. The Independent Games Festival in San Francisco has had Swedish winners for four consecutive years, and most recently we won eight out of 22 prizes. It has been touted for two decades that digital distribution would outsell traditional box sales, and it looks like that shift is finally happening. Although approximately 85% of sales still go through physical channels, there is now a decline for the first time since tracking of the data began. The transformation of games from a product into a service seems to be here.

Gaming Is a Hard Job, but Someone Has to Do It!

GIOVANNI VIGLIETTA, Carleton University, Ottawa, Canada. [email protected]. October 29, 2013. arXiv:1201.4995v5 [cs.CC] 28 Oct 2013.

Abstract: We establish some general schemes relating the computational complexity of a video game to the presence of certain common elements or mechanics, such as destroyable paths, collectible items, doors opened by keys or activated by buttons or pressure plates, etc. Then we apply such “metatheorems” to several video games published between 1980 and 1998, including Pac-Man, Tron, Lode Runner, Boulder Dash, Deflektor, Mindbender, Pipe Mania, Skweek, Prince of Persia, Lemmings, Doom, Puzzle Bobble 3, and Starcraft. We obtain both new results, and improvements or alternative proofs of previously known results.

1 Introduction. This work was inspired mainly by the recent papers on the computational complexity of video games by Forišek [4] and Cormode [2], along with the excellent surveys on related topics by Kendall et al. [8] and Demaine et al. [3, 7], and may be regarded as their continuation on the same line of research. Our purpose is to single out certain recurring features or mechanics in a video game that enable general reduction schemes from known hard problems to the games we are considering. To this end, in Section 2 we produce several metatheorems that will be applied in Section 3 to a wealth of famous commercial video games, in order to automatically establish their hardness with respect to certain computational complexity classes (with a couple of exceptions). Because most recent commercial games incorporate Turing-equivalent scripting languages that easily allow the design of undecidable puzzles as part of the gameplay, we will focus primarily on older, “scriptless” games.

Nintendo Co., Ltd

Nintendo Co., Ltd. Financial Results Briefing for the Six-Month Period Ended September 2010 (Briefing Date: 2010/10/29). Supplementary Information.

[Note] Forecasts announced by Nintendo Co., Ltd. herein are prepared based on management's assumptions with information available at this time and therefore involve known and unknown risks and uncertainties. Please note such risks and uncertainties may cause the actual results to be materially different from the forecasts (earnings forecast, dividend forecast and other forecasts).

Nintendo Co., Ltd. Semi-Annual Consolidated Statements of Income Transition (million yen)

| | FY3/2007 (Apr.-Sept.'06) | FY3/2008 (Apr.-Sept.'07) | FY3/2009 (Apr.-Sept.'08) | FY3/2010 (Apr.-Sept.'09) | FY3/2011 (Apr.-Sept.'10) |
|---|---|---|---|---|---|
| Net sales | 298,817 | 694,803 | 836,879 | 548,058 | 363,160 |
| Cost of sales | 162,974 | 413,048 | 469,831 | 341,759 | 214,369 |
| Gross profit | 135,842 | 281,754 | 367,048 | 206,298 | 148,791 |
| (Gross profit ratio) | (45.5%) | (40.6%) | (43.9%) | (37.6%) | (41.0%) |
| Selling, general, and administrative expenses | 68,730 | 92,969 | 114,864 | 101,937 | 94,558 |
| Operating income | 67,111 | 188,784 | 252,183 | 104,360 | 54,232 |
| (Operating income ratio) | (22.5%) | (27.2%) | (30.1%) | (19.0%) | (14.9%) |
| Non-operating income | 27,958 | 27,259 | 20,528 | 7,990 | 4,849 |
| (of which foreign exchange gains) | (10,057) | (2,149) | ( - ) | ( - ) | ( - ) |
| Non-operating expenses | 393 | 666 | 35,404 | 1,737 | 63,234 |
| (of which foreign exchange losses) | ( - ) | ( - ) | (33,584) | (664) | (62,175) |
| Ordinary income | 94,676 | 215,376 | 237,306 | 110,613 | -4,152 |
| (Ordinary income ratio) | (31.7%) | (31.0%) | (28.4%) | (20.2%) | (-1.1%) |
| Extraordinary income | 253 | 3,885 | 1,959 | 4,311 | 190 |
| Extraordinary loss | 1 | 1,623 | 92 | 2,306 | 18 |
| Income before income taxes and minority interests | 94,929 | 217,639 | 239,172 | 112,618 | -3,981 |
| Income taxes | 40,602 | 85,294 | 94,329 | 43,107 | -1,960 |
| Income before minority interests | - | - | - | - | -2,020 |
| Minority interests in income | -18 | -76 | 15 | 18 | -9 |
| Net income | 54,345 | 132,421 | 144,828 | 69,492 | -2,011 |
| (Net income ratio) | (18.2%) | (19.1%) | (17.3%) | (12.7%) | (-0.6%) |

Mario Level Generation from Mechanics Using Scene Stitching

Michael Cerny Green*, Luvneesh Mugrai*, Ahmed Khalifa, Julian Togelius. Game Innovation Lab, New York University, Brooklyn, USA. [email protected], [email protected], [email protected], [email protected]

Abstract: Video game tutorials allow players to gain mastery over game skills and mechanics. To hone players’ skills, it is beneficial from practicing in environments that promote individual player skill sets. However, automatically generating environments which are mechanically similar to one-another is a non-trivial problem. This paper presents a level generation method for Super Mario by stitching together pre-generated “scenes” that contain specific mechanics, using mechanic-sequences from agent playthroughs as input specifications. Given a sequence of mechanics, the proposed system uses an FI-2Pop algorithm and a corpus of scenes to perform automated level authoring. The proposed system outputs levels that can be beaten using a similar mechanical sequence to the target mechanic sequence but with a different playthrough experience.

Evolving entire levels to be individually personalized is not trivial. In this paper we propose a method of multi-step evolution. Mini-level “scenes” that showcase varying subsets of game mechanics were evolved in previous research [8] using a Constrained Map-Elites algorithm [7] and stored within a publicly available library*. The system uses these scenes with an FI-2Pop [9] optimizer to evolve the best “sequence of scenes,” with the target being to exactly match a specific player’s mechanical playtrace. By breaking this problem down into smaller parts, the system is to focus more on the bigger picture of full level [...]
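The idea of evolving a sequence of scenes with FI-2Pop can be sketched roughly as follows. This is an illustrative toy, not the paper's system: the scene library `SCENES`, the `TARGET` mechanic sequence, the "no repeated neighbouring scene" constraint, and all function names are hypothetical, and the sketch uses mutation only where a fuller implementation would typically also use crossover.

```python
import random

# Hypothetical scene library: scene id -> the mechanic that scene showcases.
SCENES = {"a": "jump", "b": "stomp", "c": "coin", "d": "jump", "e": "coin"}
# Hypothetical target playtrace, expressed as a sequence of mechanics.
TARGET = ["jump", "coin", "stomp", "jump", "coin"]


def fitness(seq):
    """Number of positions whose scene matches the target mechanic."""
    return sum(SCENES[s] == m for s, m in zip(seq, TARGET))


def violation(seq):
    """Assumed constraint: the same scene must not appear twice in a row."""
    return sum(x == y for x, y in zip(seq, seq[1:]))


def random_sequence(rng):
    return [rng.choice(sorted(SCENES)) for _ in TARGET]


def mutate(seq, rng):
    # Replace one randomly chosen position with a random scene.
    child = list(seq)
    child[rng.randrange(len(child))] = rng.choice(sorted(SCENES))
    return child


def fi2pop(pop_size=20, generations=50, seed=0):
    """Feasible-infeasible two-population loop (mutation-only simplification)."""
    rng = random.Random(seed)
    feasible, infeasible = [], []

    def place(ind):
        # Individuals migrate to whichever population matches their feasibility.
        (feasible if violation(ind) == 0 else infeasible).append(ind)

    for _ in range(2 * pop_size):
        place(random_sequence(rng))
    for _ in range(generations):
        # Each non-empty population breeds its own offspring.
        offspring = [mutate(rng.choice(pop), rng)
                     for pop in (feasible, infeasible) if pop
                     for _ in range(pop_size)]
        for child in offspring:
            place(child)
        # Feasible individuals compete on fitness, infeasible ones on violation.
        feasible.sort(key=fitness, reverse=True)
        infeasible.sort(key=violation)
        del feasible[pop_size:]
        del infeasible[pop_size:]
    return feasible, infeasible


feasible, _ = fi2pop()
print(feasible[:1])
```

Keeping the infeasible population alive, ranked by how badly it violates the constraint, lets the search approach good sequences from both sides of the feasibility boundary instead of discarding every invalid candidate.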

Download 80 PLUS 4983 Horizontal Game List

4 player + 4983 Horizontal 10-Yard Fight (Japan) advmame 2P 10-Yard Fight (USA, Europe) nintendo 1941 - Counter Attack (Japan) supergrafx 1941: Counter Attack (World 900227) mame172 2P sim 1942 (Japan, USA) nintendo 1942 (set 1) advmame 2P alt 1943 Kai (Japan) pcengine 1943 Kai: Midway Kaisen (Japan) mame172 2P sim 1943: The Battle of Midway (Euro) mame172 2P sim 1943 - The Battle of Midway (USA) nintendo 1944: The Loop Master (USA 000620) mame172 2P sim 1945k III advmame 2P sim 19XX: The War Against Destiny (USA 951207) mame172 2P sim 2010 - The Graphic Action Game (USA, Europe) colecovision 2020 Super Baseball (set 1) fba 2P sim 2 On 2 Open Ice Challenge (rev 1.21) mame078 4P sim 36 Great Holes Starring Fred Couples (JU) (32X) [!] sega32x 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) fba 2P sim 3D Crazy Coaster vectrex 3D Mine Storm vectrex 3D Narrow Escape vectrex 3-D WorldRunner (USA) nintendo 3 Ninjas Kick Back (U) [!] megadrive 3 Ninjas Kick Back (U) supernintendo 4-D Warriors advmame 2P alt 4 Fun in 1 advmame 2P alt 4 Player Bowling Alley advmame 4P alt 600 advmame 2P alt 64th. Street - A Detective Story (World) advmame 2P sim 688 Attack Sub (UE) [!] megadrive 720 Degrees (rev 4) advmame 2P alt 720 Degrees (USA) nintendo 7th Saga supernintendo 800 Fathoms mame172 2P alt '88 Games mame172 4P alt / 2P sim 8 Eyes (USA) nintendo '99: The Last War advmame 2P alt AAAHH!!! Real Monsters (E) [!] supernintendo AAAHH!!! Real Monsters (UE) [!] megadrive Abadox - The Deadly Inner War (USA) nintendo A.B.