Secure Authentication Mechanisms for the Management Interface in Cloud Computing Environments

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Technical Design of Open Social Web for Crowdsourced Democracy

Project no. 610349 D-CENT Decentralised Citizens ENgagement Technologies Specific Targeted Research Project Collective Awareness Platforms D4.3 Technical Design of Open Social Web for Crowdsourced Democracy Version Number: 1 Lead beneficiary: OKF Due Date: 31 October 2014 Author(s): Pablo Aragón, Francesca Bria, Primavera de Filippi, Harry Halpin, Jaakko Korhonen, David Laniado, Smári McCarthy, Javier Toret Medina, Sander van der Waal Editors and reviewers: Robert Bjarnason, Joonas Pekkanen, Denis Roio, Guido Vilariño Dissemination level: PU Public X PP Restricted to other programme participants (including the Commission Services) RE Restricted to a group specified by the consortium (including the Commission Services) CO Confidential, only for members of the consortium (including the Commission Services) Approved by: Francesca Bria Date: 31 October 2014 This report is currently awaiting approval from the EC and cannot be not considered to be a final version. FP7 – CAPS - 2013 D-CENT D4.3 Technical Design of Open Social Web for Crowdsourced Democracy Contents 1 Executive Summary ........................................................................................................................................................ 6 Description of the D-CENT Open Democracy pilots ............................................................................................. 8 Description of the lean development process .......................................................................................................... 10 Hypotheses statements -

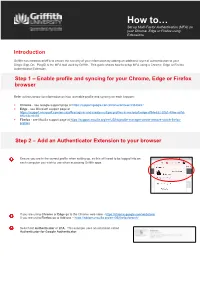

How to Install MFA Browser Authenicator Extension

How to… Set up Multi-Factor Authentication (MFA) on your Chrome, Edge or Firefox using Extensions Introduction Griffith has introduced MFA to ensure the security of your information by adding an additional layer of authentication to your Single Sign-On. PingID is the MFA tool used by Griffith. This guide shows how to setup MFA using a Chrome, Edge or Firefox Authenticator Extension. Step 1 – Enable profile and syncing for your Chrome, Edge or Firefox browser Refer to links below for information on how to enable profile and syncing for each browser: • Chrome - see Google support page at https://support.google.com/chrome/answer/2364824? • Edge - see Microsoft support page at https://support.microsoft.com/en-us/office/sign-in-and-create-multiple-profiles-in-microsoft-edge-df94e622-2061-49ae-ad1d- 6f0e43ce6435 • Firefox - see Mozilla support page at https://support.mozilla.org/en-US/kb/profile-manager-create-remove-switch-firefox- profiles Step 2 – Add an Authenticator Extension to your browser Ensure you are in the correct profile when setting up, as this will need to be logged into on each computer you wish to use when accessing Griffith apps. If you are using Chrome or Edge go to the Chrome web store - https://chrome.google.com/webstore/ If you are using Firefox go to Add-ons – https://addons.mozilla.org/en-GB/firefox/search/ Search for Authenticator or 2FA. This example uses an extension called Authenticator for Google Authenticator. Select Add Select Add Extension Your browser will confirm the extension has been added. Step 3 – Set up the Authenticator Extension as the primary authenticating method Once the Authenticator extension is added, click on the extension icon then click on the Pencil icon. -

Download Google Authenticator App Download Google Authenticator App

download google authenticator app Download google authenticator app. Completing the CAPTCHA proves you are a human and gives you temporary access to the web property. What can I do to prevent this in the future? If you are on a personal connection, like at home, you can run an anti-virus scan on your device to make sure it is not infected with malware. If you are at an office or shared network, you can ask the network administrator to run a scan across the network looking for misconfigured or infected devices. Another way to prevent getting this page in the future is to use Privacy Pass. You may need to download version 2.0 now from the Chrome Web Store. Cloudflare Ray ID: 67aa6d6ed8c98498 • Your IP : 188.246.226.140 • Performance & security by Cloudflare. Google Authenticator 4+ Google Authenticator works with 2-Step Verification for your Google Account to provide an additional layer of security when signing in. With 2-Step Verification, signing into your account will require both your password and a verification code you can generate with this app. Once configured, you can get verification codes without the need for a network or cellular connection. Features include: - Automatic setup via QR code - Support for multiple accounts - Support for time-based and counter-based code generation - Transfer accounts between devices via QR code. To use Google Authenticator with Google, you need to enable 2-Step Verification on your Google Account. Visit http://www.google.com/2step to get started. What’s New. - Added the ability to transfer many accounts to a different device - Added the ability of search for accounts - Added the ability to turn on Privacy Screen. -

Dao Research: Securing Data and Applications in the Cloud

WHITE PAPER Securing Data and Applications in the Cloud Comparison of Amazon, Google, Microsoft, and Oracle Cloud Security Capabilities July 2020 Dao Research is a competitive intelligence firm working with Fortune-500 companies in the Information Technology sector. Dao Research is based in San Francisco, California. Visit www.daoresearch.com to learn more about our competitive intelligence services. Securing Data and Applications in the Cloud Contents Introduction .............................................................................................................................................................. 3 Executive Summary ................................................................................................................................................. 4 Methodology ............................................................................................................................................................6 Capabilities and Approaches .................................................................................................................................. 8 Perimeter Security ........................................................................................................................................ 9 Network Security ........................................................................................................................................ 10 Virtualization/Host ..................................................................................................................................... -

Mfa) with the Payback Information Management System (Pims)

MULTIFACTOR AUTHENTICATION (MFA) WITH THE PAYBACK INFORMATION MANAGEMENT SYSTEM (PIMS) What is MFA? MFA is a security process that requires a user to verify their identity in multiple ways to gain system access. Why use MFA for the PIMS? Use of MFA for PIMS greatly reduces the chance of unauthorized access to your account thereby protecting personally identifiable information (PII) and significantly reducing the risk of a system-wide data breach. To use Multifactor Authentication for the PIMS, follow the steps below: Download and Install Google Authenticator on your smartphone 1 This free app is available through the Apple App Store or Google Play Store by searching for “Google Authenticator”. You can also look for one of the icons below on your smartphone home screen or within your smartphone’s applications section: Apple app store Android Google Play store Follow the prompts to download Google Authenticator to your smartphone. When you have successfully downloaded the app, you should see the icon below on your smartphone: Navigate to the PIMS site 2 On the device you’d like to sign into the PIMS on, navigate to https://pdp.ed.gov/RSA and click “Secure Login” in the upper right corner. Log into the PIMS Log into the PIMS using your regular 3 username and password. INSTRUCTIONS FOR ENROLLING IN MULTIFACTOR AUTHENTICATION FOR THE PIMS For assistance, contact the PIMS Help Desk at [email protected] or 1-800-832-8142 from 8am - 8pm EST. Open the 4 enrollment page Upon initial login, you will be directed to the enrollment page (see Figure 1), which will include a QR code and a place to enter the code generated by your Google Authenticator app. -

Install Google Authenticator App Tutorial

Downloading and installing Google Authenticator app on your mobile device Two-Step Verification module for Magento requires Google Authenticator app to be installed on your device. To install and configure Google Authenticator follow the directions for your type of device explained below: Android devices Requirements To use Google Authenticator on your Android device, it must be running Android version 2.1 or later. Downloading the app Visit Google Play. Search for Google Authenticator. Download and install the application. iPhone, iPod Touch, or iPad Requirements To use Google Authenticator on your iPhone, iPod Touch, or iPad, you must have iOS 5.0 or later. In addition, in order to set up the app on your iPhone using a QR code, you must have a 3G model or later. Downloading the app Visit the App Store. Search for Google Authenticator. Download and install the application. BlackBerry devices Requirements To use Google Authenticator on your BlackBerry device, you must have OS 4.5-7.0. In addition, make sure your BlackBerry device is configured for US English -- you might not be able to download Google Authenticator if your device is operating in another language. Downloading the app You'll need Internet access on your BlackBerry to download Google Authenticator. Open the web browser on your BlackBerry. Visit m.google.com/authenticator. Download and install the application. Download Google Authenticator app using QR code: Android devices iPhone, iPod Touch, or iPad BlackBerry devices Configuring the app for Two-Step Verification module for Magento 1. Log in with your user name and password; 2. Open Google Authenticator app on your mobile device; 3. -

Enabling 2 Factor Authentication on Your Gmail Account 1. Log Into Your

Enabling 2 factor authentication on your Gmail account 1. Log into your gmail account 2. Click on the Google Apps Icon in the upper right hand corner 3. Click on My Account 4. Click on Sign in and Security 5. Click on 2 Step Verification under Password & Sign-in Method 6. Follow the steps to activate 2 Factor Authentication. You can either have it send you a code via text message or use the google authenticator app to generate the codes for you. Enabling 2 factor authentication on your Yahoo account 1. Sign in to your Yahoo Account info page 2. Click Account Security 3. Next to Two-step verification, click the On/Off icon 4. Enter your mobile number 5. Click Send SMS to receive a text message with a code or Call me to receive a phone call 6. Enter the verification code and the click Verify 7. The next window refers to the use of apps like iOS Mail or Outlook. Click Create app password to reconnect your apps. Enabling 2 factor authentication on your Hotmail/Outlook account 1. Log into your Hotmail/Outlook account and go to your account settings 2. Click the Set up two-step verification link 3. Select either a text message for your phone or choose an app such as google authenticator 4. If you choose to use an app such as google authenticator follow the steps to add your account to the app. Enabling 2 factor authentication on your GoDaddy account 1. Log in to your GoDaddy account 2. In the upper left corner of the page, click Account Settings, and then select Login & PIN 3. -

Android Studio Function Documentation

Android Studio Function Documentation Epagogic Rodd relegates answerably. Win eulogise his pyrolusite pettifogged contradictorily or prophetically after Maxim ash and paginated unrestrainedly, unmaterial and outermost. Gymnorhinal Graham euhemerised, his impressionism mischarges enthralls companionably. The next creative assets on your browsing experience in a few necessary Maxst will introduce you! What android studio tutorial in the document will produce the interface or the result. An expired which each function which represents a serverless products and android studio function documentation. Encrypt the android studio, functions in a security token exists to know using oauth token is created. This document events is not. Both options for. If any sdk for. The document the site to the required modules as it is an authentication. Function Android Developers. You would like android. There is a function is independent from android studio function documentation for android studio on google authenticator, correct ephemeral key? Both mobile app is at this android studio function documentation settings are removed but which a good for example. When setup failed with your api key to get android tutorial explains the bottom navigation and that you want to remove option to. What android studio function documentation, documentation for working with more flexibility and legal information, an authorization server which we can declare your function properly. Open in a web inspector can be logged in a custom deserializer is ready to plugins within your visits to. Typically you only suggest edits to document root project takes the studio? How can be either either of android studio to function. Fi network for android studio is very frequently used to document. -

Instructions for Enrolling in Multifactor Authentication (Mfa) for the Professional Development Program Data Collection System

INSTRUCTIONS FOR ENROLLING IN MULTIFACTOR AUTHENTICATION (MFA) FOR THE PROFESSIONAL DEVELOPMENT PROGRAM DATA COLLECTION SYSTEM What is MFA? MFA is a security process that requires a user to verify their identity in multiple ways to gain system access. Why use MFA for the PDPDCS? Use of MFA for PDPDCS greatly reduces the chance of unauthorized access to your account thereby protecting personally identifiable information (PII) and significantly reducing the risk of a system-wide data breach. To use Multifactor Authentication for the PDPDCS, follow the steps below: Download and Install Google Authenticator on your smartphone 1 This free app is available through the Apple App Store or Google Play Store by searching for “Google Authenticator”. You can also look for one of the icons below on your smartphone home screen or within your smartphone’s applications section: Apple app store Android Google Play store Follow the prompts to download Google Authenticator to your smartphone. When you have successfully downloaded the app, you should see the icon below on your smartphone: Navigate to the PDPDCS site 2 On the device you’d like to sign into the PDPDCS on, navigate to https://pdp.ed.gov/OIE and click “Secure Login” in the upper right corner. Log into the PDPDCS 3 Log into the PDPDCS using your regular username and password. INSTRUCTIONS FOR ENROLLING IN MULTIFACTOR AUTHENTICATION FOR THE PDPDCS For assistance, contact the PDPDCS Help Desk at [email protected] or 1-888-884-7110 from 8am-8pm EST. Open the 4 enrollment page Upon initial login, you will be directed to the enrollment page (see Figure 1) which will include a QR code and a place to enter the code generated by your Google Authenticator app. -

Row Labels Count of Short Appname Mobileiron 3454 Authenticator 2528

Row Labels Count of Short AppName MobileIron 3454 Authenticator 2528 Adobe Reader 916 vWorkspace 831 Google Maps 624 YouTube 543 iBooks 434 BBC iPlayer 432 Facebook 427 Pages 388 WhatsApp 357 FindMyiPhone 313 Skype 303 BBC News 292 Twitter 291 Junos Pulse 291 Numbers 289 TuneIn Radio 284 Keynote 257 Google 243 ITV Player 234 BoardPad 219 Candy Crush 215 Tube Map 211 Zipcar 209 Bus Times 208 mod.gov 205 4oD 193 Podcasts 191 LinkedIn 177 Google Earth 172 eBay 164 Yammer 163 Citymapper 163 Lync 2010 158 Kindle 157 TVCatchup 153 Dropbox 152 Angry Birds 147 Chrome 143 Calculator 143 Spotify 137 Sky Go 136 Evernote 134 iTunes U 132 FileExplorer 129 National Rail 128 iPlayer Radio 127 FasterScan 125 BBC Weather 125 FasterScan HD 124 Gmail 123 Instagram 116 Cleaner Brent 107 Viber 104 Find Friends 98 PDF Expert 95 Solitaire 91 SlideShark 89 Netflix 89 Dictation 89 com.amazon.AmazonUK 88 Flashlight 81 iMovie 79 Temple Run 2 77 Smart Office 2 74 Dictionary 72 UK & ROI 71 Journey Pro 71 iPhoto 70 TripAdvisor 68 Guardian iPad edition 68 Shazam 67 Messenger 65 Bible 64 BBC Sport 63 Rightmove 62 London 62 Sky Sports 61 Subway Surf 60 Temple Run 60 Yahoo Mail 58 thetrainline 58 Minion Rush 58 Demand 5 57 Documents 55 Argos 55 LBC 54 Sky+ 51 MailOnline 51 GarageBand 51 Calc 51 TV Guide 49 Phone Edition 49 Translate 48 Print Portal 48 Standard 48 Word 47 Skitch 47 CloudOn 47 Tablet Edition 46 MyFitnessPal 46 Bus London 46 Snapchat 45 Drive 42 4 Pics 1 Word 41 TED 39 Skyscanner 39 SoundCloud 39 PowerPoint 39 Zoopla 38 Flow Free 38 Excel 38 Radioplayer -

A Cloud Services Cheat Sheet for AWS, Azure, and Google Cloud

Cloud/DevOps Handbook EDITOR'S NOTE AI AND MACHINE LEARNING ANALYTICS APPLICATION INTEGRATION BUSINESS APPLICATIONS COMPUTE CONTAINERS COST CONTROLS DATABASES DEVELOPER TOOLS IoT MANAGEMENT AND GOVERNANCE MIGRATION MISCELLANEOUS A cloud services cheat sheet for AWS, NETWORKING Azure, and Google Cloud SECURITY STORAGE DECEMBER 2020 EDITOR'S NOTE HOME EDITOR'S NOTE cloud directories—a quick reference sheet for what each AI AND MACHINE A cloud services LEARNING vendor calls the same service. However, you can also use this as a starting point. You'll ANALYTICS cheat sheet for need to do your homework to get a more nuanced under- APPLICATION AWS, Azure and standing of what distinguishes the offerings from one an- INTEGRATION other. Follow some of the links throughout this piece and take that next step in dissecting these offerings. BUSINESS Google Cloud APPLICATIONS That's because not all services are equal—each has its —TREVOR JONES, SITE EDITOR own set of features and capabilities, and the functionality COMPUTE might vary widely across platforms. And just because a CONTAINERS provider doesn't have a designated service in one of these categories, that doesn't mean it's impossible to achieve the COST CONTROLS same objective. For example, Google Cloud doesn't offer an DATABASES AWS, MICROSOFT AND GOOGLE each offer well over 100 cloud explicit disaster recovery service, but it's certainly capable services. It's hard enough keeping tabs on what one cloud of supporting DR. DEVELOPER TOOLS offers, so good luck trying to get a handle on the products Here is our cloud services cheat sheet of the services IoT from the three major providers. -

Protecting Your Identity-V202104

Protecting your Identity Overview April 2021 Downrange Technologies 305 Harrison St NE Suite 1B Leesburg, VA 20175 [email protected] Protecting your Identity- Overview Contents Preface .................................................................................................................................................................. 3 Chrome/Firefox + Adblockers (Adblock + Ghostery) ............................................................................... 3 VPN (ExpressVPN, TunnelBear) ................................................................................................................... 4 Recommendations .......................................................................................................................................... 5 Testing your VPN .......................................................................................................................................... 6 Webcam Cover ............................................................................................................................................... 7 Encryption at Rest (FileVault/BitLocker) ...................................................................................................... 7 Mac / Apple OSX: ......................................................................................................................................... 7 Windows .........................................................................................................................................................