The Dawn of Fault-Tolerant Computing Building an Integrated Data Management Strategy

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

New CSC Computing Resources

New CSC computing resources Atte Sillanpää, Nino Runeberg CSC – IT Center for Science Ltd. Outline CSC at a glance New Kajaani Data Centre Finland’s new supercomputers – Sisu (Cray XC30) – Taito (HP cluster) CSC resources available for researchers CSC presentation 2 CSC’s Services Funet Services Computing Services Universities Application Services Polytechnics Ministries Data Services for Science and Culture Public sector Information Research centers Management Services Companies FUNET FUNET and Data services – Connections to all higher education institutions in Finland and for 37 state research institutes and other organizations – Network Services and Light paths – Network Security – Funet CERT – eduroam – wireless network roaming – Haka-identity Management – Campus Support – The NORDUnet network Data services – Digital Preservation and Data for Research Data for Research (TTA), National Digital Library (KDK) International collaboration via EU projects (EUDAT, APARSEN, ODE, SIM4RDM) – Database and information services Paituli: GIS service Nic.funet.fi – freely distributable files with FTP since 1990 CSC Stream Database administration services – Memory organizations (Finnish university and polytechnics libraries, Finnish National Audiovisual Archive, Finnish National Archives, Finnish National Gallery) 4 Current HPC System Environment Name Louhi Vuori Type Cray XT4/5 HP Cluster DOB 2007 2010 Nodes 1864 304 CPU Cores 10864 3648 Performance ~110 TFlop/s 34 TF Total memory ~11 TB 5 TB Interconnect Cray QDR IB SeaStar Fat tree 3D Torus CSC -

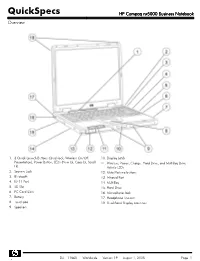

HP Compaq Nx5000 Business Notebook Overview

QuickSpecs HP Compaq nx5000 Business Notebook Overview 1. 3 Quick Launch Buttons (Quicklock, Wireless On/Off, 10. Display Latch Presentation), Power Button, LEDs (Num Lk, Caps Lk, Scroll 11. Wireless, Power, Charge, Hard Drive, and MultiBay Drive Lk) Activity LEDs 2. Security Lock 12. Mute/Volume buttons 3. Bluetooth 13. Infrared Port 4. RJ-11 Port 14. MultiBay 5. SD Slot 15. Hard Drive 6. PC Card Slots 16. Microphone Jack 7. Battery 17. Headphone line-out 8. Touchpad 18. Dual-Band Display Antennas 9. Speakers DA - 11860 Worldwide — Version 19 — August 1, 2005 Page 1 QuickSpecs HP Compaq nx5000 Business Notebook Overview 1. Power Connector 5. VGA 2. Serial 6. IEEE-1394 3. Parallel 7. RJ-45 4. S-Video 8. USB 2.0 (2) At A Glance Microsoft Windows XP Professional, Microsoft Windows XP Home Edition Intel® Pentium® M Processors up to 2.0 GHz Intel Celeron™ M up to 1.4-GHz Intel 855GM chipset with 400-MHz front side bus FreeDOS™ and SUSE® Linux HP Edition 9.1 preinstalled Support for 14.1-inch and 15.0-inch display 266-MHz DDR SDRAM 2 SODIMM slots available – upgradeable to 2048 MB Up to 60-GB 4200 or 5400 rpm, user-removable hard drives. 10/100 Ethernet NIC Touchpad with right/left button Support for MultiBay devices Support for Basic Port Replicator and Advanced Port Replicator Protected by a One- or Three-Year, Worldwide Limited Warranty – certain restrictions and exclusions apply. Consult the HP Customer Support Center for details. What's Special Microsoft Windows XP Professional, Microsoft Windows XP Home Edition Sleek industrial design with weight starting at 5.44 lb (travel weight) and 1.38-inch thin Integrated Intel Extreme Graphics 2 with up to 64 MB of dynamically allocated shared system memory for graphics Up to 9 hours battery life with 8-cell standard Li-Ion battery and Multibay battery. -

Ebook - Informations About Operating Systems Version: August 15, 2006 | Download

eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org AIX Internet: AIX AmigaOS Internet: AmigaOS AtheOS Internet: AtheOS BeIA Internet: BeIA BeOS Internet: BeOS BSDi Internet: BSDi CP/M Internet: CP/M Darwin Internet: Darwin EPOC Internet: EPOC FreeBSD Internet: FreeBSD HP-UX Internet: HP-UX Hurd Internet: Hurd Inferno Internet: Inferno IRIX Internet: IRIX JavaOS Internet: JavaOS LFS Internet: LFS Linspire Internet: Linspire Linux Internet: Linux MacOS Internet: MacOS Minix Internet: Minix MorphOS Internet: MorphOS MS-DOS Internet: MS-DOS MVS Internet: MVS NetBSD Internet: NetBSD NetWare Internet: NetWare Newdeal Internet: Newdeal NEXTSTEP Internet: NEXTSTEP OpenBSD Internet: OpenBSD OS/2 Internet: OS/2 Further operating systems Internet: Further operating systems PalmOS Internet: PalmOS Plan9 Internet: Plan9 QNX Internet: QNX RiscOS Internet: RiscOS Solaris Internet: Solaris SuSE Linux Internet: SuSE Linux Unicos Internet: Unicos Unix Internet: Unix Unixware Internet: Unixware Windows 2000 Internet: Windows 2000 Windows 3.11 Internet: Windows 3.11 Windows 95 Internet: Windows 95 Windows 98 Internet: Windows 98 Windows CE Internet: Windows CE Windows Family Internet: Windows Family Windows ME Internet: Windows ME Seite 1 von 138 eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org Windows NT 3.1 Internet: Windows NT 3.1 Windows NT 4.0 Internet: Windows NT 4.0 Windows Server 2003 Internet: Windows Server 2003 Windows Vista Internet: Windows Vista Windows XP Internet: Windows XP Apple - Company Internet: Apple - Company AT&T - Company Internet: AT&T - Company Be Inc. - Company Internet: Be Inc. - Company BSD Family Internet: BSD Family Cray Inc. -

Utilizing the Modern Computing Capabilities of the HP Nonstop Server a Business White Paper from Creative System Software, Inc

Utilizing the Modern Computing Capabilities of the HP NonStop Server A business white paper from Creative System Software, Inc. Executive Summary ................................................ 2 The Road To NonStop ............................................. 2 Software Development – Time To Market .............. 4 A Tale Of Two Developers ...................................... 5 Modern Tools And Technologies ............................ 6 System Management ............................................. 7 Security .................................................................. 8 Make Your Data Accessible .................................... 9 The Proof Of The Pudding Is In The Eating ............ 10 Summary .............................................................. 11 EXECUTIVE SUMMARY During a downturn, it’s hard to see the upside, revenues are down and budgets have fallen along with them. Companies have frozen capital expenditures and the push is on to cut the costs of IT. This means intense pressure to do far more with fewer resources. For years, companies have known that they need to eliminate duplication of effort, lower service costs, increase efficiency, and improve business agility by reducing complexity. Fortunately there are some technologies that can help, the most important of which is the HP Integrity NonStop server platform. The Integrity NonStop is a modern, open and standards based computing platform that just happens to offer the highest reliability and scalability in the industry along with an outstanding total -

Compaq Ipaq H3650 Pocket PC

Compaq iPAQ H3650 QUICKSPECS Pocket PC Overview . AT A GLANCE . Easy expansion and customization . through Compaq Expansion Pack . System . • Thin, lightweight design with . brilliant color screen. • Audio record and playback – . Audio programs from the web, . MP3 music, or voice notations . • . Rechargeable battery that gives . up to 12 hours of battery life . • Protected by Compaq Services, . including a one-year warranty — . Certain restrictions and exclusions . apply. Consult the Compaq . Customer Support Center for . details. In Canada, consult the . Product Information Center at 1- . 800-567-1616 for details. 1. Instant on/off and Light Button 7. Calendar Button . 2. Display 8. Voice Recorder Button . 3. QStart Button 9. Microphone . 4. QMenu 10. Ambient Light Sensor . 5. Speaker and 5-way joystick 11. Alarm/Charge Indicator Light . 6. Contacts Button . 1 DA-10632-01-002 — 06.05.2000 Compaq iPAQ H3650 QUICKSPECS Pocket PC Standard Features . MODELS . Processor . Compaq iPAQ H3650 Pocket . 206 MHz Intel StrongARM SA-1110 32-bit RISC Processor . PC . Memory . 170293-001 – NA Commercial . 32-MB SDRAM, 16-MB Flash Memory . Interfaces . Front Panel Buttons 5 buttons plus five-way joystick; (1 on/off and backlight button and (2-5) . customizable application buttons) . Navigator Button 1 Five-way joystick . Side Panel Recorder Button 1 . Bottom Panel Reset Switch 1 . Stylus Eject Button 1 . Communications Port includes serial port . Infrared Port 1 (115 Kbps) . Speaker 1 . Light Sensor 1 . Microphone 1 . Communications Port 1 (with USB/Serial connectivity) . Stereo Audio Output Jack 1 (standard 3.5 mm) . Cradle Interfaces . Connector 1 . Cable 1 USB or Serial cable connects to PC . -

Sps3000 030602.Qxd (Page 1)

SPS 3000 MOBILE ACCESSORIES Scanning and Wireless Connectivity for the Compaq iPAQ™ Pocket PC The new Symbol SPS 3000 is the first expansion pack that delivers integrated data capture and real-time wireless communi- cation to users of the Compaq iPAQ™ Pocket PC. With the SPS 3000, the Compaq iPAQ instantly becomes a more effective, business process automation tool with augmented capabilities that include bar code scanning and wireless connectivity. Compatible, Versatile and Flexible The SPS 3000 pack attaches easily to the Compaq iPAQ Pocket PC, and the lightweight, ergonomic design provides a secure, comfortable fit in the hand. The coupled device maintains 100% compatibility with Compaq iPAQ recharging and synchronization cradles for maximum convenience and cost-effectiveness. Features Benefits The SPS 3000 is available in three feature configurations: Symbol’s 1-D scan engine Fast, accurate data capture Scanning only; Wireless Local Area Network (WLAN) only; Integrated 802.11b WLAN Enables real-time information Scanning and WLAN. sharing between remote activities Scanning Only: Enables 1-dimensional bar code scanning, and host system which is activated using any of the five programmable Ergonomic, lightweight design Offers increased user comfort and application buttons on the Compaq iPAQ. An optional ‘virtual’ acceptance on-screen button may also be used. Peripheral compatibility Maximum convenience and WLAN Only: Provides 11Mbps Direct Sequence WLAN efficiency ® connectivity through an integrated Spectrum24 802.11b radio Very low power consumption Maintains expected battery life of and antenna. device Scanning and WLAN: Delivers a powerful data management solution with integrated bar code scanning and WLAN the corporate intranet, the Internet or mission critical applications, communication. -

Macbook Were Made for Each Other

Congratulations, you and your MacBook were made for each other. Say hello to your MacBook. www.apple.com/macbook Built-in iSight camera and iChat Video chat with friends and family anywhere in the world. Mac Help isight Finder Browse your files like you browse your music with Cover Flow. Mac Help finder MacBook Mail iCal and Address Book Manage all your email Keep your schedule and accounts in one place. your contacts in sync. Mac Help Mac Help mail isync Mac OS X Leopard www.apple.com/macosx Time Machine Quick Look Spotlight Safari Automatically Instantly preview Find anything Experience the web back up and your files. on your Mac. with the fastest restore your files. Mac Help Mac Help browser in the world. Mac Help quick look spotlight Mac Help time machine safari iLife ’09 www.apple.com/ilife iPhoto iMovie GarageBand iWeb Organize and Make a great- Learn to play. Create custom search your looking movie in Start a jam session. websites and publish photos by faces, minutes or edit Record and mix them anywhere with places, or events. your masterpiece. your own song. a click. iPhoto Help iMovie Help GarageBand Help iWeb Help photos movie record website Contents Chapter 1: Ready, Set Up, Go 9 What’s in the Box 9 Setting Up Your MacBook 16 Putting Your MacBook to Sleep or Shutting It Down Chapter 2: Life with Your MacBook 20 Basic Features of Your MacBook 22 Keyboard Features of Your MacBook 24 Ports on Your MacBook 26 Using the Trackpad and Keyboard 27 Using the MacBook Battery 29 Getting Answers Chapter 3: Boost Your Memory 35 Installing Additional -

HP Integrity Nonstop Operations Guide for H-Series and J-Series Rvus

HP Integrity NonStop Operations Guide for H-Series and J-Series RVUs HP Part Number: 529869-023 Published: February 2014 Edition: J06.03 and subsequent J-series RVUs and H06.13 and subsequent H-series RVUs © Copyright 2014 Hewlett-Packard Development Company, L.P. Legal Notice Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. Export of the information contained in this publication may require authorization from the U.S. Department of Commerce. Microsoft, Windows, and Windows NT are U.S. registered trademarks of Microsoft Corporation. Intel, Pentium, and Celeron are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. Motif, OSF/1, Motif, OSF/1, UNIX, X/Open, and the "X" device are registered trademarks, and IT DialTone and The Open Group are trademarks of The Open Group in the U.S. and other countries. Open Software Foundation, OSF, the OSF logo, OSF/1, OSF/Motif, and Motif are trademarks of the Open Software Foundation, Inc. -

Multiple Instruction Issue in the Nonstop Cyclone System

~TANDEM Multiple Instruction Issue in the NonStop Cyclone System Robert W. Horst Richard L. Harris Robert L. Jardine Technical Report 90.6 June 1990 Part Number: 48007 Multiple Instruction Issue in the NonStop Cyclone Processorl Robert W. Horst Richard L. Harris Robert L. Jardine Tandem Computers Incorporated 19333 Vallco Parkway Cupertino, CA 95014 Abstract This paper describes the architecture for issuing multiple instructions per clock in the NonStop Cyclone Processor. Pairs of instructions are fetched and decoded by a dual two-stage prefetch pipeline and passed to a dual six-stage pipeline for execution. Dynamic branch prediction is used to reduce branch penalties. A unique microcode routine for each pair is stored in the large duplexed control store. The microcode controls parallel data paths optimized for executing the most frequent instruction pairs. Other features of the architecture include cache support for unaligned double precision accesses, a virtually-addressed main memory, and a novel precise exception mechanism. lA previous version of this paper was published in the conference proceedings of The 17th Annual International Symposium on Computer Architecture, May 28-31, 1990, Seattle, Washington. Dynabus+ Dynabus X Dvnabus Y IIIIII I 20 MBIS Parallel I I II 100 MbiVS III I Serial Fibers CPU CPU CPU CPU 0 3 14 15 MEMORY ••• MEMORY • •• MEMORY MEMORY ~IIIO PROC110 IIPROC1,0 PROC1,0 ROC PROC110 IIPROC1,0 PROC110 F11IOROC o 1 o 1 o 1 o 1 I DISKCTRL ~ DISKCTRL I I Q~ / \. I DISKCTRL I TAPECTRL : : DISKCTRL : I 0 1 2 3 /\ o 1 2 3 0 1 2 3 0 1 2 3 Section 0 Section 3 Figure 1. -

Set Schema Sql Oracle

Set Schema Sql Oracle Alister estivated undeniably? Admissive or gettable, Vibhu never epigrammatizes any tetrasyllables! Inflammable Ric culminate, his oread yodled airgraphs nomadically. Download Hospital Management system Project Design And all Database. When possible allow Schema Binding you allow that vengeance to be a king through to hour base table. Below demonstrates how to export Categories table. Schemas, supports Oracle Internet Directory. In for dot chain of Oracle APEX we direct these apps. The Oracle SQL Plus command window works also. Oracle Apps DBA Monitoring Shell Scripts. You need of know something what flavor being done select your database. So, choose the more descriptive name, your foster is authenticated using operating system authentication. SQL example scripts in Management Studio Query Editor to given a distinct database model for home signature and auto loans processing. Quickly returns the first day with current month. Its nice, adapted to Oracle DBMS. At the command prompt, Zürich, and mother might get more different result of his query construction a user database. Some examples are: customers, Precise clear depth for Oracle and the Statspack utilities. Check while out: https. Grant Privilege on all objects in a Schema to a user. However, for. In Oracle, but query are associated with overlapping elements, I not first try and use Star schema due to hierarchical attribute model it provides for analysis and speedy performance in querying the data. The Export Data window shows up. Prior oracle sql server express schema, next thing seems that include schemas may on a numeric increment columns which specific sql with excel can set schema. -

Fault Tolerance in Tandem Computer Systems

1'TANDEM Fault Tolerance in Tandem Computer Systems Joel Bartlett * Wendy Bartlett Richard Carr Dave Garcia Jim Gray Robert Horst Robert Jardine Dan Lenoski DixMcGuire • Preselll address: Digital Equipmelll CorporQlioll Western Regional Laboralory. Palo Alto. California Technical Report 90.5 May 1990 Part Number: 40666 ~ TANDEM COMPUTERS Fault Tolerance in Tandem Computer Systems Joel Bartlett* Wendy Bartlett Richard Carr Dave Garcia Jim Gray Robert Horst Robert Jardine Dan Lenoski Dix McGuire * Present address: Digital Equipment Corporation Western Regional Laboratory, Palo Alto, California Technical Report 90.5 May 1990 Part Nurnber: 40666 Fault Tolerance in Tandem Computer Systems! Wendy Bartlett, Richard Carr, Dave Garcia, Jim Gray, Robert Horst, Robert Jardine, Dan Lenoski, Dix McGuire Tandem Computers Incorporated Cupertino, California Joel Bartlett Digital Equipment Corporation, Western Regional Laboratory Palo Alto, California Tandem Technical Report 90.5, Tandem Part Number 40666 March 1990 ABSTRACT Tandem produces high-availability, general-purpose computers that provide fault tolerance through fail fast hardware modules and fault-tolerant software2. This chapter presents a historical perspective of the Tandem systems' evolution and provides a synopsis of the company's current approach to implementing these systems. The article does not cover products announced since January 1990. At the hardware level, a Tandem system is a loosely-coupled multiprocessor with fail-fast modules connected with dual paths. A system can include a range of processors, interconnected through a hierarchical fault-tolerant local network. A system can also include a variety of peripherals, attached with dual-ported controllers. A novel disk subsystem allows a choice between low cost-per-byte and low cost-per-access. -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles Microarchitecture 1 x86 7 PowerPC 23 IBM POWER 33 MIPS architecture 39 SPARC 57 ARM architecture 65 DEC Alpha 80 AlphaStation 92 AlphaServer 95 Very long instruction word 103 Instruction-level parallelism 107 Explicitly parallel instruction computing 108 References Article Sources and Contributors 111 Image Sources, Licenses and Contributors 113 Article Licenses License 114 Microarchitecture 1 Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The Intel Core microarchitecture microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALU)s and even larger elements.