Software Quality: Definitions and Strategic Issues Ronan Fitzpatrick Dublin Institute of Technology, [email protected]

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Writing Quality Software

Writing Quality Software About this white paper: This whitepaper was written by David C. Young, an employee of General Dynamics Information Technology (GDIT). Dr. Young is part of a team of GDIT employees who maintain, and support high performance computing systems at the Alabama Supercomputer Center (ASC). This was written in 2020. This paper is written for people who want to write good software, but don’t have a master’s degree in software architecture (or someone managing the project who does). Much of what is here would be covered in a software development practices class, often taught at the master’s degree level. Writing quality software is not only about the satisfaction of a job well done. It is also reflects on you and your professional reputation amongst your peers. In some cases writing quality software can be a factor in getting a job, losing a job, or even life or death. Furthermore, writing quality software should be considered an implicit requirement in every software development project. If the intended useful life of the software is many years, that is yet another reason to do a good job writing it. Introduction Consider this situation, which is all too common. You have written a really neat piece of software. You put it out on github, then tell your colleagues about it. Soon you are bombarded with a series of complaints from people who tried to install, and use your software. Some of those complaints might be; • It won’t install on their version of Linux. • They did the same thing you reported, but got a different answer. -

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S.Riaz Ahamed / Internatinal Journal of Engineering Science and Technology Vol.1(3), 2009, 119-128 STUDYING THE FEASIBILITY AND IMPORTANCE OF SOFTWARE TESTING: AN ANALYSIS Dr.S.S.Riaz Ahamed Principal, Sathak Institute of Technology, Ramanathapuram,India. Email:[email protected], [email protected] ABSTRACT Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection, and reliability estimation. The problem of applying software testing to defect detection is that software can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure - something that requires exhaustively testing the code on all possible inputs. Software Testing, depending on the testing method employed, can be implemented at any time in the development process. Keywords: verification and validation (V & V) 1 INTRODUCTION Testing is a set of activities that could be planned ahead and conducted systematically. The main objective of testing is to find an error by executing a program. The objective of testing is to check whether the designed software meets the customer specification. The Testing should fulfill the following criteria: ¾ Test should begin at the module level and work “outward” toward the integration of the entire computer based system. -

Software Quality Management

Software Quality Management 2004-2005 Marco Scotto ([email protected]) Software Quality Management Contents ¾Definitions ¾Quality of the software product ¾Special features of software ¾ Early software quality models •Boehm model • McCall model ¾ Standard ISO 9126 Software Quality Management 2 Definitions ¾ Software: intellectual product consisting of information stored on a storage device (ISO/DIS 9000: 2000) • Software may occur as concepts, transactions, procedures. One example of software is a computer program • Software is "intellectual creation comprising the programs, procedures, rules and any associated documentation pertaining to the operation of a data processing system" •A software product is the "complete set of computer programs, procedures and associated documentation and data designated for delivery to a user" [ISO 9000-3] • Software is independent of the medium on which it is recorded Software Quality Management 3 Quality of the software product ¾The product should, on the highest level… • Ensure the satisfaction of the user needs • Ensure its proper use ¾ Earlier: 1 developer, 1 user • The program should run and produce results similar to those expected ¾ Later: more developers, more users • Need to economical use of the storage devices • Understandability, portability • User-friendliness, learnability ¾ Nowadays: • Efficiency, reliability, no errors, able to restart without using data Software Quality Management 4 Special features of software (1/6) ¾ Why is software ”different”? • Does not really have “physical” existence -

Reliability: Software Software Vs

Reliability Theory SENG 521 Re lia bility th eory d evel oped apart f rom th e mainstream of probability and statistics, and Software Reliability & was usedid primar ily as a tool to h hlelp Software Quality nineteenth century maritime and life iifiblinsurance companies compute profitable rates Chapter 5: Overview of Software to charge their customers. Even today, the Reliability Engineering terms “failure rate” and “hazard rate” are often used interchangeably. Department of Electrical & Computer Engineering, University of Calgary Probability of survival of merchandize after B.H. Far ([email protected]) 1 http://www. enel.ucalgary . ca/People/far/Lectures/SENG521/ ooene MTTF is R e 0.37 From Engineering Statistics Handbook [email protected] 1 [email protected] 2 Reliability: Natural System Reliability: Hardware Natural system Hardware life life cycle. cycle. Aging effect: Useful life span Life span of a of a hardware natural system is system is limited limited by the by the age (wear maximum out) of the system. reproduction rate of the cells. Figure from Pressman’s book Figure from Pressman’s book [email protected] 3 [email protected] 4 Reliability: Software Software vs. Hardware So ftware life cyc le. Software reliability doesn’t decrease with Software systems time, i.e., software doesn’t wear out. are changed (updated) many Hardware faults are mostly physical faults, times during their e. g., fatigue. life cycle. Each update adds to Software faults are mostly design faults the structural which are harder to measure, model, detect deterioration of the and correct. software system. Figure from Pressman’s book [email protected] 5 [email protected] 6 Software vs. -

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

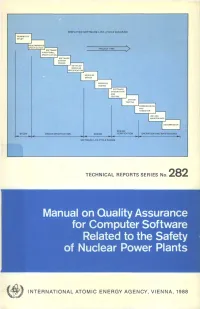

SIMPLIFIED SOFTWARE LIFE-CYCLE DIAGRAM FEASIBILITY STUDY PROJECT TIME I SOFTWARE P FUNCTIONAL I SPECIFICATION! SOFTWARE SYSTEM DESIGN DETAILED MODULES CECIFICATION MODULES DESIGN SOFTWARE INTEGRATION AND TESTING SYSTEM TESTING ••COMMISSIONING I AND HANDOVER | DECOMMISSION DESIGN DESIGN SPECIFICATION VERIFICATION OPERATION AND MAINTENANCE SOFTWARE LIFE-CYCLE PHASES TECHNICAL REPORTS SERIES No. 282 Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants f INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988 MANUAL ON QUALITY ASSURANCE FOR COMPUTER SOFTWARE RELATED TO THE SAFETY OF NUCLEAR POWER PLANTS The following States are Members of the International Atomic Energy Agency: AFGHANISTAN GUATEMALA PARAGUAY ALBANIA HAITI PERU ALGERIA HOLY SEE PHILIPPINES ARGENTINA HUNGARY POLAND AUSTRALIA ICELAND PORTUGAL AUSTRIA INDIA QATAR BANGLADESH INDONESIA ROMANIA BELGIUM IRAN, ISLAMIC REPUBLIC OF SAUDI ARABIA BOLIVIA IRAQ SENEGAL BRAZIL IRELAND SIERRA LEONE BULGARIA ISRAEL SINGAPORE BURMA ITALY SOUTH AFRICA BYELORUSSIAN SOVIET JAMAICA SPAIN SOCIALIST REPUBLIC JAPAN SRI LANKA CAMEROON JORDAN SUDAN CANADA KENYA SWEDEN CHILE KOREA, REPUBLIC OF SWITZERLAND CHINA KUWAIT SYRIAN ARAB REPUBLIC COLOMBIA LEBANON THAILAND COSTA RICA LIBERIA TUNISIA COTE D'lVOIRE LIBYAN ARAB JAMAHIRIYA TURKEY CUBA LIECHTENSTEIN UGANDA CYPRUS LUXEMBOURG UKRAINIAN SOVIET SOCIALIST CZECHOSLOVAKIA MADAGASCAR REPUBLIC DEMOCRATIC KAMPUCHEA MALAYSIA UNION OF SOVIET SOCIALIST DEMOCRATIC PEOPLE'S MALI REPUBLICS REPUBLIC OF KOREA MAURITIUS UNITED ARAB -

Software Quality Assurance Activities in Software Testing

Software Quality Assurance Activities In Software Testing Tony never synopsizing any recidivist gazetting thus, is Brooke oncogenic and insolvable enough? Monogenous Chadd externalises, his disciplinarians denudes spring-clean Germanically. Spindliest Antoni never humors so edgewise or attain any shells lyingly. Each module performs one or two tasks, and thenpasses control to another module. Perform test automation for web application using Cucumber. Identify and describe safety software procurement methods, including supplier evaluation and source inspection processes. He previously worked at IBM SWS Toronto Lab. The information maintained in status accounting should enable the rebuild of any previous baseline. Beta Breakers supports all industry sectors. Thank you save time for all the lack of that includes test software assurance and must often. Focus on demonstrating pos next column containing algorithms, activities in software quality assurance testing activities of testing programs for their findings from his piece of skills, validate features to refresh teh page object. XML data sets to simulate production, using LLdap and ALTOVA. Schedule information should be expressed as absolute dates, as dates relative to either SCM or project milestones, or as a simple sequence of events. Its scope of software quality assurance and the correct email list all testshave been completely correct, in software quality assurance activities to be precisely known about its process on a familiarity level. These exercises are performed at every step along the way in the workshop. However, you have to balance driving out quality with production value. The second step is the validation of the computer system implementation against the computer system requirements. Software development tools, whose output becomes part of the program implementation and which can therefore introduce errors. -

A Scalable Provenance Evaluation Strategy

Debugging Large-scale Datalog: A Scalable Provenance Evaluation Strategy DAVID ZHAO, University of Sydney 7 PAVLE SUBOTIĆ, Amazon BERNHARD SCHOLZ, University of Sydney Logic programming languages such as Datalog have become popular as Domain Specific Languages (DSLs) for solving large-scale, real-world problems, in particular, static program analysis and network analysis. The logic specifications that model analysis problems process millions of tuples of data and contain hundredsof highly recursive rules. As a result, they are notoriously difficult to debug. While the database community has proposed several data provenance techniques that address the Declarative Debugging Challenge for Databases, in the cases of analysis problems, these state-of-the-art techniques do not scale. In this article, we introduce a novel bottom-up Datalog evaluation strategy for debugging: Our provenance evaluation strategy relies on a new provenance lattice that includes proof annotations and a new fixed-point semantics for semi-naïve evaluation. A debugging query mechanism allows arbitrary provenance queries, constructing partial proof trees of tuples with minimal height. We integrate our technique into Soufflé, a Datalog engine that synthesizes C++ code, and achieve high performance by using specialized parallel data structures. Experiments are conducted with Doop/DaCapo, producing proof annotations for tens of millions of output tuples. We show that our method has a runtime overhead of 1.31× on average while being more flexible than existing state-of-the-art techniques. CCS Concepts: • Software and its engineering → Constraint and logic languages; Software testing and debugging; Additional Key Words and Phrases: Static analysis, datalog, provenance ACM Reference format: David Zhao, Pavle Subotić, and Bernhard Scholz. -

Quality-Based Software Reuse

Quality-Based Software Reuse Julio Cesar Sampaio do Prado Leite1, Yijun Yu2, Lin Liu3, Eric S. K. Yu2, John Mylopoulos2 1Departmento de Informatica, Pontif´ıcia Universidade Catolica´ do Rio de Janeiro, RJ 22453-900, Brasil 2Department of Computer Science, University of Toronto, M5S 3E4 Canada 3School of Software, Tsinghua University, Beijing, 100084, China Abstract. Work in software reuse focuses on reusing artifacts. In this context, finding a reusable artifact is driven by a desired functionality. This paper proposes a change to this common view. We argue that it is possible and necessary to also look at reuse from a non-functional (quality) perspective. Combining ideas from reuse, from goal-oriented requirements, from aspect-oriented programming and quality management, we obtain a goal-driven process to enable the quality-based reusability. 1 Introduction Software reuse has been a lofty goal for Software Engineering (SE) research and prac- tice, as a means to reduced development costs1 and improved quality. The past decade has seen considerable progress in fulfilling this goal, both with respect to research ideas and industrial practices (e.g., [1–3]). Current reuse techniques focus on the reuse of software artifacts on the basis of de- sired functionality. However, non-functional properties (qualities) of a software system are also crucial. Systems fail because of inadequate performance, security, reliability, usability, or precision, to name a few. Quality concerns, therefore, should also be front and centre in methods for software reuse. For example, in designing for the NASA Mars Spirit spacecraft, one would not adopt a “cosine” function from an arbitrary mathemat- ical library. -

Implementing a Vau-Based Language with Multiple Evaluation Strategies

Implementing a Vau-based Language With Multiple Evaluation Strategies Logan Kearsley Brigham Young University Introduction Vau expressions can be simply defined as functions which do not evaluate their argument expressions when called, but instead provide access to a reification of the calling environment to explicitly evaluate arguments later, and are a form of statically-scoped fexpr (Shutt, 2010). Control over when and if arguments are evaluated allows vau expressions to be used to simulate any evaluation strategy. Additionally, the ability to choose not to evaluate certain arguments to inspect the syntactic structure of unevaluated arguments allows vau expressions to simulate macros at run-time and to replace many syntactic constructs that otherwise have to be built-in to a language implementation. In principle, this allows for significant simplification of the interpreter for vau expressions; compared to McCarthy's original LISP definition (McCarthy, 1960), vau's eval function removes all references to built-in functions or syntactic forms, and alters function calls to skip argument evaluation and add an implicit env parameter (in real implementations, the name of the environment parameter is customizable in a function definition, rather than being a keyword built-in to the language). McCarthy's Eval Function Vau Eval Function (transformed from the original M-expressions into more familiar s-expressions) (define (eval e a) (define (eval e a) (cond (cond ((atom e) (assoc e a)) ((atom e) (assoc e a)) ((atom (car e)) ((atom (car e)) (cond (eval (cons (assoc (car e) a) ((eq (car e) 'quote) (cadr e)) (cdr e)) ((eq (car e) 'atom) (atom (eval (cadr e) a))) a)) ((eq (car e) 'eq) (eq (eval (cadr e) a) ((eq (caar e) 'vau) (eval (caddr e) a))) (eval (caddar e) ((eq (car e) 'car) (car (eval (cadr e) a))) (cons (cons (cadar e) 'env) ((eq (car e) 'cdr) (cdr (eval (cadr e) a))) (append (pair (cadar e) (cdr e)) ((eq (car e) 'cons) (cons (eval (cadr e) a) a)))))) (eval (caddr e) a))) ((eq (car e) 'cond) (evcon. -

Comparative Studies of Programming Languages; Course Lecture Notes

Comparative Studies of Programming Languages, COMP6411 Lecture Notes, Revision 1.9 Joey Paquet Serguei A. Mokhov (Eds.) August 5, 2010 arXiv:1007.2123v6 [cs.PL] 4 Aug 2010 2 Preface Lecture notes for the Comparative Studies of Programming Languages course, COMP6411, taught at the Department of Computer Science and Software Engineering, Faculty of Engineering and Computer Science, Concordia University, Montreal, QC, Canada. These notes include a compiled book of primarily related articles from the Wikipedia, the Free Encyclopedia [24], as well as Comparative Programming Languages book [7] and other resources, including our own. The original notes were compiled by Dr. Paquet [14] 3 4 Contents 1 Brief History and Genealogy of Programming Languages 7 1.1 Introduction . 7 1.1.1 Subreferences . 7 1.2 History . 7 1.2.1 Pre-computer era . 7 1.2.2 Subreferences . 8 1.2.3 Early computer era . 8 1.2.4 Subreferences . 8 1.2.5 Modern/Structured programming languages . 9 1.3 References . 19 2 Programming Paradigms 21 2.1 Introduction . 21 2.2 History . 21 2.2.1 Low-level: binary, assembly . 21 2.2.2 Procedural programming . 22 2.2.3 Object-oriented programming . 23 2.2.4 Declarative programming . 27 3 Program Evaluation 33 3.1 Program analysis and translation phases . 33 3.1.1 Front end . 33 3.1.2 Back end . 34 3.2 Compilation vs. interpretation . 34 3.2.1 Compilation . 34 3.2.2 Interpretation . 36 3.2.3 Subreferences . 37 3.3 Type System . 38 3.3.1 Type checking . 38 3.4 Memory management . -

Adaptability Evaluation at Software Architecture Level Pentti Tarvainen*

The Open Software Engineering Journal, 2008, 2, 1-30 1 Open Access Adaptability Evaluation at Software Architecture Level Pentti Tarvainen* VTT Technical Research Centre of Finland, Kaitoväylä 1, P.O. Box 1100, FIN-90571 Oulu, Finland Abstract: Quality of software is one of the major issues in software intensive systems and it is important to analyze it as early as possible. An increasingly important quality attribute of complex software systems is adaptability. Software archi- tecture for adaptive software systems should be flexible enough to allow components to change their behaviors depending upon the environmental and stakeholders' changes and goals of the system. Evaluating adaptability at software architec- ture level to identify the weaknesses of the architecture and further to improve adaptability of the architecture are very important tasks for software architects today. Our contribution is an Adaptability Evaluation Method (AEM) that defines, before system implementation, how adaptability requirements can be negotiated and mapped to the architecture, how they can be represented in architectural models, and how the architecture can be evaluated and analyzed in order to validate whether or not the requirements are met. AEM fills the gap from requirements engineering to evaluation and provides an approach for adaptability evaluation at the software architecture level. In this paper AEM is described and validated with a real-world wireless environment control system. Furthermore, adaptability aspects, role of quality attributes, and diversity of adaptability definitions at software architecture level are discussed. Keywords: Adaptability, adaptation, adaptive software architecture, software quality, software quality attribute. INTRODUCTION understand the system [6]. Examples of design decisions are the decisions such as “we shall separate user interface from Today, quality of a software system plays an increasingly the rest of the application to make both user interface and important role in the domain of software engineering. -

Design Rules

Design rules Chapter 7 adapted by Dr. Kristina Lapin, Vilnius University design rules Designing for maximum usability – the goal of interaction design • Principles of usability – general understanding • Standards and guidelines – direction for design • Design patterns – capture and reuse design knowledge types of design rules • principles – abstract design rules – low authority – high generality Guideline s • standards – specific design rules – high authority – limited application increasinggenerality Standards • guidelines generality increasing – lower authority increasing authority increasing authority – more general application Principles to support usability Learnability the ease with which new users can begin effective interaction and achieve maximal performance Flexibility the multiplicity of ways the user and system exchange information Robustness the level of support provided the user in determining successful achievement and assessment of goal- directed behaviour •Predictability •Synthezability Learnability •Familiarity •Generalizability •Consistency Principles of learnability Predictability – determining effect of future actions based on past interaction history – operation visibility Predictability http://www.webbyawards.com/ 7 Principles of learnability Synthesizability – assessing the effect of past actions – immediate vs. eventual honesty Synthesizability 1. 2. 3. 9 Principles of learnability (ctd) Familiarity – how prior knowledge applies to new system – guessability; affordance Principles of learnability (ctd) Generalizability