Avaya Aura® Solution Design Considerations and Guidelines

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Application Notes for Avaya Aura Conferencing

Avaya Solution & Interoperability Test Lab. Application Notes for Avaya Aura® Conferencing 7.2 and Radvision SCOPIA Elite MCU – Issue 1.0. Abstract: These Application Notes describe the configuration of Avaya Aura® Conferencing 7.2 and Radvision SCOPIA Management 8.0. Avaya Aura® Conferencing 7.2 communicates with Radvision SCOPIA Management via a SIP trunk and Avaya Aura® Session Manager. The notes cover the administration of a SIP trunk between Session Manager and SCOPIA Management, the administration of Subscriber data between Avaya Aura® Conferencing and Radvision SCOPIA components, and the administration of Radvision SCOPIA Management with Avaya Aura® Conferencing. These Application Notes provide information for the setup, configuration, and verification of the call flows on this solution. TJM; Reviewed: SPOC 5/29/2013. Solution & Interoperability Test Lab Application Notes. ©2013 Avaya Inc. All Rights Reserved. AAC72_Radvision. Table of Contents: 1. Introduction; 2. Interoperability Testing; 2.1. Test Description and Coverage; 3. Reference Configuration; 4. Equipment and Software Validated ...

Avaya Breeze: Empower Your Business with an Application Sequenced UC Experience

© Copyright 2017. Private and confidential. AVAYA BREEZE: Empower Your Business with an Application Sequenced UC Experience. WELCOME! Scott Clark, Vice President, Marketing, ConvergeOne, [email protected]. THANK YOU FOR JOINING US! CONVERGEONE WEBINAR SERIES: Today: Avaya Breeze: Empower Your Business with an Application Sequenced UC Experience. Thursday, August 17, 4:00pm Eastern Time: Leveraging Breeze, Oceana and Oceanalytics for a Modern and Relevant Customer Experience. Thursday, August 24, 4:00pm Eastern Time: Avaya Engagement Designer: Tying It All Together with Use Cases. TODAY’S CONVERGEONE EXPERT: Andrew Bacon, Regional Chief Technology Officer, Northeast, ConvergeOne, [email protected]. TODAY’S GUEST SPEAKER: Carolyn Laffan Miller, Sr. Manager, Global Executive Briefing Program, Avaya, [email protected]. AVAYA BREEZE OVERVIEW AND USE CASES: Carolyn Laffan Miller, Sr. Manager and Executive Briefer, Avaya; Michael Killeen, Breeze Product Manager, Avaya. CUSTOMER BUSINESS TRENDS THAT LED TO THE DEVELOPMENT OF BREEZE. IT DEPARTMENTS HAVE NEW EXPECTATIONS FROM INTERNAL CUSTOMERS: COO, CIO+, CMO, CEO. “CIOs are now also Chief Innovation Officers, Heads of HR, Chief Supply Chain Officers, and Heads of Shared Services to name just a few.” Peter ...

User Guide for Avaya Scopia® Desktop Client

Avaya Scopia® Desktop Client User Guide, Release 9.1, December 2017. © 2016-2017, Avaya Inc. All Rights Reserved. Notice: While reasonable efforts have been made to ensure that the information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this document without the obligation to notify any person or organization of such changes. Documentation disclaimer: “Documentation” means information published in varying mediums which may include product information, operating instructions and performance specifications that are generally made available to users of products. Documentation does not include marketing materials. Avaya shall not be responsible for any modifications, additions, or deletions to the original published version of Documentation unless such modifications, additions, or deletions were performed by or on the express behalf of Avaya. ... YOU DO NOT WISH TO ACCEPT THESE TERMS OF USE, YOU MUST NOT ACCESS OR USE THE HOSTED SERVICE OR AUTHORIZE ANYONE TO ACCESS OR USE THE HOSTED SERVICE. Licenses: THE SOFTWARE LICENSE TERMS AVAILABLE ON THE AVAYA WEBSITE, HTTPS://SUPPORT.AVAYA.COM/LICENSEINFO, UNDER THE LINK “AVAYA SOFTWARE LICENSE TERMS (Avaya Products)” OR SUCH SUCCESSOR SITE AS DESIGNATED BY AVAYA, ARE APPLICABLE TO ANYONE WHO DOWNLOADS, USES AND/OR INSTALLS AVAYA SOFTWARE, PURCHASED FROM AVAYA INC., ANY AVAYA AFFILIATE, OR AN AVAYA CHANNEL PARTNER (AS APPLICABLE) UNDER A COMMERCIAL AGREEMENT WITH AVAYA OR AN AVAYA CHANNEL PARTNER. UNLESS OTHERWISE AGREED TO BY AVAYA IN WRITING, AVAYA DOES NOT EXTEND THIS LICENSE IF THE SOFTWARE WAS OBTAINED FROM ANYONE OTHER THAN AVAYA, AN AVAYA AFFILIATE OR AN AVAYA CHANNEL PARTNER; AVAYA ...

Avaya Scopia® XT4200 Room System Specs

Avaya Scopia® XT4200 Room System: Cost-Effective HD Video Collaboration. Highlights: Efficient HD Performance; Outstanding Value; Intuitive and Easy-to-Use; Sleek and Elegant Design; Integrated Total Solution. The Avaya Scopia® XT4200 offers outstanding value and cost-effective HD video collaboration specifically designed for the needs of smaller and mid-sized conference rooms as well as customers who don’t require all the high-end features offered in the Scopia XT5000. The system is nonetheless incredibly powerful with many features often found with costly upgrades in other vendor offerings. The Scopia XT4200 is well equipped and includes dual 720p/60fps live video and content, HD audio, H.264 High Profile and Scalable Video Coding (SVC), dual display support and a wide-angle PTZ camera. The user interface is designed for simplicity along with optional Multi-Touch control via the Apple iPad device for enhanced capabilities. Intuitive and Easy-to-Use: The Scopia XT4200 has been designed with the end user in mind. It delivers instant, intuitive and effective meeting control with little or no training required. With Microsoft Exchange calendar support, users can join meetings directly from the integrated system calendar. The advanced on-screen participants list gives leaders full meeting moderation. And to further extend its user functionality, the system is available with optional control via the Apple iPad device. Sleek and Elegant Design: The Scopia XT4200 is a natural fit for today’s businesses, not only for its ease of use, but also its modern design that complements a conference room. Finally a powerful business system to put on display and not keep hidden in the closet. Efficient HD Performance: The Scopia XT4200 delivers efficient HD ... With more than 100 years as a leader in ...

Scopia® XT1200 Room System

Avaya Scopia® XT1200 Room System: Superior Performance, Excellent Value. Highlights: First Class Video Experience; Hear the Nuances; High Motion, High Resolution Data Sharing; Embedded HD Multi-party Conferencing; Apple iPad Control. The Avaya Scopia® XT1200 delivers the perfect blend of value and a high quality experience, making it stand out from the competition. The Scopia XT1200 is a high-end system without a high-end price tag. The XT1200 supports dual 1080p/30fps live video and content. The system delivers 20 kHz CD-quality audio and includes a beam forming digital microphone pod to isolate the speaker from the noise. An optional, embedded MCU that can support continuous presence for up to 9 participants is available along with optional Apple iPad Multi-Touch control for a highly intuitive user interface. High Motion, High Resolution Data Sharing: Remote participants can view your shared data as if they are in the same room, seeing the data in all its native detail. Share presentations, videos and other multimedia content at full HD 1080p 30fps. With the dual display capability, meeting participants can simultaneously view other participants and data, allowing users to fully engage in meetings. Embedded HD Multi-party Conferencing: Avaya’s multi-party expertise is embedded in the Scopia XT1200. HD continuous presence meetings with up to nine participants are more affordable than ever utilizing the largest capacity embedded MCU available in the industry today. First Class Video Experience: The Scopia XT1200 delivers dual full HD (1080p/30fps) video streams as standard so you don’t have to ... With more than 100 years as a leader in communications, Avaya can help your ...

IP Office 8.1 Avaya Scopia Installation Notes

IP Office 8.1 Avaya Scopia Installation Notes – Issue 05f - (07 April 2016). © 2016 AVAYA All Rights Reserved. Notice: While reasonable efforts have been made to ensure that the information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this document without the obligation to notify any person or organization of such changes. Documentation disclaimer: “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions and performance specifications that Avaya may generally make available to users of its products and Hosted Services. Documentation does not include marketing materials. Avaya shall not be responsible for any modifications, additions, or deletions to the original published version of documentation unless such modifications, additions, or deletions were performed by Avaya. End User agrees to indemnify and hold harmless Avaya, Avaya's agents, servants and employees against all claims, lawsuits, demands and judgments arising out of, or in connection with, subsequent modifications, additions or deletions to this documentation, to the extent made by End User. Link disclaimer: Avaya is not responsible for the contents or reliability of any linked websites referenced within this site or documentation provided by Avaya. Avaya is not responsible for the accuracy of any information, statement or content provided on these sites and does not necessarily endorse the products, services, or information described or offered within them. Avaya does not guarantee that these links will work all the time and has no control over the availability of the linked pages.

User Guide for Avaya Scopia® Mobile Version 8.3

User Guide for Avaya Scopia® Mobile Version 8.3, For Solution 8.3, May 2014. © 2000-2014 Avaya Inc. All intellectual property rights in this publication are owned by Avaya Inc. and are protected by United States copyright laws, other applicable copyright laws and international treaty provisions. Avaya Inc. retains all rights not expressly granted. All product and company names herein may be trademarks of their registered owners. This publication is AVAYA Confidential & Proprietary. Use pursuant to your signed agreement or Avaya policy. No part of this publication may be reproduced in any form whatsoever or used to make any derivative work without prior written approval by Avaya Inc. No representation of warranties for fitness for any purpose other than what is specifically mentioned in this guide is made either by Avaya Inc. or its agents. Avaya Inc. reserves the right to revise this publication and make changes without obligation to notify any person of such revisions or changes. Avaya Inc may make improvements or changes in the product(s) and/or the program(s) described in this documentation at any time. If there is any software on removable media described in this publication, it is furnished under a license agreement included with the product as a separate document. If you are unable to locate a copy, please contact Avaya Inc and a copy will be provided to you. Unless otherwise indicated, Avaya registered trademarks are registered in the United States and other territories. All registered trademarks recognized. For further information contact Avaya or your local distributor or reseller. User Guide for Avaya Scopia® Mobile Version 8.3, May 26, 2014. http://support.avaya.com. Table of Contents: Chapter 1: About Scopia® Mobile; Minimum Requirements for Scopia® Mobile ...

IP Office R11 Offer Definition

IP Office Release 11.0. Regional Applicability: All. Date of Issue: March 14, 2019. Offer Definition: Avaya IP Office™ Platform General Availability Release 11.0, Document Version 1.13. Document Definition: ✓ Product Update. This is a communication that summarizes “what’s new” within a given release regarding any changes or enhancements to an existing GA (Generally Available) product. NOTICE: While reasonable efforts have been made to ensure that the information in this document is complete and accurate at the time of posting, Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this document without the obligation to notify any person or organization of such changes. © 2019 Avaya. All Rights Reserved. All trademarks identified by ®, TM, or SM are registered marks, trademarks, and service marks, respectively, of Avaya Inc. All other trademarks are the property of their respective owners. Avaya and other parties may also have trademark rights in other terms used herein. Avaya Inc. – Confidential and Proprietary. Use pursuant to the terms of your signed agreement or Avaya policy. COMPAS ID: 179134. Product: IP Office Platform R11.0. GA Date: May 15, 2018. Rev #: 1.13. Rev Date: March 14, 2019. CHANGE CONTROL RECORD (Date (mm/dd/yy), Issue/Version #, Summary of Changes): 05/15/18, 1.0: General Availability. 05/21/18, 1.1: Section 4.1 - Note that no further Service Pack planned for IP Office 10.0. - Clarify R10+ / Release “Any” codes and implications of same for order tools.

Leap Second Impact Update for Avaya Products

Leap Second Impact Update for Avaya Products. Updated: 6/29/2015 v28. Links note: May be 24 hr delay in when info is available and when visible on support.

| Application / Product Name | Release | Remediation Needed (Y/N) and Type (e.g. one-time patch, bundled patch, manual updates, etc.) | Fix Available | Where documented? (Release Notes, PSN, etc.) | Supplemental Links |
| --- | --- | --- | --- | --- | --- |
| Engagement Solutions | | | | | |
| Avaya Aura® Communication Manager | 5.2.1 | EoMS - upgrade to newer release | NA | PSN020178u | http://support.avaya.com/css/P8/documents/101008265 |
| | 6.0.1 | No longer bugfix supported - NTP provided by System Platform so no RHEL kernel vulnerability - upgrade to newer release | NA | | |
| | 6.2 | No longer bugfix supported - NTP provided by System Platform so no RHEL kernel vulnerability - upgrade to newer release | NA | | |
| | 6.3.x | NTP provided by System Platform so no RHEL kernel vulnerability | | | |
| Avaya Aura® Communication Manager Messaging | 5.2.1 | EoMS - upgrade to newer release | NA | CMM Support Page - PSN | |
| | 6.0.1 | EoMS - upgrade to newer release | NA | | |
| | 6.2 | EoMS - upgrade to newer release | NA | | |
| | 6.3 | Security Patch SSP6 | NA | CMM Support Page - PSN | |
| Avaya Aura® Communication Manager Messaging - FM | 6.0.1 | EoMS - upgrade to newer release | NA | CMM Support Page - PSN | |
| | 6.3 | Security Patch SSP6 | NA | CMM Support Page - PSN | |
| Avaya Aura® Session Manager | 5.2 | EoMS - upgrade to newer release. PSN will detail workarounds | NA | PSN004439u | |
| | 6.0 | No longer bugfix supported - upgrade to newer release. PSN will detail workarounds | NA | | |
| | 6.1 | No longer bugfix supported - upgrade to newer release. ... | NA | | http://support.avaya.com/css/P8/doc... |

User Guide for Scopia Desktop Client Version 8.2.1

Scopia Desktop Client User Guide, Version 8.2.1, For Solution 8.2. © 2000-2013 RADVISION Ltd. All intellectual property rights in this publication are owned by RADVISION Ltd and are protected by United States copyright laws, other applicable copyright laws and international treaty provisions. RADVISION Ltd retains all rights not expressly granted. All product and company names herein may be trademarks of their registered owners. This publication is RADVISION confidential. No part of this publication may be reproduced in any form whatsoever or used to make any derivative work without prior written approval by RADVISION Ltd. No representation of warranties for fitness for any purpose other than what is specifically mentioned in this guide is made either by RADVISION Ltd or its agents. RADVISION Ltd reserves the right to revise this publication and make changes without obligation to notify any person of such revisions or changes. RADVISION Ltd may make improvements or changes in the product(s) and/or the program(s) described in this documentation at any time. If there is any software on removable media described in this publication, it is furnished under a license agreement included with the product as a separate document. If you are unable to locate a copy, please contact RADVISION Ltd and a copy will be provided to you. Unless otherwise indicated, RADVISION registered trademarks are registered in the United States and other territories. All registered trademarks recognized. For further information contact RADVISION or your local distributor or reseller. User Guide for Scopia Desktop Client Version 8.2.1, July 16, 2013. http://www.radvision.com

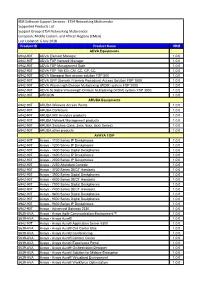

IBM Software Support Services

IBM Software Support Services - ETS4 Networking Multivendor Supported Products List. Support Group: ETS4 Networking Multivendor. European, Middle Eastern, and African Regions (EMEA). Last Updated: 6 July 2018.

| Product ID | Product Name | VRM |
| --- | --- | --- |
| | ADVA Equipments | |
| 6942-90T | ADVA Element Manager | 1.0.0 |
| 6942-90T | ADVA FSP Network Manager | 1.0.0 |
| 6942-90T | ADVA FSP Management Suite | 1.0.0 |
| 6942-90T | ADVA FSP 150 EG, CM, CC, CP, CC | 1.0.0 |
| 6942-90T | ADVA Managed fiber access solution FSP 500 | 1.0.0 |
| 6942-90T | ADVA GFP (Generic Framing Procedure) Access Solution FSP 1500 | 1.0.0 |
| 6942-90T | ADVA Wavelength Division Multiplexing (WDM) system FSP 2000 | 1.0.0 |
| 6942-90T | ADVA Scalable Wavelength Division Multiplexing (WDM) system FSP 3000 | 1.0.0 |
| 6942-90T | ARKOON | 1.0.0 |
| | ARUBA Equipments | |
| 6942-90T | ARUBA Wireless Access Points | 1.0.0 |
| 6942-90T | ARUBA Controllers | 1.0.0 |
| 6942-90T | ARUBA Wifi Analytics products | 1.0.0 |
| 6942-90T | ARUBA Network Management products | 1.0.0 |
| 6942-90T | ARUBA Switches (2xxx, 3xxx, 5xxx, 8xxx Series) | 1.0.0 |
| 6942-90T | ARUBA other products | 1.0.0 |
| | AVAYA TOIP | |
| 6942-90T | Avaya - 1100 Series IP Deskphones | 1.0.0 |
| 6942-90T | Avaya - 1200 Series IP Deskphones | 1.0.0 |
| 6942-90T | Avaya - 1400 Series Digital Deskphones | 1.0.0 |
| 6942-90T | Avaya - 1600 Series IP Deskphones | 1.0.0 |
| 6942-90T | Avaya - 2000 Series IP Deskphones | 1.0.0 |
| 6942-90T | Avaya - 2250 Attendant Console | 1.0.0 |
| 6942-90T | Avaya - 3700 Series DECT Handsets | 1.0.0 |
| 6942-90T | Avaya - 3900 Series Digital Deskphones | 1.0.0 |
| 6942-90T | Avaya - 4000 Series DECT Handsets | 1.0.0 |
| 6942-90T | Avaya - 7000 Series Digital Deskphones | 1.0.0 |
| 6942-90T | Avaya - 7400 Series DECT ... | |

User Guide for Avaya Scopia® Pathfinder Client

User Guide for Avaya Scopia® PathFinder Client, Release 8.3.3, For Solution 8.3, March 2016. © 2015-2016, Avaya, Inc. All Rights Reserved. Notice: While reasonable efforts have been made to ensure that the information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this document without the obligation to notify any person or organization of such changes. Documentation disclaimer: “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions and performance specifications that Avaya may generally make available to users of its products and Hosted Services. Documentation does not include marketing materials. Avaya shall not ... TERMS OF USE. IF YOU DO NOT HAVE SUCH AUTHORITY, OR IF YOU DO NOT WISH TO ACCEPT THESE TERMS OF USE, YOU MUST NOT ACCESS OR USE THE HOSTED SERVICE OR AUTHORIZE ANYONE TO ACCESS OR USE THE HOSTED SERVICE. YOUR USE OF THE HOSTED SERVICE SHALL BE LIMITED BY THE NUMBER AND TYPE OF LICENSES PURCHASED UNDER YOUR CONTRACT FOR THE HOSTED SERVICE, PROVIDED, HOWEVER, THAT FOR CERTAIN HOSTED SERVICES IF APPLICABLE, YOU MAY HAVE THE OPPORTUNITY TO USE FLEX LICENSES, WHICH WILL BE INVOICED ACCORDING TO ACTUAL USAGE ABOVE THE CONTRACT LICENSE LEVEL. CONTACT AVAYA OR AVAYA’S CHANNEL PARTNER FOR MORE INFORMATION ABOUT THE LICENSES FOR THE APPLICABLE HOSTED SERVICE, THE AVAILABILITY OF ANY FLEX LICENSES (IF APPLICABLE), PRICING AND BILLING INFORMATION, AND OTHER IMPORTANT INFORMATION REGARDING THE HOSTED SERVICE.