Measures of Association for Contingency Tables


Recommended publications


Contingency Tables

Contingency Tables. Bret Hanlon and Bret Larget, Department of Statistics, University of Wisconsin-Madison, October 4-6, 2011.

Case Study: Infected Fish and Predation
Example 9.3, beginning on page 213 of the text, describes an experiment in which fish are placed in a large tank for a period of time and some are eaten by large birds of prey. The fish are categorized by their level of parasitic infection: uninfected, lightly infected, or highly infected. It is to the parasites' advantage to be in a fish that is eaten, as this provides an opportunity to infect the bird in the parasites' next stage of life. The observed proportions of fish eaten are quite different among the categories.

            Uninfected   Lightly Infected   Highly Infected   Total
Eaten            1              10                37            48
Not eaten       49              35                 9            93
Total           50              45                46           141

The proportions of eaten fish are, respectively, 1/50 = 0.02, 10/45 = 0.222, and 37/46 = 0.804.

Stacked Bar Graph
[Figure: stacked bar graph of frequency (eaten, not eaten) by infection level: uninfected, lightly infected, highly infected.]
A stacked bar graph shows the sample sizes in each sample and the number of observations of each type within each sample. This plot makes it easy to compare sample sizes among samples and counts within samples, but the comparison of estimates of conditional probabilities among samples is less clear.

Mosaic Plot
[Figure: mosaic plot of eaten and not eaten fish by infection level.]
A mosaic plot replaces absolute frequencies (counts) with relative frequencies within each sample.
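The conditional proportions quoted in the fish predation excerpt can be reproduced with a few lines of Python. This is only an illustrative sketch: the counts are copied from the excerpt's table, while the variable and function names are assumptions made for this example.

```python
# Counts copied from the fish predation table in the excerpt.
eaten = {"Uninfected": 1, "Lightly Infected": 10, "Highly Infected": 37}
not_eaten = {"Uninfected": 49, "Lightly Infected": 35, "Highly Infected": 9}


def proportion_eaten(eaten_counts, not_eaten_counts):
    """Return the within-sample proportion of fish eaten for each infection level."""
    proportions = {}
    for level, n_eaten in eaten_counts.items():
        sample_size = n_eaten + not_eaten_counts[level]  # column total
        proportions[level] = n_eaten / sample_size
    return proportions


proportions = proportion_eaten(eaten, not_eaten)
for level, p in proportions.items():
    print(f"{level}: {p:.3f}")
```

Dividing each count by its own column total, rather than by the grand total of 141, is what makes these conditional proportions comparable across samples of different sizes.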

Use of Chi-Square Statistics

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike License. Your use of this material constitutes acceptance of that license and the conditions of use of materials on this site. Copyright 2008, The Johns Hopkins University and Marie Diener-West. All rights reserved. Use of these materials permitted only in accordance with license rights granted. Materials provided "AS IS"; no representations or warranties provided. User assumes all responsibility for use, and all liability related thereto, and must independently review all materials for accuracy and efficacy. May contain materials owned by others. User is responsible for obtaining permissions for use from third parties as needed.

Use of the Chi-Square Statistic. Marie Diener-West, PhD, Johns Hopkins University.

Section A: Use of the Chi-Square Statistic in a Test of Association Between a Risk Factor and a Disease

The Chi-Square (χ²) Statistic
Categorical data may be displayed in contingency tables. The chi-square statistic compares the observed count in each table cell to the count which would be expected under the assumption of no association between the row and column classifications. The chi-square statistic may be used to test the hypothesis of no association between two or more groups, populations, or criteria. Observed counts are compared to expected counts.

Displaying Data in a Contingency Table

                      Criterion 2
Criterion 1    1      2      3      ...    c      Total
1              n11    n12    n13    ...    n1c    r1
2              n21    n22    n23    ...    n2c    r2
3              n31    ...
...
r              nr1    ...                  nrc    rr
Total          c1     c2     ...           cc     n

Chi-Square Test Statistic
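The excerpt breaks off before giving the statistic. As a hedged reconstruction in the table's own notation (cell counts n_ij, row totals r_i, column totals c_j, grand total n), the standard Pearson form is sketched below; the symbol E_ij for the expected count is an assumption introduced here and does not appear in the excerpt.

```latex
% Expected count in cell (i, j) under no association between rows and columns
E_{ij} = \frac{r_i \, c_j}{n}

% Pearson chi-square statistic: observed versus expected, summed over all cells
\chi^2 = \sum_{i=1}^{r} \sum_{j=1}^{c} \frac{\left(n_{ij} - E_{ij}\right)^2}{E_{ij}}
```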

Knowledge and Human Capital As Sustainable Competitive Advantage in Human Resource Management

Sustainability, Article. Knowledge and Human Capital as Sustainable Competitive Advantage in Human Resource Management. Miloš Hitka 1, Alžbeta Kucharčíková 2, Peter Štarchoň 3, Žaneta Balážová 1,*, Michal Lukáč 4 and Zdenko Stacho 5
1 Faculty of Wood Sciences and Technology, Technical University in Zvolen, T. G. Masaryka 24, 960 01 Zvolen, Slovakia; [email protected]
2 Faculty of Management Science and Informatics, University of Žilina, Univerzitná 8215/1, 010 26 Žilina, Slovakia; [email protected]
3 Faculty of Management, Comenius University in Bratislava, Odbojárov 10, P.O. BOX 95, 82005 Bratislava, Slovakia; [email protected]
4 Faculty of Social Sciences, University of SS. Cyril and Methodius in Trnava, Bučianska 4/A, 917 01 Trnava, Slovakia; [email protected]
5 Institute of Civil Society, University of SS. Cyril and Methodius in Trnava, Bučianska 4/A, 917 01 Trnava, Slovakia; [email protected]
* Correspondence: [email protected]; Tel.: +421-45-520-6189
Received: 2 August 2019; Accepted: 9 September 2019; Published: 12 September 2019
Abstract: The ability to do business successfully and to stay on the market is a unique feature of each company ensured by highly engaged and high-quality employees. Therefore, innovative leaders able to manage, motivate, and encourage other employees can be a great competitive advantage of an enterprise. Knowledge of important personality factors regarding leadership, incentives and stimulus, systematic assessment, and subsequent motivation factors are parts of human capital and essential conditions for effective development of its potential. Familiarity with various ways to motivate leaders and their implementation in practice are important for improving the work performance and reaching business goals.

Chapter 8 Example

Chapter 8 Example

Frequency Table
Time spent travelling to school, to the nearest 5 minutes (Sample of Y7s)

         Time     Frequency   Per cent   Valid per cent   Cumulative per cent
Valid     5.00        4          7.4          7.4                 7.4
         10.00       10         18.5         18.5                25.9
         15.00       20         37.0         37.0                63.0
         20.00       15         27.8         27.8                90.7
         25.00        3          5.6          5.6                96.3
         35.00        2          3.7          3.7               100.0
         Total       54        100.0        100.0

Using Pie Charts
Pie chart showing relationship of something to a whole. Children's Food Preferences: Other 28%, Chips 72%.
Pie chart showing relationship of something to other categories. Children's Food Preferences: Fruit 2%, Ice Cream 2%, Biscuits 3%, Pasta 11%, Pizza 10%, Chips 72%.

Using Bar Charts and Histograms
[Bar chart: Mode of Travel to School (Y7s); categories: walk, car, bus, cycle, other.]
[Histogram: number of students by score on final exam (maximum possible = 100).]

Median and Mean
The median and mean of these two sets of numbers are clearly 50, but the spread can be seen to differ markedly:
48 49 50 51 52
30 40 50 60 70

© Mark O'Hara, Caron Carter, Pam Dewis, Janet Kay and Jonathan Wainwright 2011. O'Hara, M., Carter, C., Dewis, P., Kay, J., and Wainwright, J. (2011) Successful Dissertations. London: Continuum.
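The median-and-mean point in this excerpt can be checked directly. The sketch below uses the two number sets from the excerpt; the names `narrow`, `wide`, and `summarize` are assumptions for illustration, and the range is used here as one simple measure of spread (the excerpt does not say which measure it has in mind).

```python
from statistics import mean, median

# The two sets of numbers quoted in the excerpt.
narrow = [48, 49, 50, 51, 52]
wide = [30, 40, 50, 60, 70]


def summarize(values):
    """Return the mean, median, and range (max minus min) of a list of numbers."""
    return {
        "mean": mean(values),
        "median": median(values),
        "range": max(values) - min(values),
    }


narrow_summary = summarize(narrow)
wide_summary = summarize(wide)
print(narrow_summary)
print(wide_summary)
```

Both sets share the same centre, so only the spread measure distinguishes them.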

Pearson-Fisher Chi-Square Statistic Revisited

Information 2011, 2, 528-545; doi:10.3390/info2030528. OPEN ACCESS. Information, ISSN 2078-2489, www.mdpi.com/journal/information
Communication: Pearson-Fisher Chi-Square Statistic Revisited
Sorana D. Bolboacă 1, Lorentz Jäntschi 2,*, Adriana F. Sestraş 2,3, Radu E. Sestraş 2 and Doru C. Pamfil 2
1 "Iuliu Haţieganu" University of Medicine and Pharmacy Cluj-Napoca, 6 Louis Pasteur, Cluj-Napoca 400349, Romania; E-Mail: [email protected]
2 University of Agricultural Sciences and Veterinary Medicine Cluj-Napoca, 3-5 Mănăştur, Cluj-Napoca 400372, Romania; E-Mails: [email protected] (A.F.S.); [email protected] (R.E.S.); [email protected] (D.C.P.)
3 Fruit Research Station, 3-5 Horticultorilor, Cluj-Napoca 400454, Romania
* Author to whom correspondence should be addressed; E-Mail: [email protected]; Tel: +4-0264-401-775; Fax: +4-0264-401-768.
Received: 22 July 2011; in revised form: 20 August 2011 / Accepted: 8 September 2011 / Published: 15 September 2011
Abstract: The Chi-Square test (χ² test) is a family of tests based on a series of assumptions and is frequently used in the statistical analysis of experimental data. The aim of our paper was to present solutions to common problems when applying the Chi-square tests for testing goodness-of-fit, homogeneity and independence. The main characteristics of these three tests are presented along with various problems related to their application. The main problems identified in the application of the goodness-of-fit test were as follows: defining the frequency classes, calculating the X² statistic, and applying the χ² test.

Chi-Square Tests

Chi-Square Tests. Nathaniel E. Helwig, Associate Professor of Psychology and Statistics, University of Minnesota. October 17, 2020. Copyright © 2020 by Nathaniel E. Helwig.

Table of Contents
1. Goodness of Fit
2. Tests of Association (for 2-way Tables)
3. Conditional Association Tests (for 3-way Tables)

Goodness of Fit: A Primer on Categorical Data Analysis
In the previous chapter, we looked at inferential methods for a single proportion or for the difference between two proportions. In this chapter, we will extend these ideas to look more generally at contingency table analysis. All of these methods are a form of "categorical data analysis", which involves statistical inference for nominal (or categorical) variables.

Goodness of Fit: Categorical Data with J > 2 Levels
Suppose that $X$ is a categorical (i.e., nominal) variable that has $J$ possible realizations: $X \in \{0, \ldots, J-1\}$. Furthermore, suppose that $P(X = j) = \pi_j$, where $\pi_j$ is the probability that $X$ is equal to $j$ for $j = 0, \ldots, J-1$. Assume that the probabilities satisfy $\sum_{j=0}^{J-1} \pi_j = 1$, so that $\{\pi_j\}_{j=0}^{J-1}$ defines a valid probability mass function for the random variable $X$.

2 X 2 Contingency Chi-Square

Newsom, Psy 522/622 Multiple Regression and Multivariate Quantitative Methods, Winter 2021

2 X 2 Contingency Chi-square
The 2 X 2 contingency chi-square is used for the comparison of two groups with a dichotomous dependent variable. We might compare males and females on a yes/no response scale, for instance. The contingency chi-square is based on the same principles as the simple chi-square analysis in which we examine the expected vs. the observed frequencies. The computation is quite similar, except that the estimate of the expected frequency is a little harder to determine. Let's use the Quinnipiac University poll data to examine the extent to which independents (non-party affiliated voters) support Biden and Trump. Here are the frequencies:

                    Trump   Biden   Total
Party affiliated     338     363     701
Independent          125     156     281
Total                463     519     982

To answer the question whether Biden or Trump has a higher proportion of independent voters, we compare the proportion of Biden supporters who are independents, 156/519 = .30, or 30.0%, to the proportion of Trump supporters who are independents, 125/463 = .27, or 27.0%. So, the table appears to suggest that Biden's supporters are more likely to be independents than Trump's supporters. Notice that this is a comparison of the conditional proportions, which correspond to column percentages in cross-tabulation output. First, we need to compute the expected frequencies for each cell. R1 is the frequency for row 1, C1 is the frequency for column 1, and N is the total sample size.
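The excerpt stops just before the expected-frequency computation. A minimal sketch of that step for the poll table follows, assuming the usual rule that each expected frequency is the row total times the column total divided by N; the variable names are illustrative and not taken from the handout.

```python
# Observed frequencies from the poll table: rows are party affiliated /
# independent, columns are Trump / Biden.
observed = [
    [338, 363],
    [125, 156],
]

row_totals = [sum(row) for row in observed]        # R1, R2
col_totals = [sum(col) for col in zip(*observed)]  # C1, C2
n = sum(row_totals)                                # N, total sample size

# Expected frequency for each cell under independence: (Ri * Cj) / N.
expected = [[r * c / n for c in col_totals] for r in row_totals]

for label, row in zip(["Party affiliated", "Independent"], expected):
    print(label, [round(e, 2) for e in row])
```

Because the expected frequencies are built from the margins, each row and column of `expected` sums to the same total as the corresponding row or column of `observed`.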

The Modification of the Phi-Coefficient Reducing Its Dependence on The

Methods of Psychological Research Online 1997, Vol. 2, No. 1. © 1998 Pabst Science Publishers. Internet: http://www.pabst-publishers.de/mpr/

The Modification of the Phi-coefficient Reducing its Dependence on the Marginal Distributions
Peter V. Zysno

Abstract: The Phi-coefficient is a well known measure of correlation for dichotomous variables. It is worthy of remark that the extreme values ±1 only occur in the case of consistent responses and symmetric marginal frequencies. Consequently, low correlations may be due to either inconsistent data, unequal response frequencies, or both. In order to overcome this somewhat confusing situation, various alternative proposals were made, which generally remained rather unsatisfactory. Here, first of all a system has been developed in order to evaluate these measures. Only one of the well-known coefficients satisfies the underlying demands. According to the criteria, the Phi-coefficient is accompanied by a formally similar modification, which is independent of the marginal frequency distributions. Based on actual data both of them can be easily computed. If the original data are not available (as usual in publications) but the intercorrelations and response frequencies of the variables are, then the grades of association for asymmetric distributions can be calculated subsequently.

Keywords: Phi-coefficient, independent marginal distributions, dichotomous variables

1 Introduction
In the beginning of this century the Phi-coefficient (Yule 1912) was developed as a correlational measure for dichotomous variables. Its essential features can be quickly outlined.
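The abstract's remark about marginal frequencies can be illustrated numerically. The sketch below uses the standard phi formula for a 2 x 2 table with cells a, b, c, d; the function name and both example tables are hypothetical, chosen only to show the effect, and the abstract's proposed modification is not implemented here.

```python
import math


def phi_coefficient(a, b, c, d):
    """Phi coefficient for the 2 x 2 table [[a, b], [c, d]]."""
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denominator == 0:
        raise ValueError("phi is undefined when a marginal total is zero")
    return numerator / denominator


# Hypothetical counts: perfectly consistent responses, equal marginals.
symmetric = phi_coefficient(50, 0, 0, 50)

# Hypothetical counts: one off-diagonal cell is still empty, but the column
# marginals (20 vs 80) no longer match the row marginals (50 vs 50).
skewed = phi_coefficient(20, 30, 0, 50)

print(symmetric, skewed)
```

In the second table the responses are as consistent as the margins allow, yet phi falls well short of 1, which is the dependence on marginal distributions that the abstract describes.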

Basic ES Computations, P. 1 BASIC EFFECT SIZE GUIDE with SPSS

BASIC EFFECT SIZE GUIDE WITH SPSS AND SAS SYNTAX
Gregory J. Meyer, Robert E. McGrath, and Robert Rosenthal
Last updated January 13, 2003

Pending:
1. Formulas for repeated measures/paired samples. (d = r / sqrt(1-r^2))
2. Explanation of 'set aside' lambda weights of 0 when computing focused contrasts.
3. Applications to multifactor designs.

SECTION I: COMPUTING EFFECT SIZES FROM RAW DATA.
I-A. The Pearson Correlation as the Effect Size
I-A-1: Calculating Pearson's r From a Design With a Dimensional Variable and a Dichotomous Variable (i.e., a t-Test Design).
I-A-2: Calculating Pearson's r From a Design With Two Dichotomous Variables (i.e., a 2 x 2 Chi-Square Design).
I-A-3: Calculating Pearson's r From a Design With a Dimensional Variable and an Ordered, Multi-Category Variable (i.e., a Oneway ANOVA Design).
I-A-4: Calculating Pearson's r From a Design With One Variable That Has 3 or More Ordered Categories and One Variable That Has 2 or More Ordered Categories (i.e., an Omnibus Chi-Square Design with df > 1).
I-B. Cohen's d as the Effect Size
I-B-1: Calculating Cohen's d From a Design With a Dimensional Variable and a Dichotomous Variable (i.e., a t-Test Design).

SECTION II: COMPUTING EFFECT SIZES FROM THE OUTPUT OF STATISTICAL TESTS AND TRANSLATING ONE EFFECT SIZE TO ANOTHER.
II-A. The Pearson Correlation as the Effect Size
II-A-1: Pearson's r From t-Test Output Comparing Means Across Two Groups.

Robust Approximations to the Non-Null Distribution of the Product Moment Correlation Coefficient I: the Phi Coefficient

DOCUMENT RESUME
ED 330 706; TM 016 274
AUTHOR: Edwards, Lynne K.; Meyers, Sarah A.
TITLE: Robust Approximations to the Non-Null Distribution of the Product Moment Correlation Coefficient I: The Phi Coefficient.
SPONS AGENCY: Minnesota Supercomputer Inst.
PUB DATE: Apr 91
NOTE: 18p.; Paper presented at the Annual Meeting of the American Educational Research Association (Chicago, IL, April 3-7, 1991).
PUB TYPE: Reports - Evaluative/Feasibility (142) -- Speeches/Conference Papers (150)
EDRS PRICE: MF01/PC01 Plus Postage.
DESCRIPTORS: *Computer Simulation; *Correlation; Educational Research; *Equations (Mathematics); Estimation (Mathematics); *Mathematical Models; Psychological Studies; *Robustness (Statistics)
IDENTIFIERS: *Approximation (Statistics); Nonnull Hypothesis; *Phi Coefficient; Product Moment Correlation Coefficient
ABSTRACT: Correlation coefficients are frequently reported in educational and psychological research. The robustness properties and optimality among practical approximations when phi does not equal 0 with moderate sample sizes are not well documented. Three major approximations and their variations are examined: (1) a normal approximation of Fisher's Z, N(sub 1) (R. A. Fisher, 1915); (2) a student's t based approximation, t(sub 1) (H. C. Kraemer, 1973; A. Samiuddin, 1970), which replaces for each sample size the population phi with phi*, the median of the distribution of r (the product moment correlation); (3) a normal approximation, N(sub 6) (H. C. Kraemer, 1980) that incorporates the kurtosis of the X distribution; and (4) five variations, t(sub 2), t(sub 1)', N(sub 3), N(sub 4), and N(sub 4)', on the aforementioned approximations. N(sub 1) was found to be most appropriate, although N(sub 6) always produced the shortest confidence intervals for a non-null hypothesis. All eight approximations resulted in positively biased rejection rates for large absolute values of phi; however, for some conditions with low values of phi with heteroscedasticity and non-zero kurtosis, they resulted in negatively biased empirical rejection rates.

Testing Statistical Assumptions in Research Dedicated to My Wife Haripriya Children Prachi-Ashish and Priyam, –J.P.Verma

Testing Statistical Assumptions in Research

Dedicated to my wife Haripriya, children Prachi-Ashish and Priyam. – J. P. Verma
My wife, sweet children, parents, all my family and colleagues. – Abdel-Salam G. Abdel-Salam

Testing Statistical Assumptions in Research
J. P. Verma, Lakshmibai National Institute of Physical Education, Gwalior, India
Abdel-Salam G. Abdel-Salam, Qatar University, Doha, Qatar

This edition first published 2019. © 2019 John Wiley & Sons, Inc.
IBM, the IBM logo, ibm.com, and SPSS are trademarks or registered trademarks of International Business Machines Corporation, registered in many jurisdictions worldwide. Other product and service names might be trademarks of IBM or other companies. A current list of IBM trademarks is available on the Web at "IBM Copyright and trademark information" at www.ibm.com/legal/copytrade.shtml
All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by law. Advice on how to obtain permission to reuse material from this title is available at http://www.wiley.com/go/permissions.
The right of J. P. Verma and Abdel-Salam G. Abdel-Salam to be identified as the authors of this work has been asserted in accordance with law.
Registered Offices: John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, USA
Editorial Office: 111 River Street, Hoboken, NJ 07030, USA
For details of our global editorial offices, customer services, and more information about Wiley products visit us at www.wiley.com. Wiley also publishes its books in a variety of electronic formats and by print-on-demand.

11 Basic Concepts of Testing for Independence

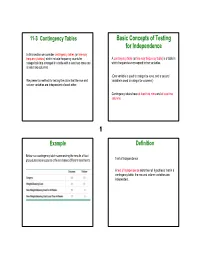

11-3 Contingency Tables

Basic Concepts of Testing for Independence
In this section we consider contingency tables (or two-way frequency tables), which include frequency counts for categorical data arranged in a table with at least two rows and at least two columns. We present a method for testing the claim that the row and column variables are independent of each other.

Definition
A contingency table (or two-way frequency table) is a table in which frequencies correspond to two variables. (One variable is used to categorize rows, and a second variable is used to categorize columns.) Contingency tables have at least two rows and at least two columns.

Example
Below is a contingency table summarizing the results of foot procedures as a success or failure based on different treatments.

Definition
Test of Independence: A test of independence tests the null hypothesis that in a contingency table, the row and column variables are independent.

Notation
O represents the observed frequency in a cell of a contingency table.
E represents the expected frequency in a cell, found by assuming that the row and column variables are independent.
r represents the number of rows in a contingency table (not including labels).
c represents the number of columns in a contingency table (not including labels).

Hypotheses and Test Statistic
H0: The row and column variables are independent.
H1: The row and column variables are dependent.

$$\chi^2 = \sum \frac{(O - E)^2}{E}, \qquad E = \frac{(\text{row total})(\text{column total})}{(\text{grand total})}$$

O is the observed frequency in a cell and E is the expected frequency in a cell.
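The test statistic and expected-frequency rule in this excerpt translate almost directly into code. The following is a minimal sketch only: the function name is an assumption, and because the foot-procedure counts are not reproduced in the excerpt, the two example tables are hypothetical.

```python
def chi_square_statistic(observed):
    """Sum of (O - E)^2 / E over all cells of a table given as a list of rows."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            # E = (row total)(column total) / (grand total)
            e = row_totals[i] * col_totals[j] / grand_total
            if e == 0:
                raise ValueError("expected frequency is zero; statistic undefined")
            statistic += (o - e) ** 2 / e
    return statistic


# Hypothetical counts, not taken from the excerpt.
dependent_table = [[10, 20], [20, 10]]
independent_table = [[10, 20], [20, 40]]

print(chi_square_statistic(dependent_table))
print(chi_square_statistic(independent_table))
```

When the rows are exactly proportional, as in the second table, every observed count equals its expected count and the statistic is zero; larger values indicate a larger departure from independence. Turning the statistic into a p-value requires a chi-square distribution, which this sketch leaves out.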