The Computation of Transcendental Functions on the IA-64 Architecture

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Some Transcendental Functions with an Empty Exceptional Set 3

KYUNGPOOK Math. J. 00(0000), 000-000 Some transcendental functions with an empty exceptional set F. M. S. Lima Institute of Physics, University of Brasilia, Brasilia, DF, Brazil e-mail : [email protected] Diego Marques∗ Department of Mathematics, University de Brasilia, Brasilia, DF, Brazil e-mail : [email protected] arXiv:1004.1668v2 [math.NT] 25 Aug 2012 Abstract. A transcendental function usually returns transcendental values at algebraic points. The (algebraic) exceptions form the so-called exceptional set, as for instance the unitary set {0} for the function f(z) = e^z, according to the Hermite-Lindemann theorem. In this note, we give some explicit examples of transcendental entire functions whose exceptional set are empty. 1 Introduction An algebraic function is a function f(x) which satisfies P(x, f(x)) = 0 for some nonzero polynomial P(x, y) with complex coefficients. Functions that can be constructed using only a finite number of elementary operations are examples of algebraic functions. A function which is not algebraic is, by definition, a transcendental function — e.g., basic trigonometric functions, exponential function, their inverses, etc. If f is an entire function, namely a function which is analytic in C, to say that f is a transcendental function amounts to say that it is not a polynomial. By evaluating a transcendental function at an algebraic point of its domain, one usually finds a transcendental number, but exceptions can take place. For a given transcendental function, the set of all exceptions (i.e., all algebraic numbers of the function domain whose image is an algebraic value) form the so-called exceptional set (denoted by S_f). -

A Computational Approach to Solve a System of Transcendental Equations with Multi-Functions and Multi-Variables

mathematics Article A Computational Approach to Solve a System of Transcendental Equations with Multi-Functions and Multi-Variables Chukwuma Ogbonnaya 1,2,*, Chamil Abeykoon 3, Adel Nasser 1 and Ali Turan 4 1 Department of Mechanical, Aerospace and Civil Engineering, The University of Manchester, Manchester M13 9PL, UK; [email protected] 2 Faculty of Engineering and Technology, Alex Ekwueme Federal University, Ndufu Alike Ikwo, Abakaliki PMB 1010, Nigeria 3 Aerospace Research Institute and Northwest Composites Centre, School of Materials, The University of Manchester, Manchester M13 9PL, UK; [email protected] 4 Independent Researcher, Manchester M22 4ES, Lancashire, UK; [email protected] * Correspondence: [email protected]; Tel.: +44-(0)74-3850-3799 Citation: Ogbonnaya, C.; Abeykoon, C.; Nasser, A.; Turan, A. Abstract: A system of transcendental equations (SoTE) is a set of simultaneous equations containing at least a transcendental function. Solutions involving transcendental equations are often problematic, particularly in the form of a system of equations. This challenge has limited the number of equations, with inter-related multi-functions and multi-variables, often included in the mathematical modelling of physical systems during problem formulation. Here, we presented detailed steps for using a code-based modelling approach for solving SoTEs that may be encountered in science and engineering problems. A SoTE comprising six functions, including Sine-Gordon wave functions, was used to illustrate the steps. Parametric studies were performed to visualize how a change in the variables affected the superposition of the waves as the independent variable varies from x1 = 1:0.0005:100 to -

Some Results on the Arithmetic Behavior of Transcendental Functions

Algebra: celebrating Paulo Ribenboim’s ninetieth birthday SOME RESULTS ON THE ARITHMETIC BEHAVIOR OF TRANSCENDENTAL FUNCTIONS Diego Marques University of Brasilia October 25, 2018 My first transcendental steps: In 2005, in an undergraduate course of Abstract Algebra, the professor (G. Gurgel) defined transcendental numbers and asked about the algebraic independence of e and π. After I found the famous Ribenboim’s book (Chapter 10: What kind of number is √2^√2?): Kurt Mahler (Germany, 1903-Australia, 1988). Mahler’s works focus in transcendental number theory, Diophantine approximation, Diophantine equations, etc. In 1976, Mahler wrote a book entitled "Lectures on Transcendental Numbers". In its chapter 3, he left three problems, called A, B, and C. The goal of this lecture is to talk about these problems... -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation ICPE ’19, April 7–11, 2019, Mumbai, India Memory Centric Characterization and Analysis of SPEC CPU2017 Suite Sarabjeet Singh ([email protected], Ashoka University), Manu Awasthi ([email protected], Ashoka University) ABSTRACT In this paper, we provide a comprehensive, memory-centric characterization of the SPEC CPU2017 benchmark suite, using a number of mechanisms including dynamic binary instrumentation, measurements on native hardware using hardware performance counters and operating system based tools. We present a number of results including working set sizes, memory capacity consumption and memory bandwidth utilization of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 workloads execute a significant number of memory related instructions, with approximately 50% of all dynamic instructions requiring memory accesses. We also show that there is a large variation in the memory footprint and bandwidth utilization ... These benchmarks have become the standard for any researcher or commercial entity wishing to benchmark their architecture or for exploring new designs. The latest offering of SPEC CPU suite, SPEC CPU2017, was released in June 2017 [8]. SPEC CPU2017 retains a number of benchmarks from previous iterations but has also added many new ones to reflect the changing nature of applications. Some recent studies [21, 24] have already started characterizing the behavior of SPEC CPU2017 applications, looking for potential optimizations to system architectures. In recent years the memory hierarchy, from the caches, all the way to main memory, has become a first class citizen of computer system design. -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks Kaivalya M. Dixit IBM Corporation “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

Original Research Article Hypergeometric Functions On

Original Research Article Hypergeometric Functions on Cumulative Distribution Function ABSTRACT Exponential functions have been extended to Hypergeometric functions. There are many functions which can be expressed in hypergeometric function by using its analytic properties. In this paper, we will apply a unified approach to the probability density function and corresponding cumulative distribution function of the noncentral chi square variate to extract and derive hypergeometric functions. Key words: Generalized hypergeometric functions; Cumulative distribution theory; chi-square Distribution on Non-centrality Parameter. I) INTRODUCTION Higher-order transcendental functions are generalized from hypergeometric functions. Hypergeometric functions are special functions that represent a series whose coefficients satisfy many recursion properties. These functions are applied in different subjects and are ubiquitous in mathematical physics and also in computer algebra systems such as Maple and Mathematica. They can also give explicit solutions to problems in economics having dynamic aspects. The purpose of this paper is to understand the importance of hypergeometric functions in different fields and to introduce economists to the large class of hypergeometric functions which are generalized from transcendental functions. The paper is organized in the following way. In Section II, the generalized hypergeometric series is defined with some of its analytical properties and special cases. In Sections 3 and 4, the hypergeometric function and Kummer’s confluent hypergeometric function are discussed in detail, which will be directly used in our results. In Section 5, the main result is proved where we derive the exact cumulative distribution function of the noncentral chi square variate. An appendix is attached which summarizes notational abbreviations and function names. The paper is introductory in nature presenting results reduced from general formulae. -

Pre-Calculus-Honors-Accelerated

PUBLIC SCHOOLS OF EDISON TOWNSHIP OFFICE OF CURRICULUM AND INSTRUCTION Pre-Calculus Honors/Accelerated/Academic Length of Course: Term; Elective/Required: Required; Schools: High School; Eligibility: Grade 10-12; Credit Value: 5 Credits; Date Approved: September 23, 2019. TABLE OF CONTENTS: Statement of Purpose; Course Objectives; Suggested Timeline; Unit 1: Functions from a Pre-Calculus Perspective; Unit 2: Power, Polynomial, and Rational Functions; Unit 3: Exponential and Logarithmic Functions; Unit 4: Trigonometric Function; Unit 5: Trigonometric Identities and Equations; Unit 6: Systems of Equations and Matrices; Unit 7: Conic Sections and Parametric Equations; Unit 8: Vectors; Unit 9: Polar Coordinates and Complex Numbers; Unit 10: Sequences and Series; Unit 11: Inferential Statistics; Unit 12: Limits and Derivatives. Statement of Purpose Pre-Calculus courses combine the study of Trigonometry, Elementary Functions, Analytic Geometry, and Math Analysis topics as preparation for calculus. Topics typically include the study of complex numbers; polynomial, logarithmic, exponential, rational, right trigonometric, and circular functions, and their relations, inverses and graphs; trigonometric identities and equations; solutions of right and oblique triangles; vectors; the polar coordinate system; conic sections; Boolean algebra and symbolic logic; mathematical induction; matrix algebra; sequences and series; and limits and continuity. This course includes a review of essential skills from algebra, introduces polynomial, rational, exponential and logarithmic functions, and gives the student an in-depth study of trigonometric functions and their applications. Modern technology provides tools for supplementing the traditional focus on algebraic procedures, such as solving equations, with a more visual perspective, with graphs of equations displayed on a screen. -

3 — Arithmetic for Computers 2 MIPS Arithmetic Logic Unit (ALU) Zero Ovf

Chapter 3 Arithmetic for Computers § 3.1 Introduction Arithmetic for Computers: Operations on integers (addition and subtraction; multiplication and division; dealing with overflow). Floating-point real numbers (representation and operations). Rechnerstrukturen 182.092 3 — Arithmetic for Computers. MIPS Arithmetic Logic Unit (ALU): Must support the Arithmetic/Logic operations of the ISA: add, addi, addiu, addu; sub, subu; mult, multu, div, divu; sqrt; and, andi, nor, or, ori, xor, xori; beq, bne, slt, slti, sltiu, sltu. With special handling for sign extend (addi, addiu, slti, sltiu), zero extend (andi, ori, xori), and overflow detection (add, addi, sub). [Figure: ALU block with 32-bit inputs A and B, 4-bit operation select m, 32-bit result, and zero and ovf outputs.] (Simplified) 1-bit MIPS ALU: AND, OR, ADD, SLT. Final 32-bit ALU. Performance issues: Critical path of n-bit ripple-carry adder is n*CP. [Figure: four 1-bit ALUs chained so that each CarryOut feeds the next CarryIn.] Design trick: throw hardware at it (Carry Lookahead). Carry Lookahead Logic (4 bit adder): LS 283. § 3.2 Addition and Subtraction. Integer Addition. Example: 7 + 6. Overflow if result out of range: Adding +ve and –ve operands, no overflow. Adding two +ve operands -

Computing Transcendental Functions

Computing Transcendental Functions Iris Oved [email protected] 29 April 1999 Exponential, logarithmic, and trigonometric functions are transcendental. They are peculiar in that their values cannot be computed in terms of finite compositions of arithmetic and/or algebraic operations. So how might one go about computing such functions? There are several approaches to this problem, three of which will be outlined below. We will begin with Taylor approximations, then take a look at a method involving Minimax Polynomials, and we will conclude with a brief consideration of CORDIC approximations. While the discussion will specifically cover methods for calculating the sine function, the procedures can be generalized for the calculation of other transcendental functions as well. 1 Taylor Approximations One method for computing the sine function is to find a polynomial that allows us to approximate the sine locally. A polynomial is a good approximation to the sine function near some point, x = a, if the derivatives of the polynomial at the point are equal to the derivatives of the sine curve at that point. The more derivatives the polynomial has in common with the sine function at the point, the better the approximation. Thus the higher the degree of the polynomial, the better the approximation. A polynomial that is found by this method is called a Taylor Polynomial. Suppose we are looking for the Taylor Polynomial of degree 1 approximating the sine function near x = 0. We will call the polynomial p(x) and the sine function f(x). We want to find a linear function p(x) such that p(0) = f(0) and p'(0) = f'(0). -
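
The Taylor approach described in this excerpt can be illustrated with a minimal Python sketch. It assumes the standard Maclaurin series of the sine, x - x^3/3! + x^5/5! - ..., which is not spelled out in the truncated excerpt; the function name `taylor_sin` and its `degree` parameter are hypothetical and chosen only for illustration.

```python
import math


def taylor_sin(x, degree):
    """Evaluate the Taylor polynomial of sin about x = 0, up to `degree`.

    Only odd powers contribute, so an even `degree` gives the same
    result as `degree - 1`.
    """
    total = 0.0
    term = x  # first non-zero term: x^1 / 1!
    k = 1     # power of the current term
    while k <= degree:
        total += term
        # Next odd-power term: multiply by -x^2 / ((k + 1)(k + 2)).
        term *= -x * x / ((k + 1) * (k + 2))
        k += 2
    return total


if __name__ == "__main__":
    x = 0.5
    for degree in (1, 3, 5, 9):
        approx = taylor_sin(x, degree)
        print(degree, approx, abs(approx - math.sin(x)))
```

With degree 1 the polynomial is p(x) = x, which matches the conditions p(0) = f(0) and p'(0) = f'(0) stated above; raising the degree shrinks the error near 0, in line with the excerpt's claim that higher-degree polynomials approximate better.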

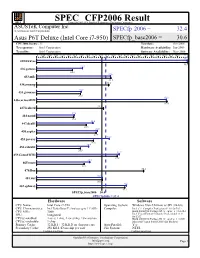

Asustek Computer Inc.: Asus P6T

SPEC CFP2006 Result. Copyright 2006-2014 Standard Performance Evaluation Corporation. ASUSTeK Computer Inc. (Test Sponsor: Intel Corporation) Asus P6T Deluxe (Intel Core i7-950). SPECfp2006 = 32.4; SPECfp_base2006 = 30.6. CPU2006 license: 13; Test date: Oct-2008; Test sponsor: Intel Corporation; Hardware Availability: Jun-2009; Tested by: Intel Corporation; Software Availability: Nov-2008. Per-benchmark results as extracted from the chart: 410.bwaves 70.7, 70.8; 416.gamess 21.5, 16.8; 433.milc 34.6, 34.8; 434.zeusmp 33.8; 435.gromacs 20.9, 20.6; 436.cactusADM 60.8, 61.0; 437.leslie3d 31.3; 444.namd 17.6, 17.4; 447.dealII 26.4, 23.3; 450.soplex 28.5, 27.9; 453.povray 34.0, 26.6; 454.calculix 24.4, 20.5; 459.GemsFDTD 38.7, 37.8; 465.tonto 24.9, 22.3; 470.lbm 50.2; 481.wrf 30.9, 30.9; 482.sphinx3 42.1, 41.3. Hardware: CPU Name: Intel Core i7-950; CPU Characteristics: Intel Turbo Boost Technology up to 3.33 GHz; CPU MHz: 3066; FPU: Integrated; CPU(s) enabled: 4 cores, 1 chip, 4 cores/chip, 2 threads/core; CPU(s) orderable: 1 chip; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 256 KB I+D on chip per core. Software: Operating System: Windows Vista Ultimate w/ SP1 (64-bit); Compiler: Intel C++ Compiler Professional 11.0 for IA32 Build 20080930 Package ID: w_cproc_p_11.0.054, Intel Visual Fortran Compiler Professional 11.0 for IA32 Build 20080930 Package ID: w_cprof_p_11.0.054, Microsoft Visual Studio 2008 (for libraries); Auto Parallel: Yes; File System: NTFS. Standard Performance Evaluation Corporation [email protected] http://www.spec.org/ -

Modeling and Analyzing CPU Power and Performance: Metrics, Methods, and Abstractions

Modeling and Analyzing CPU Power and Performance: Metrics, Methods, and Abstractions Margaret Martonosi David Brooks Pradip Bose Moore’s Law & Power Dissipation... Moore’s Law: The Good News: 2X Transistor counts every 18 months. The Bad News: To get the performance improvements we’re accustomed to, CPU Power consumption will increase exponentially too... (Graphs courtesy of Fred Pollack, Intel) Why worry about power dissipation? Battery life. Thermal issues: affect cooling, packaging, reliability, timing. Environment. Hitting the wall… Battery technology: Linear improvements, nowhere near the exponential power increases we’ve seen. Cooling techniques: Air-cooled is reaching limits; Fans often undesirable (noise, weight, expense); $1 per chip per Watt when operating in the >40W realm; Water-cooled ?!? Environment: US EPA: 10% of current electricity usage in US is directly due to desktop computers; Increasing fast. And doesn’t count embedded systems, Printers, UPS backup? Past: Power important for laptops, cell phones. Present: Power a Critical, Universal design constraint even for very high-end chips. Circuits and process scaling can no longer solve all power problems. SYSTEMS must also be power-aware: Architecture, OS, compilers. Power: The Basics. Dynamic power vs. Static power vs. short-circuit power: “switching” power; “leakage” power; Dynamic power dominates, but static power increasing in importance; Trends in each. Static power: steady, per-cycle energy cost. Dynamic power: power dissipation due to capacitance charging at transitions from 0->1 and 1->0. Short-circuit power: power due to brief short-circuit current during transitions. -

Continuous Profiling: Where Have All the Cycles Gone?

Continuous Profiling: Where Have All the Cycles Gone? JENNIFER M. ANDERSON, LANCE M. BERC, JEFFREY DEAN, SANJAY GHEMAWAT, MONIKA R. HENZINGER, SHUN-TAK A. LEUNG, RICHARD L. SITES, MARK T. VANDEVOORDE, CARL A. WALDSPURGER, and WILLIAM E. WEIHL Digital Equipment Corporation This article describes the Digital Continuous Profiling Infrastructure, a sampling-based profiling system designed to run continuously on production systems. The system supports multiprocessors, works on unmodified executables, and collects profiles for entire systems, including user programs, shared libraries, and the operating system kernel. Samples are collected at a high rate (over 5200 samples/sec. per 333MHz processor), yet with low overhead (1–3% slowdown for most workloads). Analysis tools supplied with the profiling system use the sample data to produce a precise and accurate accounting, down to the level of pipeline stalls incurred by individual instructions, of where time is being spent. When instructions incur stalls, the tools identify possible reasons, such as cache misses, branch mispredictions, and functional unit contention. The fine-grained instruction-level analysis guides users and automated optimizers to the causes of performance problems and provides important insights for fixing them. Categories and Subject Descriptors: C.4 [Computer Systems Organization]: Performance of Systems; D.2.2 [Software Engineering]: Tools and Techniques—profiling tools; D.2.6 [Programming Languages]: Programming Environments—performance monitoring; D.4 [Operating Systems]: General; D.4.7 [Operating Systems]: Organization and Design; D.4.8 [Operating Systems]: Performance General Terms: Performance Additional Key Words and Phrases: Profiling, performance understanding, program analysis, performance-monitoring hardware An earlier version of this article appeared at the 16th ACM Symposium on Operating System Principles (SOSP), St.