Administrator Manual Revision: 58F161e

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sample Docx File Downloadable Right Here

Sample docx file downloadable right here. This is the most likely source of the files for this repo and will provide you with a basic understanding of how git works using Linux's Linux repository repository. On our server side of the system, we can use git-reload, that is, we need only the root directory of every binary and it will be displayed. So just install git with a basic command from the "packages" field and make a new directory to run git:reload on. Open the newly created copy and make a new git backup of the file, in the name of the original copy. Run git pull to generate an updated version to build this code into one file of every binary you wish to get a git version to build into. (See also the article). Now just get the latest release and place the git remote in that directory you just downloaded. The remote is now going to show up in one directory (from your local machine or other work you will never even have to write, you could change a few values on the build machine later). After the build gets started you will be able to start that next step with: $ cd git-reload then start from the root directory. Note to developers: If you forget to go over the manual, if they can't figure out the new command and there's only one change that looks like we need to add it to the repo right after, this will likely screw you out. -

Chrooting All Services in Linux

LinuxFocus article number 225 http://linuxfocus.org Chrooting All Services in Linux by Mark Nielsen (homepage)

About the author: Mark works as an independent consultant donating time to causes like GNUJobs.com, writing articles, writing free software, and working as a volunteer at eastmont.net.

Abstract: Chrooted system services improve security by limiting the damage that someone who broke into the system can possibly do.

Introduction

What is chroot? Chroot basically redefines the universe for a program. More accurately, it redefines the "ROOT" directory or "/" for a program or login session. Basically, everything outside of the directory you use chroot on doesn't exist as far as a program or shell is concerned. Why is this useful? If someone breaks into your computer, they won't be able to see all the files on your system. Not being able to see your files limits the commands they can run and also doesn't give them the ability to exploit other files that are insecure. The only drawback is, I believe it doesn't stop them from looking at network connections and other stuff. Thus, you want to do a few more things which we won't get into in this article too much: Secure your networking ports. Have all services run as a service under a non-root account. In addition, have all services chrooted. Forward syslogs to another computer. Analyze log files. Analyze people trying to detect random ports on your computer. Limit CPU and memory resources for a service. Activate account quotas. The reason why I consider chroot (with a non-root service) to be a line of defense is, if someone breaks in under a non-root account, and there are no files which they can use to break into root, then the damage they can do is limited to the area they broke into.
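The idea that chroot "redefines the ROOT directory" can be illustrated with a small path-mapping sketch. This is only a model of the behaviour described above, not how the kernel implements it: the function name `resolve_in_jail` and the jail directory `/var/jail` are hypothetical, and symbolic links are deliberately ignored.

```python
import posixpath


def resolve_in_jail(jail: str, path: str, cwd: str = "/") -> str:
    """Return the host path that `path` refers to for a process chrooted to `jail`.

    Hypothetical helper for illustration only: it models path arithmetic and
    does not call chroot(2) or follow symbolic links.
    """
    # Relative paths are interpreted against the jailed process's own cwd.
    inside = posixpath.join("/", cwd, path)
    # normpath collapses "." and ".."; for an absolute path, ".." at the top
    # stays at "/", which is the property that keeps the process in the jail.
    inside = posixpath.normpath(inside)
    # Re-anchor the jailed view underneath the jail directory on the host.
    return posixpath.normpath(posixpath.join(jail, inside.lstrip("/")))


if __name__ == "__main__":
    # The jailed process asks for /etc/passwd but gets the jail's private copy.
    print(resolve_in_jail("/var/jail", "/etc/passwd"))
    # Climbing with ".." cannot go above the redefined root.
    print(resolve_in_jail("/var/jail", "../../etc/passwd"))
```

In this model every name the program can form lands under the jail directory, which is why files elsewhere on the system "don't exist" from its point of view.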

Operating System Boot from Fully Encrypted Device

Masaryk University Faculty of Informatics. Operating system boot from fully encrypted device. Bachelor’s Thesis. Daniel Chromik. Brno, Fall 2016.

Replace this page with a copy of the official signed thesis assignment and the copy of the Statement of an Author.

Declaration: Hereby I declare that this paper is my original authorial work, which I have worked out by my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Daniel Chromik. Advisor: ing. Milan Brož

Acknowledgement: I would like to thank my advisor, Ing. Milan Brož, for his guidance and his patience of a saint. Another round of thanks I would like to send towards my family and friends for their support.

Abstract: The goal of this work is description of existing solutions for booting Linux and Windows from fully encrypted devices with Secure Boot. Before that, though, early boot process and bootloaders are described. A simple Linux distribution is then set up to boot from a fully encrypted device. And lastly, existing Windows encryption solutions are described.

Keywords: boot process, Linux, Windows, disk encryption, GRUB 2, LUKS

Contents: 1 Introduction; 1.1 Thesis goals; 1.2 Thesis structure; 2 Boot Process Description; 2.1 Early Boot Process; 2.2 Firmware interfaces; 2.2.1 BIOS – Basic Input/Output System; 2.2.2 UEFI – Unified Extended Firmware Interface; 2.3 Partitioning tables; 2.3.1 MBR – Master Boot Record

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

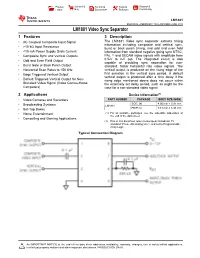

LM1881 Video Sync Separator Datasheet

LM1881 Video Sync Separator. SNLS384G – FEBRUARY 1995 – REVISED JUNE 2015

1 Features
• AC Coupled Composite Input Signal
• >10-kΩ Input Resistance
• <10-mA Power Supply Drain Current
• Composite Sync and Vertical Outputs
• Odd and Even Field Output
• Burst Gate or Back Porch Output
• Horizontal Scan Rates to 150 kHz
• Edge Triggered Vertical Output
• Default Triggered Vertical Output for Non-Standard Video Signal (Video Games-Home Computers)

2 Applications
• Video Cameras and Recorders
• Broadcasting Systems
• Set-Top Boxes
• Home Entertainment
• Computing and Gaming Applications

3 Description
The LM1881 Video sync separator extracts timing information including composite and vertical sync, burst or back porch timing, and odd and even field information from standard negative going sync NTSC, PAL (1) and SECAM video signals with amplitude from 0.5-V to 2-V p-p. The integrated circuit is also capable of providing sync separation for non-standard, faster horizontal rate video signals. The vertical output is produced on the rising edge of the first serration in the vertical sync period. A default vertical output is produced after a time delay if the rising edge mentioned above does not occur within the externally set delay period, such as might be the case for a non-standard video signal.

Device Information (for all available packages, see the orderable addendum at the end of the data sheet)
• LM1881, SOIC (8) package, body size (nom) 4.90 mm × 3.91 mm
• LM1881, PDIP (8) package, body size (nom) 9.81 mm × 6.35 mm

(1) PAL in this datasheet refers to European broadcast TV standard “Phase Alternating Line”, and not to Programmable Array Logic.

White Paper: Indestructible Firewall in a Box V1.0 Nick Mccubbins

White Paper: Indestructible Firewall In A Box v1.0. Nick McCubbins

1.1 Credits
• Nathan Yawn ([email protected])

1.2 Acknowledgements
• Firewall-HOWTO
• Linux Router Project
• LEM

1.3 Revision History
• Version 1.0 First public release

1.4 Feedback
• Send all information and/or criticisms to [email protected]

1.5 Distribution Policy

2 Abstract
In this document, the procedure for creating an embedded firewall whose root filesystem is loaded from a flash disk and then executed from a RAMdisk will be illustrated. A machine such as this has uses in many environments, from corporate internet access to sharing of a cable modem or xDSL connection among many computers. It has the advantages of being very light and fast, being impervious to filesystem corruption due to power loss, and being largely impervious to malicious crackers. The type of firewall illustrated herein is a simple packet-filtering, masquerading setup. Facilities for this already exist in the Linux kernel, keeping the system's memory footprint small. As such the device lends itself to embedding very well. For a more detailed description of firewall particulars, see the Linux Firewall-HOWTO.

3 Equipment
This project has minimal hardware requirements. An excellent configuration consists of:

For a 100-baseT network:
• SBC-554 Pentium SBC with PISA bus and on-board PCI NIC (http://www.emacinc.com/pc.htm#pentiumsbc), approx. $373
• PISA backplane, chassis, power supply (http://www.emacinc.com/sbcpc_addons/mbpc641.htm), approx. $305
• Second PCI NIC
• 32 MB RAM
• 4 MB M-Systems Flash Disk (minimum), approx. $45

For a 10-baseT network:
• EMAC's Standard Server-in-a-Box product (http://www.emacinc.com/server_in_a_box.htm), approx.

Slurm Overview and Elasticsearch Plugin

Slurm Workload Manager Overview. SC15. Alejandro Sanchez [email protected] Copyright 2015 SchedMD LLC http://www.schedmd.com

Slurm Workload Manager Overview
● Originally intended as simple resource manager, but has evolved into sophisticated batch scheduler
● Able to satisfy scheduling requirements for major computer centers with use of optional plugins
● No single point of failure, backup daemons, fault-tolerant job options
● Highly scalable (3.1M core Tianhe-2 at NUDT)
● Highly portable (autoconf, extensive plugins for various environments)
● Open source (GPL v2)
● Operating on many of the world's largest computers
● About 500,000 lines of code today (plus test suite and documentation)

Enterprise Architecture

Architecture
● Kernel with core functions plus about 100 plugins to support various architectures and features
● Easily configured using building-block approach
● Easy to enhance for new architectures or features, typically just by adding new plugins

Elasticsearch Plugin

Scheduling Capabilities
● Fair-share scheduling with hierarchical bank accounts
● Preemptive and gang scheduling (time-slicing parallel jobs)
● Integrated with database for accounting and configuration
● Resource allocations optimized for topology
● Advanced resource reservations
● Manages resources across an enterprise

Making Linux Protection Mechanisms Egalitarian with Userfs

Making Linux Protection Mechanisms Egalitarian with UserFS. Taesoo Kim and Nickolai Zeldovich, MIT CSAIL

Abstract: UserFS provides egalitarian OS protection mechanisms in Linux. UserFS allows any user (not just the system administrator) to allocate Unix user IDs, to use chroot, and to set up firewall rules in order to confine untrusted code. One key idea in UserFS is representing user IDs as files in a /proc-like file system, thus allowing applications to manage user IDs like any other files, by setting permissions and passing file descriptors over Unix domain sockets. UserFS addresses several challenges in making user IDs egalitarian, including accountability, resource allocation, persistence, and UID reuse. We have ported several applications to take advantage of UserFS; by changing just tens to hundreds of lines of code, we prevented attackers from exploiting application-level vulnerabilities, such as code injection or missing ACL checks in a PHP-based wiki application.

... firewall rules, forcing applications to invent their own protection techniques like system call interposition [15], binary rewriting [30] or analysis [13, 45], or interposing on system accesses in a language runtime like Javascript. This paper presents the design of UserFS, a kernel framework that allows any application to use traditional OS protection mechanisms on a Unix system, and a prototype implementation of UserFS for Linux. UserFS makes protection mechanisms egalitarian, so that any user (not just the system administrator) can allocate new user IDs, set up firewall rules, and isolate processes using chroot. By using the operating system’s own protection mechanisms, applications can avoid race conditions and ambiguities associated with system call interposition [14, 43], can confine existing code without having to recompile or rewrite it in a new language, and can enforce a coherent

Sandboxing 2 Change Root: Chroot()

Sandboxing 2

Change Root: chroot(). Oldest Unix isolation mechanism. Make a process believe that some subtree is the entire file system. Files outside of this subtree simply don’t exist. Sounds good, but.

Limitations of Chroot. Only root can invoke it. (Why?) Setting up the minimum necessary environment can be painful. The program to execute generally needs to live within the subtree, where it’s exposed. Still vulnerable to root compromise. Doesn’t protect network identity.

Root versus Chroot. Suppose an ordinary user could use chroot(). Create a link to the sudo command. Create /etc and /etc/passwd with a known root password. Create links to any files you want to read or write. Besides, root can escape from chroot().

Escaping Chroot. What is the current directory? If it’s not under the chroot() tree, try chdir("../../.."). Better escape: create device files. On Unix, all (non-network) devices have filenames. Even physical memory has a filename. Create a physical memory device, open it, and change the kernel data structures to remove the restriction. Create a disk device, and mount a file system on it. Then chroot() to the real root. (On Unix systems, disks other than the root file system are “mounted” as a subtree somewhere.)

Trying Chroot. # mkdir /usr/sandbox /usr/sandbox/bin # cp /bin/sh /usr/sandbox/bin/sh # chroot /usr/sandbox /bin/sh chroot: /bin/sh: Exec format error # mkdir /usr/sandbox/libexec # cp /libexec/ld.elf_so /usr/sandbox/libexec # chroot /usr/sandbox

Chapter 3. Booting Operating Systems

Chapter 3. Booting Operating Systems

Abstract: Chapter 3 provides complete coverage of operating system booting. It explains the booting principle and the booting sequence of various kinds of bootable devices. These include booting from floppy disk, hard disk, CDROM and USB drives. Instead of writing a customized booter to boot up only MTX, it shows how to develop booter programs to boot up real operating systems, such as Linux, from a variety of bootable devices. In particular, it shows how to boot up generic Linux bzImage kernels with initial ramdisk support. It is shown that the hard disk and CDROM booters developed in this book are comparable to GRUB and isolinux in performance. In addition, it demonstrates the booter programs by sample systems.

3.1. Booting. Booting, which is short for bootstrap, refers to the process of loading an operating system image into computer memory and starting up the operating system. As such, it is the first step in running an operating system. Despite its importance and widespread interest among computer users, the subject of booting is rarely discussed in operating system books. Information on booting is usually scattered and, in most cases, incomplete. A systematic treatment of the booting process has been lacking. The purpose of this chapter is to try to fill this void. In this chapter, we shall discuss the booting principle and show how to write booter programs to boot up real operating systems. As one might expect, the booting process is highly machine dependent. To be more specific, we shall only consider the booting process of Intel x86 based PCs.

Sonexion 1.5 Upgrade Guide

Sonexion 1.5 Upgrade Guide

Contents: About Sonexion 1.5 Upgrade Guide; Sonexion 1.5.0 Upgrade Introduction; Prerequisites; Begin Upgrade; Upgrade Sonexion 1600/900 Software; Post-Installation Steps; Troubleshoot from Error Messages

About Sonexion 1.5 Upgrade Guide. This document provides instructions to upgrade Sonexion systems running software release 1.4.0 to the 1.5.0 software platform.

Audience. This publication is intended for use by Cray field technicians who are trained with Sonexion and familiar with the software upgrade process.

Typographic Conventions. Monospace: A Monospace font indicates program code,

The Linux Command Line

The Linux Command Line. Fifth Internet Edition. William Shotts. A LinuxCommand.org Book

Copyright ©2008-2019, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042. A version of this book is also available in printed form, published by No Starch Press. Copies may be purchased wherever fine books are sold. No Starch Press also offers electronic formats for popular e-readers. They can be reached at: https://www.nostarch.com. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org.

Release History (Version, Date, Description)
19.01A, January 28, 2019, Fifth Internet Edition (Corrected TOC)
19.01, January 17, 2019, Fifth Internet Edition
17.10, October 19, 2017, Fourth Internet Edition
16.07, July 28, 2016, Third Internet Edition
13.07, July 6, 2013, Second Internet Edition
09.12, December 14, 2009, First Internet Edition

Table of Contents: Introduction; Why Use the Command Line?