The Quality of the Pictures in This Thesis May Vary from Those in the Original File, Owing to the Reduction in File Size That Wa

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

TheBrain 10 User Guide: Table of Contents

USER GUIDE. Companion for using Version 10 of TheBrain. Posted July 2019. © 2019 TheBrain Technologies LP. All Rights Reserved. TheBrain, PersonalBrain, Brain, Thought, Thoughts, and Work the Way You Think are trademarks or registered trademarks of TheBrain Technologies LP. Other trademarks or service marks appearing herein are the property of their respective owners.

Table of Contents

- Introduction
- Thoughts are Information
- TheBrain’s Dynamic Graphical Interface
- Cross Platform Accessibility
- About this Guide
- Note to macOS Users
- Installing TheBrain and Creating an Account
- Opening Older Brains
- Suggestions for Transitioning to Your New Brain
- A Guided Tour of TheBrain
- Part

TheBrain 7 User Guide: Table of Contents

USER GUIDE. Companion for using Version 7 of TheBrain. Posted May 2012. © 2012 TheBrain Technologies LP. All Rights Reserved. TheBrain, PersonalBrain, Brain, Thought, Thoughts, and Work the Way You Think are trademarks or registered trademarks of TheBrain Technologies LP. Other trademarks or service marks appearing herein are the property of their respective owners.

Table of Contents

- Introduction
- Thoughts are Information
- Dynamic Graphical Interface
- Operating Systems
- About this Guide
- Note to Macintosh Users
- Opening Older Brains
- Suggestions for Transitioning to Your New Brain
- A Guided Tour of TheBrain
- Part 1: Explore the Brain Window
- Start Up

Up and Running with WriteItNow 5, by Rob Walton and David Lovelock, to Accompany Version 5.0.4e of WriteItNow 5

Up and Running with WriteItNow 5. Rob Walton and David Lovelock. To accompany Version 5.0.4e of WriteItNow 5. 2nd Edition, 2018-11-01. Ravenshead Services, Ltd. www.ravensheadservices.com. All rights reserved. © 2015–2018 Ravenshead Services, Ltd.

The information contained in this manual is provided AS IS without any warranty, either expressed or implied, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. The Ravenshead Services, Ltd. or the Contributors will not be liable for any special, incidental, consequential or indirect damages due to loss of data or any other reason. Printed in the United Kingdom.

Disclaimer

Ravenshead Services, Ltd. cannot accept any responsibility for any outcome arising from the use of this manual. The Ravenshead Services, Ltd. may not be held liable in any way for any loss, cost, damage, liability or expense arising from the use of this manual.

"Writing is an exploration. You start from nothing and learn as you go." E.L. Doctorow

Preface

This section deals with the Manual: how to navigate it, and how to use it. The remainder of the Manual is devoted to WriteItNow 5: how to navigate it, and how to use it.

Navigating this Manual

This manual uses "hot" links allowing the reader to navigate easily. For example, if the text states that the Index starts on page 354, then clicking on that page number (354) takes the reader to the Index. (Try it!) The same is true for Part numbers, Chapter numbers, Section numbers, Appendix letters, Figure numbers, Table numbers, and the page numbers in the Index.

TheBrain 10 User Guide: Table of Contents

USER GUIDE. Companion for using Version 10 of TheBrain. Posted April 2019. © 2019 TheBrain Technologies LP. All Rights Reserved. TheBrain, PersonalBrain, Brain, Thought, Thoughts, and Work the Way You Think are trademarks or registered trademarks of TheBrain Technologies LP. Other trademarks or service marks appearing herein are the property of their respective owners.

Table of Contents

- Introduction
- Thoughts are Information
- TheBrain’s Dynamic Graphical Interface
- Cross Platform Accessibility
- About this Guide
- Note to Macintosh Users
- Installing TheBrain and Creating an Account
- Opening Older Brains
- Suggestions for Transitioning to Your New Brain
- A Guided Tour of TheBrain
- Part 1: Start Up and Explore the Brain

Mind Maps for Genealogy

Mind Maps for Genealogy. Presented to SCCHGS, January 19, 2016, by Ron Arons. Mind map of handout created with XMind. (c) Copyright 2016, Ron Arons.

1. What are mind maps?
   - Graphical/visual outlining tools
   - Used by individuals and corporations alike
   - Have been used for centuries
   - Products and services to automate creation of mind maps available for 10+ years
   - Normally used for brainstorming and (more) creative thinking

2. How are mind maps different from other tools used for genealogical research and reporting?
   - They work well with unstructured data as well as structured data (spreadsheets and std. genealogical programs normally work with structured data)
   - More visual and colorful than spreadsheets, genealogy programs, and narratives
   - A general tool; not designed specifically for genealogy (a pro and a con)

3. Uses of mind maps for genealogy:
   - Research planning
   - Keeping a research log
   - Data correlation and analysis (This is where they truly excel!)
   - Tackling difficult 'brick wall' problems
   - Reporting final results

4. Mind map product/service options:
   - FreeMind: freemind.sourceforge.net
   - XMind: www.xmind.net
   - MindJet MindManager: www.mindjet.com/mindmanager
   - MindMeister: www.mindmeister.com
   - iMindQ: www.imindq.com
   - MindGenius: www.mindgenius.com
   - The Brain: www.thebrain.com
   - iMindMap: www.thinkbuzan.com/products/imindmap
   - ConceptDraw: www.conceptdraw.com
   - Popplet: www.popplet.com
   - Coggle: www.coggle.it
   - NovaMind: www.novamind.com
   - Bubbl.us: www.bubbl.us
   - Mind42: www.mind42.com
   - Connected Mind (Google Chrome extension): chrome.google.com
   - MindNode: www.mindnode.com
   - Mindomo: www.mindomo.com
   - SimpleMind: www.simpleapps.eu/simplemind
   - Scapple: www.literatureandlatte.com/scapple.php
   - Stormboard: www.stormboard.com
   - LucidChart: www.lucidchart.com
   - MindMup: www.mindmup.com
   - MindMapMaker: www.mindmapmaker.org

5.

Personal Knowledge Models with Semantic Technologies

Max Völkel. Personal Knowledge Models with Semantic Technologies.

Bibliographic information: Detailed bibliographic data are available on the Internet at http://pkm.xam.de. Cover design: Stefanie Miller. Production and publisher: Books on Demand GmbH, Norderstedt. © 2010 Max Völkel, Ritterstr. 6, 76133 Karlsruhe.

This work is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

Dissertation approved by the Faculty of Economics (Fakultät für Wirtschaftswissenschaften) of the Karlsruhe Institute of Technology (KIT) for the academic degree of Doctor of Economics (Dr. rer. pol.), by Dipl.-Inform. Max Völkel. Date of oral examination: 14 July 2010. Referee: Prof. Dr. Rudi Studer. Co-referee: Prof. Dr. Klaus Tochtermann. Examiner: Prof. Dr. Gerhard Satzger. Chair of the examination committee: Prof. Dr. Christine Harbring.

Abstract

Following the ideas of Vannevar Bush (1945) and Douglas Engelbart (1963), this thesis explores how computers can help humans to be more intelligent. More precisely, the idea is to reduce limitations of cognitive processes with the help of knowledge cues, which are external reminders about previously experienced internal knowledge. A knowledge cue is any kind of symbol, pattern or artefact, created with the intent to be used by its creator, to re-evoke a previously experienced mental state, when used. The main processes in creating, managing and using knowledge cues are analysed. Based on the resulting knowledge cue life-cycle, an economic analysis of costs and benefits in Personal Knowledge Management (PKM) processes is performed.

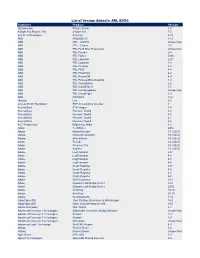

List of Version Added in ARL #2593 Publisher Product Version 3Dconnexion Picture Viewer 1.0 4-Sight Fax Project, the 4-Sight Fax 7.5 A.N.D

List of Version Added in ARL #2593

Columns: Publisher, Product, Version (one entry per line)

3Dconnexion Picture Viewer 1.0
4-Sight Fax Project, The 4-Sight Fax 7.5
A.N.D. Technologies Pcounter 2.87
ABB PROMOD IV 11.3
ABB PEL - CAPRE Unspecified
ABB PEL - CValve 2.5
ABB PEL Fluid Flow Framework Unspecified
ABB PEL Fenske 2.4
ABB PEL Flonet 2016
ABB PEL Launcher 22.0
ABB PEL Logidraw 4.0
ABB PEL Packing 2.5
ABB PEL PEW 4.4
ABB PEL PhysPack 4.2
ABB PEL ProvueDB 4.3
ABB PEL ProvueDBNetDesktop 1.2
ABB PEL VisualAdrian 2.2
ABB PEL VisualFlonet 1.5
ABB PEL VisualLogidraw Unspecified
ABB PEL VisualPiper 1.3
ABB RTUtil500 12.2
Ableton Live 9.0
Access for All Foundation PDF Accessibility Checker 3
AccessData FTK Imager 4.5
AccessData Forensic Toolkit 3.2
AccessData Forensic Toolkit 5.5
AccessData Forensic Toolkit 6.2
AccessData Forensic Toolkit 6.4
ACT Productions Brightness Slider 1.2
Adivo TechWriter 2012
Adobe Media Encoder CC (2021)
Adobe Character Animator CC (2021)
Adobe After Effects CC (2021)
Adobe Prelude CC (2021)
Adobe Premiere Pro CC (2021)
Adobe Audition CC (2021)
Adobe LogTransport 2.9
Adobe LogTransport 4.4
Adobe LogTransport 4.5
Adobe LogTransport 4.6
Adobe Crash Reporter 2.9
Adobe Crash Reporter 4.4
Adobe Crash Reporter 4.5
Adobe Crash Reporter 4.6
Adobe DNG Converter 12.1
Adobe Dynamic Link Media Server 14.0
Adobe Dynamic Link Media Server 2020
Adobe AcroTray 15.20
Adobe AcroTray 20.13
Adobe CineRenderAE 17.0
AdoptOpenJDK Java Runtime Environment with Hotspot 14.0
AdoptOpenJDK Open Java Development Kit 15.0
Adrian Granados WiFi Signal 4.4
Advanced Conveyor

Download the 2018 Annual Report

Annual Report 2018. Biggerplate.com: The Home of Mind Mapping. Welcome to our 2018 Annual Report!

Introduction

For the last 5 years, our Annual Report has been published with the aim of giving you a unique insight into the current state of the mind mapping world according to Biggerplate.com and our global member community.

I'm delighted to welcome you to this 5th edition, and I hope you will find the contents helpful and informative, regardless of whether you are new to mind mapping, an experienced expert, a software developer, or someone who accidentally downloaded this report...

The report aims to provide a snapshot of the mind mapping sector, based on insights shared by over 1,000 mind map users who took part in our Annual Survey, which ran from late February to the end of March 2018. The results provide insight into what and how people are mind mapping, including a new in-depth view of how they rate their favourite software across a number of mind map specific indicators. You can read more about the new 'Software Scorecards' in the relevant section in the report, but the over-arching goal is to provide better insight for people and organisations who might be new to mind mapping, and trying to understand the various strengths of the different software and app products in the market.

Table of Contents

Biggerplate.com Summary
- 2017 Review
- 2018 Priorities

2018 Annual Survey
- Participant Profile
- Countries
- Demographics
- Job Roles
- Industries
- Mind Maps in Action
- Mapping Adoption
- Frequency
- Collaboration
- Benefits
- Uses
- Tools & Technology
- Methods
- Devices & OS
- Software Scorecards

Mindmapping - a Perfect Tool for the Visual Brain

Mindmapping - A Perfect Tool for the Visual Brain. Patricia Brück, efsli Webinar, 14.12.2020

"Your Brain is a Sleeping Giant and Mindmapping is here to help wake it up!" Tony Buzan

Mind mapping
▪ Activates whole-brain thinking
  • Logical left-hand – verbal, analytical
  • Creative right-hand – visual, perceptual
▪ Betty Edwards: two divided ways of thinking (Drawing on the Right Side of the Brain, 1979)
▪ Tony Buzan, The Mind Map Book, 1993

The founder
▪ Tony Buzan
  • 40 years of research
  • Neurophysiology
  • Psychology
  • Psycholinguistics
  • Mind Map = Technique for thinking
  • Most effective way of thinking
  • Best suited to the way our brain works
  • Infinite process of thinking

Tony Buzan
  • Human language
  • Imagination
  • Association
  • Thinking
  • Organic
  • Non-linear
  • Radiant
  • Highly personalized

Example of mindmap

Tony Buzan
▪ Use of Colour
  • Captures attention
  • Improves comprehension
  • Gives motivation
  • Increases mental processing
  • Improves memory processes
  • Can bear information (colour code)

Tony Buzan
▪ Use of Images
  • Supports visual storage
  • Processed 60,000 times faster by our brain
  • Stimulates associations
"A picture is worth a thousand words"

Tony Buzan
▪ Use of Words
  • One word per branch
  • Makes your brain engage with subject
  • Gives your brain a hook on which to hang a memory
  • Evolution from verbal form to visual thinking
  • More and more pictures
  • Extensive use of emojis

Ideal Mindmap
▪ Has only one word at a branch
▪ Uses pictures
▪ Uses different colours for each main branch
▪ Main chapters are main branches
▪ Secondary-level shoots from main branches
▪ Third-level shoots from secondary-level one

How to create a mind map
▪ Start with an image in the center
  • A picture is worth more than 1000 words
  • Stimulates associations, memory, ideas
▪ Curvilinear branches from center image
  • Basic ordering ideas = chapters of the thought
▪ 2nd level ideas, 3rd level ideas, …

OSINT Handbook September 2020

OPEN SOURCE INTELLIGENCE TOOLS AND RESOURCES HANDBOOK 2020

Aleksandra Bielska, Noa Rebecca Kurz, Yves Baumgartner, Vytenis Benetis

Foreword

I am delighted to share with you the 2020 edition of the OSINT Tools and Resources Handbook. Once again, the Handbook has been revised and updated to reflect the evolution of this discipline, and the many strategic, operational and technical challenges OSINT practitioners have to grapple with.

Given the speed of change on the web, some might question the wisdom of pulling together such a resource. What’s wrong with the Top 10 tools, or the Top 100? There are only so many resources one can bookmark after all. Such arguments are not without merit. My fear, however, is that they are also shortsighted. I offer four reasons why.

To begin, a shortlist betrays the widening spectrum of OSINT practice. Whereas OSINT was once the preserve of analysts working in national security, it now embraces a growing class of professionals in fields as diverse as journalism, cybersecurity, investment research, crisis management and human rights. A limited toolkit can never satisfy all of these constituencies.

Second, a good OSINT practitioner is someone who is comfortable working with different tools, sources and collection strategies. The temptation toward narrow specialisation in OSINT is one that has to be resisted. Why? Because no research task is ever as tidy as the customer’s requirements are likely to suggest.

Third is the inevitable realisation that good tool awareness is equivalent to good source awareness. Indeed, the right tool can determine whether you harvest the right information.

Digital Mind Mapping Software: A New Horizon in the Modern Teaching-Learning Strategy, Dipak Bhattacharya, Ramakanta Mohalik

Journal of Advances in Education and Philosophy. Abbreviated Key Title: J Adv Educ Philos. ISSN 2523-2665 (Print) | ISSN 2523-2223 (Online). Scholars Middle East Publishers, Dubai, United Arab Emirates. Journal homepage: https://saudijournals.com/jaep

Review Article

Digital Mind Mapping Software: A New Horizon in the Modern Teaching-Learning Strategy

Dipak Bhattacharya¹*, Ramakanta Mohalik²
¹ Ph.D. Scholar, Department of Education, Regional Institute of Education, NCERT, Bhubaneswar, India
² Professor, Department of Education, Regional Institute of Education, NCERT, Bhubaneswar, India

DOI: 10.36348/jaep.2020.v04i10.001 | Received: 22.09.2020 | Accepted: 30.09.2020 | Published: 03.10.2020
*Corresponding author: Dipak Bhattacharya

Abstract

Digital mind mapping is a unique method which improves productivity by helping to build and analyze ideas, and facilitates information structuring and retrieval. Educators and learners can use different types of software to create digital mind maps for teaching and learning. The objectives of the paper are: to describe the different types of software used in creating digital mind maps; to highlight the process of digital mind map development through software; and to provide an overview of the benefits and usefulness of digital mind mapping software. It is a review-based study. Articles published in various leading journals, conference proceedings and online materials have been referred to in the present article. The first part of the paper describes the concept of digital mind mapping software. The second part provides a brief description of the different software used in creating digital mind maps. The third part explains the process of digital mind map creation through software. The last part elaborates on the benefits and usefulness of digital mind mapping software.

Mind Map Application Market Update

© 2014 Daniel W. Rasmus. January 7, 2014. Mind Map Application Market Update

Market Overview

With the rise of mobile devices, the number of mind mapping tools has risen in recent years, yet only a few should be considered enterprise-ready. Mind mapping tools have evolved from simple drawing tools into complex environments that include collaborative authoring, project management, quantitative model building, dashboards and integration with third-party tools, particularly Microsoft Office, the Google platform and various file sharing systems like Dropbox and Box. The leaders in this market, Mindjet, Think Buzan, TheBrain and MatchWare (not covered in this report) have now advanced to the point that enterprises need to select tools that fit well with their infrastructure, security and collaboration models. Although mind mapping is often driven by personal preference, a proliferation of tools can result in information and knowledge exchange issues that are best avoided by standardizing on one tool, and if more than one is needed, tools that offer complementary capabilities.

Scope of this Report

Four of the top mind map tool vendors recently updated their apps, their cloud-based services and their platform support. This report covers the latest releases for industry leaders Mindjet, Think Buzan, TheBrain and new entrant toketaWare.

Industry Analysis

© 2014 by Daniel W. Rasmus. All rights reserved. Reproduction or redistribution in any form without the prior permission of Daniel W. Rasmus is expressly prohibited. This information is provided on an “as is” basis and without express or implied warranties. Although this information is believed to be accurate at the time of publication, Daniel W.