Grafting Synthesis Patches Onto Live Musical Instruments

Total Pages: 16

File Type: pdf, Size: 1020Kb

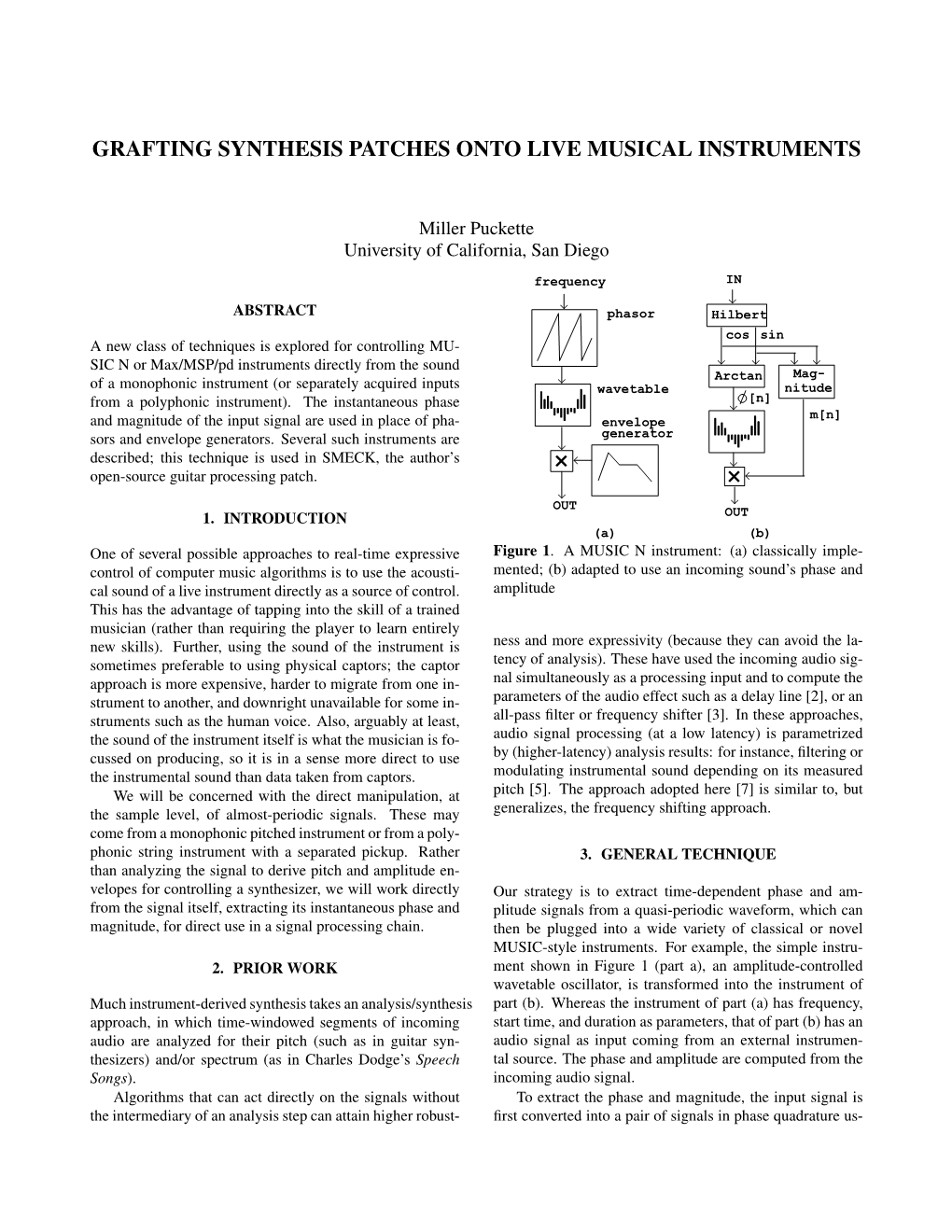

Load more

Recommended publications

Real-Time Programming and Processing of Music Signals Arshia Cont

Real-time Programming and Processing of Music Signals. Arshia Cont. To cite this version: Arshia Cont. Real-time Programming and Processing of Music Signals. Sound [cs.SD]. Université Pierre et Marie Curie - Paris VI, 2013. tel-00829771. HAL Id: tel-00829771. https://tel.archives-ouvertes.fr/tel-00829771. Submitted on 3 Jun 2013. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Realtime Programming & Processing of Music Signals, by Arshia Cont. Ircam-CNRS-UPMC Mixed Research Unit, MuTant Team-Project (INRIA), Musical Representations Team, Ircam-Centre Pompidou, 1 Place Igor Stravinsky, 75004 Paris, France. Habilitation à diriger la recherche, defended on May 30th in front of the jury composed of: Gérard Berry (Collège de France, Professor), Roger Dannenberg (Carnegie Mellon University, Professor), Carlos Agon (UPMC - Ircam, Professor), François Pachet (Sony CSL, Senior Researcher), Miller Puckette (UCSD, Professor), Marco Stroppa (Composer). Dedication: To Marie, the salt of my life. Contents: 1. Introduction; 1.1. Synthetic Summary; 1.2. Publication List 2007-2012; 1.3. Research Advising Summary; 2. Realtime Machine Listening; 2.1. Automatic Transcription; 2.2. Automatic Alignment; 2.2.1. -

Miller Puckette 1560 Elon Lane Encinitas, CA 92024 [email protected]

Miller Puckette, 1560 Elon Lane, Encinitas, CA 92024, [email protected]

Education. B.S. (Mathematics), MIT, 1980. Ph.D. (Mathematics), Harvard, 1986.

Employment history.

- 1982-1986: Research specialist, MIT Experimental Music Studio/MIT Media Lab
- 1986-1987: Research scientist, MIT Media Lab
- 1987-1993: Research staff member, IRCAM, Paris, France
- 1993-1994: Head, Real-time Applications Group, IRCAM, Paris, France
- 1994-1996: Assistant Professor, Music department, UCSD
- 1996-present: Professor, Music department, UCSD

Publications.

1. Puckette, M., Vercoe, B. and Stautner, J., 1981. "A real-time music11 emulator," Proceedings, International Computer Music Conference. (Abstract only.) P. 292.
2. Stautner, J., Vercoe, B., and Puckette, M. 1981. "A four-channel reverberation network," Proceedings, International Computer Music Conference, pp. 265-279.
3. Stautner, J. and Puckette, M. 1982. "Designing Multichannel Reverberators," Computer Music Journal 3(2), pp. 52-65. Reprinted in The Music Machine, ed. Curtis Roads. Cambridge, The MIT Press, 1989, pp. 569-582.
4. Puckette, M., 1983. "MUSIC-500: a new, real-time Digital Synthesis system." International Computer Music Conference. (Abstract only.)
5. Puckette, M. 1984. "The 'M' Orchestra Language." Proceedings, International Computer Music Conference, pp. 17-20.
6. Vercoe, B. and Puckette, M. 1985. "Synthetic Rehearsal: Training the Synthetic Performer." Proceedings, International Computer Music Conference, pp. 275-278.
7. Puckette, M. 1986. "Shannon Entropy and the Central Limit Theorem." Doctoral dissertation, Harvard University, 63 pp.
8. Favreau, E., Fingerhut, M., Koechlin, O., Potacsek, P., Puckette, M., and Rowe, R. 1986. "Software Developments for the 4X real-time System." Proceedings, International Computer Music Conference, pp. 43-46.
9. Puckette, M. -

MUS421–571.1 Electroacoustic Music Composition Kirsten Volness – 20 Mar 2018 Synthesizers

MUS421–571.1 Electroacoustic Music Composition, Kirsten Volness, 20 Mar 2018

Synthesizers

• Robert Moog
  – Started building Theremins
  – Making new tools for Herb Deutsch
  – Modular components connected by patch cables
• Voltage-controlled Oscillators (multiple wave forms)
• Voltage-controlled Amplifiers
• AM / FM capabilities
• Filters
• Envelope generator (ADSR)
• Reverb unit
• AMPEX tape recorder (2+ channels)
• Microphones
• San Francisco Tape Music Center
• Morton Subotnick and Ramon Sender
• Donald Buchla
  – “Buchla Box”, 1965
  – Sequencer
  – Analog automation device that allows a composer to set and store a sequence of notes (or a sequence of sounds, or loudnesses, or other musical information) and play it back automatically
  – 16 stages (16 splices stored at once)
  – Pressure-sensitive keys
• Subotnick receives commission from Nonesuch Records (Silver Apples of the Moon, The Wild Bull, Touch)

Buchla 200

• CBS buys rights to manufacture Buchlas
• Popularity surges among electronic music studios, record companies, live performances
  – Wendy Carlos, Switched-on Bach (1968)
  – Emerson, Lake, and Palmer, Stevie Wonder, Mothers of Invention, Yes, Pink Floyd, Herbie Hancock, Chick Corea
  – 1968 Putney studio presents sold-out concert at Elizabeth Hall in London

Minimoog

• No more patch cables! (Still monophonic)

Polyphonic Synthesizers

• Polymoog
• Four Voice (Oberheim Electronics)
  – Each voice still patched separately
• Prophet-5
  – Dave Smith at Sequential Circuits
  – Fully programmable and polyphonic
• GROOVE -

Interactive Electroacoustics

Interactive Electroacoustics. Submitted for the degree of Doctor of Philosophy by Jon Robert Drummond, B.Mus, M.Sc (Hons). June 2007. School of Communication Arts, University of Western Sydney. Acknowledgements. I would like to thank my principal supervisor Dr Garth Paine for his direction, support and patience through this journey. I would also like to thank my associate supervisors Ian Stevenson and Sarah Waterson. I would also like to thank Dr Greg Schiemer and Richard Vella for their ongoing counsel and faith. Finally, I would like to thank my family, my beautiful partner Emma Milne and my two beautiful daughters Amelia Milne and Demeter Milne for all their support and encouragement. Statement of Authentication. The work presented in this thesis is, to the best of my knowledge and belief, original except as acknowledged in the text. I hereby declare that I have not submitted this material, either in full or in part, for a degree at this or any other institution. Table of Contents: List of Tables; List of Figures and Illustrations; Abstract; Chapter One: Introduction -

Read Book Microsound

MICROSOUND PDF, EPUB, EBOOK Curtis Roads | 424 pages | 17 Sep 2004 | MIT Press Ltd | 9780262681544 | English | Cambridge, Mass., United States Microsound PDF Book I have no skin in the game either way and think you should just use what you want to use…Just make music any way you see fit. Miller Puckette Professor, Department of Music, University of California San Diego Microsound offers an enticing series of slice 'n' dice audio recipes from one of the pioneering researchers into the amazingly rich world of granular synthesis. In a single row of hp we find the Mimeophon offering stereo repeats and travelling halos. Make Noise modules have an uncanny knack of fitting well together. Electronic Sounds Live. Electronic and electroacoustic music. Taylor Deupree. He has also done some scoring for TV and movies, and sound design for video games. Join the growing network of Microsound Certified Installers today. Microsound grew up with most of us. El hombre de la Caverna, Disco 1. Everything you need to know about Microsound Products. Sounds coalesce, evaporate, and mutate into other sounds. Microsound Accreditation Carry the Certified Microsound Installer reputation with you wherever you go. The Morphagene acts as a recorder of sound and layerer of ideas while the Mimeophon mimics and throws out echoes of what has come before. -

UNIVERSITY of CALIFORNIA, SAN DIEGO Unsampled Digital Synthesis and the Context of Timelab a Dissertation Submitted in Partial S

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Unsampled Digital Synthesis and the Context of Timelab. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Music by David Medine. Committee in charge: Professor Miller Puckette, Chair; Professor Anthony Burr; Professor David Kirsh; Professor Scott Makeig; Professor Tamara Smyth; Professor Rand Steiger. 2016. Copyright David Medine, 2016. All rights reserved. The dissertation of David Medine is approved, and it is acceptable in quality and form for publication on microfilm and electronically: Chair, University of California, San Diego, 2016. DEDICATION. To my mother and father. EPIGRAPH. "For those that seek the knowledge, it's there. But you have to seek it out, do the knowledge, and understand it on your own." (The RZA) "Always make the audience suffer as much as possible." (Alfred Hitchcock) TABLE OF CONTENTS: Signature Page; Dedication; Epigraph; Table of Contents; List of Supplemental Files; List of Figures; Acknowledgements; Vita; Abstract of the Dissertation; Chapter 1 Introduction; 1.1 Preamble; 1.2 'Analog'; 1.3 Digitization; 1.4 -

The Sampling Theorem and Its Discontents

The Sampling Theorem and Its Discontents. Miller Puckette, Department of Music, University of California, San Diego. [email protected] After a keynote talk given at the 2015 ICMC, Denton, Texas. August 28, 2016.

The fundamental principle of Computer Music is usually taken to be the Nyquist Theorem, which, in its usually cited form, states that a band-limited function can be exactly represented by sampling it at regular intervals. This paper will not quarrel with the theorem itself, but rather will test the assumptions under which it is commonly applied, and endeavor to show that there are interesting approaches to computer music that lie outside the framework of the sampling theorem.

As we will see in Section 3, sampling violations are ubiquitous in everyday electronic music practice. The severity of these violations can usually be mitigated either through various engineering practices and/or careful critical listening. But their existence gives the lie to the popular understanding of digital audio practice as being "lossless".

This is not to deny the power of modern digital signal processing theory and its applications, but rather to claim that its underlying assumption (that the sampled signals on which we are operating are to be thought of as exactly representing band-limited continuous-time functions) sheds light on certain digital operations (notably time-invariant filtering) but not so aptly on others, such as classical synthesizer waveform generation.

Digital audio practitioners cannot escape the necessity of representing continuous-time signals with finite-sized data structures. But the blanket assumption that such signals can only be represented via the sampling theorem can be unnecessarily limiting. -

Music Program of the 14th Sound and Music Computing Conference 2017

Music Program of the 14th Sound and Music Computing Conference 2017. SMC 2017, July 5-8, 2017, Espoo, Finland. Edited by Koray Tahiroğlu and Tapio Lokki. Aalto University: School of Science, School of Electrical Engineering, School of Arts, Design, and Architecture. http://smc2017.aalto.fi

Committee. Chair: Prof. Vesa Välimäki. Papers Chair: Prof. Tapio Lokki. Music Chair: Dr. Koray Tahiroğlu. Treasurer: Dr. Jukka Pätynen. Web Manager: Mr. Fabian Esqueda. Practicalities: Ms. Lea Söderman, Ms. Tuula Mylläri. Sponsors.

Reviewers for music program: Miriam Akkermann, James Andean, Newton Armstrong, Andrew Bentley, John Bischoff, Thomas Bjelkeborn, Joanne Cannon, Lamberto Coccioli, Nicolas Collins, Carl Faia, Nick Fells, Heather Frasch, Richard Graham, Thomas Grill, Michael Gurevich, Kerry Hagan, Louise Harris, Lauren Hayes, Anne Hege, Enrique Hurtado, Andy Keep, Chris Kiefer, Juraj Kojs, Petri Kuljuntausta, Hans Leeuw, Thor Magnusson, Christos Michalakos, Chikashi Miyama, Claudia Molitor, Ilkka Niemeläinen, Eleonora Oreggia, Miguel Ortiz, Martin Parker, Kevin Patton, Lorah Pierre, Sébastien Piquemal, Afroditi Psarra, Miller Puckette, Heather Roche, Robert Rowe, Tim Shaw, Jeff Snyder, Julian Stein, Malte Steiner, Kathleen Supove, Koray Tahiroğlu, Kalev Tiits, Enrique Tomás, Pierre Alexandre Tremblay, Dan Trueman, Tolga Tuzun, Juan Vasquez, Simon Waters, Anna Weisling, Matthew Yee-King, Johannes Zmölnig.

Table of Contents: Concerning the Size of the Sun; Spheroid; Astraglossa or first steps in celestial syntax; Machine Milieu; RAW Live Coding Audio/Visual Performance; The First Flowers of the Year Are Always Yellow; Shankcraft; The Mark on the Mirror (Made by Breathing); [sisesta pealkiri]; Synaesthesia; Cubed II; The Counting Sisters; bocca:mikro; revontulet (2017); The CM&T Dome@SMC-17; Lightscape-Masquerade; Raveshift; ImproRecorderBot. -

Miller Puckette

A Divide Between ‘Compositional’ and ‘Performative’ Aspects of Pd. Miller Puckette.

In the ecology of human culture, unsolved problems play a role that is even more essential than that of solutions. Although the solutions found give a field its legitimacy, it is the problems that give it life. With this in mind, I’ll describe what I think of as the central problem I’m struggling with today, which has perhaps been the main motivating force in my work on Pd, among other things. If Pd’s fundamental design reflects my attack on this problem, perhaps others working on or in Pd will benefit if I try to articulate the problem clearly.

In its most succinct form, the problem is that, while we have good paradigms for describing processes (such as in the Max or Pd programs as they stand today), and while much work has been done on representations of musical data (ranging from searchable databases of sound to Patchwork and OpenMusic, and including Pd’s unfinished “data” editor), we lack a fluid mechanism for the two worlds to interoperate.

1 EXAMPLES

Three examples, programs that combine data storage and retrieval with realtime actions, will serve to demonstrate this division. Taking them as representative of the current state of the art, the rest of this paper will describe the attempts I’m now making to bridge the separation between the two realms.

1.1 CSound

In CSound, the database is called a score (this usage was widespread among software synthesis packages at the time Csound was under development). Scores in Csound consist mostly of ‘notes’, which are commands for a synthesizer. -

An Interview with Max Mathews

An Interview with Max Mathews. Tae Hong Park, 102 Dixon Hall, Music Department, Tulane University, New Orleans, LA 70118 USA. [email protected] (Downloaded from http://direct.mit.edu/comj/article-pdf/33/3/9/1855364/comj.2009.33.3.9.pdf by guest on 26 September 2021.)

Max Mathews was last interviewed for Computer Music Journal in 1980 in an article by Curtis Roads. The present interview took place at Max Mathews’s home in San Francisco, California, in late May 2008. (See Figure 1.) This project was an interesting one, as I had the opportunity to stay at his home and conduct the interview, which I video-recorded in HD format over the course of one week. The original set of video recordings lasted approximately three hours total. I then edited them down to a duration of approximately one and one-half hours for inclusion on the 2009 Computer Music Journal Sound and Video Anthology, which will accompany the Winter 2009 [...]

[...] learned in school was how to touch-type; that has become very useful now that computers have come along. I also was taught in the ninth grade how to study by myself. That is when students were introduced to algebra. Most of the farmers and their sons in the area didn’t care about learning algebra, and they didn’t need it in their work. So, the math teacher gave me a book and I and two or three other students worked the problems in the book and learned algebra for ourselves. And this was such a wonderful way of learning that after I finished the algebra book, I got a calculus book and spent the next few years learning calculus by myself. -

Voices with Maureen Chowning

UC San Diego • Division of Arts and Humanities • Department of Music presents John Chowning

Program: Turenas (1972); Phoné (1981); short break; Stria (1977); Voices (2011)

BIOS

JOHN CHOWNING. Composer John M. Chowning was born in Salem, New Jersey in 1934. Following military service and studies at Wittenberg University, he studied composition in Paris for three years with Nadia Boulanger. In 1964, with the help of Max Mathews then at Bell Telephone Laboratories and David Poole of Stanford, he set up a computer music program using the computer system of Stanford University’s Artificial Intelligence Laboratory. Beginning the same year he began the research leading to the first generalized sound localization algorithm implemented in a quad format in 1966. He received the doctorate in composition from Stanford University in 1966, where he studied with Leland Smith. The following year he discovered the frequency modulation synthesis (FM) algorithm, licensed to Yamaha, that led to the most successful synthesis engines in the history of electronic instruments. His three early pieces, Turenas (1972), Stria (1977) and Phoné (1981), make use of his localization/spatialization and FM synthesis algorithms in uniquely different ways. The Computer Music Journal, 31(3), 2007 published four papers about Stria including analyses, its history and reconstruction. After more than twenty years of hearing problems, Chowning was finally able to compose again beginning in 2004, when he began work on Voices, for solo soprano and interactive computer using MaxMSP. Chowning was elected to the American Academy of Arts and Sciences in 1988. He was awarded the Honorary Doctor of Music by Wittenberg University in 1990. -

A Rationale for Faust Design Decisions

A Rationale for Faust Design Decisions. Yann Orlarey, Stéphane Letz, Dominique Fober (GRAME, Centre National de Création Musicale, France), Albert Gräf (Computer Music Research Group, U. of Mainz, Germany), Pierre Jouvelot (MINES ParisTech, PSL Research University, France). DSLDI, October 20, 2014.

1. Music DSLs Quick Overview

Some Music DSLs: 4CED, Adagio, AML, AMPLE, Arctic, Autoklang, Bang, Canon, CHANT, Chuck, CLCE, CMIX, Cmusic, CMUSIC, Common Lisp Music, Common Music, Common Music Notation, Csound, CyberBand, DARMS, DCMP, DMIX, Elody, EsAC, Euterpea, Extempore, Faust, Flavors Band, Fluxus, FOIL, FORMES, FORMULA, Fugue, Gibber, GROOVE, GUIDO, HARP, Haskore, HMSL, INV, invokator, KERN, Keynote, Kyma, LOCO, LPC, Mars, MASC, Max, MCL, MidiLisp, MidiLogo, MODE, MOM, Moxc, MSX, MUS10, MUS8, MUSCMP, MuseData, MusES, MUSIC 10, MUSIC 11, MUSIC 360, MUSIC 4B, MUSIC 4BF, MUSIC 4F, MUSIC 6, MUSIC III/IV/V, Music1000, MUSIC7, MusicLogo, Musictex, MusicXML, MUSIGOL, Musixtex, NIFF, NOTELIST, Nyquist, OPAL, OpenMusic, Organum1, Outperform, Overtone, Patchwork, PE, PILE, Pla, PLACOMP, PLAY1, PLAY2, PMX, POCO, POD6, POD7, PROD, Puredata, PWGL, Ravel, SALIERI, SCORE, ScoreFile, SCRIPT, SIREN, SMDL, SMOKE, SSP, SSSP, ST, Supercollider, Symbolic Composer, Tidal.

First Music DSLs, Music III/IV/V (Max Mathews)

- 1960: Music III introduces the concept of Unit Generators
- 1963: Music IV, a port of Music III using a macro assembler
- 1968: Music V written in Fortran (inner loops of UG in assembler)

FM synthesis coded in CMusic:

    ins 0 FM;
    osc b1 p9 p10 f2 d;
    adn b1 b1 p8;
    osc b1 b1 p7 f1 d;
    adn b1 b1 p6;
    osc b2 p5 p10 f3 d;
    osc b1 b2 b1 f1 d;
    out b1;

Csound. Originally developed