"Interactive Musical Partner: a System for Human/Computer Duo Improvisations" (2013)

Recommended publications

Creative Synthesis: Collaborative Cross Disciplinary Studies in the Arts

Western Oregon University Digital Commons@WOU Honors Senior Theses/Projects Student Scholarship 6-1-2012 Creative Synthesis: Collaborative Cross Disciplinary Studies in the Arts Ermine Todd Western Oregon University, [email protected] Follow this and additional works at: https://digitalcommons.wou.edu/honors_theses Part of the Dance Commons Recommended Citation Todd, Ermine, "Creative Synthesis: Collaborative Cross Disciplinary Studies in the Arts" (2012). Honors Senior Theses/Projects. 82. https://digitalcommons.wou.edu/honors_theses/82 This Undergraduate Honors Thesis/Project is brought to you for free and open access by the Student Scholarship at Digital Commons@WOU. It has been accepted for inclusion in Honors Senior Theses/Projects by an authorized administrator of Digital Commons@WOU. For more information, please contact [email protected], [email protected], [email protected]. Creative Synthesis: Collaborative Cross Disciplinary Studies in the Arts By Ermine Todd IV An Honors Thesis Submitted in Partial Fulfillment of the Requirements for Graduation from the Western Oregon University Honors Program Professor Darryl Thomas Thesis Advisor Dr. Gavin Keulks, Honors Program Director Western Oregon University June 2012 Acknowledgements This project would not have been possible without the tireless support of these people. My professor, Thesis Advisor and guide along my journey, Darryl Thomas. Academic advisor Sharon Oberst for always keeping the finish line in sight. Cassie Malmquist for her artistry and mastery of technical theatre. Tad Shannon for guiding the setup of Maple Hall and troubleshooting all technical requirements. Brandon Woodard for immense photographic contributions throughout the length of the project and Photography credit for my poster and program.

Anthony Braxton Five Pieces 1975

ANTHONY BRAXTON, Five Pieces 1975, Arista AL 4064 (LP). I would like to propose now, at the beginning of this discussion, that we set aside entirely the question of the ultimate worth of Anthony Braxton's music. There are those who insist that Braxton is the new Bird, Coltrane, and Ornette, the three-in-one who is singlehandedly taking the Next Step in jazz. There are others who remain unconvinced. History will decide, and while it is doing so, we can and should appreciate Braxton's music for its own immediate value, as a particularly contemporary variety of artistic expression. However, before we can sit down, take off our shoes, and place the enclosed record on our turntables, certain issues must be dealt with. People keep asking questions about Anthony Braxton, questions such as what does he think he is doing? Since these questions involve judgments we can make now, without waiting for history, we should answer them, and what better way to do so than to go directly to the man who is making the music? "Am I an improviser or a composer?" Braxton asks rhetorically, echoing more than one critical analysis of his work. "I see myself as a creative person. And the considerations determining what's really happening in the arena of improvised music imply an understanding of composition anyway. So I would say that composition and improvisation are much more closely related than is generally understood." This is exactly the sort of statement Braxton's detractors love to pounce on. Not only has the man been known to wear cardigan sweaters, smoke a pipe, and play chess; he is as interested in composing as in improvising.

ANTHONY BRAXTON Echo Echo Mirror House

VICTO cd 125. ANTHONY BRAXTON, Echo Echo Mirror House. 1. COMPOSITION NO 347 + (62'37"). SEPTET: Taylor Ho Bynum: cornet, flugelhorn, trombone, iPod; Mary Halvorson: electric guitar, iPod; Jessica Pavone: viola, violin, iPod; Jay Rozen: tuba, iPod; Aaron Siegel: percussion, vibraphone, iPod; Carl Testa: double bass, bass clarinet, iPod; Anthony Braxton: alto, soprano and sopranino saxophones, iPod, direction and composition. Recorded at the 27th Festival International de Musique Actuelle de Victoriaville on May 21, 2011. ℗ Les Disques VICTO © Les Éditions VICTORIAVILLE, SOCAN 2013. Your “Echo Echo Mirror House” piece at Victoriaville was a time-warping concert experience. My synapses were firing overtime. [Laughs] I must say, thank you. I am really happy about that performance. In this time period, we talk about avant-garde this and avant-garde that, but the post-Ayler generation is 50 years old, and the AACM came together in the ‘60s. So it’s time for new models to come together that can also integrate present-day technology and the thrust of re-structural technology into the mix of the music logics and possibility. The “Echo Echo Mirror House” music is a trans-temporal music state that connects past, present and future as one thought component. This idea is the product of the use of holistic generative template propositions that allow for 300 or 400 compositions to be written in that generative state. The “Ghost Trance” musics would be an example of the first of the holistic, generative logic template musics. The “Ghost Trance” music is concerned with telemetry and cartography, and area space measurements. With the “Echo Echo Mirror House” musics, we’re redefining the concept of elaboration.

Chick Corea Early Circle Mp3, Flac, Wma

Chick Corea, Early Circle. Genre: Jazz. Album: Early Circle. Country: US. Released: 1992. Style: Free Jazz, Contemporary Jazz. Tracklist: 1. Starp (5:20), composed by Dave Holland; 2. 73° - A Kelvin (9:12), composed by Anthony Braxton; 3. Ballad (6:43), composed by Braxton, Altschul, Corea, Holland; 4. Duet For Bass And Piano #1 (3:28), composed by Corea, Holland; 5. Duet For Bass And Piano #2 (1:40), composed by Corea, Holland; 6. Danse For Clarinet And Piano #1 (2:14), composed by Braxton, Corea; 7. Danse For Clarinet And Piano #2 (2:34), composed by Braxton, Corea; 8. Chimes I (10:19), composed by Braxton, Corea, Holland; 9. Chimes II (6:36), composed by Braxton, Corea, Holland; 10. Percussion Piece (3:25), composed by Braxton, Altschul, Corea, Holland. Companies, etc.: Recorded At – A&R Studios. Credits: Bass, Cello, Guitar – Dave Holland; Clarinet, Flute, Alto Saxophone, Soprano Saxophone, Contrabass Clarinet – Anthony Braxton; Design – Franco Caligiuri; Drums, Bass, Marimba, Percussion – Barry Altschul (tracks: 1 to 3, 10); Engineer [Recording] – Tony May; Liner Notes – Stanley Crouch; Piano, Celesta [Celeste], Percussion – Chick Corea; Producer [Original Sessions] – Sonny Lester; Reissue Producer – Michael Cuscuna; Remix [Digital] – Malcolm Addey. Notes: Recorded at A&R Studios, NYC on October 13 (#4-9) and October 19, 1970. Tracks 1 to 9 originally issued

Temporal Disunity and Structural Unity in the Music of John Coltrane 1965-67

Listening in Double Time: Temporal Disunity and Structural Unity in the Music of John Coltrane 1965-67. Marc Howard Medwin. A dissertation submitted to the faculty of the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Music. Chapel Hill 2008. Approved by: David Garcia, Allen Anderson, Mark Katz, Philip Vandermeer, Stefan Litwin. ©2008 Marc Howard Medwin ALL RIGHTS RESERVED. ABSTRACT. MARC MEDWIN: Listening in Double Time: Temporal Disunity and Structural Unity in the Music of John Coltrane 1965-67 (Under the direction of David F. Garcia). The music of John Coltrane’s last group, his 1965-67 quintet, has been misrepresented, ignored and reviled by critics, scholars and fans, primarily because it is a music built on a fundamental and very audible disunity that renders a new kind of structural unity. Many of those who study Coltrane’s music have thus far attempted to approach all elements in his last works comparatively, using harmonic and melodic models as is customary regarding more conventional jazz structures. This approach is incomplete and misleading, given the music’s conceptual underpinnings. The present study is meant to provide an analytical model with which listeners and scholars might come to terms with this music’s more radical elements. I use Coltrane’s own observations concerning his final music, Jonathan Kramer’s temporal perception theory, and Evan Parker’s perspectives on atomism and laminarity in mid-1960s British improvised music to analyze and contextualize the symbiotically related temporal disunity and resultant structural unity that typify Coltrane’s 1965-67 works.

Printcatalog Realdeal 3 DO

DISCAHOLIC auction #3 2021. OLD SCHOOL: NO JOKE! This is the 3rd list of Discaholic Auctions. Free Jazz, improvised music, jazz, experimental music, sound poetry and much more. CREATIVE MUSIC the way we need it. The way we want it! Thank you all for making the previous auctions great! The network of discaholics, collectors and related is getting extended and we are happy about that and hoping for it to be spreading even more. Let's share, let's make the connections, let's collect, let's trim our (vinyl)gardens! This specific auction is named: OLD SCHOOL: NO JOKE! Rare vinyls and more. Carefully chosen vinyls, put together by Discaholic and Ayler-completist Mats Gustafsson in collaboration with fellow Discaholic and Sun Ra-completist Björn Thorstensson. After over 33 years of trading rare records with each other, we will be offering some of the rarest and most unusual records available. For this auction we have invited electronic and conceptual-music-wizard – and Ornette Coleman-completist – Christof Kurzmann to contribute with some great objects! Our auction-lists are inspired by the great auctioneer and jazz enthusiast Roberto Castelli and his amazing auction catalogues “Jazz and Improvised Music Auction List” from waaaaay back! And most definitely inspired by our discaholic friends Johan at Tiliqua-records and Brad at Vinylvault. The Discaholic network is expanding – outer space is no limit. http://www.tiliqua-records.com/ https://vinylvault.online/ We have also invited some musicians, presenters and collectors to contribute with some records and printed materials. Among others we have Joe McPhee who has contributed with unique posters and records directly from his archive.

WXU and Hat Hut: Always in Search of the New, Werner X

Winter 2017/18. ANALOGUE AUDIO SWITZERLAND ASSOCIATION, association for the preservation and promotion of analogue music reproduction. The avant-garde label «Hat Hut». Little-known violin virtuosos and piano concertos. Beautiful classic and modern tonearms. Aus der Rille. WXU and Hat Hut: always in search of the new. Werner X. Uehlinger, Modern Jazz and Contemporary Music. By Urs Mühlemann. The Basel record label «Hat Hut» has existed for 42 years now. In that time the music industry has changed fundamentally. Label founder Werner X. Uehlinger, born in 1935 and recipient of the City of Basel's 1999 Culture Prize, weathers every storm and, with a sure instinct, keeps releasing extraordinary recordings. «Since 1975, an ear to the future»: this motto appears as the signature in all e-mails from Werner X. Uehlinger (also known as 'WXU'). I got to know the founder of the independent Swiss label «Hat Hut» personally more than 40 years ago; among other distinctions, the label was voted one of the five best in the world by the progressive New York paper «Village Voice» in 1990, and it enjoys international esteem not only in avant-garde circles. We lost sight of each other, but in the course of my musical development I kept coming across 'WXU' and his exquisite products. Now he has placed his life's work, run practically as a one-man operation, in the hands of «Outhere»; the company unites various labels for classical and contemporary music under one roof. For me that was an opportunity to get back in touch. We met in February and in May 2017 for longer conversations and also exchanged views in writing and by telephone.

Real-Time Programming and Processing of Music Signals Arshia Cont

Real-time Programming and Processing of Music Signals. Arshia Cont. To cite this version: Arshia Cont. Real-time Programming and Processing of Music Signals. Sound [cs.SD]. Université Pierre et Marie Curie - Paris VI, 2013. tel-00829771. HAL Id: tel-00829771, https://tel.archives-ouvertes.fr/tel-00829771. Submitted on 3 Jun 2013. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Realtime Programming & Processing of Music Signals by ARSHIA CONT, Ircam-CNRS-UPMC Mixed Research Unit, MuTant Team-Project (INRIA), Musical Representations Team, Ircam-Centre Pompidou, 1 Place Igor Stravinsky, 75004 Paris, France. Habilitation à diriger la recherche. Defended on May 30th before a jury composed of: Gérard Berry, Collège de France, Professor; Roger Dannenberg, Carnegie Mellon University, Professor; Carlos Agon, UPMC - Ircam, Professor; François Pachet, Sony CSL, Senior Researcher; Miller Puckette, UCSD, Professor; Marco Stroppa, Composer. To Marie, the salt of my life. CONTENTS: 1. Introduction; 1.1. Synthetic Summary; 1.2. Publication List 2007-2012; 1.3. Research Advising Summary; 2. Realtime Machine Listening; 2.1. Automatic Transcription; 2.2. Automatic Alignment; 2.2.1.

Miller Puckette 1560 Elon Lane Encinitas, CA 92024 [email protected]

Miller Puckette 1560 Elon Lane Encinitas, CA 92024 [email protected] Education. B.S. (Mathematics), MIT, 1980. Ph.D. (Mathematics), Harvard, 1986. Employment history. 1982-1986 Research specialist, MIT Experimental Music Studio/MIT Media Lab 1986-1987 Research scientist, MIT Media Lab 1987-1993 Research staff member, IRCAM, Paris, France 1993-1994 Head, Real-time Applications Group, IRCAM, Paris, France 1994-1996 Assistant Professor, Music department, UCSD 1996-present Professor, Music department, UCSD Publications. 1. Puckette, M., Vercoe, B. and Stautner, J., 1981. "A real-time music11 emulator," Proceedings, International Computer Music Conference. (Abstract only.) P. 292. 2. Stautner, J., Vercoe, B., and Puckette, M. 1981. "A four-channel reverberation network," Proceedings, International Computer Music Conference, pp. 265-279. 3. Stautner, J. and Puckette, M. 1982. "Designing Multichannel Reverberators," Computer Music Journal 3(2), (pp. 52-65.) Reprinted in The Music Machine, ed. Curtis Roads. Cambridge, The MIT Press, 1989. (pp. 569-582.) 4. Puckette, M., 1983. "MUSIC-500: a new, real-time Digital Synthesis system." International Computer Music Conference. (Abstract only.) 5. Puckette, M. 1984. "The 'M' Orchestra Language." Proceedings, International Computer Music Conference, pp. 17-20. 6. Vercoe, B. and Puckette, M. 1985. "Synthetic Rehearsal: Training the Synthetic Performer." Proceedings, International Computer Music Conference, pp. 275-278. 7. Puckette, M. 1986. "Shannon Entropy and the Central Limit Theorem." Doctoral dissertation, Harvard University, 63 pp. 8. Favreau, E., Fingerhut, M., Koechlin, O., Potacsek, P., Puckette, M., and Rowe, R. 1986. "Software Developments for the 4X real-time System." Proceedings, International Computer Music Conference, pp. 43-46. 9. Puckette, M.

Digital Developments 70'S

Digital Developments 70’s - 80’s. Hybrid Synthesis: “GROOVE” • In 1967, Max Mathews and Richard Moore at Bell Labs began to develop GROOVE (Generated Realtime Operations on Voltage-Controlled Equipment). • In 1970, the GROOVE system was unveiled at a “Music and Technology” conference in Stockholm. • GROOVE was a hybrid system which used a Honeywell DDP224 computer to store manual actions (such as twisting knobs, playing a keyboard, etc.). These stored actions were then used to control analog synthesis components in realtime. • Composers Emmanuel Ghent and Laurie Spiegel worked with GROOVE. Details of GROOVE. The GROOVE system included: - 2 large disk storage units - a tape drive - an interface for the analog devices (12 8-bit and 2 12-bit converters) - a cathode ray display unit to show the composer a visual representation of the control instructions - a large array of analog components including twelve voltage-controlled oscillators, seven voltage-controlled amplifiers, and two voltage-controlled filters. Programming language used: FORTRAN. Benefits of the GROOVE system: - 1st digitally controlled realtime system - Musical parameters could be controlled over time (not note-oriented) - Was used to control images too: in 1974, Spiegel used the GROOVE system to implement the program VAMPIRE (Video and Music Program for Interactive, Realtime Exploration) • Laurie Spiegel at the GROOVE console at Bell Labs (mid 70s). The 1st Digital Synthesizer: “The Synclavier” • In 1972, composer Jon Appleton, the Founder and Director of the Bregman Electronic Music Studio at Dartmouth, wanted to find a way to control a Moog synthesizer with a computer. • He raised this idea with Sydney Alonso, a professor of Engineering at Dartmouth, and Cameron Jones, a student in music and computer science at Dartmouth.

Richard Teitelbaum

RICHARD TEITELBAUM. Electro-Acoustic Chamber Music. February 27-28, 1979, 8:30pm. $3.50 / $2.00 members / TDF Music. The Kitchen Center, 484 Broome Street. On February 27-28, The Kitchen Center will present works by Richard Teitelbaum. Performers of the compositions and improvisations on the program include George Lewis, trombone and electronics; Reihi Sano, shakuhachi; and Richard Teitelbaum, PolyMoog, MicroMoog and modular Moog synthesizers. The program of electro-acoustic chamber music opens with "Blends," a piece for shakuhachi and synthesizers featuring the playing of Reihi Sano. The piece was composed and first performed in 1977, while Teitelbaum was studying in Japan on a Fulbright scholarship. Reihi Sano, who is currently an artist-in-residence at Wesleyan University, has a virtuoso command of the shakuhachi, the end-blown bamboo flute. This performance of "Blends" is an American premiere. Similarly, a new work with George Lewis incorporates Lewis's prodigious command of the trombone and live electronics in a semi-improvisational piece. George Lewis has been involved with the Association for the Advancement of Creative Musicians since 1971, and has played with musicians as diverse as Anthony Braxton, Phil Niblock and Count Basie, in addition to studying philosophy at Yale University. Teitelbaum and Lewis have played at Axis In SoHo, Studio RivBea and the Montreal Museum of Fine Arts. An extensive synthesizer solo by Teitelbaum, entitled "Shrine" (1976-78), rounds out the concert program. Richard Teitelbaum is one of the prime movers of live electronic music. Classically trained, and with a Master's degree in Music from Yale, he studied in Italy with Luigi Nono.

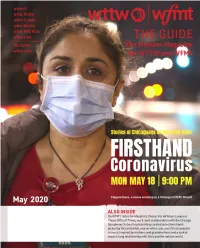

Wwciguide May 2020.Pdf

From the President & CEO. Dear Member, Thank you for your viewership, listenership, and continued support of WTTW and WFMT. Now more than ever, we believe it is vital that you can turn to us for trusted news and information, and high quality educational and entertaining programming whenever and wherever you want it. We have developed our May schedule with this in mind. On WTTW, explore the personal, firsthand perspectives of people whose lives have been upended by COVID-19 in Chicago in FIRSTHAND: Coronavirus. This new series of stories produced and released on wttw.com and our social media channels has been packaged into a one-hour special for television that will premiere on May 18 at 9:00 pm. Also, settle in for the new two-night special Asian Americans to commemorate Asian Pacific American Heritage Month. As America becomes more diverse, and more divided while facing unimaginable challenges, this film focuses a new lens on U.S. history and the ongoing role that Asian Americans have played. On wttw.com, discover and learn about Chicago’s Japanese Culture Center established in 1977 in Chicago by Aikido Shihan (Teacher of Teachers) and Zen Master Fumio Toyoda to make the crafts, philosophical riches, and martial arts of Japan available to Chicagoans. The Guide: The Member Magazine for WTTW and WFMT. Renée Crown Public Media Center, 5400 North Saint Louis Avenue, Chicago, Illinois 60625. Main Switchboard: (773) 583-5000. Member and Viewer Services: (773) 509-1111 x 6. Websites: wttw.com, wfmt.com. Publisher: Anne Gleason. Art Director: Tom Peth. WTTW Contributors: Julia Maish, Lisa Tipton. WFMT Contributors: