Yixin Zhu July 1, 2021



Recommended publications

-

Deep Learning of Neuromuscular Control for Biomechanical Human Animation

Deep Learning of Neuromuscular Control for Biomechanical Human Animation Masaki Nakada and Demetri Terzopoulos Computer Science Department University of California, Los Angeles Abstract. Increasingly complex physics-based models enhance the realism of character animation in computer graphics, but they pose difficult motor control challenges. This is especially the case when controlling a biomechanically simulated virtual human with an anatomically realistic structure that is actuated in a natural manner by a multitude of contractile muscles. Graphics researchers have pursued machine learning approaches to neuromuscular control, but traditional neural network learning methods suffer limitations when applied to complex biomechanical models and their associated high-dimensional training datasets. We demonstrate that "deep learning" is a useful approach to training neuromuscular controllers for biomechanical character animation. In particular, we propose a deep neural network architecture that can effectively and efficiently control (online) a dynamic musculoskeletal model of the human neck-head-face complex after having learned (offline) a high-dimensional map relating head orientation changes to neck muscle activations. To our knowledge, this is the first application of deep learning to biomechanical human animation with a muscle-driven model. 1 Introduction The modeling of graphical characters based on human anatomy is becoming increasingly important in the field of computer animation. Progressive fidelity in biomechanical modeling should, in principle, result in more realistic human animation. Given realistic biomechanical models, however, we must confront a variety of difficult motor control problems due to the complexity of human anatomy. In conjunction with the modeling of skeletal muscle [1,2], existing work in biomechanical human modeling has addressed the hand [3,4,5], torso [6,7,8], face [9,10,11], neck [12], etc. -

Natural Language Interaction with Explainable AI Models Arjun R Akula1, Sinisa Todorovic2, Joyce Y Chai3 and Song-Chun Zhu4

Natural Language Interaction with Explainable AI Models Arjun R Akula1, Sinisa Todorovic2, Joyce Y Chai3 and Song-Chun Zhu4 1,4University of California, Los Angeles 2Oregon State University 3Michigan State University Abstract This paper presents an explainable AI (XAI) system that provides explanations for its predictions. The system consists of two key components – namely, the prediction And-Or graph (AOG) model for recognizing and localizing concepts of interest in input data, and the XAI model for providing explanations to the user about the AOG’s predictions. In this work, we focus on the XAI model specified to interact with the user in natural language, whereas the AOG’s predictions are considered given and represented by the corresponding parse graphs (pg’s) of the AOG. Our XAI model takes pg’s as input and provides answers to the user’s questions using the following types of reasoning: direct evidence (e.g., detection scores), part-based inference (e.g., detected parts provide evidence for the concept asked), and other evidences from spatiotemporal

Figure 1: Two frames (scenes) of a video: (a) top-left image (scene1) shows two persons sitting at the reception and others entering the auditorium and (b) top-right (scene2) image people running out of an auditorium. Bottom-left shows the AOG parse graph (pg) for the top-left image and Bottom-right shows the pg for the top-right image

[...] medical diagnosis domains (Imran et al., 2018; Hatamizadeh et al., 2019)). Consider for example, two frames (scenes) of a video shown in Figure 1. -

Phd Thesis, Technical University of Denmark (DTU)

UCLA UCLA Electronic Theses and Dissertations Title An Online Collaborative Ecosystem for Educational Computer Graphics Permalink https://escholarship.org/uc/item/1h68m5hg Author Ridge, Garett Douglas Publication Date 2018 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA Los Angeles An Online Collaborative Ecosystem for Educational Computer Graphics A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Computer Science by Garett Douglas Ridge 2018 © Copyright by Garett Douglas Ridge 2018 ABSTRACT OF THE DISSERTATION An Online Collaborative Ecosystem for Educational Computer Graphics by Garett Douglas Ridge Doctor of Philosophy in Computer Science University of California, Los Angeles, 2018 Professor Demetri Terzopoulos, Chair This thesis builds upon existing introductory courses in the field of Computer Graphics, aiming to lower the excessive barrier of entry to graphics programming. We introduce tiny-graphics.js, a new software library for implementing educational WebGL projects in the classroom. To mitigate the difficulty of creating graphics-enabled websites and online games, we furthermore introduce the Encyclopedia of Code, a world wide web framework that encourages visitors to learn graphics, build educational graphical demos and articles, host them online, and organize them by topic. We provide our own examples that include custom educational games and tutorial articles, which are already being successfully employed to ease our undergraduate graphics students into the course material. Some of our modules expose students to new graphics techniques, while others are prototypes for new modes of online learning, collaboration, and computing. These include our "Active Textbooks" (educational 3D demos or games embedded in literate-programming-like articles). -

Snakes: Active Contour Models

International Journal of Computer Vision, 321-331 (1988) © 1987 Kluwer Academic Publishers, Boston, Manufactured in The Netherlands Snakes: Active Contour Models MICHAEL KASS, ANDREW WITKIN, and DEMETRI TERZOPOULOS Schlumberger Palo Alto Research, 3340 Hillview Ave., Palo Alto, CA 94304 Abstract A snake is an energy-minimizing spline guided by external constraint forces and influenced by image forces that pull it toward features such as lines and edges. Snakes are active contour models: they lock onto nearby edges, localizing them accurately. Scale-space continuation can be used to enlarge the capture region surrounding a feature. Snakes provide a unified account of a number of visual problems, including detection of edges, lines, and subjective contours; motion tracking; and stereo matching. We have used snakes successfully for interactive interpretation, in which user-imposed constraint forces guide the snake near features of interest. 1 Introduction In recent computational vision research, low-level tasks such as edge or line detection, stereo matching, and motion tracking have been widely regarded as autonomous bottom-up processes. Marr and Nishihara [11], in a strong statement of this view, say that up to the 2.5D sketch, no “higher-level” information is yet brought to bear: [...] organizations. By adding suitable energy terms to the minimization, it is possible for a user to push the model out of a local minimum toward the desired solution. The result is an active model that falls into the desired solution when placed near it. Energy minimizing models have a rich history in vision going back at least to Sperling’s stereo model [16]. -

Lecture Notes in Computer Science 5535 Commenced Publication in 1973 Founding and Former Series Editors: Gerhard Goos, Juris Hartmanis, and Jan Van Leeuwen

Lecture Notes in Computer Science 5535 Commenced Publication in 1973 Founding and Former Series Editors: Gerhard Goos, Juris Hartmanis, and Jan van Leeuwen Editorial Board David Hutchison Lancaster University, UK Takeo Kanade Carnegie Mellon University, Pittsburgh, PA, USA Josef Kittler University of Surrey, Guildford, UK Jon M. Kleinberg Cornell University, Ithaca, NY, USA Alfred Kobsa University of California, Irvine, CA, USA Friedemann Mattern ETH Zurich, Switzerland John C. Mitchell Stanford University, CA, USA Moni Naor Weizmann Institute of Science, Rehovot, Israel Oscar Nierstrasz University of Bern, Switzerland C. Pandu Rangan Indian Institute of Technology, Madras, India Bernhard Steffen University of Dortmund, Germany Madhu Sudan Massachusetts Institute of Technology, MA, USA Demetri Terzopoulos University of California, Los Angeles, CA, USA Doug Tygar University of California, Berkeley, CA, USA Gerhard Weikum Max-Planck Institute of Computer Science, Saarbruecken, Germany Geert-Jan Houben Gord McCalla Fabio Pianesi Massimo Zancanaro (Eds.) User Modeling, Adaptation, and Personalization 17th International Conference, UMAP 2009 formerly UM and AH Trento, Italy, June 22-26, 2009 Proceedings Volume Editors Geert-Jan Houben Delft University of Technology PO Box 5031, 2600 GA Delft, The Netherlands Gord McCalla University of Saskatchewan Saskatoon, Saskatchewan S7N 5E2, Canada Fabio Pianesi Massimo Zancanaro FBK-irst via Sommarive 18, 38050 Povo, Italy E-mail: {pianesi,zancana}@fbk.eu Library of Congress Control Number: 2009928433 CR Subject Classification (1998): H.5.2, I.2, H.4, I.6, J.4, K.4, K.6 LNCS Sublibrary: SL 3 – Information Systems and Application, incl. -

Motion and Color Analysis for Animat Perception

From: AAAI-96 Proceedings. Copyright © 1996, AAAI (www.aaai.org). All rights reserved. Motion and Color Analysis for Animat Perception Tamer F. Rabie and Demetri Terzopoulos Department of Computer Science, University of Toronto 10 King’s College Road, Toronto, Ontario, M5S 3G4, Canada e-mail: {tamer|dt}@cs.toronto.edu Abstract We propose novel gaze control algorithms for active perception in mobile autonomous agents with directable, foveated vision sensors. Our agents are realistic artificial animals, or animats, situated in physics-based virtual worlds. Their active perception systems continuously analyze photorealistic retinal image streams to glean information useful for controlling the animat’s eyes and body. The vision system computes optical flow and segments moving targets in the low-resolution visual periphery. It then matches segmented targets against mental models of colored objects of interest. The eyes saccade to increase acuity by foveating objects. The resulting sensorimotor control loop supports complex behaviors, such as predation. Introduction Animals are active observers of their environment (Gibson 1979). This fact has inspired a trend in the computer vision field popularly known as “active vision” (Bajcsy 1988; Ballard 1991; Swain & Stricker 1993). Unfortunately, efforts to create active vision systems for physical robots have been hampered by hardware and processor limitations. [...] computational efficiency through economization of photoreceptors and focus of attention, but it forces the visual system to solve problems that do not generally arise with a uniform field of view. A key problem is determining where to redirect the

Figure 1: Artificial fishes swimming among aquatic plants in a physics-based virtual marine environment. -

Fast Approximate Energy Minimization Via Graph Cuts

Proceedings of “International Conference on Computer Vision”, Kerkyra, Greece, September 1999 vol.I, p.377 Fast Approximate Energy Minimization via Graph Cuts Yuri Boykov Olga Veksler Ramin Zabih Computer Science Department Cornell University Ithaca, NY 14853 Abstract In this paper we address the problem of minimizing a large class of energy functions that occur in early vision. The major restriction is that the energy function’s smoothness term must only involve pairs of pixels. We propose two algorithms that use graph cuts to compute a local minimum even when very large moves are allowed. The first move we consider is an α-β-swap: for a pair of labels α, β, this move exchanges the labels between an arbitrary set of pixels labeled α and another arbitrary set labeled β. Our first algorithm generates a labeling such that there is no swap move that decreases the energy. The second move we consider is an α-expansion: for a label α, this move assigns an arbitrary set of pixels the label α. [...] piecewise smooth, while $E_{data}$ measures the disagreement between $f$ and the observed data. Many different energy functions have been proposed in the literature. The form of $E_{data}$ is typically $E_{data}(f) = \sum_{p \in \mathcal{P}} D_p(f_p)$, where $D_p$ measures how appropriate a label is for the pixel $p$ given the observed data. In image restoration, for example, $D_p(f_p)$ is typically $(f_p - i_p)^2$, where $i_p$ is the observed intensity of the pixel $p$. The choice of $E_{smooth}$ is a critical issue, and many different functions have been proposed. For example, in standard regularization-based vision -

NYU GSAS Bulletin 2003

2003-2005 Graduate School of Arts and Science www.nyu.edu/gsas

Message from the Dean The paths of human possibility for students, as they create and recreate their lives, make this an exciting time for the Graduate School of Arts and Science at New York University. As advocates for advanced inquiry and creativity, we greatly prize the curious and exceptionally competent student. We value this moment to introduce students and others to the intellectual vision of the Graduate School and the programs and faculty that embody that vision.

Graduate School of Arts and Science New York University 6 Washington Square North New York, NY 10003-6668 Web site: www.nyu.edu/gsas Catharine R. Stimpson, B.A.; B.A., M.A. [Cantab.], Ph.D.; hon.: D.H.L., Hum.D., Litt.D., LL.D. Dean T. James Matthews, B.A., M.A., Ph.D. Vice Dean Roberta S. Popik, B.A., M.S., Ph.D. Associate Dean for Graduate Enrollment Services

Schools and Colleges of New York University OTHER NEW YORK UNIVERSITY SCHOOLS College of Arts and Science New York University 22 Washington Square North New York, NY 10011-9191 Admissions: 212-998-4500 School of Law New York University Vanderbilt Hall 40 Washington Square South New York, NY 10012-1099 Admissions: 212-998-6060 School of Medicine and Post-Graduate Medical School Robert F. Wagner Graduate School of Public Service New York University 4 Washington Square North New York, NY 10003-6671 Admissions: 212-998-7414 Shirley M. Ehrenkranz School of Social Work New York University 1 Washington Square North New York, NY 10003-6654 Admissions: 212-998-5910 Tisch School of the Arts New York University 721 Broadway, Room 701 New York, NY 10003-6807 -

Image Segmentation Using Deep Learning: a Survey

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TPAMI.2021.3059968, IEEE Transactions on Pattern Analysis and Machine Intelligence TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. ??, NO.??, ?? 2021 1 Image Segmentation Using Deep Learning: A Survey Shervin Minaee, Member, IEEE, Yuri Boykov, Member, IEEE, Fatih Porikli, Fellow, IEEE, Antonio Plaza, Fellow, IEEE, Nasser Kehtarnavaz, Fellow, IEEE, and Demetri Terzopoulos, Fellow, IEEE Abstract—Image segmentation is a key task in computer vision and image processing with important applications such as scene understanding, medical image analysis, robotic perception, video surveillance, augmented reality, and image compression, among others, and numerous segmentation algorithms are found in the literature. Against this backdrop, the broad success of Deep Learning (DL) has prompted the development of new image segmentation approaches leveraging DL models. We provide a comprehensive review of this recent literature, covering the spectrum of pioneering efforts in semantic and instance segmentation, including convolutional pixel-labeling networks, encoder-decoder architectures, multiscale and pyramid-based approaches, recurrent networks, visual attention models, and generative models in adversarial settings. We investigate the relationships, strengths, and challenges of these DL-based segmentation models, examine the widely used datasets, compare performances, and discuss promising research directions. Index Terms—Image segmentation, deep learning, convolutional neural networks, encoder-decoder models, recurrent models, generative models, semantic segmentation, instance segmentation, panoptic segmentation, medical image segmentation. 
1 INTRODUCTION Image segmentation has been a fundamental problem in computer vision since the early days of the field [1] (Chapter 8). -

Towards High‑Quality 3D Telepresence with Commodity RGBD Camera

This document is downloaded from DR‑NTU (https://dr.ntu.edu.sg) Nanyang Technological University, Singapore. Towards high‑quality 3D telepresence with commodity RGBD camera Zhao, Mengyao 2018 Zhao, M. (2018). Towards high‑quality 3D telepresence with commodity RGBD camera. Doctoral thesis, Nanyang Technological University, Singapore. http://hdl.handle.net/10356/73161 https://doi.org/10.32657/10356/73161 Downloaded on 24 Sep 2021 03:41:50 SGT NANYANG TECHNOLOGICAL UNIVERSITY Towards High-quality 3D Telepresence with Commodity RGBD Camera A thesis submitted to the Nanyang Technological University in partial fulfilment of the requirement for the degree of Doctor of Philosophy by Zhao Mengyao 2016 Abstract 3D telepresence aims at providing remote participants to have the perception of being present at the same physical space, which cannot be achieved by any 2D teleconference system. The success of 3D telepresence will greatly enhance communications, allowing much better user experience, which could stimulate many applications including teleconference, telesurgery, remote education, etc. Despite years of study, 3D telepresence research still faces many challenges such as high system cost, hard to achieve real-time performance with consumer-level hardware and high computation requirement, costly to obtain depth data, hard to extracting 3D people in real-time with high quality and difficult for 3D scene replacement and composition. The emerging of consumer-grade range cameras, such as Microsoft Kinect, which provides convenient and low-cost acquisition of 3D depth in real-time, accelerate many multimedia applications. In this thesis, we make a few attempts, aim at improving the quality of 3D telepresence with commodity RGBD camera. -

UCLA UCLA Electronic Theses and Dissertations

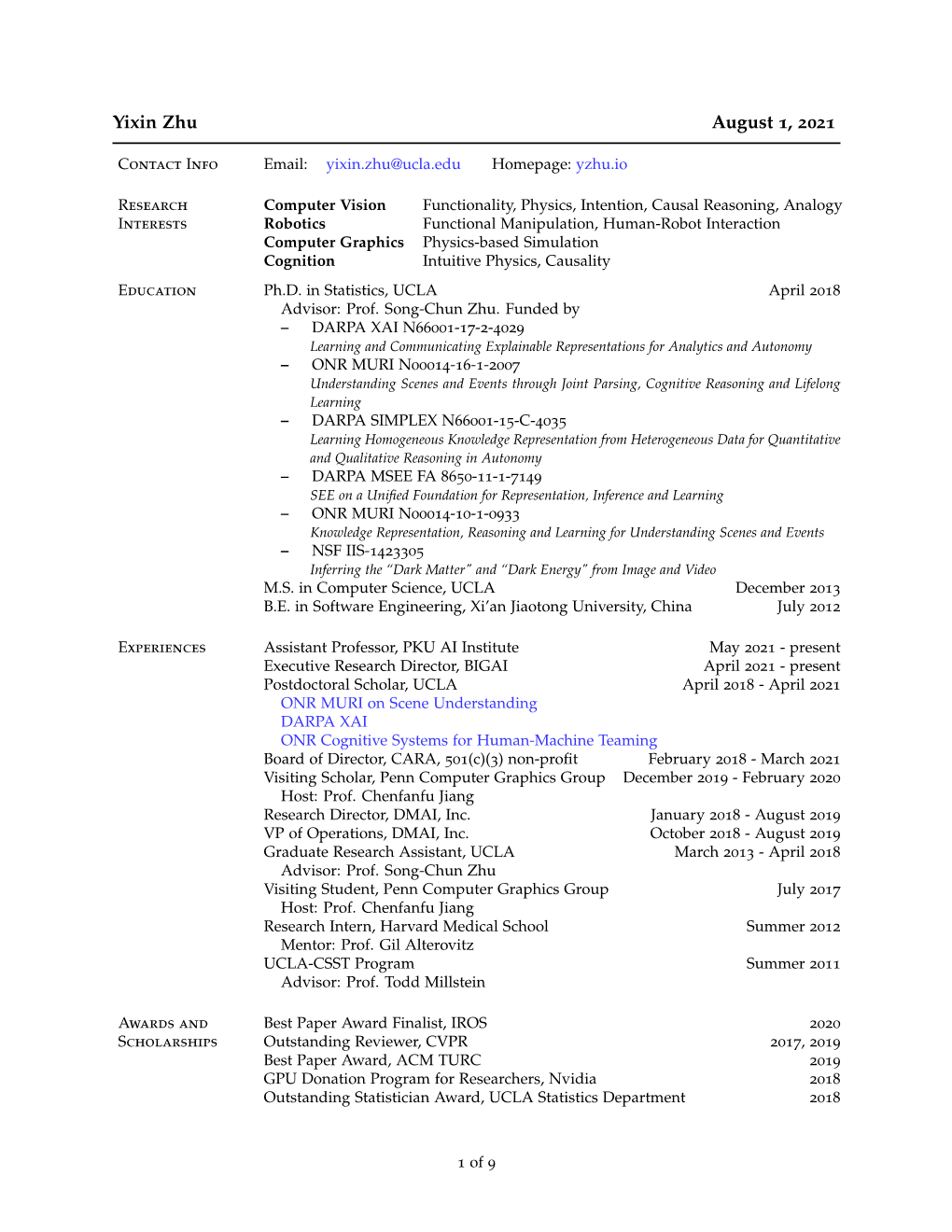

UCLA UCLA Electronic Theses and Dissertations Title Visual Commonsense Reasoning: Functionality, Physics, Causality, and Utility Permalink https://escholarship.org/uc/item/7sm0389z Author Zhu, Yixin Publication Date 2018 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA Los Angeles Visual Commonsense Reasoning: Functionality, Physics, Causality, and Utility A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Statistics by Yixin Zhu 2018 © Copyright by Yixin Zhu 2018 ABSTRACT OF THE DISSERTATION Visual Commonsense Reasoning: Functionality, Physics, Causality, and Utility by Yixin Zhu Doctor of Philosophy in Statistics University of California, Los Angeles, 2018 Professor Song-Chun Zhu, Chair Reasoning about commonsense from visual input remains an important and challenging problem in the field of computer vision. It is important because the ability to reason about commonsense, plan and act accordingly, represents the most distinct competence that tells human apart from other animals: the ability of analogy. It is challenging partially due to the absence of the observations of all the typical examples in a given category, in which the objects often present enormous intra-class variations, leading to a long-tail distribution in the dimensions of appearance and geometry. This dissertation focuses on four largely orthogonal dimensions (functionality, physics, causality, and utility) in computer vision, robotics, and cognitive science, and it makes six major contributions: 1. We rethink object recognition from the perspective of an agent: how objects are used as "tools" or "containers" in actions to accomplish a "task". Here a task is defined as changing the physical states of a target object by actions, such as, cracking a nut or painting a wall. -

Impulse-Based Dynamic Simulation of Rigid Body Systems

Impulse-based Dynamic Simulation of Rigid Body Systems by Brian Vincent Mirtich B.S.E. (Arizona State University) M.S. (University of California, Berkeley) A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the GRADUATE DIVISION of the UNIVERSITY of CALIFORNIA at BERKELEY Committee in charge: Professor John F. Canny, Chair Professor David Forsyth Professor Alan Weinstein Fall The dissertation of Brian Vincent Mirtich is approved: Chair Date Date Date University of California at Berkeley Fall Impulse-based Dynamic Simulation of Rigid Body Systems Copyright Fall by Brian Vincent Mirtich Abstract Impulse-based Dynamic Simulation of Rigid Body Systems by Brian Vincent Mirtich Doctor of Philosophy in Computer Science University of California at Berkeley Professor John F. Canny, Chair Dynamic simulation is a powerful application of today's computers, with uses in fields ranging from engineering to animation to virtual reality. This thesis introduces a new paradigm for dynamic simulation called impulse-based simulation. The paradigm is designed to meet the twin goals of physical accuracy and computational efficiency. Obtaining physically accurate results is often the whole reason for performing a simulation; however, in many applications computational efficiency is equally important. Impulse-based simulation is designed to simulate moderately complex systems at interactive speeds. To achieve this performance, certain restrictions are made on the systems to be simulated. The strongest