UC Berkeley UC Berkeley Phonlab Annual Report

Recommended publications

-

Dissimilation in Grammar and the Lexicon 381

In Paul de Lacy (ed.), The Cambridge Handbook of Phonology, CUP. 16 Dissimilation in grammar and the lexicon. John D. Alderete, Stefan A. Frisch. 16.1 Introduction. Dissimilation is the systematic avoidance of two similar sound structures in relatively close proximity to each other. It is exhibited in static generalizations over the lexicon, where combinations of similar sounds are systematically avoided in lexical items, like the avoidance of two homorganic consonants in Arabic roots (Greenberg 1950; McCarthy 1994). Dissimilation is also observed in phonological processes in which the target and trigger become less alike phonologically. In Tashlhiyt Berber, for example, two primary labial consonants in the same derived stem trigger a process of delabialization: /m-kaddab/ → [n-kaddab] 'consider a liar (reciprocal)' (Elmedlaoui 1992, Jebbour 1985). Dissimilation has been an important empirical testing ground for many of the central research paradigms in modern linguistics. For example, dissimilatory phenomena have been crucial to the development of theories of feature geometry and feature specification in autosegmental phonology (McCarthy 1986, 1988, Padgett 1995, Yip 1989b). As the results emerging from this research were incorporated into constraint-based theories of phonology like Optimality Theory (Prince & Smolensky 2004), dissimilation became an important problem in the study of phonological markedness and constraint composition (Alderete 1997, Ito & Mester 2003). In a different line of research, dissimilation has been argued to have its seeds in the phonetics of sound change, restricted by the same vocal tract constraints involved in speech production and perception (Ohala 1981 et seq.). -

Long-Distance /R/-Dissimilation in American English

Long-Distance /r/-Dissimilation in American English. Nancy Hall. August 14, 2009. 1 Introduction. In many varieties of American English, it is possible to drop one /r/ from certain words that contain two /r/s, such as su(r)prise, pa(r)ticular, gove(r)nor, and co(r)ner. This type of /r/-deletion is done by speakers who are basically ‘rhotic’; that is, who generally do not drop /r/ in any other position. It is a type of dissimilation, because it avoids the presence of multiple rhotics within a word. This paper has two goals. The first is to expand the description of American /r/-dissimilation by bringing together previously published examples of the process with new examples from an elicitation study and from corpora. This data set reveals new generalizations about the phonological environments that favor dissimilation. The second goal is to contribute to the long-running debate over why and how dissimilation happens, and particularly long-distance dissimilation. There is dispute over whether long-distance dissimilation is part of the grammar at all, and whether its functional grounding is a matter of articulatory constraints, processing constraints, or perception. Data from American /r/-dissimilation are especially important for this debate, because the process is active, it is not restricted to only a few morphemes, and it occurs in a living language whose phonetics can be studied. Arguments in the literature are more often based on ancient diachronic dissimilation processes, or on processes that apply synchronically only in limited morphological contexts (and hence are likely fossilized remnants of once wider patterns). -

Chapter One Phonetic Change

CHAPTER ONE: PHONETIC CHANGE. The investigation of the nature and the types of changes that affect the sounds of a language is the most highly developed area of the study of language change. The term sound change is used to refer, in the broadest sense, to alterations in the phonetic shape of segments and suprasegmental features that result from the operation of phonological processes. The phonetic makeup of given morphemes or words or sets of morphemes or words also may undergo change as a by-product of alterations in the grammatical patterns of a language. Sound change is used generally to refer only to those phonetic changes that affect all occurrences of a given sound or class of sounds (like the class of voiceless stops) under specifiable phonetic conditions. It is important to distinguish between the use of the term sound change as it refers to phonetic processes in a historical context, on the one hand, and as it refers to phonetic correspondences on the other. By phonetic processes we refer to the replacement of a sound or a sequence of sounds presenting some articulatory difficulty by another sound or sequence lacking that difficulty. A phonetic correspondence can be said to exist between a sound at one point in the history of a language and the sound that is its direct descendent at any subsequent point in the history of that language. A phonetic correspondence often reflects the results of several phonetic processes that have affected a segment serially. Although phonetic processes are synchronic phenomena, they often have diachronic consequences. -

Begus Gasper

Post-Nasal Devoicing and a Probabilistic Model of Phonological Typology. Gašper Beguš. Abstract: This paper addresses one of the most contested issues in phonology: the derivation of phonological typology. The phenomenon of post-nasal devoicing brings new insights into the standard typological discussion. This alternation, “unnatural” in the sense that it operates against a universal phonetic tendency, has been reported to exist both as a sound change and as a synchronic phonological process. I bring together eight identified cases of post-nasal devoicing and point to common patterns among them. Based on these patterns, I argue that post-nasal devoicing does not derive from a single atypical sound change, but rather from a set of two or three separate sound changes, each of which is natural and well-motivated. When these sound changes occur in combination, they give rise to apparent post-nasal devoicing. Evidence from both historical and dialectal data is brought to bear to create a model for explaining future instances of apparent unnatural alternations. By showing that sound change does not operate against universal phonetic tendency, I strengthen the empirical case for the generalization that any single instance of sound change is always phonetically motivated and natural, but that a combination of such sound changes can lead to unnatural alternations in a language’s synchronic grammar. Based on these findings, I propose a new probabilistic model for explaining phonological typology within the channel bias approach: on this model, unnatural alternations will always be rare, because the relative probability that a combination of sound changes will occur collectively is always smaller than the probability that a single sound change will occur. -
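The closing claim of this abstract can be illustrated with a small numeric sketch. It assumes, purely for illustration, that the individual sound changes are independent, so that the probability of a combination is the product of the individual probabilities. The function name and the probability values are hypothetical and are not taken from the paper.

```python
from math import prod


def combination_probability(change_probs):
    """Probability that all listed sound changes occur, assuming independence."""
    for p in change_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must lie in [0, 1]")
    return prod(change_probs)


# Hypothetical probabilities for three natural sound changes.
single_changes = [0.10, 0.20, 0.05]
combined = combination_probability(single_changes)

# Under these assumptions the combination is never more likely
# than its least likely component change.
print(combined <= min(single_changes))
```

Under the independence assumption the product can only shrink as more changes are required, which is one simple way to read the abstract's statement that unnatural alternations should be rare.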

Some English Words Illustrating the Great Vowel Shift

Some English words illustrating the Great Vowel Shift.

| | ca. 1400 | ca. 1500 | ca. 1600 | present |
|---|---|---|---|---|
| ‘bite’ | bi:tə | bəit | bəit | baIt |
| ‘beet’ | be:t | bi:t | bi:t | bi:t |
| ‘beat’ | bɛ:tə | be:t | be:t ~ bi:t | bi:t |
| ‘abate’ | aba:tə | aba:t > abɛ:t | əbe:t | əbeIt |
| ‘boat’ | bɔ:t | bo:t | bo:t | boUt |
| ‘boot’ | bo:t | bu:t | bu:t | bu:t |
| ‘about’ | abu:tə | abəut | əbəut | əbaUt |

Note that, while Chaucer’s pronunciation of the long vowels was quite different from ours, Shakespeare’s pronunciation was similar enough to ours that with a little practice we would probably understand his plays even in the original pronunciation, at least no worse than we do in our own pronunciation! This was mostly an unconditioned change; almost all the words that appear to have escaped it either no longer had long vowels at the time the change occurred or else entered the language later. However, there was one restriction: /u:/ was not diphthongized when followed immediately by a labial consonant. The original pronunciation of the vowel survives without change in coop, cooper, droop, loop, stoop, troop, and tomb; in room it survives in the speech of some, while others have shortened the vowel to /U/; the vowel has been shortened and unrounded in sup, dove (the bird), shove, crumb, plum, scum, and thumb. This multiple split of long u-vowels is the most significant IRregularity in the phonological development of English; see the handout on Modern English sound changes for further discussion. -
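The correspondences in the forms listed above, together with the labial restriction on /u:/, can be sketched as a small lookup. This is only an illustration: the mapping is read off the ca. 1400 and present-day forms, the function name is hypothetical, and the set of labial consonants is an assumption, since the text does not give an inventory. The sketch also ignores the later shortening seen in words like sup and thumb.

```python
# Long vowels ca. 1400 -> present-day reflex, read off the word list above.
GREAT_VOWEL_SHIFT = {
    "i:": "aI",  # bite
    "e:": "i:",  # beet
    "ɛ:": "i:",  # beat
    "a:": "eI",  # abate
    "ɔ:": "oU",  # boat
    "o:": "u:",  # boot
    "u:": "aU",  # about
}

# Assumed inventory of labial consonants; the text does not list them.
LABIALS = {"p", "b", "m", "f", "v"}


def shift_vowel(vowel, following=""):
    """Return the present-day reflex of a ca. 1400 long vowel.

    `following` is the consonant immediately after the vowel, if any.
    """
    # The one stated restriction: /u:/ stays put before a labial.
    if vowel == "u:" and following in LABIALS:
        return "u:"
    # Vowels not covered by the mapping are returned unchanged.
    return GREAT_VOWEL_SHIFT.get(vowel, vowel)
```

For example, `shift_vowel("u:", "t")` follows the general pattern seen in about, while `shift_vowel("u:", "p")` models the blocked diphthongization described for the /u:/ words before labials.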

Syllable Structure Into Spanish, Italian & Neapolitan

Open syllable drift and the evolution of Classical Latin open and closed syllable structure into Spanish, Italian & Neapolitan. John M. Ryan, University of Northern Colorado. Introduction, Purpose, and Method: Syllable structure is the proverbial skeleton or template upon which the sounds of a given language may combine to make sequences and divisions that are licit in that language. Classical Latin (CL) is known to have exhibited both open and closed syllable structure in word-final position, as well as internally. The daughter languages of Spanish, Italian and Neapolitan, however, have evolved to manifest this dichotomy to differing degrees due to both phonological and extra-phonological realities. Purpose: to conduct a comparative syllabic analysis of The Lord’s Prayer in Classical Latin (CL), modern Spanish, Italian and Neapolitan in order to determine: 1. the distribution of open and closed syllables in terms of word position; 2. what factors, both phonological (e.g., coda deletion, apocope, syncope, degemination) and extra-phonological (e.g., raddoppiamento sintattico and other historical morphosyntactic innovations such as the emergence of articles), might explain the distribution of the data; 3. whether the data support or weaken the theory of ‘open syllable drift’ proposed by Lausberg (1976) and others to have occurred in late Latin or early Proto-Romance. Figure 1 shows the breakdown of words, syllables and syllable/word ratio for all four texts of the study. Data and Discussion: 1) Polysyllabic word-final position in The Lord’s Prayer. Figure 2 shows that in word-final position, Latin overwhelmingly favors closed syllables at 72%, while Spanish only slightly favors open syllables at 55%. 4) Historical processes producing reversal effects on CL open and closed syllable structure. Figure 5 shows five phonological processes affecting the evolution of -

Vowel Quality and Phonological Projection

Vowel Quality and Phonological Projection. Marc van Oostendorp. PhD Thesis, Tilburg University, September. Acknowledgements: The following people have helped me prepare and write this dissertation: John Alderete, Elena Anagnostopoulou, Sjef Barbiers, Outi Bat-El, Dorothee Beermann, Clemens Bennink, Adams Bodomo, Geert Booij, Hans Broekhuis, Norbert Corver, Martine Dhondt, Ruud and Henny Dhondt, Joe Emonds, Dicky Gilbers, Janet Grijzenhout, Carlos Gussenhoven, Gertjan Hakkenberg, Marco Haverkort, Lars Hellan, Ben Hermans, Bart Hollebrandse, Hanneke van Hoof, Angeliek van Hout, Roeland van Hout, Harry van der Hulst, Riny Huybregts, Rene Kager, Hans-Peter Kolb, Emiel Krahmer, David Leblanc, Winnie Lechner, Klarien van der Linde, John McCarthy, Dominique Nouveau, Rolf Noyer, Jaap and Hanny van Oostendorp, Paola Monachesi, Krisztina Polgardi, Alan Prince, Curt Rice, Henk van Riemsdijk, Iggy Roca, Sam Rosenthall, Grazyna Rowicka, Lisa Selkirk, Chris Sijtsma, Craig Thiersch, Mieke Trommelen, Ruben van der Vijver, Janneke Visser, Riet Vos, Jeroen van de Weijer, Wim Zonneveld. I want to thank them all. They have made the past four years for what it was: the most interesting and happiest period in my life until now. Contents: Introduction; The Headedness of Syllables; The Headedness Hypothesis (HH); Theoretical Background; Syllable Structure; Feature geometry; Specification and Underspecification; Skeletal tier; Model of the grammar; Optimality Theory; Data; Organisation of the thesis -

A New Test for Exemplar Theory: Varying Versus Non-Varying Words in Spanish

Pycha, Anne. 2017. A new test for exemplar theory: Varying versus non-varying words in Spanish. Glossa: a journal of general linguistics 2(1): 82. 1–31, DOI: https://doi.org/10.5334/gjgl.240. RESEARCH. A new test for exemplar theory: Varying versus non-varying words in Spanish. Anne Pycha, University of Wisconsin, Milwaukee, US. We used a Deese-Roediger-McDermott false memory paradigm to compare Spanish words in which the phonetic realization of /s/ can vary (word-medial positions: bu[s]to ~ bu[h]to ‘chest’, word-final positions: remo[s] ~ remo[h] ‘oars’) to words in which it cannot (word-initial positions: [s]opa ~ *[h]opa ‘soup’). At study, participants listened to lists of nine words that were phonological neighbors of an unheard critical item (e.g., popa, sepa, soja, etc. for the critical item sopa). At test, participants performed free recall and yes/no recognition tasks. Replicating previous work in this paradigm, results showed robust false memory effects: that is, participants were more likely to (falsely) remember a critical item than a random intrusion. When the realization of /s/ was consistent across conditions (Experiment 1), false memory rates for varying versus non-varying words did not significantly differ. However, when the realization of /s/ varied between [s] and [h] in those positions which allow it (Experiment 2), false recognition rates for varying words like busto were significantly higher than those for non-varying words like sopa. Assuming that higher false memory rates are indicative of greater lexical activation, we interpret these results to support the predictions of exemplar theory, which claims that words with heterogeneous versus homogeneous acoustic realizations should exhibit distinct patterns of activation. -

Final Weakening and Related Phenomena

FINAL WEAKENING AND RELATED PHENOMENA. Hans Henrich Hock, University of Illinois at Urbana-Champaign. 1: Final devoicing (FD). 1.1. In generative phonology, it is a generally accepted doctrine that, since word-final devoicing (WFD) is a very common and natural phenomenon, the obverse phenomenon, namely word-final voicing, should not be found in natural language. Compare for instance Postal 1968:184 ('in the context ___#, the rules always devoice rather than voice'), Stampe 1969:443-5 (final devoicing comes about as the result of a failure to suppress the (innate) process of final devoicing), Vennemann 1972:240-1 (final voicing, defined as a process increasing the complexity of affected segments, 'does not occur'). 1.2. One of the standard examples for WFD is that of German, cf. Bund, Bunde [bUnt], [bUndə]. However, Vennemann (1968:159-83 and in later publications) and, following him, Hooper (1972:539) and Hyman (1975:142) have convincingly demonstrated that in German, this process applies not only word-finally, but also syllable-finally, as in radle [ra·t$le] 'go by bike' (in some varieties of German). The standard view thus must be modified so as to recognize at least one other process, namely syllable-final devoicing (SFD). (For a different explanation of this phenomenon compare section 2.3 below.) 2: Final voicing (or tenseness neutralization). 2.1. A more important argument against the standard view, however, is that, as anyone with any training in Indo-European linguistics can readily tell, there is at least one group, namely Italic, where there is evidence for the allegedly impossible final voicing, cf. PIE *siyet > OLat. -

The Role of Analogy in Language Acquisition

The role of analogy in language change: Supporting constructions. Hendrik De Smet and Olga Fischer (KU Leuven / University of Amsterdam/ACLC). 1. Introduction. It is clear from the literature on language variation and change that analogy is difficult to capture in fixed rules, laws or principles, even though attempts have been made by, among others, Kuryłowicz (1949) and Mańczak (1958). For this reason, analogy has not been prominent as an explanatory factor in generative diachronic studies. More recently, with the rise of usage-based (e.g. Tomasello 2003, Bybee 2010), construction-grammar [CxG] (e.g. Croft 2001, Goldberg 1995, 2006), and probabilistic linguistic approaches (e.g. Bod et al. 2003, Bod 2009), the interest in the role of analogy in linguistic change, already quite strongly present in linguistic studies in the nineteenth century (e.g. Paul 1886), has been revived. Other studies in the areas of language acquisition (e.g. Slobin, ed. 1985, Abbot-Smith & Behrens 2006, Behrens 2009 etc.), cognitive science (e.g. work by Holyoak and Thagard 1995, Gentner et al. 2001, Gentner 2003, 2010, Hofstadter 1995), semiotics (studies on iconicity, e.g. Nöth 2001), and language evolution (e.g. Deacon 1997, 2003) have also pointed to analogical reasoning as a deep-seated cognitive principle at the heart of grammatical organization. In addition, the availability of increasingly larger diachronic corpora has enabled us to learn more about patterns’ distributions and frequencies, both of which are essential in understanding analogical transfer. Despite renewed interest, however, the elusiveness of analogy remains. For this reason, presumably, Traugott (2011) distinguishes between analogy and analogization, intended to separate analogy as (an important) ‘motivation’ from analogy as (a haphazard) ‘mechanism’. -

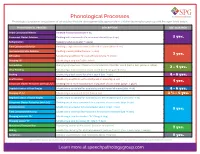

Phonological Processes

Phonological Processes Phonological processes are patterns of articulation that are developmentally appropriate in children learning to speak up until the ages listed below. PHONOLOGICAL PROCESS DESCRIPTION AGE ACQUIRED Initial Consonant Deletion Omitting first consonant (hat → at) Consonant Cluster Deletion Omitting both consonants of a consonant cluster (stop → op) 2 yrs. Reduplication Repeating syllables (water → wawa) Final Consonant Deletion Omitting a singleton consonant at the end of a word (nose → no) Unstressed Syllable Deletion Omitting a weak syllable (banana → nana) 3 yrs. Affrication Substituting an affricate for a nonaffricate (sheep → cheep) Stopping /f/ Substituting a stop for /f/ (fish → tish) Assimilation Changing a phoneme so it takes on a characteristic of another sound (bed → beb, yellow → lellow) 3 - 4 yrs. Velar Fronting Substituting a front sound for a back sound (cat → tat, gum → dum) Backing Substituting a back sound for a front sound (tap → cap) 4 - 5 yrs. Deaffrication Substituting an affricate with a continuant or stop (chip → sip) 4 yrs. Consonant Cluster Reduction (without /s/) Omitting one or more consonants in a sequence of consonants (grape → gape) Depalatalization of Final Singles Substituting a nonpalatal for a palatal sound at the end of a word (dish → dit) 4 - 6 yrs. Stopping of /s/ Substituting a stop sound for /s/ (sap → tap) 3 ½ - 5 yrs. Depalatalization of Initial Singles Substituting a nonpalatal for a palatal sound at the beginning of a word (shy → ty) Consonant Cluster Reduction (with /s/) Omitting one or more consonants in a sequence of consonants (step → tep) Alveolarization Substituting an alveolar for a nonalveolar sound (chew → too) 5 yrs. -

UC Berkeley Proceedings of the Annual Meeting of the Berkeley Linguistics Society

UC Berkeley Proceedings of the Annual Meeting of the Berkeley Linguistics Society. Title: Perception of Illegal Contrasts: Japanese Adaptations of Korean Coda Obstruents. Permalink: https://escholarship.org/uc/item/6x34v499. Journal: Proceedings of the Annual Meeting of the Berkeley Linguistics Society, 36(36). ISSN: 2377-1666. Author: Whang, James D. Y. Publication Date: 2016. Peer reviewed. PROCEEDINGS OF THE THIRTY SIXTH ANNUAL MEETING OF THE BERKELEY LINGUISTICS SOCIETY, February 6-7, 2010. General Session; Special Session: Language Isolates and Orphans; Parasession: Writing Systems and Orthography. Editors: Nicholas Rolle, Jeremy Steffman, John Sylak-Glassman. Berkeley Linguistics Society, Berkeley, CA, USA. Berkeley Linguistics Society, University of California, Berkeley, Department of Linguistics, 1203 Dwinelle Hall, Berkeley, CA 94720-2650, USA. All papers copyright © 2016 by the Berkeley Linguistics Society, Inc. All rights reserved. ISSN: 0363-2946. LCCN: 76-640143. Contents: Acknowledgments; Foreword; Basque Genitive Case and Multiple Checking (Xabier Artiagoitia); Language Isolates and Their History, or, What's Weird, Anyway? (Lyle Campbell); Putting and Taking Events in Mandarin Chinese (Jidong Chen); Orthography Shapes Semantic and Phonological Activation in Reading (Hui-Wen Cheng and Catherine L. Caldwell-Harris); Writing in the World and Linguistics (Peter T. Daniels); When is Orthography Not Just Orthography? The Case of the Novgorod Birchbark Letters (Andrew Dombrowski); Gesture-to-Speech Mismatch in the Construction of Problem Solving Insight (J.T.E. Elms); Semantically-Oriented Vowel Reduction in an Amazonian Language (Caleb Everett); Universals in the Visual-Kinesthetic Modality: Politeness Marking Features in Japanese Sign Language (JSL) (Johnny George).