Toward a Criminal Law for Cyberspace

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Liberty of Contract

YALE LAW JOURNAL LIBERTY OF CONTRACT "The right of a person to sell his labor," says Mr. Justice Harlan, "upon such terms as he deems proper, is in its essence, the same as the right of the purchaser of labor to prescribe the conditions upon which he will accept such labor from the person offering to sell it. So the right of the employee to quit the service of the employer, for whatever reason, is the same as the right of the employer, for whatever reason, to dispense with the services of such employee .... In all such particulars the employer and the employee have equality of right, and any legislation that disturbs that equality is an arbitrary interference with the liberty of contract, which no government can legally justify in a free land." ' With this positive declaration of a lawyer, the culmination of a line of decisions now nearly twenty-five years old, a statement which a recent writer on the science of jurisprudence has deemed so fundamental as to deserve quotation and exposition at an unusual length, as compared with his treatment of other points, 2 let us compare the equally positive statement of a sociologist: "Much of the discussion about 'equal rights' is utterly hollow. All the ado made over the system of contract is surcharged with fallacy." ' To everyone acquainted at first hand with actual industrial conditions the latter statement goes without saying. Why, then, do courts persist in the fallacy? Why do so many of them force upon legislation an academic theory of equality in the face of practical conditions of inequality? Why do we find a great and learned court in 1908 taking the long step into the past of dealing with the relation between employer and employee in railway transportation, as if the parties were individuals, as if they were farmers haggling over the sale of a horse? 4 Why is the legal conception of the relation of employer and employee so at variance with the common knowledge of mankind? The late Presi- ' Adair v. -

Technical Assistance, Crime Prevention, Organised Crime

Technical Assistance, Crime Prevention, Organised Crime Presentations at the HEUNI 25th Anniversary Symposium (January 2007) and at the Stockholm Criminology Symposium (June 2007) Kauko Aromaa and Terhi Viljanen, editors No. 28 2008 HEUNI Paper No. 28 Technical Assistance, Crime Prevention, Organised Crime Presentations at the HEUNI 25th Anniversary Symposium (January 2007) and at the Stockholm Criminology Symposium (June 2007) Kauko Aromaa and Terhi Viljanen, editors The European Institute for Crime Prevention and Control, affiliated with the United Nations Helsinki, 2008 This document is available electronically from: http://www.heuni.fi HEUNI The European Institute for Crime Prevention and Control, affiliated with the United Nations P.O.Box 444 FIN-00531 Helsinki Finland Tel: +358-103665280 Fax: +358-103665290 e-mail:[email protected] http://www.heuni.fi ISSN 1236-8245

Contents

- Foreword
- Applied Knowledge: Technical Assistance to Prevent Crime and Improve Criminal Justice (Kuniko Ozaki)
- Speaking Note for HEUNI 25th Birthday Event (Gloria Laycock)
- HEUNI (Károly Bárd)
- Ideas on How a Regional Institute Like HEUNI Could Best Promote Knowledge-Based Criminal Policy and What the Role of Member States Could Be (Pirkko Lahti)

The Government's Approach to Crime Prevention

House of Commons Home Affairs Committee The Government’s Approach to Crime Prevention Tenth Report of Session 2009–10 Volume II Oral and written evidence Ordered by The House of Commons to be printed 16 March 2010 HC 242-II Published on 29 March 2010 by authority of the House of Commons London: The Stationery Office Limited £16.50 The Home Affairs Committee The Home Affairs Committee is appointed by the House of Commons to examine the expenditure, administration, and policy of the Home Office and its associated public bodies. Current membership Rt Hon Keith Vaz MP (Labour, Leicester East) (Chair) Tom Brake MP (Liberal Democrat, Carshalton and Wallington) Mr James Clappison MP (Conservative, Hertsmere) Mrs Ann Cryer MP (Labour, Keighley) David TC Davies MP (Conservative, Monmouth) Mrs Janet Dean MP (Labour, Burton) Mr Khalid Mahmood MP (Labour, Birmingham Perry Barr) Patrick Mercer MP (Conservative, Newark) Margaret Moran MP (Labour, Luton South) Gwyn Prosser MP (Labour, Dover) Bob Russell MP (Liberal Democrat, Colchester) Martin Salter MP (Labour, Reading West) Mr Gary Streeter MP (Conservative, South West Devon) Mr David Winnick MP (Labour, Walsall North) Powers The Committee is one of the departmental select committees, the powers of which are set out in House of Commons Standing Orders, principally in SO No 152. These are available on the Internet via www.parliament.uk. Publication The Reports and evidence of the Committee are published by The Stationery Office by Order of the House. All publications of the Committee (including press notices) are on the Internet at www.parliament.uk/homeaffairscom. A list of Reports of the Committee since Session 2005–06 is at the back of this volume. -

Paths of Western Law After Justinian

Pace University DigitalCommons@Pace Pace Law Faculty Publications School of Law January 2006 Paths of Western Law After Justinian M. Stuart Madden Pace Law School Follow this and additional works at: https://digitalcommons.pace.edu/lawfaculty Recommended Citation Madden, M. Stuart, "Paths of Western Law After Justinian" (2006). Pace Law Faculty Publications. 130. https://digitalcommons.pace.edu/lawfaculty/130 This Article is brought to you for free and open access by the School of Law at DigitalCommons@Pace. It has been accepted for inclusion in Pace Law Faculty Publications by an authorized administrator of DigitalCommons@Pace. For more information, please contact [email protected]. M. Stuart Madden Preparation of the Code of Justinian, one part of a three-part presentation of Roman law published over the three-year period from 533-535 A.D., had not been stymied by the occupation of Rome by the Rugians and the Ostrogoths. In most ways these occupations worked no material hardship on the empire, either militarily or civilly. The occupying Goths and their Roman counterparts developed symbiotic legal and social relationships, and in several instances, the new Germanic rulers sought and received approval of their rule both from the Western Empire, seated in Constantinople, and the Pope. Rugian Odoacer and Ostrogoth Theodoric each, in fact, claimed respect for Roman law, and the latter ruler held the Roman title patricius et magister militum. In sum, the Rugians and the Ostrogoths were content to absorb much of Roman law, and to work only such modifications as were propitious in the light of centuries of Gothic customary law. -

Autumn 2005 SCIENCE in PARLIAMENT

Autumn 2005 SCIENCE IN PARLIAMENT State of the Nation Plastic Waste Private Finance Initiative Visions of Science Airbus Launches the New A350 The Journal of the Parliamentary and Scientific Committee http://www.scienceinparliament.org.uk THE STATE OF THE NATION 2005 An assessment of the UK’s infrastructure by the Institution of Civil Engineers PUBLISHED 18 OCTOBER 2005

About the Institution of Civil Engineers: As a professional body, the Institution of Civil Engineers (ICE) is one of the most important sources of professional expertise in road and rail transport, water supply and treatment, flood management, waste and energy – our infrastructure. Established in 1818, it has over 75,000 members throughout the world – including over 60,000 in the UK.

About the report: The State of the Nation Report is compiled each year by a panel of civil engineering experts. The report’s aim is to stimulate debate and to highlight the actions that ICE believes need to be taken to improve the UK’s infrastructure. It has been produced since 2000. This year, six regional versions of the State of the Nation Report – covering Northern Ireland, Scotland, Wales as well as the North West, South West and West Midlands of England – are being produced, in conjunction with the UK-wide publication. To read the complete report please visit www.uk-infrastructure.org.uk

For more information on the background to the State of the Nation Report, contact ICE External Relations: t +44 (0)20 7665 2151 e [email protected] w www.uk-infrastructure.org.uk

Registered Charity No. -

Trainers Steve Asmussen Thomas Albertrani

TRAINERS TRAINERS THOMAS ALBERTRANI Bernardini: Jockey Club Gold Cup (2006); Scott and Eric Mark Travers (2006); Jim Dandy (2006); Preakness (2006); Withers (2006) 2015 RECORD Brilliant Speed: Saranac (2011); Blue Grass 1,499 252 252 217 $10,768,759 (2011) Buffum: Bold Ruler (2012) NATIONAL/ECLIPSE CHAMPIONS Criticism: Long Island (2008-09); Sheepshead Curlin: Horse of the Year (2007-08), Top Older Bay (2009) Male (2008), Top 3-Year-Old Male (2007) Empire Dreams: Empire Classic (2015); Kodiak Kowboy: Top Male Sprinter (2009) Birthdate - March 21, 1958 Commentator (2015); NYSS Great White Way My Miss Aurelia: Top 2-Year-Old Filly (2011) Birthplace - Brooklyn, NY (2013) Rachel Alexandra: Horse of the Year (2009), Residence - Garden City, NY Flashing: Nassau County (2009) Top 3-Year-Old Filly (2009) Family - Wife Fonda, daughters Teal and Noelle Gozzip Girl: American Oaks (2009); Sands Untapable: Top 3-Year-Old Filly (2014) Point (2009) 2015 RECORD Love Cove: Ticonderoga (2008) NEW YORK TRAINING TITLES 338 36 41 52 $3,253,692 Miss Frost: Riskaverse (2014) * 2010 Aqueduct spring, 12 victories Oratory: Peter Pan (2005) NATIONAL/ECLIPSE CHAMPION Raw Silk: Sands Point (2008) CAREER HIGHLIGHTS Bernardini: Top 3-Year-Old Male (2006) Ready for Rye: Quick Call (2015); Allied Forces * Campaigned 3-Year-Old Filly Champion (2015) Untapable in 2014, which included four Grade CAREER HIGHLIGHTS Romansh: Excelsior H. (2014); Discovery H. 1 wins: the Kentucky Oaks, the Mother Goose, * Trained Bernardini, who won the Preakness, (2013); Curlin (2013) the -

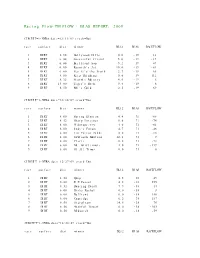

Racing Flow-TM FLOW + BIAS REPORT: 2009

Racing Flow-TM FLOW + BIAS REPORT: 2009

CIRCUIT=1-NYRA date=12/31/09 track=Dot

| race | surface | dist | winner | BL12 | BIAS | RACEFLOW |
|---|---|---|---|---|---|---|
| 1 | DIRT | 5.50 | Hollywood Hills | 0.0 | -19 | 13 |
| 2 | DIRT | 6.00 | Successful friend | 5.0 | -19 | -19 |
| 3 | DIRT | 6.00 | Brilliant Son | 5.2 | -19 | 47 |
| 4 | DIRT | 6.00 | Raynick's Jet | 10.6 | -19 | -61 |
| 5 | DIRT | 6.00 | Yes It's the Truth | 2.7 | -19 | 65 |
| 6 | DIRT | 8.00 | Keep Thinking | 0.0 | -19 | -112 |
| 7 | DIRT | 8.32 | Storm's Majesty | 4.0 | -19 | 6 |
| 8 | DIRT | 13.00 | Tiger's Rock | 9.4 | -19 | 6 |
| 9 | DIRT | 8.50 | Mel's Gold | 2.5 | -19 | 69 |

CIRCUIT=1-NYRA date=12/30/09 track=Dot

| race | surface | dist | winner | BL12 | BIAS | RACEFLOW |
|---|---|---|---|---|---|---|
| 1 | DIRT | 8.00 | Spring Elusion | 4.4 | 71 | -68 |
| 2 | DIRT | 8.32 | Sharp Instinct | 0.0 | 71 | -74 |
| 3 | DIRT | 6.00 | O'Sotopretty | 4.0 | 71 | -61 |
| 4 | DIRT | 6.00 | Indy's Forum | 4.7 | 71 | -46 |
| 5 | DIRT | 6.00 | Ten Carrot Nikki | 0.0 | 71 | -18 |
| 6 | DIRT | 8.00 | Sawtooth Moutain | 12.1 | 71 | 9 |
| 7 | DIRT | 6.00 | Cleric | 0.6 | 71 | -73 |
| 8 | DIRT | 6.00 | Mt. Glittermore | 4.0 | 71 | -119 |
| 9 | DIRT | 6.00 | Of All Times | 0.0 | 71 | 0 |

CIRCUIT=1-NYRA date=12/27/09 track=Dot

| race | surface | dist | winner | BL12 | BIAS | RACEFLOW |
|---|---|---|---|---|---|---|
| 1 | DIRT | 8.50 | Quip | 4.5 | -38 | 49 |
| 2 | DIRT | 6.00 | E Z Passer | 4.2 | -38 | 255 |
| 3 | DIRT | 8.32 | Dancing Daisy | 7.9 | -38 | 14 |
| 4 | DIRT | 6.00 | Risky Rachel | 0.0 | -38 | 8 |
| 5 | DIRT | 6.00 | Kaffiend | 0.0 | -38 | 150 |
| 6 | DIRT | 6.00 | Capridge | 6.2 | -38 | 187 |
| 7 | DIRT | 8.50 | Stargleam | 14.5 | -38 | 76 |
| 8 | DIRT | 8.50 | Wishful Tomcat | 0.0 | -38 | -203 |
| 9 | DIRT | 8.50 | Midwatch | 0.0 | -38 | -59 |

CIRCUIT=1-NYRA date=12/26/09 track=Dot

| race | surface | dist | winner | BL12 | BIAS | RACEFLOW |
|---|---|---|---|---|---|---|
| 1 | DIRT | 6.00 | Papaleo | 7.0 | 108 | 129 |
| 2 | DIRT | 6.00 | Overcommunication | 1.0 | 108 | -72 |
| 3 | DIRT | 6.00 | Digger | 0.0 | 108 | -211 |
| 4 | DIRT | 6.00 | Bryan Kicks | 0.0 | 108 | 136 |
| 5 | DIRT | 6.00 | We Get It | 16.8 | 108 | 129 |
| 6 | DIRT | 6.00 | Yawanna Trust | 4.5 | 108 | -21 |
| 7 | DIRT | 6.00 | Smarty Karakorum | 6.5 | 108 | 83 |
| 8 | DIRT | 8.32 | Almighty Silver | 18.7 | 108 | 133 |
| 9 | DIRT | 8.32 | Offlee Cool | 0.0 | 108 | -60 |

CIRCUIT=1-NYRA date=12/13/09 track=Dot

| race | surface | dist | winner | BL12 | BIAS | RACEFLOW |
|---|---|---|---|---|---|---|
| 1 | DIRT | 8.32 | Crafty Bear | 3.0 | -158 | -139 |
| 2 | DIRT | 6.00 | Cheers Darling | 0.5 | -158 | 61 |
| 3 | DIRT | 6.00 | Iberian Gate | 3.0 | -158 | 154 |
| 4 | DIRT | 6.00 | Pewter | 0.5 | -158 | 8 |
| 5 | DIRT | 6.00 | Wolfson | 6.2 | -158 | 86 |

6 DIRT 6.00 Mr. -

Whole 5 (Final 16 March, 2012)

Renaissance Queenship in William Shakespeare’s English History Plays Yu-Chun Chiang UCL Ph. D. Chiang 2 Declaration I, Yu-Chun Chiang confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. Chiang 3 Acknowledgments For the completion of this thesis, I am obliged to my supervisors, Professor Helen Hackett and Professor René Weis. Helen’s professionalism and guide, René’s advice and trust, and their care, patience, and kind support are the key to the accomplishment of my Ph.D. study. Gratitude are due to my examiners, Professor Alison Findlay and Professor Alison Shell, whose insightful comment, attentive scrutiny, friendliness, and useful guidance offer inspiring conversations and academic exchange, especially at the viva, assist me to amend and supplement arguments in the thesis, and illuminate the development of my future research. I am also grateful to Professor John Russell Brown, Dr. Eric Langley, and Professor Susan Irvine for their advice in English Graduate Seminar at UCL and at my upgrade. Professor Francis So, Dr. Vivienne Westbrook, Professor Carole Levin, and our beloved friend and mentor, Professor Marshall Grossman have given me incredible support and counsel in many aspects of my doctoral career. Without the sponsorship of the Ministry of Education in Taiwan, I would not be able to conduct my research in the U.K. Also the generous funding from the Graduate School and assistance from the Departments of English and of History enabled me to refine my knowledge and learn different methodologies of early modern studies. -

Satire and the Corpus Mysticum During Crises of Fragmentation

UNIVERSITY OF CALIFORNIA Los Angeles The Body Satyrical: Satire and the Corpus Mysticum during Crises of Fragmentation in Late Medieval and Early Modern France A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in French and Francophone Studies by Christopher Martin Flood 2013 © Copyright by Christopher Martin Flood 2013 ABSTRACT OF THE DISSERTATION The Body Satyrical: Satire and the Corpus Mysticum during Crises of Fragmentation in Medieval and Early Modern France by Christopher Martin Flood Doctor of Philosophy in French and Francophone Studies University of California, Los Angeles, 2013 Professor Jean-Claude Carron, Chair The later Middle Ages and early modern period in France were marked by divisive conflicts (i.e. the Western Schism, the Hundred Years’ War, and the Protestant Reformation) that threatened the stability and unity of two powerful yet seemingly fragile social entities, Christendom and the kingdom of France. The anxiety engendered by these crises was heightened by the implicit violence of a looming fragmentation of those entities that, perceived through the lens of the Pauline corporeal metaphor, were imagined as corpora mystica (mystical bodies). Despite the gravity of these crises of fragmentation, each met with a somewhat unexpected and, at times, prolific response in the form of satirical literature. Since that time, these satirical works have been reductively catalogued under the unwieldy genre of traditional satire and read superficially as mere vituperation or ridiculing didacticism. However, when studied against the background of sixteenth-century theories of satire and the corporeal metaphor, a previously unnoticed element of these works emerges that sets them apart from traditional satire and provides an original insight into the culture of the time. -

N8 PRP Annual Report 2019/20

N8 PRP Annual Report 2019/20 POLICING RESEARCH PARTNERSHIP n8prp.org.uk

Contents

- Foreword by Andy Cooke
- Director’s Introduction – Adam Crawford
- Introducing the New Leadership Team:
  - Academic Co-Director – Geoff Pearson
  - Policing Co-Director – Ngaire Waine
- 1 Partnerships and Evidence-Based Policing
  - Policing and the N8 Research Partnership – Annette Bramley
  - In Conversation with Justin Partridge
  - In Conversation with ACC Chris Sykes

The Compleat Works of Nostradamus -=][ Compiled and Entered in PDF Format by Arcanaeum: 2003 ][=

The Compleat Works of Nostradamus -=][ compiled and entered in PDF format by Arcanaeum: 2003 ][=- Table of Contents: Preface Century I Century II Century III Century IV Century V Century VI Century VII Century VIII Century IX Century X Epistle To King Henry II Pour les ans Courans en ce Siecle (roughly translated: for the years’ events in this century) Almanacs: 1555−1563 Note: Many of these are written in French with the English Translation directly beneath them. Preface by: M. Nostradamus to his Prophecies Greetings and happiness to César Nostradamus my son Your late arrival, César Nostredame, my son, has made me spend much time in constant nightly reflection so that I could communicate with you by letter and leave you this reminder, after my death, for the benefit of all men, of which the divine spirit has vouchsafed me to know by means of astronomy. And since it was the Almighty's will that you were not born here in this region [Provence] and I do not want to talk of years to come but of the months during which you will struggle to grasp and understand the work I shall be compelled to leave you after my death: assuming that it will not be possible for me to leave you such [clearer] writing as may be destroyed through the injustice of the age [1555]. The key to the hidden prediction which you will inherit will be locked inside my heart. Also bear in mind that the events here described have not yet come to pass, and that all is ruled and governed by the power of Almighty God, inspiring us not by bacchic frenzy nor by enchantments but by astronomical assurances: predictions have been made through the inspiration of divine will alone and the spirit of prophecy in particular. -

University of Huddersfield Repository

University of Huddersfield Repository Monchuk, Leanne Crime Prevention Through Environmental Design (CPTED): Investigating Its Application and Delivery in England and Wales Original Citation Monchuk, Leanne (2016) Crime Prevention Through Environmental Design (CPTED): Investigating Its Application and Delivery in England and Wales. Doctoral thesis, University of Huddersfield. This version is available at http://eprints.hud.ac.uk/27933/ The University Repository is a digital collection of the research output of the University, available on Open Access. Copyright and Moral Rights for the items on this site are retained by the individual author and/or other copyright owners. Users may access full items free of charge; copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational or not-for-profit purposes without prior permission or charge, provided: • The authors, title and full bibliographic details is credited in any copy; • A hyperlink and/or URL is included for the original metadata page; and • The content is not changed in any way. For more information, including our policy and submission procedure, please contact the Repository Team at: [email protected]. http://eprints.hud.ac.uk/ CRIME PREVENTION THROUGH ENVIRONMENTAL DESIGN (CPTED): INVESTIGATING ITS APPLICATION AND DELIVERY IN ENGLAND AND WALES LEANNE MONCHUK A thesis submitted to the University of Huddersfield in partial fulfilment of the requirements for the degree of Doctor of Philosophy February 2016 Copyright Statement i. The author of this thesis (including any appendices and/or schedules to this thesis) owns any copyright in it (the “Copyright”) and she has given The University of Huddersfield the right to use such Copyright for any administrative, promotional, educational and/or teaching purposes.