Foundations of Metrology John A

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Metric System Units of Length

Math 0300

METRIC SYSTEM UNITS OF LENGTH

To convert units of length in the metric system of measurement

The basic unit of length in the metric system is the meter. All units of length in the metric system are derived from the meter. The prefix "centi-" means one hundredth: 1 centimeter = one hundredth of a meter.

kilo-  = 1000     1 kilometer (km)  = 1000 meters (m)
hecto- = 100      1 hectometer (hm) = 100 m
deca-  = 10       1 decameter (dam) = 10 m
                  1 meter (m)       = 1 m
deci-  = 0.1      1 decimeter (dm)  = 0.1 m
centi- = 0.01     1 centimeter (cm) = 0.01 m
milli- = 0.001    1 millimeter (mm) = 0.001 m

Conversion between units of length in the metric system involves moving the decimal point to the right or to the left. Listing the units in order from largest to smallest will indicate how many places to move the decimal point and in which direction.

Example 1: To convert 4200 cm to meters, write the units in order from largest to smallest.

km hm dam m dm cm mm

Converting cm to m requires moving 2 positions to the left. Move the decimal point the same number of places and in the same direction (to the left). So 4200 cm = 42.00 m.

A metric measurement involving two units is customarily written in terms of one unit. Convert the smaller unit to the larger unit and then add.

Example 2: To convert 8 km 32 m to kilometers, first convert 32 m to kilometers.

km hm dam m dm cm mm

Converting m to km requires moving …
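The decimal-point rule in this excerpt can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `convert_length` and the `UNITS` list are assumptions introduced here, not part of the original worksheet. The number of places to move the decimal point is taken as the distance between the two units in the largest-to-smallest listing.

```python
from decimal import Decimal

# Units listed from largest to smallest, as in the worksheet.
UNITS = ["km", "hm", "dam", "m", "dm", "cm", "mm"]

def convert_length(value, from_unit, to_unit):
    """Convert a metric length by shifting the decimal point (hypothetical helper)."""
    # Each step to the right in UNITS is a unit ten times smaller, so the
    # decimal point moves one place to the right; steps to the left move it left.
    shift = UNITS.index(to_unit) - UNITS.index(from_unit)
    return Decimal(str(value)).scaleb(shift)

# Example 1 from the text: cm to m is 2 positions to the left.
print(convert_length(4200, "cm", "m"))  # 42.00

# Two-unit measurement: convert the smaller unit to the larger, then add.
total_km = Decimal(8) + convert_length(32, "m", "km")
print(total_km)  # 8.032
```

`Decimal` is used instead of `float` so that shifting the decimal point stays exact and trailing zeros such as those in 42.00 are preserved.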

Units and Conversions

Units and Conversions This unit of the Metrology Fundamentals series was developed by the Mitutoyo Institute of Metrology, the educational department within Mitutoyo America Corporation. The Mitutoyo Institute of Metrology provides educational courses and free on-demand resources across a wide variety of measurement related topics including basic inspection techniques, principles of dimensional metrology, calibration methods, and GD&T. For more information on the educational opportunities available from Mitutoyo America Corporation, visit us at www.mitutoyo.com/education. This technical bulletin addresses an important aspect of the language of measurement: the units used when reporting or discussing measured values. The dimensioning and tolerancing practices used on engineering drawings and related product specifications use either decimal inch (in) or millimeter (mm) units. Dimensional measurements are therefore usually reported in either of these units, but there are a number of variations and conversions that must be understood. Measurement accuracy, equipment specifications, measured deviations, and errors are typically very small numbers, and therefore a more practical spoken language of units has grown out of manufacturing and precision measurement practice. Metric System In the metric system (SI or International System of Units), the fundamental unit of length is the meter (m). Engineering drawings and measurement systems use the millimeter (mm), which is one thousandth of a meter (1 mm = 0.001 m). In general practice, however, the common spoken unit is the "micron", which is slang for the micrometer (µm), one millionth of a meter (1 µm = 0.001 mm = 0.000001 m). In rarer cases, the nanometer (nm) is used, which is one billionth of a meter.
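As a small illustration of the unit relationships stated in this excerpt, the sketch below converts between meters, millimeters, micrometers, and nanometers. The names `METERS_PER_UNIT` and `convert` and the ASCII key `"um"` for the micrometer are assumptions made for this example; the conversion factors themselves are the ones given in the text.

```python
from fractions import Fraction

# Size of each unit in meters, taken from the definitions in the text.
METERS_PER_UNIT = {
    "m": Fraction(1),
    "mm": Fraction(1, 1000),      # one thousandth of a meter
    "um": Fraction(1, 10**6),     # micrometer ("micron"), one millionth of a meter
    "nm": Fraction(1, 10**9),     # one billionth of a meter
}

def convert(value, from_unit, to_unit):
    """Convert a length between the units above (hypothetical helper)."""
    in_meters = Fraction(str(value)) * METERS_PER_UNIT[from_unit]
    return in_meters / METERS_PER_UNIT[to_unit]

# A deviation of 0.005 mm, spoken as "5 microns".
print(float(convert("0.005", "mm", "um")))  # 5.0
```

Exact fractions are used here so that very small values, which are typical for measured deviations, do not pick up floating-point rounding during conversion.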

Consultative Committee for Amount of Substance; Metrology in Chemistry and Biology CCQM Working Group on Isotope Ratios (IRWG) S

Consultative Committee for Amount of Substance; Metrology in Chemistry and Biology
CCQM Working Group on Isotope Ratios (IRWG)
Strategy for Rolling Programme Development (2021-2030)

1. EXECUTIVE SUMMARY

In April 2017, the Consultative Committee for Amount of Substance; Metrology in Chemistry and Biology (CCQM) established a task group to study the metrological state of isotope ratio measurements and to formulate recommendations to the Consultative Committee (CC) regarding potential engagement in this field. In April 2018 the Isotope Ratio Working Group (IRWG) was established by the CCQM based on the recommendation of the task group. The main focus of the IRWG is on the stable isotope ratio measurement science activities needed to improve measurement comparability to advance the science of isotope ratio measurement among National Metrology Institutes (NMIs) and Designated Institutes (DIs) focused on serving stakeholder isotope ratio measurement needs. During the current five-year mandate, it is expected that the IRWG will make significant advances in: i. delta scale definition, ii. measurement comparability of relative isotope ratio measurements, iii. comparable measurement capabilities for C and N isotope ratio measurement; and iv. the understanding of calibration modalities used in metal isotope ratio characterization.

2. SCIENTIFIC, ECONOMIC AND SOCIAL CHALLENGES

Isotopes have long been recognized as markers for a wide variety of molecular processes. Indeed, applications where isotope ratios are used provide scientific, economic, and social value. Early applications of isotope measurements were recognized with the 1943 Nobel Prize for Chemistry which included the determination of the water content in the human body, determination of solubility of various low-solubility salts, and development of the isotope dilution method which has since become the cornerstone of analytical chemistry.

Role of Metrology in Conformity Assessment

Role of Metrology in Conformity Assessment
Andy Henson, Director of International Liaison and Communications of the BIPM

Metrology, the science of measurement… and its applications

"When you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind" William Thomson (Lord Kelvin): Lecture on "Electrical Units of Measurement" (3 May 1883)

Conformity assessment

Overall umbrella of measures taken by:
- manufacturers
- customers
- regulatory authorities
- independent third parties
to assess that a product/service meets standards or technical regulations.

Metrology is a part of our lives from birth

Best regulation, safe food, technical evidence, safe treatment, safe baby food, manufacturing, safe traveling, globalization, weighing of baby, healthcare, innovation, climate change.

Without metrology, you can't discover, design, build, test, manufacture, maintain, prove, buy or operate anything safely and reliably. From filling your car with petrol to having an X-ray at a hospital, your life is surrounded by measurements. In industry, from the thread of a nut and bolt and the precision machined parts on engines down to tiny structures on micro and nano components, all require an accurate measurement that is recognized around the world. Good measurement allows a country to remain competitive, trade throughout the world and improve quality of life.

www.bipm.org

Metrology, the science of measurement

As we have seen, measurement science is not purely the preserve of scientists. It is something of vital importance to us all. The intricate but invisible network of services, suppliers and communications upon which we are all dependent rely on metrology for their efficient and reliable operation.

How Are Units of Measurement Related to One Another?

UNIT 1 Measurement

How are Units of Measurement Related to One Another?

"I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot express it in numbers, your knowledge is of a meager and unsatisfactory kind..." Lord Kelvin (1824-1907), developer of the absolute scale of temperature measurement

Engage: Is Your Locker Big Enough for Your Lunch and Your Galoshes?

A. Construct a list of ten units of measurement. Explain the numeric relationship among any three of the ten units you have listed.

Response columns: Before Studying this Unit; After Studying this Unit

Unit 1 Page 1 Copyright © 2012 Montana Partners. This project was largely funded by an ESEA, Title II Part B Mathematics and Science Partnership grant through the Montana Office of Public Instruction. High School Chemistry: An Inquiry Approach

1. Use the measuring instrument provided to you by your teacher to measure your locker (or other rectangular three-dimensional object, if assigned) in meters.

Table 1: Locker Measurements
Columns: Measurement (in meters); Uncertainty in Measurement (in meters)
Rows: Width; Height; Depth (optional); Area of Locker Door or Volume of Locker

Show Your Work!

Pool class data as instructed by your teacher.

Table 2: Class Data
Columns: Group 1; Group 2; Group 3; Group 4; Group 5; Group 6
Rows: Width; Height; Depth; Area of Locker Door or Volume of Locker

Measuring in Metric Units BEFORE Now WHY? You Used Metric Units

Measuring in Metric Units

BEFORE: You used metric units.
Now: You'll measure and estimate using metric units.
WHY? So you can estimate the mass of a bike, as in Ex. 20.

The metric system is a decimal system of measurement. The metric system has units for length, mass, and capacity.

Word Watch
metric system, p. 80
length: meter, millimeter, centimeter, kilometer, p. 80
mass: gram, milligram, kilogram, p. 81
capacity: liter, milliliter, kiloliter, p. 82

Length

The meter (m) is the basic unit of length in the metric system. Three other metric units of length are the millimeter (mm), centimeter (cm), and kilometer (km).

You can use the following benchmarks to estimate length.

1 millimeter: thickness of a dime
1 centimeter: width of a large paper clip
1 meter: height of the back of a chair
1 kilometer: combined length of 9 football fields

EXAMPLE 1 Using Metric Units of Length

Estimate the length of the bandage by imagining paper clips laid next to it. Then measure the bandage with a metric ruler to check your estimate.

1 Estimate using paper clips. About 5 large paper clips fit next to the bandage, so it is about 5 centimeters long.

2 Measure using a ruler. Each centimeter is divided into tenths, so the bandage is 4.8 centimeters long.

Watch Out! A typical metric ruler allows you to measure only to the nearest tenth of a centimeter.

80 Chapter 2 Decimal Operations

Mass

Mass is the amount of matter that an object has. The gram (g) is the basic metric unit of mass.

The Kelvin and Temperature Measurements

Volume 106, Number 1, January–February 2001
Journal of Research of the National Institute of Standards and Technology
[J. Res. Natl. Inst. Stand. Technol. 106, 105–149 (2001)]

The Kelvin and Temperature Measurements

B. W. Mangum, G. T. Furukawa, K. G. Kreider, C. W. Meyer, D. C. Ripple, G. F. Strouse, W. L. Tew, M. R. Moldover, B. Carol Johnson, H. W. Yoon, C. E. Gibson, and R. D. Saunders

National Institute of Standards and Technology, Gaithersburg, MD 20899-0001

The International Temperature Scale of 1990 (ITS-90) is defined from 0.65 K upwards to the highest temperature measurable by spectral radiation thermometry, the radiation thermometry being based on the Planck radiation law. When it was developed, the ITS-90 represented thermodynamic temperatures as closely as possible. Part I of this paper describes the realization of contact thermometry up to 1234.93 K, the temperature range in which the ITS-90 is defined in terms of calibration of thermometers at 15 fixed points and … are available to the thermometry community are described. Part II of the paper describes the realization of temperature above 1234.93 K for which the ITS-90 is defined in terms of the calibration of spectroradiometers using reference blackbody sources that are at the temperature of the equilibrium liquid-solid phase transition of pure silver, gold, or copper. The realization of temperature from absolute spectral or total radiometry over the temperature range from about 60 K to 3000 K is also described.

Handbook of MASS MEASUREMENT

Handbook of MASS MEASUREMENT

FRANK E. JONES, RANDALL M. SCHOONOVER

CRC PRESS, Boca Raton London New York Washington, D.C.

© 2002 by CRC Press LLC

Front cover drawing is used with the consent of the Egyptian National Institute for Standards, Gina, Egypt. Back cover art from Il Codice Atlantico di Leonardo da Vinci nella Biblioteca Ambrosiana di Milano, Editore Milano Hoepli 1894–1904. With permission from the Museo Nazionale della Scienza e della Tecnologia Leonardo da Vinci Milano.

Library of Congress Cataloging-in-Publication Data
Jones, Frank E. Handbook of mass measurement / Frank E. Jones, Randall M. Schoonover p. cm. Includes bibliographical references and index. ISBN 0-8493-2531-5 (alk. paper) 1. Mass (Physics)--Measurement. 2. Mensuration. I. Schoonover, Randall M. II. Title. QC106 .J66 2002 531'.14'0287--dc21 2002017486 CIP

This book contains information obtained from authentic and highly regarded sources. Reprinted material is quoted with permission, and sources are indicated. A wide variety of references are listed. Reasonable efforts have been made to publish reliable data and information, but the author and the publisher cannot assume responsibility for the validity of all materials or for the consequences of their use. Neither this book nor any part may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, microfilming, and recording, or by any information storage or retrieval system, without prior permission in writing from the publisher. The consent of CRC Press LLC does not extend to copying for general distribution, for promotion, for creating new works, or for resale. Specific permission must be obtained in writing from CRC Press LLC for such copying.

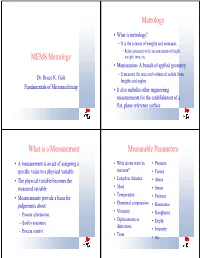

MEMS Metrology Metrology What Is a Measurement Measurable

MEMS Metrology
Dr. Bruce K. Gale
Fundamentals of Micromachining

Metrology
• What is metrology?
  – It is the science of weights and measures
• Refers primarily to the measurements of length, weight, time, etc.
• Mensuration: a branch of applied geometry
  – It measures the area and volume of solids from lengths and angles
• It also includes other engineering measurements for the establishment of a flat, plane reference surface

What is a Measurement
• A measurement is an act of assigning a specific value to a physical variable
• The physical variable becomes the measured variable
• Measurements provide a basis for judgements about
  – Process information
  – Quality assurance
  – Process control

Measurable Parameters
• What do we want to measure?
• Length or distance
• Mass
• Temperature
• Elemental composition
• Viscosity
• Displacements or distortions
• Time
• Pressure
• Forces
• Stress
• Strain
• Friction
• Resistance
• Roughness
• Depth
• Intensity
• etc.

Components of a Measuring System

Measurement Systems and Tools
• Measurement systems are important tools for the quantification of the physical variable
• Measurement systems extend the abilities of the human senses, while they can detect and recognize different degrees of physical variables
• For scientific and engineering measurement, the selection of equipment, techniques and interpretation of the measured data are important

Importance of Metrology
• In human relationships, things must be counted and measured

How Important are Measurements?
• Measurement is the language of science
• It helps us …

Thermometry (Temperature Measurement)

THERMOMETRY

Thermometry
Applications
Temperature metrology
The primary standard: the TPW-cell
Temperature and the ITS-90
The Celsius scale
Thermometers and thermal baths
Metrological properties
Types of thermometers
Liquid-in-glass
Thermocouple

Time-Reversal-Based Quantum Metrology with Many-Body Entangled States

Time-Reversal-Based Quantum Metrology with Many-Body Entangled States

Simone Colombo,1∗ Edwin Pedrozo-Peñafiel,1∗ Albert F. Adiyatullin,1∗ Zeyang Li,1 Enrique Mendez,1 Chi Shu,1,2 Vladan Vuletić1

1 Department of Physics, MIT-Harvard Center for Ultracold Atoms and Research Laboratory of Electronics, Massachusetts Institute of Technology, 2 Department of Physics, Harvard University, Cambridge, Massachusetts 02139, USA
∗ These authors contributed equally to this work

arXiv:2106.03754v3 [quant-ph] 28 Sep 2021

In quantum metrology, entanglement represents a valuable resource that can be used to overcome the Standard Quantum Limit (SQL) that bounds the precision of sensors that operate with independent particles. Measurements beyond the SQL are typically enabled by relatively simple entangled states (squeezed states with Gaussian probability distributions), where quantum noise is redistributed between different quadratures. However, due to both fundamental limitations and the finite measurement resolution achieved in practice, sensors based on squeezed states typically operate far from the true fundamental limit of quantum metrology, the Heisenberg Limit. Here, by implementing an effective time-reversal protocol through a controlled sign change in an optically engineered many-body spin Hamiltonian, we demonstrate atomic-sensor performance with non-Gaussian states beyond the limitations of spin squeezing, and without the requirement of extreme measurement resolution. Using a system of 350 neutral 171Yb atoms, this signal amplification through time-reversed interaction (SATIN) protocol achieves the largest sensitivity improvement beyond the SQL (11.8 ± 0.5 dB) demonstrated in any (full Ramsey) interferometer to date. Furthermore, we demonstrate a precision improving in proportion to the particle number (Heisenberg scaling), at fixed distance of 12.6 dB from the Heisenberg Limit.

The International System of Units (SI)

NIST
National Institute of Standards and Technology
Technology Administration, U.S. Department of Commerce

NIST Special Publication 330, 2001 Edition
The International System of Units (SI)
Barry N. Taylor, Editor

The National Institute of Standards and Technology was established in 1988 by Congress to "assist industry in the development of technology ... needed to improve product quality, to modernize manufacturing processes, to ensure product reliability ... and to facilitate rapid commercialization ... of products based on new scientific discoveries." NIST, originally founded as the National Bureau of Standards in 1901, works to strengthen U.S. industry's competitiveness; advance science and engineering; and improve public health, safety, and the environment. One of the agency's basic functions is to develop, maintain, and retain custody of the national standards of measurement, and provide the means and methods for comparing standards used in science, engineering, manufacturing, commerce, industry, and education with the standards adopted or recognized by the Federal Government. As an agency of the U.S. Commerce Department's Technology Administration, NIST conducts basic and applied research in the physical sciences and engineering, and develops measurement techniques, test methods, standards, and related services. The Institute does generic and precompetitive work on new and advanced technologies. NIST's research facilities are located at Gaithersburg, MD 20899, and at Boulder, CO 80303.