Institutionen För Datavetenskap Department of Computer and Information Science

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chart Book Template

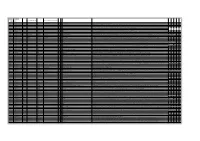

Real Chart Page 1

CHART LOG - F

become a problem, since each track can sometimes be released as a separate download. However, if it is known that a track is being released on 'hard copy' as an AA side, then the tracks will be grouped as one, or as soon as known.

For the above reasons many remixed songs are listed as re-entries; however, if the title is altered to reflect the remix it will be listed as would a new song by the act. This does not apply to records still in the chart, and the sales of the mix would be added to the track in the chart. This may push singles back up the chart or keep them around for longer; nevertheless, the chart is a sales chart and NOT a popularity chart on people's favourite songs or acts. Due to encryption decoding errors some artists/titles may be spelt wrong; I apologise for any inconvenience this may cause.

The chart statistics were compiled only from sales of SINGLES each week. Not only that, but every single sale no matter where it occurred! Format rules, used by other charts, were unnecessary and therefore ignored, so you will see EPs that charted and other strange records selling more than other charts.

Symbol Explanations

s: Top Ten Hit. j: Number One hit.

±: Indicates that the record probably sold more than 250K. Only used on unsorted charts.

Unsorted chart hits will have no position, but if they are black in colour then the record made the Real Chart. Green coloured records might not have made the Real Chart. The same applies to the red coloured hits; these are known to have made the USA charts, so could have been released in the UK, or imported here.

Date of Entry

The Charts were produced on a Sunday and the sales were from the previous seven days, with

Pop Music Groups & Musical Artists List

Pop Music Groups & Musical Artists List

Stacy https://da.listvote.com/lists/music/artists/stacy-3503566/albums
The Idan Raichel Project https://da.listvote.com/lists/music/artists/the-idan-raichel-project-12406906/albums
Mig 21 https://da.listvote.com/lists/music/artists/mig-21-3062747/albums
Donna Weiss https://da.listvote.com/lists/music/artists/donna-weiss-17385849/albums
Ben Perowsky https://da.listvote.com/lists/music/artists/ben-perowsky-4886285/albums
Ainbusk https://da.listvote.com/lists/music/artists/ainbusk-4356543/albums
Ratata https://da.listvote.com/lists/music/artists/ratata-3930459/albums
Labvēlīgais Tips https://da.listvote.com/lists/music/artists/labv%C4%93l%C4%ABgais-tips-16360974/albums
Deane Waretini https://da.listvote.com/lists/music/artists/deane-waretini-5246719/albums
Johnny Ruffo https://da.listvote.com/lists/music/artists/johnny-ruffo-23942/albums
Tony Scherr https://da.listvote.com/lists/music/artists/tony-scherr-7823360/albums
Camille Camille https://da.listvote.com/lists/music/artists/camille-camille-509887/albums
Idolerna https://da.listvote.com/lists/music/artists/idolerna-3358323/albums
Place on Earth https://da.listvote.com/lists/music/artists/place-on-earth-51568818/albums
In-Joy https://da.listvote.com/lists/music/artists/in-joy-6008580/albums
Gary Chester https://da.listvote.com/lists/music/artists/gary-chester-5524837/albums
Hilde Marie Kjersem https://da.listvote.com/lists/music/artists/hilde-marie-kjersem-15882072/albums

Italy and the Eurovision Song Contest: A Renewed

Music unites Europe… and beyond

Some call it "the Champions League" of music, and they are not far wrong. Eurovision is a big party, but above all it is a competition in which the countries of Europe challenge one another in song. Technically, it is a competition between television broadcasters, since it is organised by the EBU (European Broadcasting Union), the body that brings together the public broadcasters of Europe and the Mediterranean basin. We Italians long called it Eurofestival, the chauvinistic French call it Concours Eurovision de la Chanson, and for everyone the short form is Eurovision. Today more than ever it is a global event, with 43 countries taking part in 2016: 42 members of the organising body plus Australia, which is only an associate member of the EBU, being outside the area (last year it was invited by the EBU to celebrate the contest's 60th anniversary because of the large audiences the event draws in that country, and this year it has been invited again by the organisers).

The creator of the event was an Italian: Sergio Pugliese, director of RAI in 1956, who, taking inspiration from Sanremo, wanted to create a European music event. He proposed it to Marcel Bezençon, the Franco-Swiss then director general of the newly formed Eurovision consortium, who put his seal on the idea. Thus was born a music competition with the noble aim of promoting cooperation and friendship among the peoples of Europe, and the rebuilding of a continent torn apart by war, through entertainment and television. And beyond that, far more prosaically, it was also meant to test a simultaneous live broadcast in several countries and to promote the medium of television on the old continent.

Najlepsze Hity Dla Ciebie Vol. 4 Najlepsze Hity Dla Ciebie Vol. 2

Najlepsze hity dla Ciebie vol. 4

CD 3

1. Robin Thicke Feat. Pharrell Williams - Blurred Lines [No Rap Version]
2. Bruno Mars - Locked Out Of Heaven
3. Lady Gaga - Born This Way
4. Taio Cruz Feat. Flo Rida - Hangover
5. Gnarls Barkley - Crazy
6. Ariana Grande Feat. Zedd - Break Free
7. Jessie J. - Price Tag [Clean Edit w/o B.O.B.]
8. Kate Ryan - Runaway
9. John Mamann feat. Kika - Love Life
10. Elaiza - Is It Right
11. Nicki Minaj - Starships
12. Chesney Hawkes - The One And Only
13. Iggy Azalea - Fancy
14. Jessie Ware - Champagne Kisses
15. Robbie Williams - Feel
16. Meredith Brooks - Bitch
17. Bellini - Samba De Janeiro
18. Billy Idol - Cradle Of Love
19. UB40 - (I Can't Help) Falling In Love With You
20. Shaggy - Boombastic

CD 1

1. Alvaro Soler - El Mismo Sol
2. Lost Frequencies feat. Janieck Davey - Reality
3. Imany - Don't Be So Shy (Filatov & Karas Remix)
4. Ellie Goulding - On My Mind
5. Sigala - Easy Love
6. Avicii - For A Better Day
7. Felix Jaehn Feat. Jasmine Thompson - Ain't Nobody (Loves Me Better)
8. Alexandra Stan & Inna feat. Daddy Yankee - We Wanna
9. Axwell /\ Ingrosso - Sun Is Shining
10. Years&Years - Shine
11. Ariana Grande - One Last Time
12. Rico Bernasconi & Tuklan feat. A - Class & Sean Paul - Ebony Eyes
13. Justin Bieber - What Do You Mean?
14. Dimitri Vegas & Like Mike feat. Ne-Yo - Higher Place
15. John Newman - Come And Get It
16. Otilia - Aventura
17. Tove Lo - Moments
18. Basto - Hold You
19. Inna - Bop Bop
20.

Gender and Geopolitics in the Eurovision Song Contest Introduction

Catherine Baker
Lecturer, University of Hull
[email protected]
http://www.suedosteuropa.uni-graz.at/cse/en/baker

Contemporary Southeastern Europe, 2015, 2(1), 74-93

Contemporary Southeastern Europe is an online, peer-reviewed, multidisciplinary journal that publishes original, scholarly, and policy-oriented research on issues relevant to societies in Southeastern Europe. For more information, please contact us at [email protected] or visit our website at www.contemporarysee.org

Introduction

From the vantage point of the early 1990s, when the end of the Cold War not only inspired the discourses of many Eurovision performances but created opportunities for the map of Eurovision participation itself to significantly expand in a short space of time, neither the scale of the contemporary Eurovision Song Contest (ESC) nor the extent to which a field of “Eurovision research” has developed in cultural studies and its related disciplines would have been recognisable. In 1993, when former Warsaw Pact states began to participate in Eurovision for the first time and Yugoslav successor states started to compete in their own right, the contest remained a one-night-per-year theatrical presentation staged in venues that accommodated, at most, a couple of thousand spectators and with points awarded by expert juries from each participating country.
Between 1998 and 2004, Eurovision’s organisers, the European Broadcasting Union (EBU), and the national broadcasters responsible for hosting each edition of the contest expanded it into an ever grander spectacle: hosted in arenas before live audiences of 10,000 or more, with (from 2004) a semi-final system enabling every eligible country and broadcaster to participate each year, and with (between 1998 and 2008) points awarded almost entirely on the basis of telephone voting by audiences in each participating state. -

Eurovision Karaoke

ALBANIA
ALB 06 Zjarr e ftohtë; ALB 07 Hear My Plea; ALB 10 It's All About You; ALB 12 Suus; ALB 13 Identitet; ALB 14 Hersi - One Night's Anger; ALB 15 I’m Alive; ALB 16 Fairytale

ANDORRA
AND 07 Salvem el món

ARMENIA
ARM 07 Anytime you need; ARM 08 Qele Qele; ARM 09 Nor Par (Jan Jan); ARM 10 Apricot Stone; ARM 11 Boom Boom; ARM 13 Lonely Planet; ARM 14 Aram Mp3 - Not Alone; ARM 15 Face the Shadow; ARM 16 LoveWave

AZERBAIJAN
AZE 08 Day after day; AZE 09 Always; AZE 14 Start The Fire; AZE 15 Hour of the Wolf; AZE 16 Miracle

AUSTRIA
AUT 89 Nur ein Lied; AUT 90 Keine Mauern mehr; AUT 04 Du bist; AUT 07 Get a life - get alive; AUT 11 The Secret Is Love; AUT 12 Woki Mit Deim Popo; AUT 13 Shine; AUT 14 Conchita Wurst - Rise Like a Phoenix; AUT 15 I Am Yours; AUT 16 Loin d’Ici

AUSTRALIA
AUS 15 Tonight Again; AUS 16 Sound of Silence

BELGIUM
BEL 86 J'aime la vie; BEL 87 Soldiers of love; BEL 89 Door de wind; BEL 98 Dis oui; BEL 06 Je t'adore; BEL 12 Would You?; BEL 15 Rhythm Inside; BEL 16 What’s the Pressure

BOSNIA AND HERZEGOVINA
BOS 99 Putnici; BOS 06 Lejla; BOS 07 Rijeka bez imena; BOS 08 D Pokušaj DUET VERSION; BOS 08 S Pokušaj; BOS 11 Love In Rewind; BOS 12 Korake Ti Znam; BOS 16 Ljubav Je

BULGARIA
BUL 05 Lorraine; BUL 07 Water; BUL 12 Love Unlimited; BUL 13 Samo Shampioni; BUL 16 If Love Was a Crime

DENMARK
DEN 97 Stemmen i mit liv; DEN 00 Fly on the wings of love; DEN 06 Twist of love; DEN 07 Drama queen; DEN 10 New Tomorrow; DEN 12 Should've Known Better; DEN 13 Only Teardrops

UNITED KINGDOM
UKI 10 That Sounds Good To Me; UKI 11 I Can; UKI 12 Love Will Set You Free; UKI 13 Believe in Me; UKI 14 Molly - Children of the Universe; UKI 15 Still in Love with You; UKI 16 You’re Not Alone; UKI 83 I'm never giving up; UKI 96 Ooh aah..

Eurovision Karaoke

ALBANIA
ALB 07 Hear My Plea; ALB 10 It's All About You; ALB 12 Suus; ALB 13 Identitet; ALB 14 Hersi - One Night's Anger

ARMENIA
ARM 07 Anytime you need; ARM 08 Qele Qele; ARM 09 Nor Par (Jan Jan); ARM 10 Apricot Stone; ARM 11 Boom Boom; ARM 13 Lonely Planet; ARM 14 Aram Mp3 - Not Alone

AZERBAIJAN
AZE 08 Day after day; AZE 09 Always; AZE 14 Start The Fire

AUSTRIA
AUS 89 Nur ein Lied; AUS 90 Keine Mauern mehr; AUS 04 Du bist; AUS 07 Get a life - get alive; AUS 11 The Secret Is Love; AUS 12 Woki Mit Deim Popo; AUS 13 Shine; AUS 14 Conchita Wurst - Rise Like a Phoenix

BELGIUM
BEL 06 Je t'adore; BEL 12 Would You?; BEL 86 J'aime la vie; BEL 87 Soldiers of love; BEL 89 Door de wind; BEL 98 Dis oui

BOSNIA AND HERZEGOVINA
BOS 99 Putnici; BOS 06 Lejla; BOS 07 Rijeka bez imena; BOS 11 Love In Rewind; BOS 12 Korake Ti Znam

UNITED KINGDOM
UKI 83 I'm never giving up; UKI 96 Ooh aah... just a little bit; UKI 04 Hold onto our love; UKI 07 Flying the flag (for you); UKI 10 That Sounds Good To Me; UKI 11 I Can; UKI 12 Love Will Set You Free; UKI 13 Believe in Me; UKI 14 Molly - Children of the Universe

BULGARIA
BUL 05 Lorraine; BUL 07 Water; BUL 12 Love Unlimited; BUL 13 Samo Shampioni

DENMARK
DEN 97 Stemmen i mit liv; DEN 00 Fly on the wings of love; DEN 06 Twist of love; DEN 07 Drama queen; DEN 10 New Tomorrow; DEN 12 Should've Known Better; DEN 13 Only Teardrops

FIN 13 Marry Me; FIN 84 Hengaillaan

FRANCE
FRA 69 Un jour, un enfant; FRA 93 Mama Corsica; FRA 03 Monts et merveilles; FRA 09 Et S'il Fallait Le Faire; FRA 11 Sognu

Karaoke Listing by Song Title

| Title | Artiste |
| --- | --- |
| 3 | Britney Spears |
| 11 | Cassadee Pope |
| 18 | One Direction |
| 22 | Lily Allen |
| 22 | Taylor Swift |
| 679 | Fetty Wap ft Remy Boyz |
| 1234 | Feist |
| 1973 | James Blunt |
| 1994 | Jason Aldean |
| 1999 | Prince |
| #1 Crush | Garbage |
| #thatPOWER | Will.i.Am ft Justin Bieber |
| 03 Bonnie & Clyde | Jay-Z & Beyonce |
| 1 Thing | Amerie |
| 1, 2 Step | Ciara Ft Missy Elliott |
| 10,000 Nights | Alphabeat |
| 10/10 | Paolo Nutini |
| 1-2-3 | Gloria Estefan & Miami Sound Machine |
| 1-2-3 | Len Barry |
| 18 And Life | Skid Row |
| 19-2000 | Gorillaz |
| 2 Become 1 | Spice Girls |
| 2 Hearts | Kylie Minogue |
| 2 Minutes To Midnight | Iron Maiden |
| 2 Pints Of Lager & A Packet Of Crisps Please | Splodgenessabounds |
| 2000 Miles | The Pretenders |
| 2-4-6-8 Motorway | Tom Robinson Band |
| 24K Magic | Bruno Mars |
| 3 Words (Duet Version) | Cheryl Cole ft Will.I.Am |
| 30 Days | The Saturdays |
| 30 Minute Love Affair | Paloma Faith |
| 3AM | Busted |
| 4 In The Morning | Gwen Stefani |
| 5 Colours In Her Hair | McFly |
| 5, 6, 7, 8 | Steps |
| 5-4-3-2-1 | Manfred Mann |
| 57 Chevrolet | Billie Jo Spears |
| 6 Words | Wretch 32 |
| 6345 789 | Blues Brothers |
| 68 Guns | The Alarm |
| 7 Things | Miley Cyrus |
| 7 Years | Lukas Graham |
| 7/11 | Beyonce |
| 74-75 | The Connells |

To Make A Request: Either hand me a completed Karaoke Request Slip or come over and let me know what song you would like to sing. A full up-to-date list can be downloaded on a smart phone from www.southportdj.co.uk/karaoke-dj

Two Different Contractors Will Build Middle Schools

Volume XXXV No. 12 sewaneemessenger.com Friday, April 5, 2019

Two Different Contractors Will Build Middle Schools

by Leslie Lytle, Messenger Staff Writer

At the March 29 meeting, the Franklin County School Board selected Biscan Construction to build the new North Middle School and Southland Constructors to build South Middle School. Of the five contractors bidding, three bid on both schools, Southland among them. The bids by R. G. Anderson and Barton Malow Construction offered a discounted price if awarded the contract for both schools. The combined bid from the contractors selected, $40,588,200, was nearly a million dollars less than the next lowest bidder, Barton Malow, even after taking the discount into account.

Biscan’s $20,258,500 bid includes HVAC for the Huntland School gym, as requested in the bid package. The County Commission allocated $48 million for construction of the middle schools.

“It’s good not to be up against the wall,” said Construction Manager Gary Clardy who pointed out unforeseen costs could arise.

Village Updates

by Leslie Lytle, Messenger Staff Writer

Two years ago Frank Gladu who oversees the Sewanee Village project made a promise “not to cut my beard until we build something.” Gladu will soon get to cut his beard. At the April 2 Sewanee Village update meeting, Gladu announced the long-awaited groundbreaking for the new bookstore along with reviewing other high momentum initiatives, narrowing U.S. Hwy. 41A and construction of a mixed-use grocery and apartment building.

“The bookstore underwent a redesign to conform to the bud-

2 Brothers on the 4Th Floor -Let Me Be Free a Chorus Line -One ABBA -My Love, My Life 2 Chainz Feat

2 Brothers On The 4th Floor - Let Me Be Free
2 Chainz feat. Pharrell Williams - Feds Watching
2 Chainz feat. Wiz Khalifa - We Own It (Fast & Furious)
2 Shy - I'd Love You To Want Me
2 Unlimited - No Limit
20 Fingers - Short Dick Man
20 Fingers feat. Roula - Lick It
257ers - Holz
2Be3 - Partir un jour
2Pac - Dear Mama
2Pac - Changes
2Pac - How Do U Want It
2Pac & Digital Underground - I Get Around
2Pac & Eric Williams - Do For Love
2Pac feat. Dr. Dre & Roger Troutman - California Love
2Pac feat. Dr. Dre & Roger Troutman - California Love (Remix)
A Chorus Line - One
A Chorus Line - Let Me Dance For You
A Chorus Line (Pamela Blair) - Dance: Ten, Looks: Three
A Day To Remember - All I Want
A Fine Frenzy - Almost Lover
A Fine Frenzy - Near To You
A Fine Frenzy - Ashes And Wine
A Flock of Seagulls - I Ran (So Far Away)
A Flock of Seagulls - Wishing (If I Had a Photograph of You)
A Funny Thing Happened On The Way To The Forum - Comedy Tonight
A Great Big World - Already Home
A Great Big World - Rockstar
A Great Big World - You'll Be Okay
A Great Big World - I Really Want It
A Great Big World feat.
ABBA - My Love, My Life
ABBA - One Man, One Woman
ABBA - Eagle
ABBA - When I Kissed The Teacher
ABBA - So Long
ABBA - Under Attack
ABBA - Rock Me
ABBA - Head Over Heels
ABBA - As Good As New
ABBA (Eurovision) - Waterloo
ABC - The Look of Love
ABC - When Smokey Sings
ABC - Poison Arrow
ABC - Be Near Me
Abel - Onderweg

Jury Members List (Preliminary) VERSION 1 - Last Update: 1 May 2015 12:00CEST

Jury members list (preliminary)

VERSION 1 - Last update: 1 May 2015 12:00 CEST

| Country | Allocation | First name | Middle name | Last name | Commonly known as | Gender | Age | Occupation/profession | Short biography (un-edited, as delivered by the participating broadcasters) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Albania | Backup Jury Member | Altin | | Goci | | male | 41 | Art Manager / Musician | Graduated from Academy of Fine Arts for canto. Co founder of the well known Albanian band Ritfolk. Excellent singer of live music. Plays violin, harmonica and guitar. |
| Albania | Jury Member 1 / Chairperson | Bojken | | Lako | | male | 39 | TV and theater director | Started music career in 1993 with the band Fish hook, producer of first album in 1993 King of beers. In 1999 and 2014 runner up at FiK. Many concerts in Albania and abroad. Collaborated with Band Adriatica, now part of Bojken Lako band. |
| Albania | Jury Member 2 | Klodian | | Qafoku | | male | 35 | Composer | Participant in various concerts and contests, winner of several prizes, also in children festivals. Winner of FiK in 2005, participant in ESC 2006. Composer of first Albanian etno musical Life ritual. Worked as etno musicologist at Albanology Study Center. |
| Albania | Jury Member 3 | | | | | | | | |
| Albania | Jury Member 4 | Arta | | Marku | | female | 45 | Journalist | TV moderator of art and cultural shows. Editor in chief, main editor and editor of several important magazines and newspapers in Albania. |
| Albania | Jury Member 5 | Zhani | | Ciko | | male | 69 | Violinist | Former Artistic Director and Director General of Theater of Opera and Ballet of Tirana. Former Director of Artistic Lyceum Jordan Misja. Artistic Director of Symphonic Orchestra of Albanian Radio Television. One of the most well known Albanian musicians. |

President Jean-Claude Juncker Wednesday, 29 June 2016 European Commission Rue De La Loi 200 1049 Brussels Belgium

President Jean-Claude Juncker
European Commission
Rue de la Loi 200
1049 Brussels
Belgium

Wednesday, 29 June 2016

Re. Securing a sustainable future for the European music sector

Dear President Juncker,

As recording artists and songwriters from across Europe and artists who regularly perform in Europe, we believe passionately in the value of music. Music is fundamental to Europe’s culture. It enriches people’s lives and contributes significantly to our economies.

This is a pivotal moment for music. Consumption is exploding. Fans are listening to more music than ever before. Consumers have unprecedented opportunities to access the music they love, whenever and wherever they want to do so. But the future is jeopardised by a substantial “value gap” caused by user upload services such as Google’s YouTube that are unfairly siphoning value away from the music community and its artists and songwriters.

This situation is not just harming today’s recording artists and songwriters. It threatens the survival of the next generation of creators too, and the viability and the diversity of their work. The value gap undermines the rights and revenues of those who create, invest in and own music, and distorts the market place. This is because, while music consumption is at record highs, user upload services are misusing “safe harbour” exemptions. These protections were put in place two decades ago to help develop nascent digital start-ups, but today are being misapplied to corporations that distribute and monetise our works.

Right now there is a unique opportunity for Europe’s leaders to address the value gap.