AI in First-Person Shooter Games FPS AI Architecture Animation Layer

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Resume / Cover Letter / Sample Code Updated May 2009

http://www.enigmasoftware.ca/henry/Resume2009.html Resume / Cover Letter / Sample Code Updated May 2009 Henry Smith [email protected] home: (780) 642-2822 Available September 2009 cell: (780) 884-7044 15 people have recommended Henry Currently in: Edmonton, AB Canada Objective Senior programmer position at a world-class game development studio Interested in GUI programming/design, rapid prototyping, scripting languages, and engine architecture Skills Eight years of game industry experience, plus many more as a hobbyist Expert C++/C programmer Development experience on PC, Mac, Console, Handheld, and Flash platforms Published indie/shareware developer Languages Expert in C++/C, ActionScript 2 Familiar with Ruby, Python, Lua, JavaScript, UnrealScript, XML Exposure to various teaching languages (Scheme, ML, Haskell, Eiffel, Prolog) Tech Familiar with Scaleform GFx, Flash, Unity, STL, Boost, Perforce Exposure to Unreal Engine, NetImmerse/Gamebryo, iPhone, OpenGL Experience BioWare Senior GUI programmer on Dragon Age: Origins Senior Programmer Architected and maintained a GUI framework in C++ and Flash/ActionScript used for all game UI Edmonton, AB Canada Mentored a junior programmer 2004—present Spearheaded a “Study Lunch” group for sharing technical knowledge and expertise Member of the (internal) Technology Architecture Group Worked with many aspects of the game engine including: graphics, input, game-rules, scripting, tools Irrational Games Designed and built several major game systems for a PS2 3rd-person action title, using C++, -

Multi-User Game Development

California State University, San Bernardino CSUSB ScholarWorks Theses Digitization Project John M. Pfau Library 2007 Multi-user game development Cheng-Yu Hung Follow this and additional works at: https://scholarworks.lib.csusb.edu/etd-project Part of the Software Engineering Commons Recommended Citation Hung, Cheng-Yu, "Multi-user game development" (2007). Theses Digitization Project. 3122. https://scholarworks.lib.csusb.edu/etd-project/3122 This Project is brought to you for free and open access by the John M. Pfau Library at CSUSB ScholarWorks. It has been accepted for inclusion in Theses Digitization Project by an authorized administrator of CSUSB ScholarWorks. For more information, please contact [email protected]. MULTI-USER GAME DEVELOPMENT A Project Presented to the Faculty of California State University, San Bernardino In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science by Cheng-Yu Hung June 2007 MULTI-USER GAME DEVELOPMENT A Project Presented to the Faculty of California State University, San Bernardino by Cheng-Yu Hung June 2007 Approved by: Dr. David Turner, Chair, Computer Science Date ABSTRACT In the current game market, the 3D multi-user game is the most popular game. To develop a successful 3D multi-user game, we need 2D artists, 3D artists and programmers to work together and use tools to author the game and a game engine to perform the game. Most of this project is about the 3D model development using tools such as Blender, and integration of the 3D models with a level editor and game engine. -

CUSTOMER ORDER FORM (Net)

PREVIEWS: THE COMIC SHOP’S CATALOG, JAN 2015 CUSTOMER ORDER FORM ORDERS DUE JAN 18th PREVIEWSworld.com Available only from your local comic shop! STAR WARS: “THE FORCE POSTER” BLACK T-SHIRT Preorder now! BIG HERO 6: “BAYMAX BEFORE & AFTER” LIGHT BLUE T-SHIRT Preorder now! GUARDIANS OF THE GALAXY: “HANG ON, ROCKET & GROOT!” T-SHIRT Preorder now! DC HEROES: BATMAN 75TH ANNIVERSARY SYMBOL PX BLACK T-SHIRT Preorder now! CHRONONAUTS #1, IMAGE COMICS; FRANKENSTEIN UNDERGROUND #1, DARK HORSE COMICS; BATMAN: EARTH ONE VOLUME 2 HC, DC COMICS; PASTAWAYS #1, DARK HORSE COMICS; DESCENDER #1, IMAGE COMICS; JEM AND THE HOLOGRAMS #1, IDW PUBLISHING; CONVERGENCE #0, DC COMICS; ALL-NEW HAWKEYE #1, MARVEL COMICS. FEATURED ITEMS COMIC BOOKS & GRAPHIC NOVELS: The Fox #1, ARCHIE COMICS; God Is Dead Volume 4 TP (MR), AVATAR PRESS; The Con Job #1, BOOM! STUDIOS; Bill & Ted’s Most Triumphant Return #1, BOOM! STUDIOS; Mouse Guard: Legends of the Guard Volume 3 #1, BOOM! STUDIOS/ARCHAIA PRESS; Project Superpowers: Blackcross #1, D.E./DYNAMITE ENTERTAINMENT; Angry Youth Comix HC (MR), FANTAGRAPHICS BOOKS; Hellbreak #1 (MR), ONI PRESS; Doctor Who: The Ninth Doctor #1, TITAN COMICS; Penguins of Madagascar Volume 1 TP, TITAN COMICS; Nemo: River of Ghosts HC (MR), TOP SHELF PRODUCTIONS; Ninjak #1, VALIANT ENTERTAINMENT. BOOKS: The Art of John Avon: Journeys To Somewhere Else HC, ART BOOKS; Marvel Avengers: Ultimate Character Guide Updated & Expanded, COMICS; DC Super Heroes: My First Book Of Girl Power Board Book, COMICS. MAGAZINES: Marvel Chess Collection Special #3: Star-Lord & Thanos, EAGLEMOSS; Ace Magazine #1, COMICS; Alter Ego #132, COMICS; Back Issue #80, COMICS; The Walking Dead Magazine #12 (MR), MOVIE/TV. TRADING CARDS: Marvel’s Agents of S.H.I.E.L.D. -

1. Problem Statement

ESCUELA POLITÉCNICA NACIONAL, FACULTAD DE INGENIERIA DE SISTEMAS. DEVELOPMENT OF A TWO-DIMENSIONAL VIDEO GAME THROUGH THE CREATION OF A REUSABLE GRAPHICS ENGINE. PROJECT SUBMITTED PRIOR TO OBTAINING THE DEGREE OF ENGINEER IN INFORMATICS AND COMPUTER SYSTEMS. LUIS FERNANDO ALVAREZ ARTEAGA [email protected] DIRECTOR: ING. XAVIER ARMENDARIZ [email protected] Quito, June 2008. DECLARATION: I, Luis Fernando Alvarez Arteaga, declare under oath that the work described here is my own; that it has not previously been submitted for any degree or professional qualification; and that I have consulted the bibliographic references included in this document. Through this declaration I assign my intellectual property rights in this work to the Escuela Politécnica Nacional, as established by the Intellectual Property Law, its Regulations, and the institutional rules in force. Luis Fernando Alvarez Arteaga. CERTIFICATION: I certify that this work was carried out by Luis Fernando Alvarez Arteaga under my supervision. Xavier Armendáriz, PROJECT DIRECTOR. ACKNOWLEDGEMENTS: First of all I want to thank God, since through a great many events over the course of my studies and my life He showed me that He is the only force that has sustained me and filled me with blessings throughout these years. Second, I want to thank my family, who have been an unconditional support in the development of this project with their prayers and words of encouragement, all the more so in the most difficult moments. I also thank my thesis director, Xavier Armendáriz, Eng., whose professional experience in the field of software development helped this project take shape and be steered in the best possible way. -

Range Rover Sport 2014

RANGE ROVER SPORT 2014 Information Provided by: Twitter Facebook LONDON Page 2 SAN FRANCISCO Page 16 SPECIFICATIONS Page 28 The Range Rover Sport has been taken to another level. It is the most agile, dynamic and responsive Land Rover ever. Its flowing lines, distinctive silhouette and dramatic presence embody the vehicle’s energy and modernity. It simply demands to be driven. Vehicle shown left is Supercharged in Chile. Designed and engineered by Land Rover in the UK, this vehicle has been created without compromise. It is beautifully proportioned with a muscular and contemporary presence. The tapered bodywork, wider track and shorter rear overhang help give the vehicle a powerful, planted stance. While the gentle curves, slimmer lights and sculpted corners denote the precision of its design that is unmistakably Land Rover. Vehicle shown right is Supercharged in Chile. This latest Land Rover has a composed, assertive on-road appearance. Its streamlined front elevation and rearward sloping grille give the vehicle a distinctive and confident road image. With strong flowing lines and styling, the sophisticated design of the vehicle is conveyed without compromising the Range Rover Sport’s eminently capable character. Vehicle shown left and above is Supercharged in Chile. The Range Rover Sport’s interior is contemporary and meticulously fashioned to its muscular character. Superb detailing, strong elegant lines and clean surfaces combine with luxurious soft-touch finishes. -

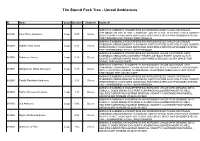

Unreal Ambiences

The Sound Pack Tree - Unreal Ambiences

| ID | Name | Loop | Duration | Channels | Keywords |
|---|---|---|---|---|---|
| 082100 | Time Travel Ambience | Loop | 0:27 | Stereo | AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND BUZZ CHAOS CONTINUUM DIMENSION DREAM ENERGY FLASHBACK FORTH FUTURE GLITCH GLITCHES INSANITY INTERFERENCE NIGHTMARE OVERLOAD PAST SPACE SPACESHIP SWOOSH SYSTEM TIME TRANSMISSION TRAVEL WARP WHOOSH |
| 082200 | Digital Chaos Storm | Loop | 0:15 | Stereo | AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND BUZZ CHAOS CONTINUUM DIMENSION DREAM ENERGY FLASHBACK FORTH FUTURE GLITCH GLITCHES INSANITY INTERFERENCE NIGHTMARE OVERLOAD PAST SPACE SPACESHIP SWOOSH SYSTEM TIME TRANSMISSION TRAVEL WARP WHOOSH |
| 082400 | Nightmare Voices | Loop | 0:26 | Stereo | AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND CHAOS CONTINUUM DARK DIMENSION DREAM DRUGS ENERGY FEAR FLASHBACK FORTH GHOST GLITCH HAUNTED HORROR INSANE MAGIC NIGHTMARE OVERLOAD SCARY SPACE TIME TRAVEL TRIP VOICES WARP |
| 082800 | Disharmonic Ghost Orchestra | Loop | 0:18 | Stereo | AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND CHAOS CONTINUUM DARK DIMENSION DISHARMONIC DREAM DRUGS ENERGY FEAR FLASHBACK FORTH GHOST GLITCH HAUNTED HORROR INSANE MAGIC NIGHTMARE OVERLOAD SCARY SPACE TIME TRAVEL TRIP VOICES WARP |
| 082900 | Painful Flashback Ambience | . | 1:11 | Stereo | AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND BUZZ CHAOS CONTINUUM DIMENSION DREAM ENERGY FLASHBACK FORTH FUTURE GLITCH GLITCHES INSANITY INTERFERENCE NIGHTMARE OVERLOAD PAST SPACE SPACESHIP SWOOSH SYSTEM TIME TRANSMISSION TRAVEL WARP WHOOSH |

AMBIANCE AMBIENCE ATMOSPHERE BACKGROUND CHAOS DARK DEMONIC DEVIL DIABOLIC DOOM DREAM EVIL FEAR FLASHBACK HALLOWEEN -

Creating a Virtual Training Environment for Traffic Accident

Creating a Virtual Training Environment for Traffic Accident Investigation for the Dubai Police Force Ahmed BinSubaih Doctor of Philosophy Department of Computer Science University of Sheffield October 2007 Abstract This research investigates the use of gaming technology (especially game engines) in developing virtual training environments, and comprises two main parts. The first part of the thesis investigates the creation of an architecture that allows a virtual training environment (i.e. a 'game') to be portable between different game engines. The second part of the thesis examines the learning effectiveness of a virtual training environment developed for traffic accident investigators in the Dubai police force. The second part also serves to evaluate the scalability of the architecture created in the first part of the thesis. Current game development addresses different aspects of portability, such as porting assets and using middleware for physics. However, the game itself is not so easily portable. The first part of this thesis addresses this by making the three elements that represent the game's brain portable. These are the game logic, the object model, and the game state, which are collectively referred to in this thesis as the game factor, or G-factor. This separation is achieved by using an architecture called game space architecture (GSA), which combines a variant of the model-view-controller (MVC) pattern to separate the G-factor (the model) from the game engine (the view) with on-the-fly scripting to enable communication through an adapter (the controller). This enables multiple views (i.e. game engines) to exist for the same model (i.e. -

The Zone of 'Becoming': Game, Text and Technicity in Videogame

The Zone of ‘Becoming’: Game, Text and Technicity in Videogame Narratives Souvik Mukherjee A thesis submitted in partial fulfilment of the requirements of Nottingham Trent University for the degree of Doctor of Philosophy. School of Arts and Humanities Nottingham Trent University Clifton Lane Nottingham, NG11 8NS September 2008 Title: The Zone of ‘Becoming’: Game, Text and Technicity in Videogame Narratives Name: Souvik Mukherjee Degree: PhD Abstract: Videogames have emerged as arguably the most prominent form of entertainment in recent years. Their versatility has made them key contributory factors in social, literary, cultural and philosophical discourse; however, critics also tend to see videogames as posing a threat to established cultural parameters. This thesis argues that videogames are firmly grounded in older media and they are important for the development of the notions of textuality, technicity and identity that literary and cultural theories have been debating in recent years. As its point of departure, the thesis takes the contested role of videogames as storytelling media. Challenging the opposition between games and narratives that is posited in earlier research, the framework of the Derridean concept of supplementarity has been adopted to illustrate how the ludic and the narrative inform each other’s core, and yet retain their media-specific identities. It is also vital to consider how the technicity and narrative of games inform their perception as texts. Videogames provide a direct illustration of this but they develop on similar principles in earlier media instead of doing something entirely ‘new’. The multitelic structure of videogames tends to be looked upon as symptomatic of novelty; in reality, however, they illustrate more clearly the inherent nature of telos in all narrative media. -

2020 Buyer's Guide

NEXT ® SALES TEAM CHRYSLER DODGE FIAT JEEP® RAM COMMERCIAL LAW ENFORCEMENT CANADA FLEET OPERATIONS 2020 BUYER’S GUIDE TOUCH THE SELECTION TABS AND BUTTONS THROUGHOUT THE PDF TO NAVIGATE Page 1 CHRYSLER 300 CHRYSLER PACIFICA DODGE CHARGER DODGE DURANGO DODGE GRAND CARAVAN ® DODGE JOURNEY FIAT 500L FIAT 500X JEEP® WRANGLER JEEP GRAND CHEROKEE JEEP CHEROKEE JEEP COMPASS JEEP RENEGADE JEEP GLADIATOR RAM COMMERCIAL UPFIT RAM PROMASTER CITY® RAM PROMASTER® RAM 1500 DT RAM 1500 CLASSIC RAM HEAVY DUTY RAM CHASSIS CAB DODGE CHARGER ENFORCER DODGE DURANGO ENFORCER RAM 1500 CLASSIC SSV RAM PROMASTER POLICE SSV | FCACANADA.CA/FLEET | 1.800.463.3600 Page 2 NATIONAL FLEET TEAM KEN TUCKEY BERNIE MOORCROFT SUJATA YERROW FERNANDO AMILPA Senior Manager, National Commercial National Daily National Government National Fleet and Sales Manager Rental Manager and Sales Manager Commercial Vehicles Fleet Marketing Manager (905) 821-6036 (905) 821-6043 (905) 821-6040 (905) 821-6035 [email protected] [email protected] [email protected] [email protected] JAMIL BOSTANI RANDY KLEIN TBD BHAVNA SETHI Technical Sales and Fleet Operations Manager Fleet Sales Analyst Fleet and Remarketing Service Manager Analyst (519) 973-2060 (905) 821-6198 (905) 821-6007 (905) 821-6046 [email protected] [email protected] [email protected] GLORIA BUSTAMANTE-FAST Government Sales Analyst (905) 821-6176 [email protected] FLEET AND COMMERCIAL SERVICES JOHN BOLTON ANDRÉ DOSTIE CAMIL GINGRAS National Service -

'Game' Portability

A Survey of ‘Game’ Portability Ahmed BinSubaih, Steve Maddock, and Daniela Romano Department of Computer Science University of Sheffield Regent Court, 211 Portobello Street, Sheffield, U.K. +44(0) 114 2221800 {A.BinSubaih, S.Maddock, D.Romano}@dcs.shef.ac.uk Abstract. Many games today are developed using game engines. This development approach supports various aspects of portability. For example games can be ported from one platform to another and assets can be imported into different engines. The portability aspect that requires further examination is the complexity involved in porting a 'game' between game engines. The game elements that need to be made portable are the game logic, the object model, and the game state, which together represent the game's brain. We collectively refer to these as the game factor, or G-factor. This work presents the findings of a survey of 40 game engines to show the techniques they provide for creating the G-factor elements and discusses how these techniques affect G-factor portability. We also present a survey of 30 projects that have used game engines to show how they set the G-factor. Keywords: game development, portability, game engines. 1 Introduction The shift in game development from developing games from scratch to using game engines was first introduced by Quake and marked the advent of the game-independent game engine development approach (Lewis & Jacobson, 2002). In this approach the game engine became “the collection of modules of simulation code that do not directly specify the game’s behaviour (game logic) or game’s environment (level data)” (Wang et al, 2003). -

November 1999

NOVEMBER 1999 GAME DEVELOPER MAGAZINE ON THE FRONT LINE OF GAME INNOVATION GAME DEVELOPER 600 Harrison Street, San Francisco, CA 94107 t: 415.905.2200 f: 415.905.2228 w: www.gdmag.com Publisher Cynthia A. Blair cblair@mfi.com EDITORIAL Editorial Director Alex Dunne [email protected] Managing Editor Kimberley Van Hooser [email protected] Departments Editor Jennifer Olsen [email protected] Art Director Laura Pool lpool@mfi.com Editor-At-Large Chris Hecker [email protected] Contributing Editors Jeff Lander [email protected] Paul Steed [email protected] Omid Rahmat [email protected] Advisory Board Hal Barwood, LucasArts; Noah Falstein, The Inspiracy; Brian Hook, Verant Interactive; Susan Lee-Merrow, Lucas Learning; Mark Miller, Harmonix; Dan Teven, Teven Consulting GAME PLAN Can Subsidized Hardware Save PC Gaming? With one of the largest console launches ever just behind us and at least two more looming on the horizon over the next 18 months, many people are speculating about the features of these next systems. Upon seeing the impressive feature sets of the new consoles, some have raised questions as to the long-term viability of the PC as a gaming platform. It’s tough to look at a console spec sheet, read that it has broadband Internet access, amazing graphics and audio capabilities, a keyboard, DVD [...] inch monitor and a color printer for about $90 more than a Dreamcast. While I’m glad that more PCs are working their way into homes (it certainly will grow the base of casual gamers), there are a couple of aspects to these incentives that don’t bode well for the PC game industry. First, these rebate programs are seeding households with machines barely equipped to play today’s games. The latest graphics and audio hardware won’t be found in a $289 computer, and without these capabilities, the systems offer little in terms of a cutting-edge gaming experience. -

Postmortems from Game Developers.Pdf

POSTMORTEMS FROM Austin Grossman, editor San Francisco, CA • New York, NY • Lawrence, KS Published by CMP Books an imprint of CMP Media LLC Main office: 600 Harrison Street, San Francisco, CA 94107 USA Tel: 415-947-6615; fax: 415-947-6015 Editorial office: 1601 West 23rd Street, Suite 200, Lawrence, KS 66046 USA www.cmpbooks.com email: [email protected] Designations used by companies to distinguish their products are often claimed as trademarks. In all instances where CMP is aware of a trademark claim, the product name appears in initial capital letters, in all capital letters, or in accordance with the vendor’s capitalization preference. Readers should contact the appropriate companies for more complete information on trademarks and trademark registrations. All trademarks and registered trademarks in this book are the property of their respective holders. Copyright © 2003 by CMP Media LLC, except where noted otherwise. Published by CMP Books, CMP Media LLC. All rights reserved. Printed in the United States of America. No part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher; with the exception that the program listings may be entered, stored, and executed in a computer system, but they may not be reproduced for publication. The publisher does not offer any warranties and does not guarantee the accuracy, adequacy, or completeness of any information herein and is not responsible for any errors or omissions. The publisher assumes no liability for damages resulting from the use of the information in this book or for any infringement of the intellectual property rights of third parties that would result from the use of this information.