Synthetic Data Generation for Deep Learning Models

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Perlin Textures in Real Time Using Opengl Antoine Miné, Fabrice Neyret

Perlin Textures in Real Time using OpenGL Antoine Miné, Fabrice Neyret To cite this version: Antoine Miné, Fabrice Neyret. Perlin Textures in Real Time using OpenGL. [Research Report] RR- 3713, INRIA. 1999, pp.18. inria-00072955 HAL Id: inria-00072955 https://hal.inria.fr/inria-00072955 Submitted on 24 May 2006 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET EN AUTOMATIQUE Perlin Textures in Real Time using OpenGL Antoine Mine´ Fabrice Neyret iMAGIS-IMAG, bat C BP 53, 38041 Grenoble Cedex 9, FRANCE [email protected] http://www-imagis.imag.fr/Membres/Fabrice.Neyret/ No 3713 juin 1999 THEME` 3 apport de recherche ISSN 0249-6399 Perlin Textures in Real Time using OpenGL Antoine Miné Fabrice Neyret iMAGIS-IMAG, bat C BP 53, 38041 Grenoble Cedex 9, FRANCE [email protected] http://www-imagis.imag.fr/Membres/Fabrice.Neyret/ Thème 3 — Interaction homme-machine, images, données, connaissances Projet iMAGIS Rapport de recherche n˚3713 — juin 1999 — 18 pages Abstract: Perlin’s procedural solid textures provide for high quality rendering of surface appearance like marble, wood or rock. -

Fast, High Quality Noise

The Importance of Being Noisy: Fast, High Quality Noise Natalya Tatarchuk 3D Application Research Group AMD Graphics Products Group Outline Introduction: procedural techniques and noise Properties of ideal noise primitive Lattice Noise Types Noise Summation Techniques Reducing artifacts General strategies Antialiasing Snow accumulation and terrain generation Conclusion Outline Introduction: procedural techniques and noise Properties of ideal noise primitive Noise in real-time using Direct3D API Lattice Noise Types Noise Summation Techniques Reducing artifacts General strategies Antialiasing Snow accumulation and terrain generation Conclusion The Importance of Being Noisy Almost all procedural generation uses some form of noise If image is food, then noise is salt – adds distinct “flavor” Break the monotony of patterns!! Natural scenes and textures Terrain / Clouds / fire / marble / wood / fluids Noise is often used for not-so-obvious textures to vary the resulting image Even for such structured textures as bricks, we often add noise to make the patterns less distinguishable Ех: ToyShop brick walls and cobblestones Why Do We Care About Procedural Generation? Recent and upcoming games display giant, rich, complex worlds Varied art assets (images and geometry) are difficult and time-consuming to generate Procedural generation allows creation of many such assets with subtle tweaks of parameters Memory-limited systems can benefit greatly from procedural texturing Smaller distribution size Lots of variation -

Generating Realistic City Boundaries Using Two-Dimensional Perlin Noise

Generating realistic city boundaries using two-dimensional Perlin noise Graduation thesis for the Doctoraal program, Computer Science Steven Wijgerse student number 9706496 February 12, 2007 Graduation Committee dr. J. Zwiers dr. M. Poel prof.dr.ir. A. Nijholt ir. F. Kuijper (TNO Defence, Security and Safety) University of Twente Cluster: Human Media Interaction (HMI) Department of Electrical Engineering, Mathematics and Computer Science (EEMCS) Generating realistic city boundaries using two-dimensional Perlin noise Graduation thesis for the Doctoraal program, Computer Science by Steven Wijgerse, student number 9706496 February 12, 2007 Graduation Committee dr. J. Zwiers dr. M. Poel prof.dr.ir. A. Nijholt ir. F. Kuijper (TNO Defence, Security and Safety) University of Twente Cluster: Human Media Interaction (HMI) Department of Electrical Engineering, Mathematics and Computer Science (EEMCS) Abstract Currently, during the creation of a simulator that uses Virtual Reality, 3D content creation is by far the most time consuming step, of which a large part is done by hand. This is no different for the creation of virtual urban environments. In order to speed up this process, city generation systems are used. At re-lion, an overall design was specified in order to implement such a system, of which the first step is to automatically create realistic city boundaries. Within the scope of a research project for the University of Twente, an algorithm is proposed for this first step. This algorithm makes use of two-dimensional Perlin noise to fill a grid with random values. After applying a transformation function, to ensure a minimum amount of clustering, and a threshold mech- anism to the grid, the hull of the resulting shape is converted to a vector representation. -

CMSC 425: Lecture 14 Procedural Generation: Perlin Noise

CMSC 425 Dave Mount CMSC 425: Lecture 14 Procedural Generation: Perlin Noise Reading: The material on Perlin Noise based in part by the notes Perlin Noise, by Hugo Elias. (The link to his materials seems to have been lost.) This is not exactly the same as Perlin noise, but the principles are the same. Procedural Generation: Big game companies can hire armies of artists to create the immense content that make up the game's virtual world. If you are designing a game without such extensive resources, an attractive alternative for certain natural phenomena (such as terrains, trees, and atmospheric effects) is through the use of procedural generation. With the aid of a random number generator, a high quality procedural generation system can produce remarkably realistic models. Examples of such systems include terragen (see Fig. 1(a)) and speedtree (see Fig. 1(b)). terragen speedtree (a) (b) Fig. 1: (a) A terrain generated by terragen and (b) a scene with trees generated by speedtree. Before discussing methods for generating such interesting structures, we need to begin with a background, which is interesting in its own right. The question is how to construct random noise that has nice structural properties. In the 1980's, Ken Perlin came up with a powerful and general method for doing this (for which he won an Academy Award!). The technique is now widely referred to as Perlin Noise (see Fig. 2(a)). A terrain resulting from applying this is shown in Fig. 2(b). (The terragen software uses randomized methods like Perlin noise as a starting point for generating a terrain and then employs additional operations such as simulated erosion, to achieve heightened realism.) Perlin Noise: Natural phenomena derive their richness from random variations. -

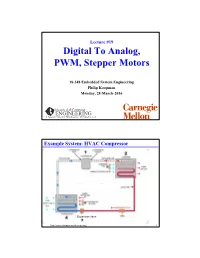

Digital to Analog, PWM, Stepper Motors

Lecture #19 Digital To Analog, PWM, Stepper Motors 18-348 Embedded System Engineering Philip Koopman Monday, 28-March-2016 Electrical& Computer ENGINEERING © Copyright 2006-2016, Philip Koopman, All Rights Reserved Example System: HVAC Compressor Expansion Valve [http://www.solarpowerwindenergy.org] 2 HVAC Embedded Control Compressors (reciprocating & scroll) • Smart loading and unloading of compressor – Want to minimize motor turn on/turn off cycles – May involve bypassing liquid so compressor keeps running but doesn’t compress • Variable speed for better output temperature control • Diagnostics and prognostics – Prevent equipment damage (e.g., liquid entering compressor, compressor stall) – Predict equipment failures (e.g., low refrigerant, motor bearings wearing out) Expansion Valve • Smart control of amount of refrigerant evaporated – Often a stepper motor • Diagnostics and prognostics – Low refrigerant, icing on cold coils, overheating of hot coils System coordination • Coordinate expansion valve and compressor operation • Coordinate multiple compressors • Next lecture – talk about building-level system level diagnostics & coordination 3 Where Are We Now? Where we’ve been: • Interrupts, concurrency, scheduling, RTOS Where we’re going today: • Analog Output Where we’re going next: • Analog Input •Human I/O • Very gentle introduction to control •… • Test #2 and last project demo 4 Preview Digital To Analog Conversion • Example implementation • Understanding performance • Low pass filters Waveform encoding PWM • Digital way -

State of the Art in Procedural Noise Functions

EUROGRAPHICS 2010 / Helwig Hauser and Erik Reinhard STAR – State of The Art Report State of the Art in Procedural Noise Functions A. Lagae1,2 S. Lefebvre2,3 R. Cook4 T. DeRose4 G. Drettakis2 D.S. Ebert5 J.P. Lewis6 K. Perlin7 M. Zwicker8 1Katholieke Universiteit Leuven 2REVES/INRIA Sophia-Antipolis 3ALICE/INRIA Nancy Grand-Est / Loria 4Pixar Animation Studios 5Purdue University 6Weta Digital 7New York University 8University of Bern Abstract Procedural noise functions are widely used in Computer Graphics, from off-line rendering in movie production to interactive video games. The ability to add complex and intricate details at low memory and authoring cost is one of its main attractions. This state-of-the-art report is motivated by the inherent importance of noise in graphics, the widespread use of noise in industry, and the fact that many recent research developments justify the need for an up-to-date survey. Our goal is to provide both a valuable entry point into the field of procedural noise functions, as well as a comprehensive view of the field to the informed reader. In this report, we cover procedural noise functions in all their aspects. We outline recent advances in research on this topic, discussing and comparing recent and well established methods. We first formally define procedural noise functions based on stochastic processes and then classify and review existing procedural noise functions. We discuss how procedural noise functions are used for modeling and how they are applied on surfaces. We then introduce analysis tools and apply them to evaluate and compare the major approaches to noise generation. -

Networking, Noise

Final TAs • Jeff – Ben, Carmen, Audrey • Tyler – Ruiqi – Mason • Ben – Loudon – Jordan, Nick • David – Trent – Vijay Final Design Checks • Optional this week • Required for Final III Playtesting Feedback • Need three people to playtest your game – One will be in class – Two will be out of class (friends, SunLab, etc.) • Should watch your victim volunteer play your game – Don’t comment unless necessary – Take notes – Pay special attention to when the player is lost and when the player is having the most fun Networking STRATEGIES The Illusion • All players are playing in real-time on the same machine • This isn’t possible • We need to emulate this as much as possible The Illusion • What the player should see: – Consistent game state – Responsive controls – Difficult to cheat • Things working against us: – Game state > bandwidth – Variable or high latency – Antagonistic users Send the Entire World! • Players take turns modifying the game world and pass it back and forth • Works alright for turn-based games • …but usually it’s bad – RTS: there are a million units – FPS: there are a million bullets – Fighter: timing is crucial Modeling the World • If we’re sending everything, we’re modeling the world as if everything is equally important Player 1 Player 2 – But it really isn’t! My turn Not my turn Read-only – Composed of entities, only World some of which react to the StateWorld 1 player • We need a better model to solve these problems Client-Server Model • One process is the authoritative server Player 1 (server) Player 2 (client) – Now we -

Tile-Based Method for Procedural Content Generation

Tile-based Method for Procedural Content Generation Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By David Maung Graduate Program in Computer Science and Engineering The Ohio State University 2016 Dissertation Committee: Roger Crawfis, Advisor; Srinivasan Parthasarathy; Kannan Srinivasan; Ken Supowit Copyright by David Maung 2016 Abstract Procedural content generation for video games (PCGG) is a growing field due to its benefits of reducing development costs and adding replayability. While there are many different approaches to PCGG, I developed a body of research around tile-based approaches. Tiles are versatile and can be used for materials, 2D game content, or 3D game content. They may be seamless such that a game player cannot perceive that game content was created with tiles. Tile-based approaches allow localized content and semantics while being able to generate infinite worlds. Using techniques such as aperiodic tiling and spatially varying tiling, we can guarantee these infinite worlds are rich playable experiences. My research into tile-based PCGG has led to results in four areas: 1) development of a tile-based framework for PCGG, 2) development of tile-based bandwidth limited noise, 3) development of a complete tile-based game, and 4) application of formal languages to generation and evaluation models in PCGG. ii Vita 2009................................................................B.S. Computer Science, San Diego State -

Procedural Generation of Infinite Terrain from Real-World Elevation

Journal of Computer Graphics Techniques Vol. 3, No. 1, 2014 http://jcgt.org Designer Worlds: Procedural Generation of Infinite Terrain from Real-World Elevation Data Ian Parberry University of North Texas Figure 1.A 180◦ panorama of terrain generated using elevation data from Utah. Abstract The standard way to procedurally generate random terrain for video games and other applica- tions is to post-process the output of a fast noise generator such as Perlin noise. Tuning the post-processing to achieve particular types of terrain requires game designers to be reason- ably well-trained in mathematics. A well-known variant of Perlin noise called value noise is used in a process accessible to designers trained in geography to generate geotypical terrain based on elevation statistics drawn from widely available sources such as the United States Geographical Service. A step-by-step process for downloading and creating terrain from real- world USGS elevation data is described, and an implementation in C++ is given. 1. Introduction Terrain generation is an example of what is known in the game industry as procedu- ral content generation. Procedural content generation needs to have three important properties. First, it needs to be fast, meaning that it has to use only a fraction of the computing power on a current-generation computer. Second, it needs to be both ran- dom and structured so that it creates content that is varied and interesting. Third, it needs to be controllable in a natural and intuitive way. As noted in the excellent survey paper by Smelik et al. [2009], procedural terrain generation is often based on fractal noise generators such as Perlin noise [Perlin 1985; Perlin 2002] (for more details see, for example, the book by Ebert et al. -

A Cellular Texture Basis Function

A Cellular Texture Basis Function Steven Worley 1 ABSTRACT In this paper, we propose a new set of related texture basis func- tions. They are based on scattering ªfeature pointsº throughout 3 Solid texturing is a powerful way to add detail to the surface of < and building a scalar function based on the distribution of the rendered objects. Perlin's ªnoiseº is a 3D basis function used in local points. The use of distributed points in space for texturing some of the most dramatic and useful surface texture algorithms. is not new; ªbombingº is a technique which places geometric fea- We present a new basis function which complements Perlin noise, tures such as spheres throughout space, which generates patterns based on a partitioning of space into a random array of cells. We on surfaces that cut through the volume of these features, forming have used this new basis function to produce textured surfaces re- polkadots, for example. [9, 5] This technique is not a basis func- sembling ¯agstone-like tiled areas, organic crusty skin, crumpled tion, and is signi®cantly less useful than noise. Lewis also used paper, ice, rock, mountain ranges, and craters. The new basis func- points scattered throughout space for texturing. His method forms a tion can be computed ef®ciently without the need for precalculation basis function, but it is better described as an alternative method of or table storage. generating a noise basis than a new basis function with a different appearance.[3] In this paper, we introduce a new texture basis function that has INTRODUCTION interesting behavior, and can be evaluated ef®ciently without any precomputation. -

Coherent Noise

Coherent Noise User manual Table of Contents Introduction...........................................................................................5 Chapter I. .............................................................................................6 Noise Basics........................................................................................6 Combining Noise. ................................................................................8 CoherentNoise library...........................................................................9 Chapter II............................................................................................12 Noise generators................................................................................12 Generator base class.......................................................................12 ValueNoise.....................................................................................13 GradientNoise................................................................................14 ValueNoise2D and GradientNoise2D...................................................16 Constant.......................................................................................16 Function........................................................................................17 Cache...........................................................................................17 Patterns........................................................................................18 Cylinders....................................................................................18 -

Parameterized Melody Generation with Autoencoders and Temporally-Consistent Noise

Parameterized Melody Generation with Autoencoders and Temporally-Consistent Noise Aline Weber, Lucas N. Alegre Jim Torresen Bruno C. da Silva Institute of Informatics Department of Informatics; RITMO Institute of Informatics Federal Univ. of Rio Grande do Sul University of Oslo Federal Univ. of Rio Grande do Sul Porto Alegre, Brazil Oslo, Norway Porto Alegre, Brazil {aweber, lnalegre}@inf.ufrgs.br jimtoer@ifi.uio.no [email protected] ABSTRACT of possibilities when composing|for instance, by suggesting We introduce a machine learning technique to autonomously a few possible novel variations of a base melody informed generate novel melodies that are variations of an arbitrary by the musician. In this paper, we propose and evaluate a base melody. These are produced by a neural network that machine learning technique to generate new melodies based ensures that (with high probability) the melodic and rhyth- on a given arbitrary base melody, while ensuring that it mic structure of the new melody is consistent with a given preserves both properties of the original melody and gen- set of sample songs. We train a Variational Autoencoder eral melodic and rhythmic characteristics of a given set of network to identify a low-dimensional set of variables that sample songs. allows for the compression and representation of sample Previous works have attempted to achieve this goal by songs. By perturbing these variables with Perlin Noise| deploying different types of machine learning techniques. a temporally-consistent parameterized noise function|it is Methods exist, e.g., that interpolate pairs of melodies pro- possible to generate smoothly-changing novel melodies.