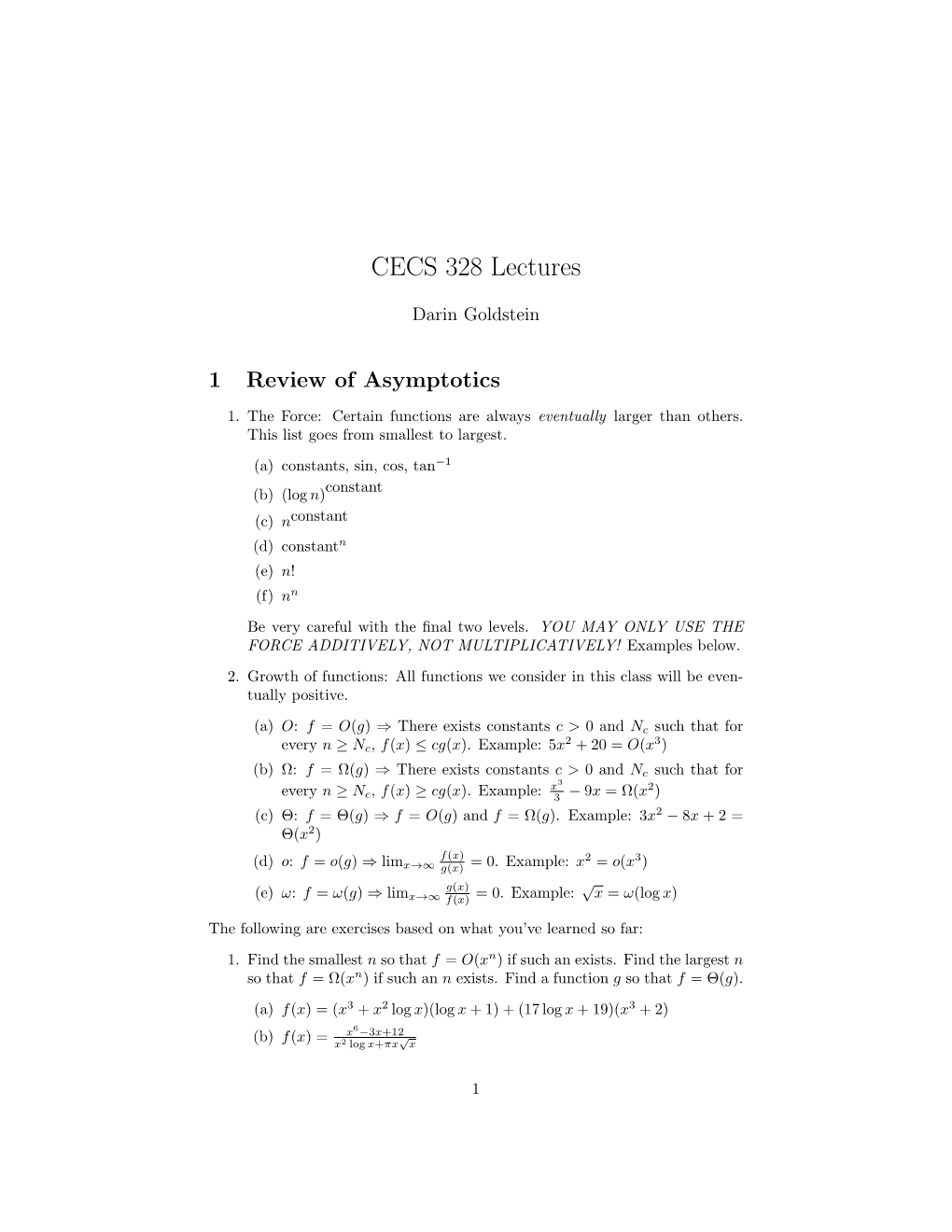

CECS 328 Lectures

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

-

Reproducibility and Pseudo-Determinism in Log-Space

Reproducibility and Pseudo-determinism in Log-Space, by Ofer Grossman, S.B., Massachusetts Institute of Technology (2017). Submitted to the Department of Electrical Engineering and Computer Science on May 15, 2020, in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, May 2020. © Massachusetts Institute of Technology 2020. All rights reserved. Author: Department of Electrical Engineering and Computer Science, May 15, 2020. Certified by: Shafi Goldwasser, RSA Professor of Electrical Engineering and Computer Science, Thesis Supervisor. Accepted by: Leslie A. Kolodziejski, Professor of Electrical Engineering and Computer Science, Chair, Department Committee on Graduate Students. Abstract: A curious property of randomized log-space search algorithms is that their outputs are often longer than their workspace. This leads to the question: how can we reproduce the results of a randomized log space computation without storing the output or randomness verbatim? Running the algorithm again with new -

Efficient Algorithms with Asymmetric Read and Write Costs

Efficient Algorithms with Asymmetric Read and Write Costs. Guy E. Blelloch¹, Jeremy T. Fineman², Phillip B. Gibbons¹, Yan Gu¹, and Julian Shun³ (¹ Carnegie Mellon University, ² Georgetown University, ³ University of California, Berkeley). Abstract: In several emerging technologies for computer memory (main memory), the cost of reading is significantly cheaper than the cost of writing. Such asymmetry in memory costs poses a fundamentally different model from the RAM for algorithm design. In this paper we study lower and upper bounds for various problems under such asymmetric read and write costs. We consider both the case in which all but O(1) memory has asymmetric cost, and the case of a small cache of symmetric memory. We model both cases using the (M, ω)-ARAM, in which there is a small (symmetric) memory of size M and a large unbounded (asymmetric) memory, both random access, and where reading from the large memory has unit cost, but writing has cost ω ≫ 1. For FFT and sorting networks we show a lower bound cost of Ω(ωn log_{ωM} n), which indicates that it is not possible to achieve asymptotic improvements with cheaper reads when ω is bounded by a polynomial in M. Moreover, there is an asymptotic gap (of min(ω, log n)/log(ωM)) between the cost of sorting networks and comparison sorting in the model. This contrasts with the RAM, and most other models, in which the asymptotic costs are the same. We also show a lower bound for computations on an n × n diamond DAG of Ω(ωn²/M) cost, which indicates no asymptotic improvement is achievable with fast reads. -

Tarjan Transcript Final with Timestamps

A.M. Turing Award Oral History Interview with Robert (Bob) Endre Tarjan by Roy Levin San Mateo, California July 12, 2017 Levin: My name is Roy Levin. Today is July 12th, 2017, and I’m in San Mateo, California at the home of Robert Tarjan, where I’ll be interviewing him for the ACM Turing Award Winners project. Good afternoon, Bob, and thanks for spending the time to talk to me today. Tarjan: You’re welcome. Levin: I’d like to start by talking about your early technical interests and where they came from. When do you first recall being interested in what we might call technical things? Tarjan: Well, the first thing I would say in that direction is my mom took me to the public library in Pomona, where I grew up, which opened up a huge world to me. I started reading science fiction books and stories. Originally, I wanted to be the first person on Mars, that was what I was thinking, and I got interested in astronomy, started reading a lot of science stuff. I got to junior high school and I had an amazing math teacher. His name was Mr. Wall. I had him two years, in the eighth and ninth grade. He was teaching the New Math to us before there was such a thing as “New Math.” He taught us Peano’s axioms and things like that. It was a wonderful thing for a kid like me who was really excited about science and mathematics and so on. The other thing that happened was I discovered Scientific American in the public library and started reading Martin Gardner’s columns on mathematical games and was completely fascinated. -

Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber

Outline • Chapter 19: Security (cont) • A Method for Obtaining Digital Signatures and Public-Key Cryptosystems, Ronald L. Rivest, Adi Shamir, and Leonard M. Adleman. Communications of the ACM 21, 2 (Feb. 1978) – RSA Algorithm – First practical public key crypto system • Authentication in Distributed Systems: Theory and Practice, Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber – Butler Lampson (MSR): he was one of the designers of the SDS 940 time-sharing system, the Alto personal distributed computing system, the Xerox 9700 laser printer, two-phase commit protocols, the Autonet LAN, and several programming languages – Martin Abadi (Bell Labs) – Michael Burrows, Edward Wobber (DEC/Compaq/HP SRC). Oct-21-03, CSE 542: Operating Systems. Encryption • Properties of a good encryption technique: – Relatively simple for authorized users to encrypt and decrypt data. – Encryption scheme depends not on the secrecy of the algorithm but on a parameter of the algorithm called the encryption key. – Extremely difficult for an intruder to determine the encryption key. Strength • Strength of a crypto system depends on the strengths of the keys • Computers get faster – keys have to become harder to keep up • If it takes more effort to break a code than it is worth, it is okay – Transferring money from my bank to my credit card and Citibank transferring billions of dollars with another bank should not have the same key strength. Encryption methods • Symmetric cryptography – Sender and receiver know the secret key (a priori) • Fast encryption, but key exchange should happen outside the system • Asymmetric cryptography – Each person maintains two keys, public and private • M ≡ PrivateKey(PublicKey(M)) • M ≡ PublicKey(PrivateKey(M)) – Public part is available to anyone, private part is only known to the sender – E.g. -
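
The two identities in the excerpt above, M ≡ PrivateKey(PublicKey(M)) and M ≡ PublicKey(PrivateKey(M)), can be checked with a toy RSA key pair. The following is a minimal sketch, not the slides' own example: the primes 61 and 53, the public exponent 17, and the helper names `public_key` and `private_key` are assumptions chosen for readability, and numbers this small provide no security.

```python
# Toy RSA round trip. All parameters are illustrative assumptions,
# not values taken from the slides; real keys use far larger primes.
P, Q = 61, 53              # two small primes (assumed for the example)
N = P * Q                  # public modulus
PHI = (P - 1) * (Q - 1)    # Euler's totient of N
E = 17                     # public exponent, coprime to PHI
D = pow(E, -1, PHI)        # private exponent: modular inverse (Python 3.8+)


def public_key(m: int) -> int:
    """Apply the public operation m^E mod N."""
    return pow(m, E, N)


def private_key(m: int) -> int:
    """Apply the private operation m^D mod N."""
    return pow(m, D, N)


message = 65  # any integer in the range 0 <= message < N
# Both orders of application recover the original message.
assert private_key(public_key(message)) == message
assert public_key(private_key(message)) == message
```

Applying the public operation first corresponds to encryption; applying the private operation first corresponds to signing.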

Great Ideas in Computing

Great Ideas in Computing, University of Toronto, CSC196 Winter/Spring 2019. Week 6: October 19-23 (2020). Announcements: I added one final question to Assignment 2. It concerns search engines. The question may need a little clarification. I have also clarified question 1, where I have defined (in a non standard way) the meaning of a strict binary search tree, which is what I had in mind. Please answer the question for a strict binary search tree. If you answered the quiz question for a non strict binary search tree with a proper explanation, you will get full credit. Quick poll: how many students feel that Q1 was a fair quiz? A1 has now been graded by Marta. I will scan over the assignments and hope to release the grades later today. If you plan to make a regrading request, you have up to one week to submit your request. You must specify clearly why you feel that a question may not have been graded fairly. In general, students did well, which is what I expected. Agenda for the week: We will continue to discuss search engines. We ended on what is slide 10 (in Week 5) on Friday and we will continue with where we left off. I was surprised that in our poll, most students felt that the people advocating the “AI view” of search “won the debate”, whereas today I will try to argue that the people (e.g., Salton and others) advocating the “combinatorial, algebraic, statistical view” won the debate as to current search engines. -

Rivest, Shamir, and Adleman Receive 2002 Turing Award, Volume 50

Rivest, Shamir, and Adleman Receive 2002 Turing Award. (Photo caption: Ronald L. Rivest, Adi Shamir, Leonard M. Adleman.) The Association for Computing Machinery (ACM) has named RONALD L. RIVEST, ADI SHAMIR, and LEONARD M. ADLEMAN as winners of the 2002 A. M. Turing Award, considered the “Nobel Prize of Computing”, for their contributions to public key cryptography. The Turing Award carries a $100,000 prize, with funding provided by Intel Corporation. As researchers at the Massachusetts Institute of Technology in 1977, the team developed the RSA code, which has become the foundation for an entire generation of technology security products. It has also inspired important work in both theoretical computer science and mathematics. … Cryptography and Information Security Group. He received a B.A. in mathematics from Yale University and a Ph.D. in computer science from Stanford University. Shamir is the Borman Professor in the Applied Mathematics Department of the Weizmann Institute of Science in Israel. He received a B.S. in mathematics from Tel Aviv University and a Ph.D. in computer science from the Weizmann Institute. Adleman is the Distinguished Henry Salvatori Professor of Computer Science and Professor of Molecular Biology at the University of Southern California. He earned a B.S. in mathematics at the University of California, Berkeley, and a Ph.D. in computer science, also at Berkeley. The ACM presented the Turing Award on June 7, 2003, in conjunction with the Federated Computing Research Conference in San Diego, California. The award was named for Alan M. Turing, the British mathematician who articulated the mathematical foundation and limits of computing and who was a -

Fault-Tolerant Distributed Computing in Full-Information Networks

Fault-Tolerant Distributed Computing in Full-Information Networks. Shafi Goldwasser* (CSAIL, MIT, Cambridge MA, USA), Elan Pavlov (MIT, Cambridge MA, USA), Vinod Vaikuntanathan* (CSAIL, MIT, Cambridge MA, USA). December 15, 2006. Abstract: In this paper, we use random-selection protocols in the full-information model to solve classical problems in distributed computing. Our main results are the following: • An O(log n)-round randomized Byzantine Agreement (BA) protocol in a synchronous full-information network tolerating t < n/(3+ε) faulty players (for any constant ε > 0). As such, our protocol is asymptotically optimal in terms of fault-tolerance. • An O(1)-round randomized BA protocol in a synchronous full-information network tolerating t = O(n/(log n)^1.58) faulty players. • A compiler that converts any randomized protocol Π_in designed to tolerate t fail-stop faults, where the source of randomness of Π_in is an SV-source, into a protocol Π_out that tolerates min(t, n/3) Byzantine faults. If the round-complexity of Π_in is r, that of Π_out is O(r log* n). Central to our results is the development of a new tool, “audited protocols”. Informally, “auditing” is a transformation that converts any protocol that assumes built-in broadcast channels into one that achieves a slightly weaker guarantee, without assuming broadcast channels. We regard this as a tool of independent interest, which could potentially find applications in the design of simple and modular randomized distributed algorithms. (* Supported by NSF grants CNS-0430450 and CCF0514167.) 1 Introduction: The problem of how n players, some of whom may be faulty, can make a common random selection in a set, has received much attention. -

Three Puzzles on Mathematics, Computation, and Games

Proc. Int. Cong. of Math. – 2018, Rio de Janeiro, Vol. 1 (551–606). THREE PUZZLES ON MATHEMATICS, COMPUTATION, AND GAMES. Gil Kalai. Abstract: In this lecture I will talk about three mathematical puzzles involving mathematics and computation that have preoccupied me over the years. The first puzzle is to understand the amazing success of the simplex algorithm for linear programming. The second puzzle is about errors made when votes are counted during elections. The third puzzle is: are quantum computers possible? 1 Introduction: The theory of computing and computer science as a whole are precious resources for mathematicians. They bring up new questions, profound new ideas, and new perspectives on classical mathematical objects, and serve as new areas for applications of mathematics and mathematical reasoning. In my lecture I will talk about three mathematical puzzles involving mathematics and computation (and, at times, other fields) that have preoccupied me over the years. The connection between mathematics and computing is especially strong in my field of combinatorics, and I believe that being able to personally experience the scientific developments described here over the past three decades may give my description some added value. For all three puzzles I will try to describe in some detail both the large picture at hand, and zoom in on topics related to my own work. Puzzle 1: What can explain the success of the simplex algorithm? Linear programming is the problem of maximizing a linear function subject to a system of linear inequalities. The set of solutions to the linear inequalities is a convex polyhedron P. -

Cryptography: DH And

Key Exchange. Secure Software Systems, Fall 2018. Challenge – Exchanging Keys: Exchanges = n(n − 1)/2 = 6(6 − 1)/2 = 15. The more parties in communication, the more keys that need to be securely exchanged. Do we have to use out-of-band methods (e.g., phone)? Key Exchange • Insecure communications channel • Eve can see everything! • Alice and Bob agree on a shared secret (“key”) that Eve doesn’t know • Despite Eve seeing everything! Whitfield Diffie and Martin Hellman, “New directions in cryptography,” in IEEE Transactions on Information Theory, vol. 22, no. 6, Nov 1976. Proposed public key cryptography. Diffie-Hellman key exchange. Diffie-Hellman Color Analogy: (1) It’s easy to mix two colors. (2) Mixing two or more colors in a different order results in the same color. (3) Mixing colors is one-way (impossible to determine which colors went in to produce the final result). https://www.crypto101.io/ Diffie-Hellman Color Analogy (Alice, Eve, Bob): (1) Start with public color ▇ – share across network (2) Alice picks secret color ▇ and mixes it to get ▇ (3) Bob picks secret color ▇ and mixes it to get ▇. Eve can’t calculate ▇ !! (secret keys were never shared) (4) Alice and Bob exchange their mixed colors (▇,▇) (5) Eve will -
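
The pairwise key count and the color-mixing analogy in the excerpt above map directly onto modular exponentiation. The following is a minimal sketch under stated assumptions: the prime 23, the generator 5, the secrets 6 and 15, and all function names are hypothetical textbook-sized values chosen for illustration, not taken from the slides, and they are far too small for real use.

```python
# Diffie-Hellman sketch mirroring the color analogy. All parameters and
# helper names are illustrative assumptions, not values from the slides.

def pairwise_keys(parties: int) -> int:
    """Number of distinct keys if every pair of parties shares one."""
    return parties * (parties - 1) // 2


def dh_public(generator: int, secret: int, prime: int) -> int:
    """'Mix' the public color with a secret color: g^secret mod p."""
    return pow(generator, secret, prime)


def dh_shared(other_public: int, secret: int, prime: int) -> int:
    """Mix the other party's public value with our own secret."""
    return pow(other_public, secret, prime)


PRIME, GENERATOR = 23, 5           # public parameters (the "public color")
alice_secret, bob_secret = 6, 15   # private values, never sent on the wire

alice_public = dh_public(GENERATOR, alice_secret, PRIME)  # sent to Bob
bob_public = dh_public(GENERATOR, bob_secret, PRIME)      # sent to Alice

# Each side combines the value it received with its own secret.
alice_key = dh_shared(bob_public, alice_secret, PRIME)
bob_key = dh_shared(alice_public, bob_secret, PRIME)

# Mixing in either order yields the same result: (g^b)^a = (g^a)^b mod p.
assert alice_key == bob_key
```

Eve observes only `PRIME`, `GENERATOR`, `alice_public`, and `bob_public`; recovering either secret from those values is the discrete logarithm problem, which is believed to be hard for suitably large parameters.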

Race in the Age of Obama Making America More Competitive

American Academy of Arts & Sciences, Summer 2011, www.amacad.org. Bulletin, vol. LXIV, no. 4. Race in the Age of Obama: Gerald Early, Jeffrey B. Ferguson, Korina Jocson, and David A. Hollinger. Making America More Competitive, Innovative, and Healthy: Harvey V. Fineberg, Cherry A. Murray, and Charles M. Vest. ALSO: Social Science and the Alternative Energy Future; Philanthropy in Public Education; Commission on the Humanities and Social Sciences; Reflections: John Lithgow; Breaking the Code; Around the Country. Upcoming Events. Induction Weekend–Cambridge: September 30–Welcome Reception for New Members; October 1–Induction Ceremony; October 2–Symposium: American Institutions and a Civil Society. Partial List of Speakers: David Souter (Supreme Court of the United States), Maj. Gen. Gregg Martin (United States Army War College), and David M. Kennedy (Stanford University). OCTOBER 25th, Stated Meeting–Stanford: Perspectives on the Future of Nuclear Power after Fukushima. Introduction: Scott D. Sagan (Stanford University). Speakers: Wael Al Assad (League of Arab States) and Jayantha Dhanapala (Pugwash Conferences on Science and World Affairs). OCTOBER 27th, Stated Meeting–Berkeley: Healing the Troubled American Economy. Introduction: Robert J. Birgeneau (University of California, Berkeley). Speakers: Christina Romer (University of California, Berkeley) and David H. … NOVEMBER 12th, Stated Meeting–Chicago, in collaboration with the Chicago Humanities Festival: WikiLeaks and the First Amendment. Introduction: John A. Katzenellenbogen (University of Illinois at Urbana-Champaign). Speakers: Geoffrey R. Stone (University of Chicago Law School), Richard A. Posner (U.S. Court of Appeals for the Seventh Circuit), Judith Miller (formerly of The New York Times), and Gabriel Schoenfeld (Hudson Institute; Witherspoon Institute). DECEMBER 7th, Stated Meeting–Stanford -

Magic Adversaries Versus Individual Reduction: Science Wins Either Way ?

Magic Adversaries Versus Individual Reduction: Science Wins Either Way ? Yi Deng¹,² (¹ SKLOIS, Institute of Information Engineering, CAS, Beijing, P.R. China; ² State Key Laboratory of Cryptology, P. O. Box 5159, Beijing, 100878, China). Abstract. We prove that, assuming there exists an injective one-way function f, at least one of the following statements is true: – (Infinitely-often) Non-uniform public-key encryption and key agreement exist; – The Feige-Shamir protocol instantiated with f is distributional concurrent zero knowledge for a large class of distributions over any OR NP-relations with small distinguishability gap. The questions of whether we can achieve these goals are known to be subject to black-box limitations. Our win-win result also establishes an unexpected connection between the complexity of public-key encryption and the round-complexity of concurrent zero knowledge. As the main technical contribution, we introduce a dissection procedure for concurrent adversaries, which enables us to transform a magic concurrent adversary that breaks the distributional concurrent zero knowledge of the Feige-Shamir protocol into non-black-box constructions of (infinitely-often) public-key encryption and key agreement. This dissection of complex algorithms gives insight into the fundamental gap between the known universal security reductions/simulations, in which a single reduction algorithm or simulator works for all adversaries, and the natural security definitions (that are sufficient for almost all cryptographic primitives/protocols), which switch the order of qualifiers and only require that for every adversary there exists an individual reduction or simulator. 1 Introduction: The seminal work of Impagliazzo and Rudich [IR89] provides a methodology for studying the limitations of black-box reductions.