CERN 82-12 23 November 1982

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

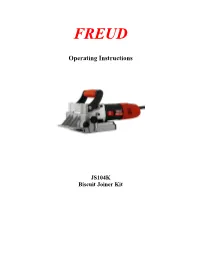

Operating Instructions

FREUD Operating Instructions JS104K Biscuit Joiner Kit Contents Safety General Safety Rules Additional Safety Rules for Biscuit Joiners Functional Description and Specifications Symbols Parts and Feature Diagram Specifications Operating Instructions Prior to Operation Changing the Blade Using the Dust Bag Adjusting Cutting Depth Fence Adjustments Starting the Tool Making a Cut Machine Lubrication Applications Edge to Edge Joints T-Joints Frame Joints Edge Miter Joints Corner Joints Maintenance & Inspection Service Tool Lubrication Bearings Brushes Commutator Accessories Standard Power Tool Warranty BRIEF DESCRIPTION The Freud JS104K biscuit joiner is intended solely for cutting slots for biscuits in solid wood and wood related materials such as MDF, particle board, plywood, etc. Any alternative use of this machine would be considered a failure to comply with the intended purpose of the machine. The manufacturer is not liable for any damage or injury arising from the improper use of this machine and the user assumes sole responsibility in this case. ADDITIONAL SAFETY RULES FOR BISCUIT JOINERS WARNING! Do not cut material containing amianthus/asbestos. Working with materials containing amianthus/asbestos and/or silica stones produces a dust which is harmful to health. Protect yourself from inhaling this dust, in compliance with regulations on accident prevention. Be sure that you use this machine in an uncluttered work environment. Avoid wearing loose fitting clothing while operating this tool. Always wear eye protection, hearing protection, dust mask, and anti-slip footwear when using this tool. Always unplug the power cord before making blade changes or adjustments to the tool. Be sure the blade is properly tightened before turning the machine on and make sure the flange fits in the arbor hole when installing the blade. -

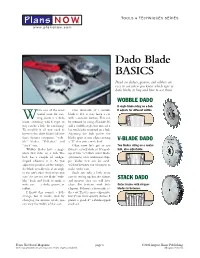

Dado Blade BASICS Dead-On Dadoes, Grooves, and Rabbets Are Easy to Cut When You Know Which Type of Dado Blades to Buy and How to Use Them

TOOLS & TECHNIQUES SERIES, Plans NOW® www.plansnow.com

Dado Blade BASICS: Dead-on dadoes, grooves, and rabbets are easy to cut when you know which type of dado blades to buy and how to use them.

While one of the most useful tools for cutting joints is a dado blade, selecting which type to buy can be a little bit confusing. To simplify it, all you need to know is that dado blades fall into three distinct categories: "wobble" blades, "V-blades," and "stack" sets.

Wobble blades have a single blade that rides on a hub. The hub has a couple of wedge-shaped adjusters in it. As you adjust the position of the wedges, the blade actually tilts at an angle to the saw's arbor. And, when you turn the saw on, the blade "wobbles" back and forth to make a wide cut: a dado, groove, or rabbet.

One downside of a wobble blade is that it may leave a cut with a concave bottom. This can be reduced by using a V-blade. It's still a wobble-style, but instead it has two blades mounted on a hub. Adjusting the hub pushes the blades apart at one edge, creating a "V" that cuts a wide kerf.

Okay, now let's get to my favorite: a stack dado set. It's made up of two 1/8"-thick outer blades (trimmers) with additional chipper blades that can be sandwiched between the trimmers to make wider cuts. Stack sets take a little more care in setting up, but the dadoes and grooves they cut will have clean, flat bottoms with little

WOBBLE DADO: A single blade riding on a hub. It adjusts for different widths.

V-BLADE DADO: Two blades riding on a center hub, also adjustable.

STACK DADO: Outer blades with chipper

Technical Details of the Elliott 152 and 153

Appendix 1: Technical Details of the Elliott 152 and 153

Introduction

The Elliott 152 computer was part of the Admiralty’s MRS5 (medium range system 5) naval gunnery project, described in Chap. 2. The Elliott 153 computer, also known as the D/F (direction-finding) computer, was built for GCHQ and the Admiralty as described in Chap. 3. The information in this appendix is intended to supplement the overall descriptions of the machines as given in Chaps. 2 and 3.

A1.1 The Elliott 152

Work on the MRS5 contract at Borehamwood began in October 1946 and was essentially finished in 1950. Novel target-tracking radar was at the heart of the project, the radar being synchronized to the computer’s clock. In his enthusiasm for perfecting the radar technology, John Coales seems to have spent little time on what we would now call an overall systems design. When Harry Carpenter joined the staff of the Computing Division at Borehamwood on 1 January 1949, he recalls that nobody had yet defined the way in which the control program, running on the 152 computer, would interface with guns and radar. Furthermore, nobody yet appeared to be working on the computational algorithms necessary for three-dimensional trajectory prediction. As for the guns that the MRS5 system was intended to control, not even the basic ballistics parameters seemed to be known with any accuracy at Borehamwood [1, 2].

A1.1.1 Communication and Data-Rate

The physical separation, between radar in the Borehamwood car park and digital computer in the laboratory, necessitated an interconnecting cable of about 150 m in length.

Catalogue of Auction Items - Greenville Woodworkers Guild Come and Join Us - Auction and Sale on Saturday October 10, 2020 - All Are Welcome

Catalogue of Auction Items - Greenville Woodworkers Guild

Come and join us - Auction and Sale on Saturday October 10, 2020 - All are welcome. Doors open at 8:00 a.m. Auction bidding closes at 10:30 a.m. From center of Mauldin go West on W-Butler for about one mile. The Guild is on the right between the Greer library and Shanks' golf range.

Fixed priced items are sold at the price listed. Minimum priced items are bid items; the winner takes it home.

Minimum priced

| Item | Description | Minimum price |
|---|---|---|
| 2871 | Wheeled Plastic Waste Container | $100.00 |
| 3038 | Gast Vacuum Pump | $250.00 |
| 3111 | Incra Precision Woodworking system - | $200.00 |
| 3151 | Rikon 10" bandsaw 10-305 | $125.00 |
| 3155 | 60 inch 4 drawer workbench | $150.00 |
| 3156 | Werner folding ladder | $100.00 |
| 3157 | Central Machinery 6" belt, 9"disk sande | $125.00 |
| 3158 | Ryobi 13" planer AP1301 | $100.00 |
| 3159 | Craftsman 10" tablesaw 137.228210 | $100.00 |
| 3161 | Craftsman 12 Bandsaw | $100.00 |
| 3166 | Grizzly Drum Sander | $200.00 |
| 3208 | Craftsman 5 drawer roller tool chest | $140.00 |
| 3209 | Rikon 70-100 lathe | $200.00 |
| 3210 | Dyson Cinetic Vacuum GT5-US-GKA-15 | $250.00 |
| 3225 | Etalon vernier caliper and micrometer | $100.00 |
| 3285 | Coring System | $200.00 |
| 3294 | Craftsman 10" Table Saw | $150.00 |
| 3295 | Shop Smith Tool | $150.00 |
| 3299 | Cyclone Dust Collection System Tempest Cyclone And Dual Grizzly Polar Bear Series Dust Collectors | $600.00 |
| 3300 | Dixie Chopper Magnum 2750 Mower Zero Turn, 50" cut, 27HP | $3,500.00 |
| 3322 | Craftsman 6 1/8" Jointer 1 1/2hp | $150.00 |
| 3340 | Topcon Survey Transit and tripod | $100.00 |
| 3344 | Craftsman Arc welder with rolling stand | $125.00 |
| 3345 | Central Machinery 2hp Dust Collector | $125.00 |
| 3346 | Craftsman 6" Jointer | $150.00 |
| 3347 | Central Machinery 12 x 36" Lathe | $200.00 |
| 3359 | Bostitch 3 Piece Nailer/stapler Set | $100.00 |
| 3371 | Black and Decker Firestorm 10" Tablesa | $100.00 |
| 3372 | Ridgid 13" Planer R4331 | $250.00 |
| 3386 | Porter cable fixed/Plunge Router set M | $200.00 |
| 3394 | Router Crafter in Box | $125.00 |
| 3398 | Stanley #71 Antique Router plane Made in England | $125.00 |
| 3403 | James Swan antique (1890) Combo Set | $150.00 |
| 3430 | Delta 10 in. | |

Build a Plane That Cuts Smooth and Crisp Raised Panels With, Against Or Across the Grain – the Magic Is in the Spring and Skew

Fixed-width Panel Raiser

BY WILLARD ANDERSON

Build a plane that cuts smooth and crisp raised panels with, against or across the grain – the magic is in the spring and skew.

Panel-raising planes are used to shape the raised panels in doors, paneling and lids. The profile has a fillet that defines the field of the panel, a sloped bevel to act as a frame for the field and a flat tongue that fits into the groove of the door or lid frame.

I’ve studied panel-raising planes made circa the late 18th and early 19th centuries, including one made by Aaron Smith, who was active in Rehoboth, Mass., from 1790 to 1823 (Smith may have apprenticed with Joseph Fuller who was one of the most prolific of the early planemakers), and another similar example that has no maker’s mark.

Both are single-iron planes with almost identical dimensions, profiles and handles. They differ only in the spring angles (the tilt of the plane off vertical) and skew of the iron (which creates a slicing cut across the grain to reduce tear-out). The bed angle of the Smith plane is 46°, and the iron is skewed at 32°. Combined, these improve the quality of cut without changing the tool’s cutting angle – which is what happens if you skew

Gauges & guides. It’s best to make each of these gauges before you start your plane build. In the long run, they save you time and keep you on track.

Shaping tools. The tools required to build this plane are few, but a couple of them – the firmer chisel and floats – are modified to fit this design.

THE PROVISION of WIDE-AREA NETWORKING FACILITIES Dr

THE PROVISION OF WIDE-AREA NETWORKING FACILITIES

Dr Barrie J Charles, Joint Network Team of the Computer Board and Research Councils, c/o Rutherford Appleton Laboratory, CHILTON, Didcot, Oxon OX11 0QX, UK

ABSTRACT

The academic community in the United Kingdom is currently engaged in an extensive programme to provide comprehensive networking facilities within and among the 47 universities and numerous research institutions in the British Isles. The programme is described and the possible provision of similar facilities on an international scale discussed.

1. INTRODUCTION

Since the 1960s, funds for large scale computers in universities and academic research institutes in the United Kingdom have been provided centrally through organisations reporting to the Department of Education and Science. This central funding made possible the establishment of national or regional facilities designed to handle the needs of users which could not be met by an institution's local computing equipment. The distance between these users and the central machines encouraged the early establishment of data communication facilities.

Today the primary requirements for wide-area networks are seen as: access to large national or regional centres; facility or resource sharing between sites; database access; electronic mail; software distribution; a tool for multi-site scientific collaborations; a means for the itinerant worker to access his home base. Many of these activities are also now becoming practical internationally and local networks are seen as satisfying similar requirements on a smaller geographical scale.

HISTORY

During the early to mid 1970s many separate networks were set up in the UK academic community. These were based either on the packet-switching techniques made popular by the advent of the ARPA network in the United States or on proprietary RJE protocols such as IBM HASP, CDC 200UT or ICL 7020.

German Warships Make Daring Escape; Japs Two Miles from Singapore Heart W

y THUR5DAT, rEBHTAIlT 12,19ft F O U K T E R t iSRtmr^rBtnr lEttmittg B^ntlb ----------------- 1 Average Daily Circulation The Weather For the Month of January, 1945 "Ain’t It the Lim it!’’ waa preaent- Forecast of U. S.' Waather Bdrcaa The Past Chiefs Club of Me Mrs. E ail F. l3,.aUo4v of 387 Center street who has neen 111 for Valentine Party ed by four of the Dorcaa mem- morial Temple, Pythian Sisters, Guest Speaker bert. Miss A lva Anderson, Mra. YOU CAN’T MISS About Town will meet tonight at the home of the past six weeks, was removed 7,088 Continued moderately cold to yesterday afternoon to the Hart Viola M iffitt, Mias Anne M. E. WITH CANDY! Mrs. Walter Henry. 46 Starl.- Of Dorcas Group Johnson and Misa Lillian Larson. Member of the Audit night. ^ ford hospital. ____ _ following » *«»rt weather street. Mrs. Henry will be It waa greatly enjoyed by all Gat some today for Val Bureau o f CtrcnlatloBB meeting" of tho Cothollc assisted by her mother, Mrs. lv.a present. entine gifts and parties! Manchester— 'A City of Village Charm Ingraham. The 'Volunteer Service Bureau The Dorcas Society’s Valentine Mrs. J. Martin Lehr of Chest Tj<H«a of Columbiu at the K. of in the Municipal Building will Get PETER ’S D E L I C home on Tue^loy. Feb. ITth^ party held last night at Emanuel nut street sang a vocal aolo, and close at noon today, having been , group ringing interspersed the CIOUS CANDIES— (SIXTEEN PAGES) M n . -

Howard Brady

Howard Brady WOOD DESIGNS

Copyright © 2019 by Howard L. Brady

Note: Every item shown in this album was an original, one-of-a-kind design. The design process from concept through crafting to the finished item is a source of incredible satisfaction to me, and a process that extends well beyond woodworking into music composition, and (with my brother) creation of innovative educational materials. A few years before I retired, Dave Campbell, a senior engineer and friend at Skydata where I worked, remarked that as a writer of technical communications documents for satellite communications equipment, I was an “information designer.” Those were kind words, and, I hope, accurate. This octogenarian loves designing.

Toys for my great-grandson, Mason: The front-end loader and dump truck were my first major toy designs, built November 2017. Most solid wood is poplar, plywood parts are Baltic birch, dark wood is Indian rosewood, from a local tree destroyed in one of the 2004 hurricanes. Metal parts were aluminum (truck bed edge rails, front end loader bucket and crosspiece), brass (truck steering components) and stainless steel (all fasteners, operating levers). Lever and knob above truck cab controls steering.

Built March 2018: Toy box for Mason’s third birthday, May 6th.

November 2018: Toy flat-bed wrecker and grumpy wrecked race car for Mason’s Christmas. One of Mason’s parents’ close friends is Tim Daugherty, who races his #88 car (so far NOT wrecked) in figure-8 races at the Antelope Valley Fairgrounds in Southern California. I hope he didn’t mind my version.

Inlay Cutter Head 05P38.45

Inlay Cutter Head 05P38.45

The Veritas® Inlay Cutter Head is used with the Veritas® Router Plane to score both sides of a shallow groove that will accept purfling, banding or stringing. The inlay cutter head includes an assortment of steel and plastic shims so that the two spring steel blades can score grooves from 1/16" or 1mm to just under 15/32" or 12mm wide.

Figure 1: Components of the inlay cutter head. (Router plane not included.) Labeled parts: post, blade carrier, blade, fillister-head screws, plastic shims, steel shims, stylus, fence.

The router plane/inlay cutter assembly can be used free hand in the middle of a workpiece, but it is more common to guide the cutter with a fence. When used with the optional fence, the router plane/inlay cutter assembly can score both sides of a shallow groove parallel to a straight edge. For following curved edges with a constant offset, the brass stylus that is included with the inlay cutter head can be threaded into the fence rod.

Shim Stack Set-Up

Caution: Be aware that the blades are sharp; careless handling can result in serious injury.

Table 1: Blades and Shims Included

| Item | Thickness (in) | Thickness (mm) | Quantity |
|---|---|---|---|
| Blade | 0.020 | 0.5 | 2 |
| Orange Plastic | 0.002 | 0.05 | 5 |
| Purple Plastic | 0.005 | 0.13 | 5 |
| Blue Plastic | 0.010 | 0.25 | 5 |
| Black Plastic | 0.020 | 0.5 | 5 |
| 1mm Steel | 0.040 | 1 | 1 |
| 2mm Steel | 0.080 | 2 | 1 |
| 3mm Steel | 0.120 | 3 | 1 |

To score a shallow groove of a specific width, you will need to determine which shims are needed between the blades.
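Choosing shims from Table 1 amounts to a small search over the available pieces. The sketch below is only an illustration, not the manufacturer's procedure: the function name `shim_stack` and the "fewest shims" preference are assumptions. It takes a desired shim stack thickness in thousandths of an inch, because how the stack thickness relates to the finished groove width is not stated in this excerpt.

```python
from itertools import product

# Shim inventory from Table 1: (name, thickness in thousandths of an inch, quantity).
SHIMS = [
    ("3mm Steel", 120, 1),
    ("2mm Steel", 80, 1),
    ("1mm Steel", 40, 1),
    ("Black Plastic", 20, 5),
    ("Blue Plastic", 10, 5),
    ("Purple Plastic", 5, 5),
    ("Orange Plastic", 2, 5),
]


def shim_stack(target_thou):
    """Return {name: count} for the fewest shims summing exactly to target_thou.

    Hypothetical helper: tries every combination the inventory allows
    (about ten thousand) and returns None when no exact stack exists.
    """
    best = None
    ranges = [range(qty + 1) for _, _, qty in SHIMS]
    for counts in product(*ranges):
        total = sum(c * thou for c, (_, thou, _) in zip(counts, SHIMS))
        if total == target_thou and (best is None or sum(counts) < sum(best)):
            best = counts
    if best is None:
        return None
    return {name: c for c, (name, _, _) in zip(best, SHIMS) if c}


if __name__ == "__main__":
    # A 0.085" stack: one 2mm steel shim plus one purple plastic shim.
    print(shim_stack(85))
```

Working in integer thousandths avoids floating-point comparison problems when summing thicknesses such as 0.002 and 0.005.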

Dado & Accessories

Dado Sets & Saw Blade Accessories

Dado Sets

Whether you’re a skilled professional or a weekend hobbyist, Freud has a dado for you. The SD608, Freud’s Dial-A-Width Dado, has a patented dial system for easy and precise adjustments while offering extremely accurate cuts. The SD300 Series adds a level of safety not found in other manufacturers’ dadoes, while the SD200 Series provides the quality of cuts you expect from Freud, at an attractive price.

Dial-A-Width Stacked Dado Sets, SD600 Series

NOT A WOBBLE DADO! 1 Loosen, 2 Turn The Dial, 3 Tighten

Dado Cutter Heads. Features TiCo™ High Density Carbide Crosscutting Blend For Maximum Performance. Chip Free Dadoes In Veneered Plywoods and Laminates.

The Dial-A-Width Dado set performs like a stacked dado, but we have replaced the shims with a patented dial system and with our exclusive Dial hub, ensures accurate adjustments. Each “click” of the dial adjusts the blade by .004". The Dial-A-Width dado set is easy to use, and very precise. For the serious woodworker, there’s nothing better.

• Adjusts in .004" increments.
• Maximum 29/32" cut width.
• Adjusts easily to right or left operating machines.
• Set includes 2 outside blades, 5 chippers, wrench and carrying case.
• Does not need shims.

[Chart: Recommended Use & Cut Quality by application (hardwood, softwood, chip board, plywood, laminate, non-ferrous); ratings not recoverable. Not recommended for ferrous metals or masonry.]

4 Remembering the War in the Solomons

4 Remembering the War in the Solomons

Jonathan Fifi'i

The Coming of the War

The white people told us that a war would eventually come. But even though we listened, we didn't believe them. We couldn't think of any reason that fighting would come to the Solomons. Other people advised us that if fighting did come, we shouldn't be afraid. They said that even if a bomb fell, it would be five minutes before it exploded. All we had to do was pick it up and throw it to where it would explode away from us!

In 1942 I was still at school at Kwailabesi, in northeast Malaita. One day we saw the Seventh Day Adventist ship Melanesia arrive. But there were no Solomon Islanders aboard, only missionaries. They warned all of the students that fighting was coming soon. They said "We will be leaving tonight. We can't say if we will ever see you again. But though we are leaving, the Americans may come to evacuate you. You must not worry, because there are Seventh Day Adventists among the Americans too."

Some of the other students and I prepared to see our teachers off. At dusk we boarded a small postal boat, and we and the Melanesia traveled along in the dark, without any lights. By daybreak we had reached Siota, on Nggela, and at Taroaniara we camouflaged the two boats with branches. We went in two dinghies to Tulagi, the capital at that time, to see what was happening there. Very few people remained in town.

The Kermit File Transfer Protocol

THE KERMIT FILE TRANSFER PROTOCOL

Frank da Cruz, February 1985

This is the original manuscript of the Digital Press book Kermit, A File Transfer Protocol, ISBN 0-932976-88-6, Copyright 1987, written in 1985 and in print from 1986 until 2001. This PDF file was produced by running the original Scribe markup-language source files through the Scribe publishing package, which still existed on an old Sun Solaris computer that was about to be shut off at the end of February 2016, and then converting the resulting PostScript version to PDF. Neither PostScript nor PDF existed in 1985, so this result is a near miracle, especially since the last time this book was "scribed" was on a DECSYSTEM-20 for a Xerox 9700 laser printer (one of the first). Some of the tables are messed up, some of the source code comes out in the wrong font; there's not much I can do about that. Also (unavoidably) the page numbering is different from the printed book and of course the artwork is missing.

Bear in mind Kermit protocol and software have seen over 30 years of progress and development since this book was written. All information herein regarding the Kermit Project, how to get Kermit software, or its license or status, etc, is no longer valid. The Kermit Project at Columbia University survived until 2011 but now it's gone and all Kermit software was converted to Open Source at that time. For current information, please visit the New Open Source Kermit Project website at http://www.kermitproject.org (as long as it lasts).