Introduction to Queueing Theory Review on Poisson Process

Recommended publications

The Queueing Network Analyzer

THE BELL SYSTEM TECHNICAL JOURNAL, Vol. 62, No. 9, November 1983. Printed in U.S.A.

The Queueing Network Analyzer. By W. Whitt (Bell Laboratories). (Manuscript received March 11, 1983.)

This paper describes the Queueing Network Analyzer (QNA), a software package developed at Bell Laboratories to calculate approximate congestion measures for a network of queues. The first version of QNA analyzes open networks of multiserver nodes with the first-come, first-served discipline and no capacity constraints. An important feature is that the external arrival processes need not be Poisson and the service-time distributions need not be exponential. Treating other kinds of variability is important. For example, with packet-switched communication networks we need to describe the congestion resulting from bursty traffic and the nearly constant service times of packets. The general approach in QNA is to approximately characterize the arrival processes by two or three parameters and then analyze the individual nodes separately. The first version of QNA uses two parameters to characterize the arrival processes and service times: one describes the rate and the other describes the variability. The nodes are then analyzed as standard GI/G/m queues, partially characterized by the first two moments of the interarrival-time and service-time distributions. Congestion measures for the network as a whole are obtained by assuming, as an approximation, that the nodes are stochastically independent given the approximate flow parameters.

I. INTRODUCTION AND SUMMARY

Networks of queues have proven to be useful models to analyze the performance of complex systems such as computers, switching machines, communications networks, and production job shops [1–7]. To facilitate the analysis of these models, several software packages have …
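The abstract says QNA analyzes each node as a GI/G/m queue described only by the rate and the variability (squared coefficient of variation, SCV) of its arrivals and service times. The sketch below shows the simplest formula of that two-moment kind: the classical Kingman-type approximation for a single-server GI/G/1 node. QNA's own node formulas are more refined, and the function name `gg1_mean_wait` is hypothetical.

```python
def gg1_mean_wait(arrival_rate: float, mean_service: float, ca2: float, cs2: float) -> float:
    """Kingman-type two-moment approximation of the mean wait in a GI/G/1 queue.

    ca2: squared coefficient of variation (SCV) of interarrival times.
    cs2: SCV of service times.
    This is a simplified sketch, not QNA's exact node formula.
    """
    rho = arrival_rate * mean_service  # server utilization
    if not 0.0 <= rho < 1.0:
        raise ValueError("unstable node: need 0 <= rho < 1")
    # Wait grows like rho / (1 - rho), scaled by the average variability of the two streams.
    return (rho / (1.0 - rho)) * ((ca2 + cs2) / 2.0) * mean_service


if __name__ == "__main__":
    # Poisson arrivals and exponential service (ca2 = cs2 = 1) give the exact M/M/1 wait.
    print(gg1_mean_wait(0.5, 1.0, 1.0, 1.0))  # 1.0
    # Bursty arrivals (ca2 = 4) with constant packet service times (cs2 = 0).
    print(gg1_mean_wait(0.5, 1.0, 4.0, 0.0))  # 2.0
```

The second call illustrates the abstract's packet-switching example. Arrival burstiness can dominate the effect of nearly constant service times, which is why a rate-only description of traffic is not enough.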

M/M/C Queue with Two Priority Classes

Submitted to Operations Research

M/M/c Queue with Two Priority Classes

Jianfu Wang, Nanyang Business School, Nanyang Technological University; Opher Baron, Rotman School of Management, University of Toronto; Alan Scheller-Wolf, Tepper School of Business, Carnegie Mellon University

This paper provides the first exact analysis of a preemptive M/M/c queue with two priority classes having different service rates. To perform our analysis, we introduce a new technique that reduces the two-dimensionally (2D) infinite Markov chain (MC) representing the two-class state space to a one-dimensionally (1D) infinite MC. From this 1D MC, the generating function (GF) of the number of low-priority jobs can be derived in closed form. (The high-priority jobs form a simple M/M/c system and are thus easy to solve.) We demonstrate our methodology for the cases c = 1, 2; when c > 2, the closed-form expression of the GF becomes cumbersome. We thus develop an exact algorithm to calculate the moments of the number of low-priority jobs for any c ≥ 2. Numerical examples demonstrate the accuracy of our algorithm and generate insights on: (i) the relative effect of improving the service rate of either priority class on the mean sojourn time of low-priority jobs; (ii) the performance of a system having many slow servers compared with one having fewer fast servers; and (iii) the validity of the square-root staffing rule in maintaining a fixed service level for the low-priority class.
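The abstract notes that, under preemptive priority, the high-priority jobs on their own form an M/M/c system. The sketch below evaluates that system with the standard Erlang C formula, computed through the numerically stable Erlang B recursion. It also includes a simple square-root staffing helper, c = ⌈a + β√a⌉, where β is a hypothetical service-level parameter. All function names are illustrative, and none of this is the paper's low-priority algorithm.

```python
import math


def erlang_b(offered_load: float, servers: int) -> float:
    """Erlang B blocking probability via the recursion B(k) = aB(k-1) / (k + aB(k-1))."""
    b = 1.0  # B(0) = 1
    for k in range(1, servers + 1):
        b = offered_load * b / (k + offered_load * b)
    return b


def erlang_c(offered_load: float, servers: int) -> float:
    """Probability that an arriving job must wait in an M/M/c queue (Erlang C)."""
    if offered_load >= servers:
        raise ValueError("unstable: need offered load a = lambda/mu < c")
    b = erlang_b(offered_load, servers)
    return servers * b / (servers - offered_load * (1.0 - b))


def mmc_mean_wait(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Mean waiting time in queue, E[Wq] = C(c, a) / (c*mu - lambda)."""
    a = arrival_rate / service_rate
    return erlang_c(a, servers) / (servers * service_rate - arrival_rate)


def square_root_staffing(offered_load: float, beta: float) -> int:
    """Square-root staffing rule c = ceil(a + beta * sqrt(a)); beta is a hypothetical QoS knob."""
    return math.ceil(offered_load + beta * math.sqrt(offered_load))


if __name__ == "__main__":
    print(erlang_c(1.0, 2))                # M/M/2 with a = 1: P(wait) = 1/3
    print(mmc_mean_wait(0.5, 1.0, 1))      # c = 1 reduces to M/M/1
    print(square_root_staffing(100.0, 1.0))  # 110
```

With c = 1 the formulas reduce to the M/M/1 results P(wait) = ρ and E[Wq] = ρ/(μ − λ), which is a quick sanity check on the implementation.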

Product-Form in Queueing Networks

Product-form in queueing networks

Academic dissertation for the degree of doctor at the Vrije Universiteit Amsterdam, by authority of the rector magnificus dr. C. Datema, professor in the Faculty of Letters, to be defended in public before the doctoral committee of the Faculty of Economics and Econometrics on Thursday 21 May 1992 at 15.30 in the main building of the university, De Boelelaan 1105, by Richardus Johannes Boucherie, born in Oost- en West-Souburg.

Thesis Publishers Amsterdam 1992

Promotors: prof.dr. N.M. van Dijk, prof.dr. H.C. Tijms
Referees: prof.dr. A. Hordijk, prof.dr. P. Whittle

Preface

This monograph studies product-form distributions for queueing networks. The celebrated product-form distribution is a closed-form expression, that is, an analytical formula, for the queue-length distribution at the stations of a queueing network. Various solution techniques for queueing networks can be derived from this product-form distribution. For example, aggregation and decomposition results for product-form queueing networks yield Norton's theorem for queueing networks, and the arrival theorem implies the validity of mean value analysis for product-form queueing networks. This monograph aims to characterize the class of queueing networks that possess a product-form queue-length distribution. To this end, the transient behaviour of the queue-length distribution is discussed in Chapters 3 and 4. Then, in Chapters 5, 6 and 7, the equilibrium behaviour of the queue-length distribution is studied under the assumption that in each transition only a single customer is allowed to route among the stations. Finally, in Chapters 8, 9 and 10, this single-customer assumption is relaxed to allow customers to route in batches.
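The preface calls the product-form distribution a closed-form expression for the joint queue-length distribution. Its best-known instance is the open Jackson network, assumed below to have Poisson external arrivals, single-server exponential stations and Markovian routing. Solve the traffic equations λ = γ + λP for the total arrival rates. The joint distribution is then π(n) = ∏ᵢ (1 − ρᵢ) ρᵢ^{nᵢ} with ρᵢ = λᵢ/μᵢ. This is a minimal sketch of that textbook case, not the monograph's general characterization; the function names are hypothetical.

```python
from math import prod  # Python 3.8+


def solve_traffic_equations(external, routing, iterations=10_000, tol=1e-12):
    """Solve lambda = external + lambda @ routing by fixed-point iteration.

    routing[i][j] is the probability of moving from station i to station j.
    The iteration converges for an open network in which every job eventually leaves.
    """
    n = len(external)
    lam = list(external)
    for _ in range(iterations):
        new = [external[j] + sum(lam[i] * routing[i][j] for i in range(n)) for j in range(n)]
        if max(abs(x - y) for x, y in zip(new, lam)) < tol:
            return new
        lam = new
    raise RuntimeError("traffic equations did not converge")


def product_form_probability(state, external, routing, service_rates):
    """Stationary probability of joint queue lengths `state` in an open Jackson network."""
    lam = solve_traffic_equations(external, routing)
    rhos = [l / mu for l, mu in zip(lam, service_rates)]
    if any(r >= 1.0 for r in rhos):
        raise ValueError("some station is unstable (rho >= 1)")
    # Product form: each station behaves like an independent M/M/1 queue in equilibrium.
    return prod((1.0 - r) * r ** k for r, k in zip(rhos, state))


if __name__ == "__main__":
    # Two stations in tandem: all jobs enter at station 0 and then visit station 1.
    gamma = [1.0, 0.0]
    P = [[0.0, 1.0], [0.0, 0.0]]
    mu = [2.0, 4.0]
    print(product_form_probability((0, 0), gamma, P, mu))  # 0.375
```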

Introduction to Queueing Theory Review on Poisson Process

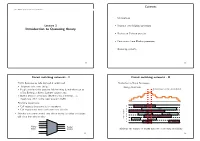

ELL 785–Computer Communication Networks
Lecture 3: Introduction to Queueing Theory

Contents
• Motivations
• Discrete-time Markov processes
• Review on Poisson process
• Continuous-time Markov processes
• Queueing systems

Circuit switching networks - I
• Traffic fluctuates as calls are initiated and terminated: telephone calls come and go.
• People's activity follows patterns: mid-morning and mid-afternoon at the office, evening at home, summer vacation, etc.
• Outlier days are extra busy (Mother's Day, Christmas, ...); disasters and other events cause surges in traffic.
• Providing resources so that call requests are always met is too expensive; meeting call requests most of the time is cost-effective.
• Switches concentrate traffic onto shared trunks, so blocking of requests will occur from time to time.
• Many lines, fewer trunks: minimize the number of trunks subject to a blocking probability.

Circuit switching networks - II: Fluctuation in trunk occupancy
[Figure: number of busy trunks over time, showing active periods on trunks 1–7; when all trunks are busy, new call requests are blocked.]

Packet switching networks - I: Statistical multiplexing
• Dedicated lines involve no waiting for other users, but lines are used inefficiently when user traffic is bursty.
• Shared lines concentrate packets into a shared line; packets are buffered (delayed) when the line is not immediately available.
[Figure: (a) dedicated lines carrying packets A1 A2, B1 B2, C1 C2; (b) a shared line carrying A1 C1 B1 A2 B2 C2.]

Packet switching networks - II: Fluctuations in packets in the system
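The circuit-switching slides pose a dimensioning problem: minimize the number of trunks subject to a blocking probability. Under the classical assumptions of Poisson call arrivals, blocked calls cleared and an offered load a measured in Erlangs, the blocking probability with m trunks is the Erlang B formula. The sketch computes it with the stable recursion B(k) = aB(k−1)/(k + aB(k−1)), then searches for the smallest m that meets a target. The function names are illustrative.

```python
def erlang_b(offered_load: float, trunks: int) -> float:
    """Erlang B blocking probability for `trunks` trunks offered `offered_load` Erlangs."""
    b = 1.0  # with zero trunks every call is blocked
    for k in range(1, trunks + 1):
        b = offered_load * b / (k + offered_load * b)
    return b


def min_trunks(offered_load: float, target_blocking: float) -> int:
    """Smallest number of trunks whose blocking probability does not exceed the target."""
    if not 0.0 < target_blocking <= 1.0:
        raise ValueError("target blocking must lie in (0, 1]")
    m = 0
    while erlang_b(offered_load, m) > target_blocking:
        m += 1
    return m


if __name__ == "__main__":
    print(erlang_b(1.0, 2))       # 0.2
    print(min_trunks(10.0, 0.01))  # 18 trunks carry 10 Erlangs at <= 1% blocking
```

Providing one trunk per line would make blocking zero, but the search shows how much cheaper it is to accept occasional blocking. This is the trade-off the slides describe.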

Queueing Theory

Agner Krarup Erlang, a Danish engineer who worked for the Copenhagen Telephone Exchange, published the first paper on what would now be called queueing theory in 1909.[8][9][10] He modeled the number of telephone calls arriving at an exchange by a Poisson process and solved the M/D/1 queue in 1917 and the M/D/k queueing model in 1920.[11] In Kendall's notation:
• M stands for Markov or memoryless and means arrivals occur according to a Poisson process
• D stands for deterministic and means jobs arriving at the queue require a fixed amount of service
• k describes the number of servers at the queueing node (k = 1, 2, ...). If there are more jobs at the node than there are servers, then jobs will queue and wait for service

The M/M/1 queue is a simple model where a single server serves jobs that arrive according to a Poisson process and have exponentially distributed service requirements. In an M/G/1 queue the G stands for general and indicates an arbitrary probability distribution. The M/G/1 model was solved by Felix Pollaczek in 1930,[12] a solution later recast in probabilistic terms by Aleksandr Khinchin and now known as the Pollaczek–Khinchine formula.[11]

After World War II queueing theory became an area of research interest to mathematicians.[11]

[Figure caption: Queue networks are systems in which single queues are connected by a routing network. In this image servers are represented by circles, queues by a series of rectangles and the routing network by arrows. In the study of queue networks one typically tries to obtain the equilibrium distribution of the network, although in many applications the study of the transient state is fundamental.]

Queueing theory is the mathematical study of waiting lines, or queues.[1] In queueing theory a model is constructed so that queue lengths and waiting time can be predicted.[1] Queueing theory is generally considered a …
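The Pollaczek–Khinchine formula mentioned above gives the mean waiting time of an M/G/1 queue in terms of only the arrival rate λ and the first two moments of the service time S: E[Wq] = λE[S²] / (2(1 − ρ)), with ρ = λE[S]. The sketch below evaluates it, along with the mean queue length from Little's law, Lq = λE[Wq]. The function name is hypothetical.

```python
def mg1_mean_wait(arrival_rate: float, mean_service: float, second_moment_service: float) -> float:
    """Pollaczek-Khinchine mean waiting time in queue for an M/G/1 FCFS queue."""
    rho = arrival_rate * mean_service
    if not 0.0 <= rho < 1.0:
        raise ValueError("unstable queue: need rho < 1")
    return arrival_rate * second_moment_service / (2.0 * (1.0 - rho))


if __name__ == "__main__":
    lam, mean_s = 0.5, 1.0
    # Exponential service (M/M/1): E[S^2] = 2 * E[S]^2.
    w_mm1 = mg1_mean_wait(lam, mean_s, 2.0 * mean_s ** 2)
    # Deterministic service (M/D/1, Erlang's case): E[S^2] = E[S]^2.
    w_md1 = mg1_mean_wait(lam, mean_s, mean_s ** 2)
    print(w_mm1, w_md1)          # 1.0 0.5
    print(lam * w_mm1)           # Little's law: mean number waiting, Lq
```

Halving the service-time variability (from exponential to deterministic) halves the mean wait at the same load. This is the sense in which the M/G/1 solution needs only a little information about G.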

Delay Models in Data Networks

3 Delay Models in Data Networks

3.1 INTRODUCTION

One of the most important performance measures of a data network is the average delay required to deliver a packet from origin to destination. Furthermore, delay considerations strongly influence the choice and performance of network algorithms, such as routing and flow control. For these reasons, it is important to understand the nature and mechanism of delay, and the manner in which it depends on the characteristics of the network.

Queueing theory is the primary methodological framework for analyzing network delay. Its use often requires simplifying assumptions since, unfortunately, more realistic assumptions make meaningful analysis extremely difficult. For this reason, it is sometimes impossible to obtain accurate quantitative delay predictions on the basis of queueing models. Nevertheless, these models often provide a basis for adequate delay approximations, as well as valuable qualitative results and worthwhile insights.

In what follows, we will mostly focus on packet delay within the communication subnet (i.e., the network layer). This delay is the sum of delays on each subnet link traversed by the packet. Each link delay in turn consists of four components:
1. The processing delay between the time the packet is correctly received at the head node of the link and the time the packet is assigned to an outgoing link queue for transmission. (In some systems, we must add to this delay some additional processing time at the DLC and physical layers.)
2. The queueing delay between the time the packet is assigned to a queue for transmission and the time it starts being transmitted.
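The section states that a packet's subnet delay is the sum of the delays on each link it traverses. Below is a minimal sketch of that sum under two loudly stated assumptions. First, each link is treated as an independent M/M/1 queue, whose mean time in system 1/(μ − λ) covers waiting plus transmission on that link. Second, processing delay is a fixed per-link constant. The helper `path_delay` and its parameters are hypothetical, and other per-link components are not modeled separately.

```python
def path_delay(links, processing_delay=0.0):
    """Mean end-to-end packet delay as a sum of per-link delays (hypothetical helper).

    links: list of (arrival_rate, service_rate) pairs in packets/second, one per link
           on the path; arrival_rate is the total packet rate offered to that link.
    Each link is modeled as an independent M/M/1 queue (an assumption), so its mean
    time in system 1 / (mu - lambda) includes both waiting and transmission.
    processing_delay: fixed processing time added on every hop (an assumption).
    """
    total = 0.0
    for lam, mu in links:
        if lam >= mu:
            raise ValueError("link is overloaded: need arrival_rate < service_rate")
        total += processing_delay + 1.0 / (mu - lam)
    return total


if __name__ == "__main__":
    # Two-hop path: a half-loaded slow link followed by a half-loaded fast link.
    print(path_delay([(0.5, 1.0), (1.0, 2.0)]))  # 3.0
```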

Matrix Geometric Approach for Random Walks

Citation for published version (APA): Kapodistria, S., & Palmowski, Z. B. (2017). Matrix geometric approach for random walks: stability condition and equilibrium distribution. Stochastic Models, 33(4), 572–597. https://doi.org/10.1080/15326349.2017.1359096

Document license: CC BY-NC-ND. DOI: 10.1080/15326349.2017.1359096. Published: 02/10/2017. Document version: Publisher's PDF, also known as Version of Record (includes final page, issue and volume numbers).
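In its classical form for a continuous-time quasi-birth-death (QBD) process, the matrix-geometric method writes the stationary distribution above the boundary levels as π_{n+1} = π_n R. Here R is the minimal nonnegative solution of A0 + R·A1 + R²·A2 = 0, with A0 covering level-up transitions, A1 within-level transitions and A2 level-down transitions. The sketch below computes R with the textbook fixed-point iteration R ← −(A0 + R²A2)A1⁻¹, starting from R = 0, in plain Python. It illustrates the general method only and is not Kapodistria and Palmowski's construction for random walks. The helper names are hypothetical.

```python
def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mat_add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mat_inv(m):
    """Inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-15:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def rate_matrix(a0, a1, a2, tol=1e-12, max_iter=100_000):
    """Minimal nonnegative R solving A0 + R A1 + R^2 A2 = 0 for a continuous-time QBD."""
    n = len(a0)
    neg_a1_inv = [[-x for x in row] for row in mat_inv(a1)]  # -A1^{-1} is nonnegative
    r = [[0.0] * n for _ in range(n)]
    for _ in range(max_iter):
        new = mat_mul(mat_add(a0, mat_mul(mat_mul(r, r), a2)), neg_a1_inv)
        if max(abs(x - y) for rn, ro in zip(new, r) for x, y in zip(rn, ro)) < tol:
            return new
        r = new
    raise RuntimeError("R iteration did not converge (is the QBD stable?)")


if __name__ == "__main__":
    # M/M/1 as a one-phase QBD with lambda = 1, mu = 2: R should equal rho = 0.5.
    print(rate_matrix([[1.0]], [[-3.0]], [[2.0]]))
```

The one-phase check works because the M/M/1 queue has a geometric stationary distribution with ratio ρ. In that case the "matrix" R is just the scalar ρ.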

Improving Queuing System Throughput Using Distributed Mean Value Analysis to Control Network Congestion

Communications and Network, 2015, 7, 21–29. Published Online February 2015 in SciRes. http://www.scirp.org/journal/cn http://dx.doi.org/10.4236/cn.2015.71003

Improving Queuing System Throughput Using Distributed Mean Value Analysis to Control Network Congestion

Faisal Shahzad¹, Muhammad Faheem Mushtaq¹, Saleem Ullah¹*, M. Abubakar Siddique², Shahzada Khurram¹, Najia Saher¹
¹Department of Computer Science & IT, The Islamia University of Bahawalpur, Bahawalpur, Pakistan
²College of Computer Science, Chongqing University, Chongqing, China

Received 12 June 2014; accepted 30 January 2015; published 2 February 2015

Copyright © 2015 by authors and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/

Abstract: In this paper, we use the distributed mean value analysis (DMVA) technique, with the help of the random observer property (ROP) and Palm probabilities, to improve network queuing system throughput. This matters in networks where the complete communication path from source to destination must be found, especially when the two communicating nodes are not in the same region. An algorithm is developed for single-server and multi-server centers that gives more interesting and successful results. The network is modeled as a closed queuing network, and mean value analysis is used to determine the network throughput (β) for different parameter values. For certain chosen values of the model parameters, we found that the maximum network throughput for β ≥ 0.7 remains consistent in the single-server case, while in the multi-server case, for β ≥ 0.5, the throughput surpasses that of the Markov chain queuing system.
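The abstract relies on mean value analysis (MVA) of a closed queuing network. The sketch below is the standard exact single-class MVA recursion for single-server stations, not the paper's distributed DMVA variant. By the arrival theorem, a job arriving at a station in a network with n jobs sees the equilibrium state of the same network with n − 1 jobs. Little's law then closes the recursion. The parameter names (`demands`, `think_time`) are hypothetical.

```python
def mva(demands, population, think_time=0.0):
    """Exact MVA for a closed, single-class network of single-server FCFS stations.

    demands: service demand D_k = visit ratio * mean service time at each station.
    population: number of circulating jobs N.
    think_time: optional delay (infinite-server) time Z outside the stations.
    Returns (throughput, list of mean queue lengths) at population N.
    """
    queue = [0.0] * len(demands)
    throughput = 0.0
    for n in range(1, population + 1):
        # Arrival theorem: an arriving job sees the mean queue lengths at population n - 1.
        residence = [d * (1.0 + q) for d, q in zip(demands, queue)]
        throughput = n / (think_time + sum(residence))
        # Little's law applied at each station.
        queue = [throughput * r for r in residence]
    return throughput, queue


if __name__ == "__main__":
    # Two identical stations with unit demand and two circulating jobs.
    x, q = mva([1.0, 1.0], 2)
    print(x, q)  # throughput 2/3, one job per station on average
```

The recursion costs O(N·K) for N jobs and K stations and never enumerates the state space. This is why MVA is attractive for product-form closed networks.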

Optimal Surplus Capacity Utilization in Polling Systems Via Fluid Models

Optimal Surplus Capacity Utilization in Polling Systems via Fluid Models

Ayush Rawal, Veeraruna Kavitha and Manu K. Gupta, Industrial Engineering and Operations Research, IIT Bombay, Powai, Mumbai - 400076, India. E-mail: {ayush.rawal, vkavitha, manu.gupta}@iitb.ac.in

Abstract: We discuss the idea of differential fairness in polling systems. One such example scenario is: primary customers demand a certain Quality of Service (QoS), and the idea is to utilize the surplus server capacity to serve a secondary class of customers. We use the achievable region approach for this. Towards this, we consider a two-queue polling system and study its 'approximate achievable region' using a new class of delay-priority schedulers. We obtain this approximate region via a limit polling system with fluid queues. The approximation is accurate in the limit when the arrival rates and the service rates converge towards infinity while the load factor and the ratio of arrival rates are kept fixed. We show that the set of proposed schedulers and the exhaustive schedulers form a complete class: every point in the region is achieved by one of those schedulers.

…while maintaining the QoS requirements of primary customers. One can model this scenario with polling systems when it takes non-zero time to switch the service between the two classes of customers. Another example scenario is that of data and voice users utilizing the same wireless network. In this case, the network needs to keep the drop probability of the impatient voice customers (who drop calls if not picked up within negligible waiting times) below an acceptable level. Alongside, it also needs to optimize the expected sojourn times of the data calls. Polling systems can be categorized on the basis of different
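The excerpt stresses that switching the server between classes takes non-zero time. A basic consequence, assuming a server that keeps cycling through the queues as in exhaustive or gated polling, is the following. The long-run fraction of time spent switching is 1 − ρ, so the mean cycle time is E[C] = S/(1 − ρ), where S is the total mean switchover time per cycle. The mean time spent at queue i per visit is ρᵢE[C]. The sketch below evaluates these identities; it is not the paper's fluid-limit analysis, and the function name is hypothetical.

```python
def polling_cycle_time(switchover_means, loads):
    """Mean cycle time and mean visit times of a cyclic polling system.

    switchover_means: mean switchover time into each queue (time units).
    loads: per-queue load rho_i = lambda_i * E[S_i].
    Assumes a stable system (sum of loads < 1) and a server that keeps cycling.
    """
    rho = sum(loads)
    if not 0.0 <= rho < 1.0:
        raise ValueError("unstable polling system: need total load < 1")
    cycle = sum(switchover_means) / (1.0 - rho)
    visits = [r * cycle for r in loads]  # server spends fraction rho_i of time at queue i
    return cycle, visits


if __name__ == "__main__":
    # Two queues (e.g. primary and secondary class) with equal switchover times.
    print(polling_cycle_time([0.5, 0.5], [0.3, 0.2]))  # cycle 2.0, visits about 0.6 and 0.4
```

The cycle time blows up as the total load approaches 1, which is one reason surplus capacity for a secondary class is limited once switchover times are non-zero.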

An Extension of the Matrix-Analytic Method for M/G/1-Type Markov Processes

Journal of the Operations Research Society of Japan
© The Operations Research Society of Japan
Vol. 58, No. 4, October 2015, pp. 376–393

AN EXTENSION OF THE MATRIX-ANALYTIC METHOD FOR M/G/1-TYPE MARKOV PROCESSES

Yoshiaki Inoue (Osaka University; Research Fellow of JSPS), Tetsuya Takine (Osaka University)

(Received December 25, 2014; Revised May 23, 2015)

Abstract: We consider a bivariate Markov process {(U(t), S(t)); t ≥ 0}, where U(t) (t ≥ 0) takes values in [0, ∞) and S(t) (t ≥ 0) takes values in a finite set. We assume that U(t) (t ≥ 0) is skip-free to the left, and therefore we call it the M/G/1-type Markov process. The M/G/1-type Markov process was first introduced as a generalization of the workload process in the MAP/G/1 queue, and its stationary distribution was analyzed under the strong assumption that the conditional infinitesimal generator of the underlying Markov chain S(t) given U(t) > 0 is irreducible. In this paper, we extend known results for the stationary distribution to the case where the conditional infinitesimal generator of the underlying Markov chain given U(t) > 0 is reducible. With this extension, those results become applicable to the analysis of a certain class of queueing models.

Keywords: Queue, bivariate Markov process, skip-free to the left, matrix-analytic method, reducible infinitesimal generator, MAP/G/1 queue

1. Introduction

We consider a bivariate Markov process {(U(t), S(t)); t ≥ 0}, where U(t) and S(t) are referred to as the level and the phase, respectively, at time t.

BUSF 40901-1/CMSC 34901-1: Stochastic Performance Modeling Winter 2014

BUSF 40901-1/CMSC 34901-1: Stochastic Performance Modeling, Winter 2014
Syllabus (January 15, 2014)

Instructor: Varun Gupta
Office: 331 Harper Center
Phone: 773-702-7315
Office hours: by appointment
Class times: Wed, Fri, 10:10–11:30 am, Harper Center (3A)
Final exam (tentative): Friday, March 21, 8:00–11:00 am, Harper Center (3A)
Course website: http://chalk.uchicago.edu

Course Objectives
This is an introductory course in queueing theory and performance modeling, with applications including but not limited to service operations (healthcare, call centers) and computer system resource management (from datacenter to kernel level). The aim of the course is two-fold:
1. Build insights into best practices for designing service systems (How many service stations should I provision? What speed? How should I separate/prioritize customers based on their service requirements?)
2. Build a basic toolbox for analyzing queueing systems in particular and stochastic processes in general.

Tentative list of topics: Open/closed queueing networks; Operational laws; M/M/1 queue; Burke's theorem and reversibility; M/M/k queue; M/G/1 queue; G/M/1 queue; PH/PH/k queues and their solution using matrix-analytic methods; Arrival theorem and Mean Value Analysis; Analysis of scheduling policies (e.g., Last-Come-First-Served; Processor Sharing); Jackson networks and the BCMP theorem (product-form networks); Asymptotic analysis (M/M/k queue in heavy/light traffic, supermarket model in mean-field regime)

Prerequisites
Exposure to undergraduate probability (random variables, discrete and continuous probability distributions, discrete-time Markov chains) and calculus is required.

3 Lecture 3: Queueing Networks and Their Fluid and Diffusion Approximation

3 Lecture 3: Queueing Networks and their Fluid and Diffusion Approximation
• Fluid and diffusion scales
• Queueing networks
• Fluid and diffusion approximation of Generalized Jackson networks
• Reflected Brownian Motions
• Routing in single class queueing networks
• Multi class queueing networks
• Stability of policies for MCQN via fluid
• Optimization of MCQN: MDP and the fluid model
• Formulation of the fluid problem for a MCQN

3.1 Fluid and diffusion scales

As we saw, queues can only be studied under some very detailed assumptions. If we are willing to assume Poisson arrivals and exponential service, we get a very complete description of the queueing process, in which we can study almost every phenomenon of interest by analytic means. However, much of what we see in M/M/1 simply does not describe what goes on in non-M/M/1 systems. If we give up the assumption of exponential service times, it needs to be replaced by a distribution function G. Fortunately, M/G/1 can also be analyzed in moderate detail, and quite a lot can be said about it that does not depend too much on the detailed form of G. In particular, for the M/G/1 system in steady state, we can calculate each moment of the queue length and waiting time in terms of the moments of G up to one order higher. So we can evaluate the performance of M/G/1 without too many details on G; sometimes E(G) and c_G² suffice. Again, the behavior of M/G/1 does not describe what goes on in GI/G/1 systems.
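Since the M/G/1 formulas do not carry over to GI/G/1 systems, a simple way to study a FCFS GI/G/1 queue numerically is to simulate Lindley's recursion for successive customers' waiting times, W_{n+1} = max(0, W_n + S_n − A_{n+1}). Here S_n is the n-th service time and A_{n+1} is the next interarrival time. The sketch below does that, taking the two distributions as sampling callables. The interface is a hypothetical choice, and the result is a simulation estimate subject to sampling error, not an exact value.

```python
import random


def simulate_gg1_wait(interarrival, service, customers=200_000, seed=1):
    """Estimate the mean FCFS waiting time in a GI/G/1 queue via Lindley's recursion.

    interarrival, service: callables taking a random.Random and returning one sample.
    Starts from an empty system; the initial transient is ignored (an assumption).
    """
    rng = random.Random(seed)
    w = 0.0      # waiting time of the current customer
    total = 0.0
    for _ in range(customers):
        total += w
        # Next customer's wait: current wait plus its service, minus the time to the next arrival.
        w = max(0.0, w + service(rng) - interarrival(rng))
    return total / customers


if __name__ == "__main__":
    # M/M/1 check (lambda = 0.5, mu = 1): the exact mean wait is 1.0.
    print(simulate_gg1_wait(lambda r: r.expovariate(0.5), lambda r: r.expovariate(1.0)))
    # A genuinely non-M/G/1 case: uniform interarrivals, Erlang-2 service.
    print(simulate_gg1_wait(lambda r: r.uniform(1.0, 3.0),
                            lambda r: r.expovariate(2.0) + r.expovariate(2.0)))
```

Comparing the M/M/1 run against the exact value is a cheap validation step before trusting the estimate in cases without a closed form.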