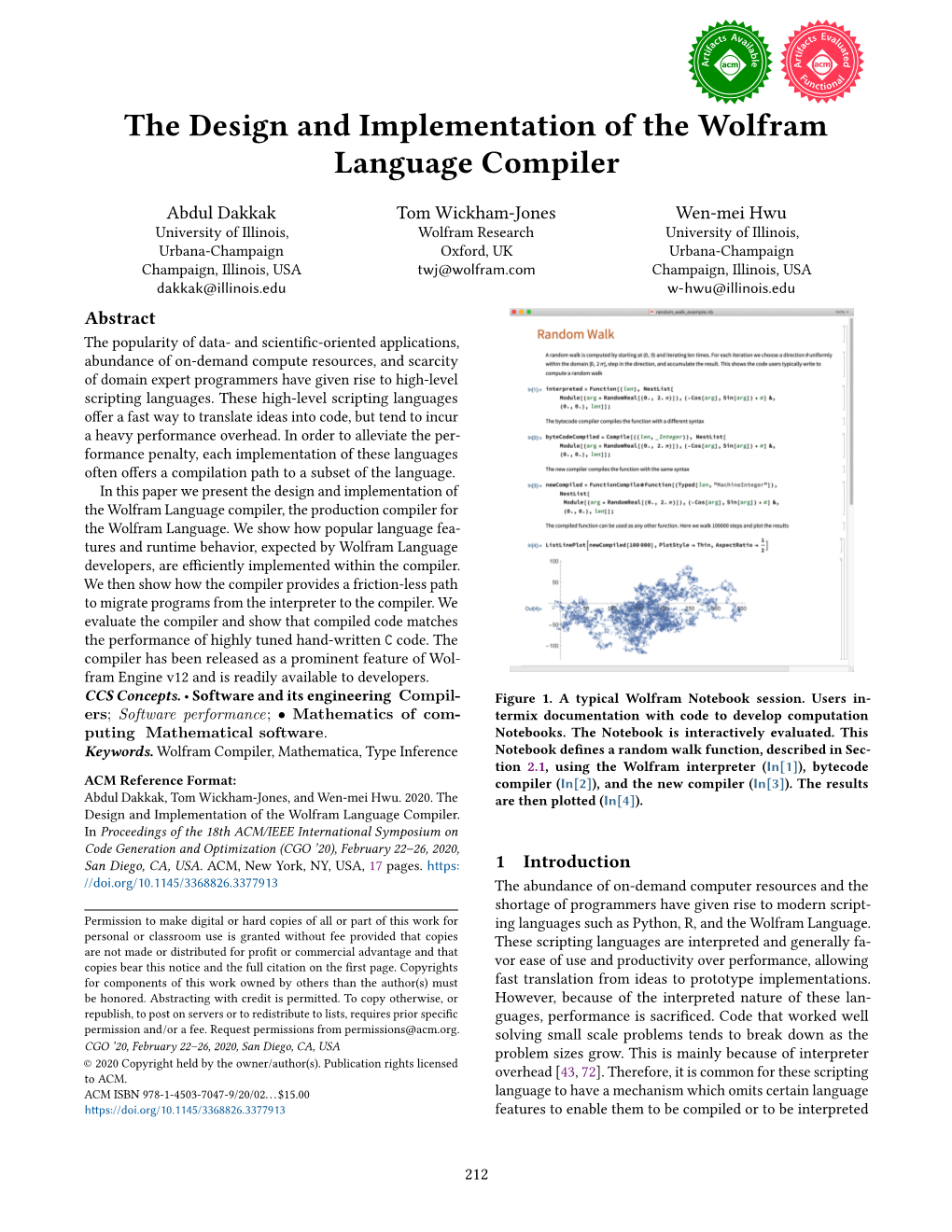

The Design and Implementation of the Wolfram Language Compiler

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Rapid Research with Computer Algebra Systems

doi: 10.21495/71-0-109 25th International Conference ENGINEERING MECHANICS 2019 Svratka, Czech Republic, 13 – 16 May 2019 RAPID RESEARCH WITH COMPUTER ALGEBRA SYSTEMS C. Fischer* Abstract: Computer algebra systems (CAS) are gaining popularity not only among young students and scholars but also as a tool for serious work. These highly complicated software systems, which used to just be regarded as toys for computer enthusiasts, have reached maturity. Nowadays such systems are available on a variety of computer platforms, starting from freely-available on-line services up to complex and expensive software packages. The aim of this review paper is to show some selected capabilities of CAS and point out some problems with their usage from the point of view of 25 years of experience. Keywords: Computer algebra system, Methodology, Wolfram Mathematica 1. Introduction The Wikipedia page (Wikipedia contributors, 2019a) defines CAS as a package comprising a set of algorithms for performing symbolic manipulations on algebraic objects, a language to implement them, and an environment in which to use the language. There are 35 different systems listed on the page, four of them discontinued. The oldest one, Reduce, was publicly released in 1968 (Hearn, 2005) and is still available as an open-source project. Maple (2019a) is among the most popular CAS. It was first publicly released in 1984 (Maple, 2019b) and is still popular, also among users in the Czech Republic. PTC Mathcad (2019) was published in 1986 in DOS as an engineering calculation solution, and gained popularity for its ability to work with typeset mathematical notation in combination with automatic computations. -

Jupyter Notebooks—A Publishing Format for Reproducible Computational Workflows

Jupyter Notebooks—a publishing format for reproducible computational workflows Thomas KLUYVERa,1, Benjamin RAGAN-KELLEYb,1, Fernando PÉREZc, Brian GRANGERd, Matthias BUSSONNIERc, Jonathan FREDERICd, Kyle KELLEYe, Jessica HAMRICKc, Jason GROUTf, Sylvain CORLAYf, Paul IVANOVg, Damián AVILAh, Safia ABDALLAi, Carol WILLINGd and Jupyter Development Teamj a University of Southampton, UK b Simula Research Lab, Norway c University of California, Berkeley, USA d California Polytechnic State University, San Luis Obispo, USA e Rackspace f Bloomberg LP g Disqus h Continuum Analytics i Project Jupyter j Worldwide Abstract. It is increasingly necessary for researchers in all fields to write computer code, and in order to reproduce research results, it is important that this code is published. We present Jupyter notebooks, a document format for publishing code, results and explanations in a form that is both readable and executable. We discuss various tools and use cases for notebook documents. Keywords. Notebook, reproducibility, research code 1. Introduction Researchers today across all academic disciplines often need to write computer code in order to collect and process data, carry out statistical tests, run simulations or draw figures. The widely applicable libraries and tools for this are often developed as open source projects (such as NumPy, Julia, or FEniCS), but the specific code researchers write for a particular piece of work is often left unpublished, hindering reproducibility. Some authors may describe computational methods in prose, as part of a general description of research methods. -

Deep Dive on Project Jupyter

AIM413 Deep dive on Project Jupyter Dr. Brian E. Granger, Principal Technical Program Manager, Amazon Web Services; Co-Founder and Leader, Project Jupyter © 2019, Amazon Web Services, Inc. or its affiliates. All rights reserved. Project Jupyter exists to develop open-source software, open standards and services for interactive and reproducible computing. https://jupyter.org/ Overview • Project Jupyter is a multi-stakeholder, open source project. • Jupyter’s flagship application is the Jupyter Notebook. • Notebook document format: • Live code, narrative text, equations, images, visualizations, audio. • ~100 programming languages supported. • Over 500 contributors across >100 GitHub repositories. • Part of the NumFOCUS Foundation: • Along with NumPy, Pandas, Julia, Matplotlib,… Who uses Jupyter and how? Who: Students/Teachers, Data Engineers, Data Scientists, Researchers, Scientists, ML Engineers, ML Researchers, Analysts. How: Data science, Machine learning, Scientific computing, Data cleaning and transformation, Exploratory data analysis, Analytics, Simulation, Algorithm development, Reporting, Data visualization. Jupyter has a large and diverse user community • Many millions of Jupyter users worldwide • Thousands of AWS customers • Highly international • Over 5M public notebooks on GitHub alone Example: Dive into Deep Learning • Dive into Deep Learning is a free, open-source book about deep learning. • Zhang, Lipton, Li, Smola from AWS • All content is Jupyter Notebooks on GitHub. • 890 page PDF, hundreds of notebooks • https://d2l.ai/ Common threads • Jupyter serves an extremely broad range of personas, usage cases, industries, and applications • What do these have in common?
Ideas of Jupyter • Computational narrative • “Real-time thinking” with a computer • Direct manipulation user interfaces that augment the writing of code “Computers are good at consuming, producing, and processing data. -

Books About Computing Tools

Books about Programming and Computing Tools. Separate Section of: Books about Computing, Programming, Algorithms, and Software Development. Collection of References edited by Stanislav Sýkora. Permalink via DOI: 10.3247/SL6Refs16.001. This growing compilation includes titles yet to be released (they have a month specified in the release date). The entries are sorted by publication year and the first Author. Green-color titles indicate educational texts. Advance notices (years ≥ 2016): 1. Garvan Frank, The Maple Book, 2nd Edition, Chapman and Hall/CRC, February 2016. ISBN 978-1439898286. 2. Green Dale, Procedural Content Generation for C++ Game Development, Packt Publishing, March 2016. 3. Guido Sarah, Introduction to Machine Learning with Python, O'Reilly Media, January 2016. -

Understanding Differential Equations Using Mathematica and Interactive Demonstrations Paritosh Mokhasi

CODEE Journal Volume 9 Article 8 11-25-2012 Understanding Differential Equations Using Mathematica and Interactive Demonstrations Paritosh Mokhasi James Adduci Devendra Kapadia Follow this and additional works at: http://scholarship.claremont.edu/codee Recommended Citation Mokhasi, Paritosh; Adduci, James; and Kapadia, Devendra (2012) "Understanding Differential Equations Using Mathematica and Interactive Demonstrations," CODEE Journal: Vol. 9, Article 8. Available at: http://scholarship.claremont.edu/codee/vol9/iss1/8 This Article is brought to you for free and open access by the Journals at Claremont at Scholarship @ Claremont. It has been accepted for inclusion in CODEE Journal by an authorized administrator of Scholarship @ Claremont. For more information, please contact [email protected]. Understanding Differential Equations Using Mathematica and Interactive Demonstrations Paritosh Mokhasi, James Adduci and Devendra Kapadia Wolfram Research, Inc., Champaign, IL. Keywords: Mathematica, Wolfram Demonstrations Project Manuscript received on May 24, 2012; published on November 25, 2012. Abstract: The solution of differential equations using the software package Mathematica is discussed in this paper. We focus on two functions, DSolve and NDSolve, and give various examples of how one can obtain symbolic or numerical results using these functions. An overview of the Wolfram Demonstrations Project is given, along with various novel user-contributed examples in the field of differential equations. The use of these Demonstrations in a classroom setting is elaborated upon to emphasize their significance for education. 1 Introduction 1 Mathematica is a powerful software package used for all kinds of symbolic and numerical computations. It has been available for around 25 years. Mathematica is sometimes viewed as a very sophisticated calculator useful for solving a variety of different problems, including differential equations. -

The World of Scripting Languages Pdf, Epub, Ebook

THE WORLD OF SCRIPTING LANGUAGES PDF, EPUB, EBOOK David Barron | 506 pages | 02 Aug 2000 | John Wiley & Sons Inc | 9780471998860 | English | New York, United States The World of Scripting Languages PDF Book How to get value of selected radio button using JavaScript? She wrote an algorithm for the Analytical Engine that was the first of its kind. There is no specific rule on what is, or is not, a scripting language. Most computer programming languages were inspired by or built upon concepts from previous computer programming languages. Anyhow, that led to me singing the praises of Python the same way that someone would sing the praises of a lover who ditched them unceremoniously. Find the best bootcamp for you Our matching algorithm will connect you to job training programs that match your schedule, finances, and skill level. Java is not the same as JavaScript. Found on all windows and Linux servers. All content from Kiddle encyclopedia articles including the article images and facts can be freely used under Attribution-ShareAlike license, unless stated otherwise. Looking for more information like this? Timur Meyster in Applying to Bootcamps. Primarily, JavaScript is light weighed, interpreted and plays a major role in front-end development. These can be used to control jobs on mainframes and servers. Recover your password. It accounts for garbage allocation, memory distribution, etc. It is easy to learn and was originally created as a tool for teaching computer programming. Kyle Guercio - January 21, 0. Data Science. Java is everywhere, from computers to smartphones to parking meters. Requires less code than modern programming languages. -

SENTINEL-2 DATA PROCESSING USING R Case Study: Imperial Valley, USA

TRAINING KIT – R01 DFSDFGAGRIAGRA SENTINEL-2 DATA PROCESSING USING R Case Study: Imperial Valley, USA Research and User Support for Sentinel Core Products The RUS Service is funded by the European Commission, managed by the European Space Agency and operated by CSSI and its partners. Authors would be glad to receive your feedback or suggestions and to know how this material was used. Please, contact us on [email protected] Cover image credits: ESA The following training material has been prepared by Serco Italia S.p.A. within the RUS Copernicus project. Date of publication: November 2020 Version: 1.1 Suggested citation: Serco Italia SPA (2020). Sentinel-2 data processing using R (version 1.1). Retrieved from RUS Lectures at https://rus-copernicus.eu/portal/the-rus-library/learn-by-yourself/ This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. DISCLAIMER While every effort has been made to ensure the accuracy of the information contained in this publication, RUS Copernicus does not warrant its accuracy or will, regardless of its or their negligence, assume liability for any foreseeable or unforeseeable use made of this publication. Consequently, such use is at the recipient’s own risk on the basis that any use by the recipient constitutes agreement to the terms of this disclaimer. The information contained in this publication does not purport to constitute professional advice. Table of Contents 1 Introduction to RUS -

Final Report for the Redus Project

FINAL REPORT FOR THE REDUS PROJECT Reduced Uncertainty in Stock Assessment Erik Olsen (HI), Sondre Aanes (Norwegian Computing Center), Magne Aldrin (Norwegian Computing Center), Olav Nikolai Breivik (Norwegian Computing Center), Edvin Fuglebakk, Daisuke Goto, Nils Olav Handegard, Cecilie Hansen, Arne Johannes Holmin, Daniel Howell, Espen Johnsen, Natoya Jourdain, Knut Korsbrekke, Ono Kotaro, Håkon Otterå, Holly Ann Perryman, Samuel Subbey, Guldborg Søvik, Ibrahim Umar, Sindre Vatnehol and Jon Helge Vølstad (HI) RAPPORT FRA HAVFORSKNINGEN NR. 2021-16 Title (Norwegian and English): Sluttrapport for REDUS-prosjektet / Final report for the REDUS project. Subtitle (Norwegian and English): Reduced Uncertainty in Stock Assessment. Report series: Rapport fra havforskningen. Year - No.: 2021-16. Date: 17.03.2021. Distribution: Open. ISSN: 1893-4536. Project no.: 14809. Program: Marine processes and human impact. Research group(s): Benthic resources and processes, Demersal fish, Fishery dynamics, Pelagic fish, Marine mammals, Ecosystem acoustics, Ecosystem processes. Approved by: Research director(s): Geir Huse. Program leader(s): Frode Vikebø -

Thesis (1.484Mb)

Master's Thesis, MASTER'S IN COMPUTER ENGINEERING, Escola Politècnica Superior, Universitat de Lleida. Optimization Module for Transport Resources. Adrià Vall-llaura Salas. Supervisors: Antonio Llubes, Josep Lluís Lérida. Date: June 2017. Preface: This project was developed to solve a day-to-day problem at a transport company. It is based on the design and implementation of a mathematical model intended to optimize and automate the company's trip-planning system. To implement the algorithm, several modules had to be created that extract data from the ERP system, process it, and send it to a (REST) web service, which returns an optimal matching between the company's vehicles and the customers' orders. The first phase of the project, the theoretical one, was long compared with the others. In this phase the state of the art was studied and many of the most important transport-related models were reviewed in order to understand their particularities. With the concepts well understood, a new mathematical model was developed, adapted to the business-logic needs of the transport company that is the subject of this work. The module implementation phase followed. In this phase I ran into several technological limitations due to the age of the ERP and the use of the Windows operating system. Several performance problems also arose, which led me to redesign the data extraction from the ERP, the distance calculation, and the optimization module. -

WOLFRAM EDUCATION SOLUTIONS MATHEMATICA® TECHNOLOGIES for TEACHING and RESEARCH About Wolfram Research

WOLFRAM EDUCATION SOLUTIONS MATHEMATICA® TECHNOLOGIES FOR TEACHING AND RESEARCH About Wolfram Research For over two decades, Wolfram Research has been dedicated to developing tools that inspire exploration and innovation. As we work toward our goal to make the world’s data computable, we have expanded our portfolio to include a variety of products and technologies that, when combined, provide a true campuswide solution. At the center is Mathematica—our ever-advancing core product that has become the ultimate application for computation, visualization, and development. With millions of dedicated users throughout the technical and educational communities, Mathematica is used for everything from teaching simple concepts in the classroom to doing serious research using some of the world’s largest clusters. Wolfram’s commitment to education spans from elementary education to research universities. Through our free educational resources, STEM teacher workshops, and on-campus technical talks, we interact with educators whose feedback we rely on to develop tools that support their changing needs. Just as Mathematica revolutionized technical computing 20 years ago, our ongoing development of Mathematica technology and continued dedication to education are transforming the composition of tomorrow’s classroom. With more added all the time, Wolfram educational resources bolster pedagogy and support technology for classrooms and campuses everywhere. Favorites among educators include: Wolfram|Alpha®, the Wolfram Demonstrations Project™, MathWorld™, -

Exploratory Data Science Using a Literate Programming Tool Mary Beth Kery1 Marissa Radensky2 Mahima Arya1 Bonnie E

The Story in the Notebook: Exploratory Data Science using a Literate Programming Tool Mary Beth Kery1 Marissa Radensky2 Mahima Arya1 Bonnie E. John3 Brad A. Myers1 1Human-Computer Interaction Institute, Carnegie Mellon University, Pittsburgh, PA 2Amherst College, Amherst, MA 3Bloomberg L. P., New York City, NY [email protected] [email protected] mkery, mahimaa, bam @cs.cmu.edu ABSTRACT Literate programming tools are used by millions of programmers today, and are intended to facilitate presenting data analyses in the form of a narrative. We interviewed 21 data scientists to study coding behaviors in a literate programming environment and how data scientists kept track of variants they explored. For participants who tried to keep a detailed history of their experimentation, both informal and formal versioning attempts led to problems, such as reduced notebook readability. During iteration, participants actively curated their notebooks into narratives, although primarily through cell structure rather than markdown explanations. Next, we surveyed 45 data scientists and asked them to [...] engineers, many of whom never receive formal training in software engineering [30]. As even more technical novices engage with code and data manipulations, it is key to have end-user programming tools that address barriers to doing effective data science. For instance, programming with data often requires heavy exploration with different ways to manipulate the data [14,23]. Currently even experts struggle to keep track of the experimentation they do, leading to lost work, confusion over how a result was achieved, and difficulties effectively ideating [11]. Literate programming has recently arisen as a promising direction to address some of these problems [17]. -

2019 Annual Report

ANNUAL REPORT 2019 TABLE OF CONTENTS: LETTER FROM THE BOARD CO-CHAIRPERSON. PROJECTS: New Sponsored Projects, Project Highlights from 2019, Project Events, Case Studies, Affiliated Projects, NumFOCUS Services to Projects. PROGRAMS: PyData, PyData Meetups, PyData Conferences, PyData Videos, Small Development Grants to NumFOCUS Projects, Inaugural Visiting Fellow, Diversity and Inclusion in Scientific Computing (DISC), Google Season of Docs, Google Summer of Code, Sustainability Program. GRANTS: Major Grants to Sponsored Projects through NumFOCUS. SUPPORT: 2019 NumFOCUS Corporate Sponsors, Donor List. FINANCIALS: Revenue & Expenses, Project Income Detail, Project EOY Balance Detail. PEOPLE: Staff, Board of Directors, Advisory Council. LETTER FROM THE BOARD CO-CHAIRPERSON NumFOCUS was founded in