Exploratory Data Science Using a Literate Programming Tool Mary Beth Kery1 Marissa Radensky2 Mahima Arya1 Bonnie E

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Using Mathematica As a Teaching Tool in the Undergraduate Economics Curriculum

USING MATHEMATICA AS A TEACHING TOOL IN THE UNDERGRADUATE ECONOMICS CURRICULUM Gary Hodgin* Abstract In recent years, the quantitative skills required of economics students have increased significantly. Students often experience difficulty in making the transition from their mathematics courses to the use of mathematics in their economics courses. Symbolic computation programs are mathematical tools that can potentially smooth this transition. This paper illustrates a few ways in which one symbolic computation program, Mathematica, has been used in the undergraduate economics curriculum. I. Introduction The undergraduate curriculum in economics has become increasingly quantitative. An economics major typically includes college algebra, statistics, and some form of calculus in its degree requirements. In addition, mathematical economics and econometrics are now standard courses in many undergraduate programs. Moreover, this quantitative emphasis generally extends to other courses across the economics curriculum, particularly the intermediate theory and managerial economics courses. For example, approximately 90 percent of the intermediate microeconomics instructors included in von Allmen and Brower's survey (1998, 279) use calculus in their courses. Although one might question whether too much emphasis is placed on quantitative skills, there appears to be consensus among economics professors that mathematics is an important ingredient in undergraduate economic education. Over the past three years, I have used a symbolic computation program entitled Mathematica in two of my intermediate microeconomics and three of my managerial economics courses. My observations in these courses suggest that some students experience difficulty in making the transition from their courses in mathematics to their use of mathematics in economics. By smoothing this transition, symbolic computation programs can assist students in learning economics.

Knuthweb.Pdf

Literate Programming Donald E. Knuth Computer Science Department, Stanford University, Stanford, CA 94305, USA The author and his associates have been experimenting for the past several years with a programming language and documentation system called WEB. This paper presents WEB by example, and discusses why the new system appears to be an improvement over previous ones. A. INTRODUCTION The past ten years have witnessed substantial improvements in programming methodology. This advance, carried out under the banner of “structured programming,” has led to programs that are more reliable and easier to comprehend; yet the results are not entirely satisfactory. My purpose in the present paper is to propose another motto that may be appropriate for the next decade, as we attempt to make further progress in the state of the art. I believe that the time is ripe for significantly better documentation of programs, and that we can best achieve this by considering programs [...] I would ordinarily have assigned to student research assistants; and why? Because it seems to me that at last I’m able to write programs as they should be written. My programs are not only explained better than ever before; they also are better programs, because the new methodology encourages me to do a better job. For these reasons I am compelled to write this paper, in hopes that my experiences will prove to be relevant to others. I must confess that there may also be a bit of malice in my choice of a title. During the 1970s I was coerced like everybody else into adopting the ideas of structured programming, because I couldn’t bear to be found guilty of writing unstructured programs.

Programming Pearls: a Literate Program

Programming Pearls, by Jon Bentley, with Special Guest Oysters Don Knuth and Doug McIlroy. A LITERATE PROGRAM Last month’s column introduced Don Knuth’s style of “Literate Programming” and his WEB system for building programs that are works of literature. This column presents a literate program by Knuth (its origins are sketched in last month’s column) and, as befits literature, a review. So without further ado, here is Knuth’s program, retypeset in Communications style. -Jon Bentley Common Words (by section): Introduction, 1; Strategic considerations, 8; Basic input routines, 9; Dictionary lookup, 17; The frequency counts, 32; Sorting a trie, 36; The endgame, 41; Index, 42. 1. Introduction. The purpose of this program is to [...] frequency; or there might not even be as many as k words. Let’s be more precise: The most common words are to be printed in order of decreasing frequency, with words of equal frequency listed in alphabetic order. Printing should stop after k words have been output, if more than k words are present. 2. The input file is assumed to contain the given text. If it begins with a positive decimal number (preceded by optional blanks), that number will be the value of k; otherwise we shall assume that k = 100. Answers will be sent to the output file. define default_k = 100 (use this value if k isn’t otherwise specified) 3. Besides solving the given problem, this program is supposed to be an example of the WEB system, for people who know some Pascal but who have never seen WEB before.
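The problem statement in the excerpt above (the k most common words, in decreasing frequency, ties in alphabetic order, k taken from a leading number or defaulting to 100) can be sketched in a few lines of Python. This is only an illustration of the specification, not Knuth's WEB/Pascal program, which builds its own dictionary structure. The function name `common_words` and the definition of a word as a maximal run of ASCII letters, compared case-insensitively, are assumptions, since the excerpt is cut off before it defines a word.

```python
import re
from collections import Counter

DEFAULT_K = 100  # mirrors default_k in the excerpt


def common_words(text, default_k=DEFAULT_K):
    """Return up to k (word, count) pairs: most frequent first, ties alphabetical."""
    # A leading positive decimal number (after optional blanks) sets k.
    m = re.match(r"[ \t]*(\d+)", text)
    if m and int(m.group(1)) > 0:
        k = int(m.group(1))
        text = text[m.end():]
    else:
        k = default_k
    # Assumption: a word is a maximal run of ASCII letters, case-insensitive.
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    # Sort by descending count, then alphabetically, and stop after k words.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]
```

Calling `common_words("2 the cat and the dog and the bird")` takes k = 2 from the leading number and returns the two most frequent words with their counts.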

Jupyter Notebooks—A Publishing Format for Reproducible Computational Workflows

Jupyter Notebooks—a publishing format for reproducible computational workflows Thomas KLUYVER a,1, Benjamin RAGAN-KELLEY b,1, Fernando PÉREZ c, Brian GRANGER d, Matthias BUSSONNIER c, Jonathan FREDERIC d, Kyle KELLEY e, Jessica HAMRICK c, Jason GROUT f, Sylvain CORLAY f, Paul IVANOV g, Damián AVILA h, Safia ABDALLA i, Carol WILLING d and Jupyter Development Team j. a University of Southampton, UK; b Simula Research Lab, Norway; c University of California, Berkeley, USA; d California Polytechnic State University, San Luis Obispo, USA; e Rackspace; f Bloomberg LP; g Disqus; h Continuum Analytics; i Project Jupyter; j Worldwide. Abstract. It is increasingly necessary for researchers in all fields to write computer code, and in order to reproduce research results, it is important that this code is published. We present Jupyter notebooks, a document format for publishing code, results and explanations in a form that is both readable and executable. We discuss various tools and use cases for notebook documents. Keywords. Notebook, reproducibility, research code 1. Introduction Researchers today across all academic disciplines often need to write computer code in order to collect and process data, carry out statistical tests, run simulations or draw figures. The widely applicable libraries and tools for this are often developed as open source projects (such as NumPy, Julia, or FEniCS), but the specific code researchers write for a particular piece of work is often left unpublished, hindering reproducibility. Some authors may describe computational methods in prose, as part of a general description of research methods.

Deep Dive on Project Jupyter

AIM413 Deep dive on Project Jupyter. Dr. Brian E. Granger, Principal Technical Program Manager, Amazon Web Services; Co-Founder and Leader, Project Jupyter. © 2019, Amazon Web Services, Inc. or its affiliates. All rights reserved. Project Jupyter exists to develop open-source software, open standards and services for interactive and reproducible computing. https://jupyter.org/ Overview • Project Jupyter is a multi-stakeholder, open source project. • Jupyter’s flagship application is the Jupyter Notebook. • Notebook document format: • Live code, narrative text, equations, images, visualizations, audio. • ~100 programming languages supported. • Over 500 contributors across >100 GitHub repositories. • Part of the NumFOCUS Foundation: • Along with NumPy, Pandas, Julia, Matplotlib,… (Figure labels: Text, Math, Live code, Charts.) Who uses Jupyter and how? Who: Students/Teachers, Data Engineers, Data Scientists, Researchers, Scientists, ML Engineers, ML Researchers, Analysts. How: Data science, Machine learning, Scientific computing, Data cleaning and transformation, Exploratory data analysis, Analytics, Simulation, Algorithm development, Reporting, Data visualization. Jupyter has a large and diverse user community • Many millions of Jupyter users worldwide • Thousands of AWS customers • Highly international • Over 5M public notebooks on GitHub alone Example: Dive into Deep Learning • Dive into Deep Learning is a free, open-source book about deep learning. • Zhang, Lipton, Li, Smola from AWS • All content is Jupyter Notebooks on GitHub. • 890 page PDF, hundreds of notebooks • https://d2l.ai/ Common threads • Jupyter serves an extremely broad range of personas, usage cases, industries, and applications • What do these have in common? Ideas of Jupyter • Computational narrative • “Real-time thinking” with a computer • Direct manipulation user interfaces that augment the writing of code “Computers are good at consuming, producing, and processing data.

Teaching Foundations of Computation and Deduction Through Literate Functional Programming and Type Theory Formalization

Teaching Foundations of Computation and Deduction Through Literate Functional Programming and Type Theory Formalization Gérard Huet Inria Paris Laboratory, France Abstract We describe experiments in teaching fundamental informatics notions around mathematical structures for formal concepts, and effective algorithms to manipulate them. The major themes of lambda-calculus and type theory served as guides for the effective implementation of functional programming languages and higher-order proof assistants, appropriate for reflecting the theoretical material into effective tools to represent constructively the concepts and formally certify the proofs of their properties. Progressively, a literate programming and proving style replaced informal mathematics in the presentation of the material as executable course notes. The talk will evoke the various stages of (in)completion of the corresponding set of notes along the years (see [1, 2, 14, 5, 6, 10, 12, 16, 7, 15, 17, 9, 3, 13, 11, 4, 8]), and tell how their elaboration proved to be essential to the discovery of fundamental results. 1998 ACM Subject Classification F.3.1 Specifying and Verifying Programs, F.4.1 Mathematical Logic and G.4 Mathematical Software Keywords and phrases Foundations, Computation, Deduction, Programming, Proofs Digital Object Identifier 10.4230/LIPIcs.FSCD.2016.3 Category Invited Talk References 1 Gérard Huet. Initiation à la logique mathématique. Notes de cours du DEA d’Informatique, Université Paris-Sud, 1977. 2 Gérard Huet. Démonstration automatique en logique de premier ordre et programmation en clauses de Horn. Notes de cours du DEA d’Informatique, Université Paris-Sud, 1978. 3 Gérard Huet. Confluent reductions: Abstract properties and applications to term rewriting systems.

Typeset MMIX Programs with TEX Udo Wermuth Abstract a TEX Macro

TUGboat, Volume 35 (2014), No. 3, p. 297. Typeset MMIX programs with TEX Udo Wermuth Abstract A TEX macro package is presented as a literate program. It can be included in programs written in the languages MMIX or MMIXAL without affecting the assembler. Such an instrumented file can be processed by TEX to get nicely formatted output. Only a new first line and a new last line must be entered. And for each end-of-line comment a flag is set to indicate that the comment is written in TEX. How to read the following program The text that starts in the next chapter is a literate program [2, 1] written in a style similar to noweb [7]. [...] Example: In section 9 the lines “See also section 10.” and “This code is used in section 24.” are given. No such line appears in section 10 as it only extends the replacement code of section 9. (Note that section 10 has in its headline the number 9.) In section 24 the reference to section 9 stands for all of the eight code lines stated in sections 9 and 10. If a section is not used in any other section then it is a root and during the extraction of the code a file is created that has the name of the root. This file collects all the code in the sequence of the referenced sections from the code part. The collection process for all root sections is called tangle. A second process is called weave. It outputs the documentation and the code parts as a TEX document. Example: The following program has only one root that is defined in section 4 with the headline
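The tangle step described in this excerpt (find the root sections that no other section uses, then collect their code by expanding references in order) can be illustrated with a small Python sketch. It is not Wermuth's TEX macro package. The `<<name>>` reference syntax is borrowed from noweb as an assumption, and the helper names `collect`, `roots`, `expand` and `tangle` are hypothetical. Chunks that share a name are concatenated, which mirrors how section 10 extends the code of section 9 in the excerpt.

```python
import re

# Assumption: a reference is a line holding only <<section name>>, noweb style.
REF = re.compile(r"^(\s*)<<(.+)>>\s*$")


def collect(chunks):
    """Merge (name, lines) chunks; a repeated name extends the earlier code."""
    sections = {}
    for name, lines in chunks:
        sections.setdefault(name, []).extend(lines)
    return sections


def roots(sections):
    """A root is a section that no other section references."""
    used = set()
    for lines in sections.values():
        for line in lines:
            m = REF.match(line)
            if m:
                used.add(m.group(2))
    return [name for name in sections if name not in used]


def expand(sections, name, indent=""):
    """Replace each reference by the referenced code, keeping its indentation."""
    out = []
    for line in sections[name]:
        m = REF.match(line)
        if m:
            out.extend(expand(sections, m.group(2), indent + m.group(1)))
        else:
            out.append(indent + line)
    return out


def tangle(chunks):
    """Return {root name: expanded code lines}, one entry per output file."""
    sections = collect(chunks)
    return {root: expand(sections, root) for root in roots(sections)}
```

The sketch assumes references are acyclic and always defined; a cyclic or missing reference would raise an error rather than be reported gracefully. A weave step, which typesets documentation and code together, is outside its scope.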

Exploratory Programming for the Arts and Humanities

Exploratory Programming for the Arts and Humanities Second Edition Nick Montfort The MIT Press Cambridge, Massachusetts London, England © 2021 Nick Montfort The open access edition of this work was made possible by generous funding from the MIT Libraries. This work is subject to a Creative Commons CC-BY-NC-SA license. Subject to such license, all rights are reserved. This book was set in ITC Stone Serif Std and ITC Stone Sans Std by New Best-set Typesetters Ltd. Library of Congress Cataloging-in-Publication Data Names: Montfort, Nick, author. Title: Exploratory programming for the arts and humanities / Nick Montfort. Description: Second edition. | Cambridge, Massachusetts : The MIT Press, [2021] | Includes bibliographical references and index. | Summary: "Exploratory Programming for the Arts and Humanities offers a course on programming without prerequisites. It covers both the essentials of computing and the main areas in which computing applies to the arts and humanities"—Provided by publisher. Identifiers: LCCN 2019059151 | ISBN 9780262044608 (hardcover) Subjects: LCSH: Computer programming. | Digital humanities. Classification: LCC QA76.6 .M664 2021 | DDC 005.1—dc23 LC record available at https://lccn.loc.gov/2019059151 [Contents] [List of Figures] xv [Acknowledgments] xvii [1] Introduction 1 [1.1] 1 [1.2] Exploration versus Exploitation 4 [1.3] A Justification for Learning to Program? 6 [1.4] Creative Computing and Programming as Inquiry 7 [1.5] Programming Breakthroughs

Sage Tutorial (Pdf)

Sage Tutorial, Release 9.4. The Sage Development Team. Aug 24, 2021. CONTENTS 1 Introduction: 1.1 Installation; 1.2 Ways to Use Sage; 1.3 Longterm Goals for Sage. 2 A Guided Tour: 2.1 Assignment, Equality, and Arithmetic; 2.2 Getting Help; 2.3 Functions, Indentation, and Counting; 2.4 Basic Algebra and Calculus; 2.5 Plotting; 2.6 Some Common Issues with Functions; 2.7 Basic Rings; 2.8 Linear Algebra; 2.9 Polynomials; 2.10 Parents, Conversion and Coercion; 2.11 Finite Groups, Abelian Groups; 2.12 Number Theory; 2.13 Some More Advanced Mathematics. 3 The Interactive Shell: 3.1 Your Sage Session; 3.2 Logging Input and Output; 3.3 Paste Ignores Prompts; 3.4 Timing Commands; 3.5 Other IPython

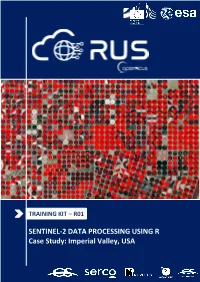

SENTINEL-2 DATA PROCESSING USING R Case Study: Imperial Valley, USA

TRAINING KIT – R01 SENTINEL-2 DATA PROCESSING USING R Case Study: Imperial Valley, USA Research and User Support for Sentinel Core Products The RUS Service is funded by the European Commission, managed by the European Space Agency and operated by CSSI and its partners. Authors would be glad to receive your feedback or suggestions and to know how this material was used. Please, contact us on [email protected] Cover image credits: ESA The following training material has been prepared by Serco Italia S.p.A. within the RUS Copernicus project. Date of publication: November 2020 Version: 1.1 Suggested citation: Serco Italia SPA (2020). Sentinel-2 data processing using R (version 1.1). Retrieved from RUS Lectures at https://rus-copernicus.eu/portal/the-rus-library/learn-by-yourself/ This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. DISCLAIMER While every effort has been made to ensure the accuracy of the information contained in this publication, RUS Copernicus does not warrant its accuracy or will, regardless of its or their negligence, assume liability for any foreseeable or unforeseeable use made of this publication. Consequently, such use is at the recipient’s own risk on the basis that any use by the recipient constitutes agreement to the terms of this disclaimer. The information contained in this publication does not purport to constitute professional advice. Table of Contents 1 Introduction to RUS

Final Report for the Redus Project

FINAL REPORT FOR THE REDUS PROJECT: Reduced Uncertainty in Stock Assessment. Erik Olsen (HI), Sondre Aanes (Norwegian Computing Center), Magne Aldrin (Norwegian Computing Center), Olav Nikolai Breivik (Norwegian Computing Center), Edvin Fuglebakk, Daisuke Goto, Nils Olav Handegard, Cecilie Hansen, Arne Johannes Holmin, Daniel Howell, Espen Johnsen, Natoya Jourdain, Knut Korsbrekke, Ono Kotaro, Håkon Otterå, Holly Ann Perryman, Samuel Subbey, Guldborg Søvik, Ibrahim Umar, Sindre Vatnehol and Jon Helge Vølstad (HI). RAPPORT FRA HAVFORSKNINGEN NR. 2021-16. Title (Norwegian and English): Sluttrapport for REDUS-prosjektet / Final report for the REDUS project. Subtitle: Reduced Uncertainty in Stock Assessment. Report series: Rapport fra havforskningen. Year - No.: 2021-16. Date: 17.03.2021. Distribution: Open. ISSN: 1893-4536. Project no.: 14809. Program: Marine processes and human impact. Research group(s): Benthic resources and processes; Demersal fish; Fishery dynamics; Pelagic fish; Marine mammals; Ecosystem acoustics; Ecosystem processes. Approved by: Research Director(s): Geir Huse. Program leader(s): Frode Vikebø.

Statistical Software

Statistical Software A. Grant Schissler1,2,*, Hung Nguyen1,3, Tin Nguyen1,3, Juli Petereit1,4, Vincent Gardeux5 Keywords: statistical software, open source, Big Data, visualization, parallel computing, machine learning, Bayesian modeling Abstract: This article discusses selected statistical software, aiming to help readers find the right tool for their needs. We categorize software into three classes: Statistical Packages, Analysis Packages with Statistical Libraries, and General Programming Languages with Statistical Libraries. Statistical and analysis packages often provide interactive, easy-to-use workflows while general programming languages are built for speed and optimization. We emphasize each software's defining characteristics and discuss trends in popularity. The concluding sections muse on the future of statistical software including the impact of Big Data and the advantages of open-source languages. This article discusses selected statistical software, aiming to help readers find the right tool for their needs (not provide an exhaustive list). Also, we acknowledge our experiences bias the discussion towards software employed in scholarly work. Throughout, we emphasize the software's capacity to analyze large, complex data sets ("Big Data"). The concluding sections muse on the future of statistical software. To aid in the discussion, we classify software into three groups: (1) Statistical Packages, (2) Analysis Packages with Statistical Libraries, and (3) General Programming Languages with Statistical Libraries. This structure